In this article, we introduce transformers and detail how they underpin notable reasoning models like RoBERTa, SATformer, GPT-f, and more.

Introduction

Natural language reasoning is an application of deductive reasoning that draws a conclusion from given premises and rules stated in natural language. The goal of research on neural network architectures for this task is to determine how to use these premises and rules to draw new conclusions.

In the past, a comparable task would have been performed by systems pre-programmed with knowledge already stored in a formal representation, along with the rules to follow to infer new information. However, the use of formal representations has proven to be a major obstacle for this branch of research (Mark A. Musen, 1988). Now, thanks to the development of transformers and the exceptional performance they have shown on a wide variety of NLP tasks, it is possible to avoid formal representations altogether and have transformers reason directly over natural language. In this article, we highlight some of the most important transformers for natural language reasoning tasks.

RoBERTa

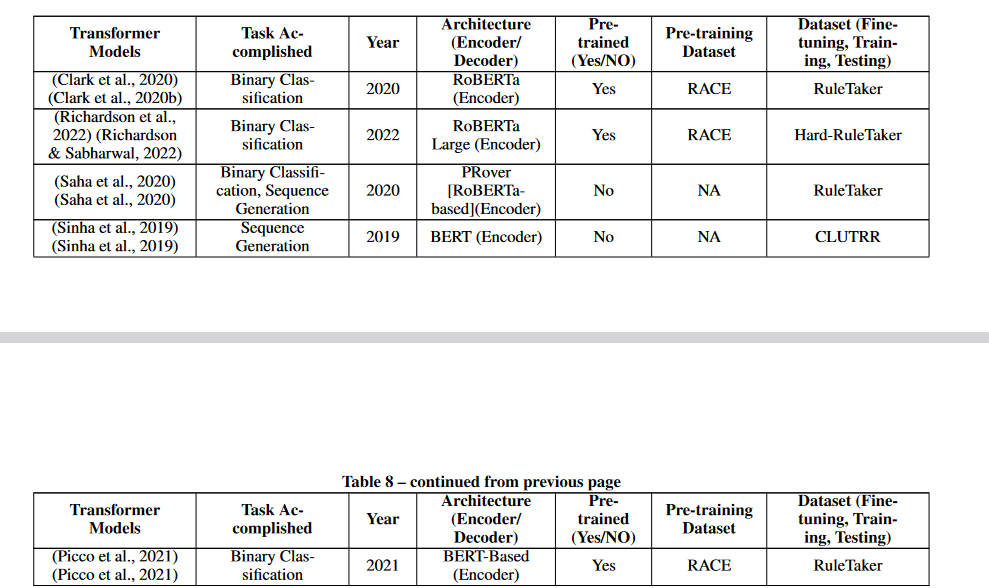

In the study by Clark et al. (2020b), a RoBERTa transformer was given a binary classification task: determine whether a given proposition can be derived from a collection of premises and rules expressed in natural language.

The model was pre-trained on a dataset of questions from standardized high school tests that require reasoning skills. Thanks to this pre-training, the transformer reached a very high accuracy of 98% on the test set. The dataset contained hypotheses that were randomly selected and generated from predefined sets of names and attributes.

The task asked the transformer to determine whether the given claim (the statement) follows from the given premises and rules (the context) (Clark et al., 2020b).
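RoBERTa learns this context-to-label mapping directly from text, but it can help to see how such a label could be computed symbolically. The following is a minimal Python sketch, assuming the rules have already been grounded into propositional atoms such as `big(Bob)` and ignoring negation; the function name `entails` is hypothetical and this is not the dataset generator used by Clark et al.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): if every premise holds, the conclusion holds.
Rule = Tuple[FrozenSet[str], str]


def entails(facts: Set[str], rules: List[Rule], statement: str) -> bool:
    """Return True if `statement` is derivable from `facts` and `rules`.

    Forward chaining: repeatedly fire every rule whose premises are all
    known until nothing new is added (a fixed point). Anything that is
    never derived is treated as False in this sketch.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return statement in known


# Context: "Bob is big. If someone is big then they are strong.
#           If someone is strong then they are kind."
facts = {"big(Bob)"}
rules = [
    (frozenset({"big(Bob)"}), "strong(Bob)"),
    (frozenset({"strong(Bob)"}), "kind(Bob)"),
]
print(entails(facts, rules, "kind(Bob)"))   # True: a two-step derivation
print(entails(facts, rules, "green(Bob)"))  # False: not derivable
```

The number of rule applications needed to reach the statement corresponds to the "depth" of a question, which is why deeper questions are harder for a model that has to learn the chaining implicitly.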

RoBERTa-Large

In the study by Richardson and Sabharwal (2022), the authors addressed a shortcoming in the way Clark et al. (2020b) generated their dataset. They pointed out that the uniform random selection of theories used by Clark et al. (2020b) did not always produce difficult cases.

To get around this problem, they presented an innovative way to generate challenging datasets for algorithmic reasoning. The key element of their technique is to take hard instances of standard propositional SAT formulas and translate them into natural language using a predetermined set of English rule languages. This method produced a more difficult dataset, which is important both for training robust models and for reliable evaluation.
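One classic source of hard random 3-SAT instances is sampling formulas with a clause-to-variable ratio of about 4.26, the region where random formulas shift from mostly satisfiable to mostly unsatisfiable. The Python sketch below assumes that recipe and a made-up English template ("X is red"); the function names and the template are hypothetical and are not the authors' generator or their rule languages.

```python
import random
from typing import List

Clause = List[int]  # DIMACS-style literals: 3 means x3, -3 means NOT x3


def random_3sat(num_vars: int, ratio: float = 4.26, seed: int = 0) -> List[Clause]:
    """Sample a random 3-SAT formula with round(ratio * num_vars) clauses."""
    rng = random.Random(seed)
    num_clauses = round(ratio * num_vars)
    formula = []
    for _ in range(num_clauses):
        variables = rng.sample(range(1, num_vars + 1), 3)  # 3 distinct variables
        # Negate each variable with probability 1/2.
        formula.append([v if rng.random() < 0.5 else -v for v in variables])
    return formula


def verbalize(formula: List[Clause], names: List[str]) -> List[str]:
    """Render each clause as one English sentence using a hypothetical template."""
    sentences = []
    for clause in formula:
        parts = []
        for lit in clause:
            name = names[abs(lit) - 1]
            parts.append(f"{name} is red" if lit > 0 else f"{name} is not red")
        sentences.append("Either " + ", or ".join(parts) + ".")
    return sentences


names = ["Alice", "Bob", "Carol", "Dave", "Erin"]
formula = random_3sat(num_vars=5)
for sentence in verbalize(formula, names)[:3]:
    print(sentence)
```

Because the satisfiability of such a formula depends on global interactions between many clauses, a model cannot rely on shallow patterns that happen to work on uniformly sampled theories.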

To illustrate the effectiveness of their technique, the authors evaluated models trained on the dataset of Clark et al. (2020b) on their newly constructed dataset. The models achieved an accuracy of only 57.7% (T5) and 59.6% (RoBERTa). These results show that models trained on simple datasets may not be able to solve difficult cases.

PRover

In a separate but related paper, Saha et al. (2020) developed a model called PRover. It is an interpretable joint transformer that, in addition to answering, generates a corresponding proof, and these proofs are correct 87% of the time. The problem PRover addresses is the same one studied by Clark et al. (2020b) and Richardson & Sabharwal (2022): determining whether a particular conclusion follows logically from the premises and rules provided.

PRover outputs its proof as a directed graph in which propositions and rules are nodes, and edges indicate which new propositions result from applying rules to earlier propositions. Overall, the approach proposed by Saha et al. (2020) is a promising way to obtain reasoning models that are both interpretable and correct.
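PRover predicts such a graph with a neural model; the minimal Python sketch below builds one symbolically to show what the data structure looks like. It assumes an illustrative encoding (nodes for facts and rule ids, edges from each premise to the rule that fires and from the rule to the fact it derives) and omits negation; the names are hypothetical and this is not PRover's exact node and edge scheme.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical encoding: rule id -> (premise facts, conclusion fact).
Rules = Dict[str, Tuple[Set[str], str]]


def build_proof_graph(facts: Set[str], rules: Rules) -> Dict[str, List[str]]:
    """Forward-chain and record edges premise -> rule -> derived fact."""
    known = set(facts)
    edges: Dict[str, List[str]] = defaultdict(list)
    changed = True
    while changed:
        changed = False
        for rule_id, (premises, conclusion) in rules.items():
            if premises <= known and conclusion not in known:
                for p in sorted(premises):
                    edges[p].append(rule_id)
                edges[rule_id].append(conclusion)
                known.add(conclusion)
                changed = True
    return dict(edges)


def proof_of(goal: str, edges: Dict[str, List[str]]) -> Set[str]:
    """Collect every node from which `goal` is reachable: its proof subgraph."""
    parents: Dict[str, Set[str]] = defaultdict(set)
    for src, dsts in edges.items():
        for dst in dsts:
            parents[dst].add(src)
    proof, stack = {goal}, [goal]
    while stack:
        for parent in parents[stack.pop()]:
            if parent not in proof:
                proof.add(parent)
                stack.append(parent)
    return proof


rules = {
    "r1": ({"big(Bob)"}, "strong(Bob)"),
    "r2": ({"strong(Bob)"}, "kind(Bob)"),
    "r3": ({"round(Bob)"}, "red(Bob)"),
}
edges = build_proof_graph({"big(Bob)", "round(Bob)"}, rules)
print(sorted(proof_of("kind(Bob)", edges)))
# ['big(Bob)', 'kind(Bob)', 'r1', 'r2', 'strong(Bob)']
```

Note that the irrelevant rule `r3` fires but is not part of the proof of `kind(Bob)`; selecting only the relevant nodes and edges is exactly what makes a proof graph more informative than a bare True/False answer.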

BERT-based

As described by Picco et al. (2021), a BERT-based architecture called a "neural unifier" achieved improved generalization on the RuleTaker dataset. The authors wanted a model that, even when trained only on shallow queries, could answer complex ones, so they mimicked certain aspects of backward-chaining reasoning. This should improve the model's ability to handle questions that require more than one reasoning step.

The neural unifier has two components, a fact-checking unit and a unification unit, both of which are standard BERT transformers:

- The fact-checking unit determines whether a depth-0 query (represented by the embedding vector q_0) follows from the knowledge base represented by the embedding vector C.

- The unification unit performs backward chaining: it takes the embedding vector q_n of a depth-n query and the embedding vector C of the knowledge base as input and tries to predict an embedding vector q_0, corresponding to a depth-0 query that the fact-checking unit can then verify.
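The neural unifier approximates in embedding space what classical backward chaining does symbolically: rewrite a deep query into shallow sub-queries until each can be answered by a direct lookup. For reference, here is a minimal Python sketch of that symbolic procedure over ground atoms; the knowledge base and function names are hypothetical, and this is the technique the authors took inspiration from, not the BERT-based model itself.

```python
from typing import Dict, List, Set

# Hypothetical knowledge base over ground atoms.
FACTS: Set[str] = {"big(Bob)", "young(Bob)"}
# Each conclusion maps to alternative premise lists that can establish it.
RULES: Dict[str, List[List[str]]] = {
    "strong(Bob)": [["big(Bob)"]],
    "kind(Bob)": [["strong(Bob)", "young(Bob)"]],
}


def prove(query: str, facts: Set[str], rules: Dict[str, List[List[str]]],
          depth: int = 0, max_depth: int = 10) -> bool:
    """Backward chaining: reduce a query to sub-queries until each is a known fact."""
    if query in facts:        # depth-0 query: answered by a direct lookup
        return True
    if depth >= max_depth:    # stop runaway recursion on cyclic rules
        return False
    for premises in rules.get(query, []):
        # Rule-matching step (the symbolic analogue of unification):
        # replace the query by the premises of a rule that concludes it.
        if all(prove(p, facts, rules, depth + 1, max_depth) for p in premises):
            return True
    return False


print(prove("kind(Bob)", FACTS, RULES))  # True: kind <- strong, young; strong <- big
print(prove("red(Bob)", FACTS, RULES))   # False: no fact or rule concludes it
```

In this analogy, the `query in facts` check plays the role of the fact-checking unit, and the rule-matching loop plays the role of the unification unit.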

BERT

In contrast to the work above, Sinha et al. (2019) created a dataset called CLUTRR in which the rules are not provided in the input. Instead, the BERT transformer model must extract the relations between entities and infer the rules that govern them. For example, given a knowledge base containing statements such as "Alice is Bob's mother" and "Jim is Alice's father", the model can infer that "Jim is Bob's grandfather".
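Writing the example out explicitly shows what "inferring a rule" means here: the chain Jim -father-> Alice -mother-> Bob composes into the relation grandfather. In the minimal Python sketch below, the composition table is written by hand as an assumption for illustration; in CLUTRR this is precisely the knowledge the model has to induce from data rather than be given. The function name `infer` is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# A statement "X is the <rel> of Y" is stored as (X, rel, Y).
Triple = Tuple[str, str, str]

# Hypothetical composition table:
# (rel of X to Y, rel of Y to Z) -> rel of X to Z.
COMPOSE: Dict[Tuple[str, str], str] = {
    ("father", "mother"): "grandfather",
    ("father", "father"): "grandfather",
    ("mother", "mother"): "grandmother",
    ("mother", "father"): "grandmother",
}


def infer(facts: List[Triple], x: str, z: str) -> Optional[str]:
    """Find a two-hop path x -> y -> z and compose its relations, if possible."""
    for a, r1, y in facts:
        if a != x:
            continue
        for b, r2, c in facts:
            if b == y and c == z and (r1, r2) in COMPOSE:
                return COMPOSE[(r1, r2)]
    return None


facts = [("Alice", "mother", "Bob"), ("Jim", "father", "Alice")]
print(infer(facts, "Jim", "Bob"))  # grandfather
```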

Automated symbolic reasoning

Automated symbolic reasoning is the branch of computer science that uses computers to solve logical problems such as SAT solving and theorem proving. Heuristic search methods have long been the standard approach to such problems. However, recent research has explored improving the effectiveness and efficiency of these approaches with learning-based techniques.

One approach keeps a classical algorithm and uses learning to help it choose the most effective among several heuristics. Another replaces the algorithm with an end-to-end learning-based solution. Both approaches have produced encouraging results and point the way for future developments in automated symbolic reasoning (Kurin et al., 2020; Selsam et al., 2019). Some transformer-based models have performed very well on automated symbolic reasoning tasks.
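The classical heuristic search behind many SAT solvers is DPLL-style backtracking. As a minimal Python sketch, the solver below combines unit propagation with a branching step whose literal-selection heuristic is passed in as a function; that choice is the kind of decision point learning-based methods try to improve. The two heuristics shown are simple illustrative choices, not ones taken from the cited papers, and the clause encoding (positive integer for a variable, negative for its negation) is an assumption.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional

Clause = List[int]          # 2 means x2, -2 means NOT x2
Assignment = Dict[int, bool]


def simplify(clauses: List[Clause], lit: int) -> List[Clause]:
    """Make `lit` true: drop satisfied clauses and delete the opposite literal."""
    return [[l for l in c if l != -lit] for c in clauses if lit not in c]


def dpll(clauses: List[Clause], assignment: Assignment,
         choose: Callable[[List[Clause]], int]) -> Optional[Assignment]:
    """Return a satisfying (possibly partial) assignment, or None if UNSAT."""
    while True:  # unit propagation
        if any(len(c) == 0 for c in clauses):
            return None  # an empty clause can no longer be satisfied
        units = [c[0] for c in clauses if len(c) == 1]
        if not units:
            break
        lit = units[0]
        assignment = {**assignment, abs(lit): lit > 0}
        clauses = simplify(clauses, lit)
    if not clauses:
        return assignment  # every clause is satisfied
    lit = choose(clauses)  # the heuristic decision point
    for branch in (lit, -lit):
        result = dpll(simplify(clauses, branch),
                      {**assignment, abs(branch): branch > 0}, choose)
        if result is not None:
            return result
    return None  # both branches failed: backtrack


def first_literal(clauses: List[Clause]) -> int:
    return clauses[0][0]


def most_frequent(clauses: List[Clause]) -> int:
    return Counter(l for c in clauses for l in c).most_common(1)[0][0]


print(dpll([[1, 2], [-1, 3]], {}, first_literal))  # {1: True, 3: True}
print(dpll([[1], [-1]], {}, most_frequent))        # None
```

Swapping `first_literal` for `most_frequent` does not change the answer, only how much of the search tree is explored, which is why better heuristics translate into faster solvers.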

SATformer

To solve the SAT problem for boolean formulas, Shi et al. introduced SATformer in 2022 (Shi et al., 2022b), a hierarchical transformer design that offers a comprehensive, learning-based solution to the problem. It is common practice to convert a boolean formula into conjunctive normal form (CNF) before feeding it into a SAT solver. A CNF formula is a conjunction of clauses, and each clause is a disjunction of literals, where a literal is a boolean variable or its negation. For example, with boolean variables A, B, and C, the CNF formula (A OR B) AND (NOT A OR C) consists of the clauses (A OR B) and (NOT A OR C), each composed entirely of literals.
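To make the CNF structure concrete, the Python sketch below encodes the example formula as lists of integer literals (an assumed DIMACS-style convention: A=1, B=2, C=3, and a minus sign for negation) and enumerates its satisfying assignments by brute force. The function names are illustrative, and brute force is only viable for a handful of variables.

```python
from itertools import product
from typing import Dict, List

Clause = List[int]  # 1 means A, -1 means NOT A, and so on


def evaluate(cnf: List[Clause], assignment: Dict[int, bool]) -> bool:
    """A CNF formula is true iff every clause contains at least one true literal."""
    return all(any(assignment[abs(l)] == (l > 0) for l in clause) for clause in cnf)


def satisfying_assignments(cnf: List[Clause], num_vars: int) -> List[Dict[int, bool]]:
    """Enumerate all 2**num_vars assignments and keep the satisfying ones."""
    models = []
    for values in product([False, True], repeat=num_vars):
        assignment = {i + 1: v for i, v in enumerate(values)}
        if evaluate(cnf, assignment):
            models.append(assignment)
    return models


# (A OR B) AND (NOT A OR C)
cnf = [[1, 2], [-1, 3]]
print(len(satisfying_assignments(cnf, 3)))  # 4
```

The count of 4 follows directly from the clauses: if A is true, C must be true and B is free (2 assignments); if A is false, B must be true and C is free (2 more).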

The authors use a graph neural network (GNN) to obtain embeddings of the clauses in the CNF formula. SATformer then operates on these clause embeddings to capture the interdependencies among clauses: the self-attention weights are trained to be high when groups of clauses that could together make the formula unsatisfiable are attended together, and low otherwise. Through this approach, SATformer efficiently learns correlations between clauses, which improves its SAT prediction capabilities (Shi et al., 2022b).
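As a sketch of the underlying mechanism only, the Python below applies single-head scaled dot-product self-attention to a handful of clause embeddings. Several assumptions are loud here: random vectors stand in for the GNN's clause embeddings, and the learned query/key/value projections, multiple heads, and SATformer's hierarchical grouping are all omitted. It shows how a weight between every pair of clauses is computed, not how SATformer trains those weights to single out unsatisfiable clause groups.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings: List[Vector]) -> Tuple[List[Vector], List[List[float]]]:
    """Single-head scaled dot-product self-attention without learned projections.

    Returns (outputs, weights); weights[i][j] is how strongly clause i attends to clause j.
    """
    d = len(embeddings[0])
    n = len(embeddings)
    outputs, weights = [], []
    for q in embeddings:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in embeddings]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted mix of all clause embeddings.
        outputs.append([sum(w[j] * embeddings[j][t] for j in range(n)) for t in range(d)])
    return outputs, weights


rng = random.Random(0)
# Hypothetical stand-in for GNN output: 4 clauses, 8 dimensions each.
clause_embeddings = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(4)]
outputs, weights = self_attention(clause_embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1, so a clause's updated representation is dominated by the clauses it attends to most; in SATformer, training shapes these rows so that clauses that jointly cause unsatisfiability attend to one another.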

TRSAT

In 2021, Shi et al. studied MaxSAT, an optimization variant of the boolean SAT problem, and presented a transformer model called TRSAT, which functions as an end-to-end learning-based SAT solver (Shi et al., 2021). A related problem is the satisfiability of linear temporal logic formulas (Pnueli, 1977), which is analogous to boolean SAT: the objective is to find a satisfying symbolic trace for a given linear temporal formula.
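Where SAT asks whether all clauses can be satisfied at once, MaxSAT asks for an assignment that satisfies as many clauses as possible. The brute-force Python sketch below makes that objective concrete on a tiny formula; it is exponential in the number of variables, the function names are illustrative, and it says nothing about how TRSAT itself works.

```python
from itertools import product
from typing import Dict, List, Tuple

Clause = List[int]  # 1 means x1, -1 means NOT x1


def count_satisfied(cnf: List[Clause], assignment: Dict[int, bool]) -> int:
    """Number of clauses with at least one true literal."""
    return sum(any(assignment[abs(l)] == (l > 0) for l in clause) for clause in cnf)


def max_sat(cnf: List[Clause], num_vars: int) -> Tuple[int, Dict[int, bool]]:
    """Exhaustively find an assignment maximizing the number of satisfied clauses."""
    best_count, best_assignment = -1, {}
    for values in product([False, True], repeat=num_vars):
        assignment = {i + 1: v for i, v in enumerate(values)}
        count = count_satisfied(cnf, assignment)
        if count > best_count:
            best_count, best_assignment = count, assignment
    return best_count, best_assignment


# x1 AND NOT x1 AND (x1 OR x2) AND NOT x2: unsatisfiable as a whole
cnf = [[1], [-1], [1, 2], [-2]]
best, model = max_sat(cnf, 2)
print(best, model)  # 3 {1: True, 2: False}
```

Since `[1]` and `[-1]` conflict, at most 3 of the 4 clauses can hold, which the search confirms.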

Transformer

Hahn et al. (2021) tackled harder problems than the binary classification tasks studied previously, addressing both the boolean SAT problem and the temporal satisfiability problem. The objective here is not merely to classify whether a given formula is satisfiable, but to construct a satisfying assignment (or, for temporal formulas, a satisfying trace). To build their datasets, the authors used classical solvers to generate linear temporal formulas with satisfying symbolic traces and boolean formulas with corresponding satisfying partial assignments. They used a standard transformer architecture for this task. Some of the satisfying traces generated by the transformer did not appear in the training data, demonstrating that it had learned to solve the problem rather than merely copy the behavior of the classical solvers.
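Because a generated assignment may legitimately differ from the one a classical solver produced, a natural way to evaluate it is to check it against the formula rather than compare it with the training target. Below is a minimal checker for the boolean case, as a Python sketch assuming integer-literal clauses (positive for a variable, negative for its negation) and a dict for the partial assignment; the name `satisfies_partial` is hypothetical, and checking temporal traces would require a temporal-logic evaluator that is not shown.

```python
from typing import Dict, List

Clause = List[int]  # 1 means A, -1 means NOT A


def satisfies_partial(cnf: List[Clause], partial: Dict[int, bool]) -> bool:
    """True if every clause contains a literal made true by the partial assignment.

    Unassigned variables stay free, so every completion of a satisfying
    partial assignment also satisfies the formula.
    """
    return all(
        any(abs(l) in partial and partial[abs(l)] == (l > 0) for l in clause)
        for clause in cnf
    )


# (A OR B) AND (NOT A OR C) with A=1, B=2, C=3
cnf = [[1, 2], [-1, 3]]
print(satisfies_partial(cnf, {1: False, 2: True}))  # True: C can be anything
print(satisfies_partial(cnf, {1: True}))            # False: (NOT A OR C) is undecided
```

A model's output can therefore be counted as correct whenever this check passes, even if it does not match the solver-generated assignment token for token.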

GPT-f

Polu and Sutskever (2020) introduced GPT-f, an automated prover and proof assistant that, like GPT-2 and GPT-3, is built on a decoder-only transformer architecture. GPT-f was trained on the set.mm dataset, which contains over 38,000 proofs. The authors' largest model has 774 million trainable parameters across 36 layers. Several of the new, shorter proofs it found were accepted into the Metamath library itself, a notable case of a deep learning system contributing to a formal mathematics community.

Conclusion

In this article, we have discussed some powerful transformers that can be used for natural language reasoning. There has been encouraging research on using transformers for theorem proving and propositional reasoning, but their performance on natural language reasoning tasks is still far from ideal. Further research is needed to improve the reasoning abilities of transformers and to find new, challenging tasks for them. Despite these caveats, transformer-based models have undoubtedly advanced the state of the art in natural language processing and opened up exciting new avenues for research in language understanding and reasoning.
