Introduction

Self-supervised approaches have accomplished many various kinds of NLP duties.

Denoising autoencoders skilled to get well textual content when a random subset of phrases has been masked off has confirmed to be the simplest technique.

Beneficial properties have been confirmed in current work by enhancing the masking distribution, masking prediction order, and context for changing masks tokens.

Though promising, these approaches are sometimes restricted in scope to only a few distinct duties (akin to span prediction, span creation, and so forth.).

What’s the BART Transformer Mannequin in NLP?

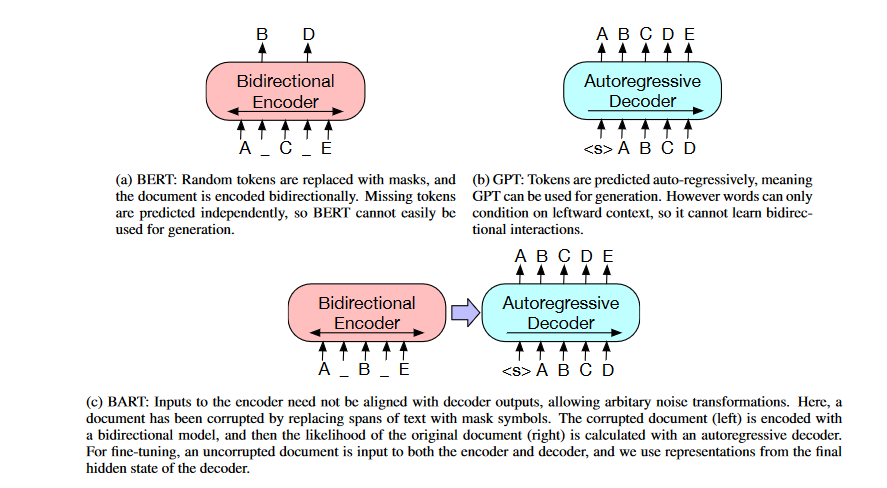

This paper introduces BART, a pre-training technique that mixes Bidirectional and Auto-Regressive Transformers. BART is a denoising autoencoder that makes use of a sequence-to-sequence paradigm, making it helpful for numerous functions. Pretraining consists of two phases: (1) textual content is corrupted utilizing an arbitrary noising perform, and (2) a sequence-to-sequence mannequin is discovered to reconstruct the unique textual content.

BART’s Transformer-based neural machine translation structure may be seen as a generalization of BERT (as a result of bidirectional encoder), GPT (With the left-to-right decoder), and lots of different up to date pre-training approaches.

Along with its power in comprehension duties, BART’s effectiveness will increase with fine-tuning for textual content era. It generates new state-of-the-art outcomes on numerous abstractive dialog, query answering, and summarization duties, matching the efficiency of RoBERTa with comparable coaching assets on GLUE and SQuAD.

Structure

Besides altering the ReLU activation features to GeLUs and initializing parameters from (0, 0.02), BART follows the overall sequence-to-sequence Transformer design (Vaswani et al., 2017). There are six layers within the encoder and decoder for the bottom mannequin and twelve layers in every for the massive mannequin.

Much like the structure utilized in BERT, the 2 most important variations are that (1) in BERT, every layer of the decoder moreover performs cross-attention over the ultimate hidden layer of the encoder (as within the transformer sequence-to-sequence mannequin); and (2) in BERT a further feed-forward community is used earlier than phrase prediction, whereas in BART there is not.

Pre-training BART

To coach BART, we first corrupt paperwork after which optimize a reconstruction loss, which is the cross-entropy between the decoder’s output and the unique doc. In distinction to standard denoising autoencoders, BART could also be used for any kind of doc corruption.

The worst-case situation for BART is when all supply data is misplaced, which turns into analogous to a language mannequin. The researchers check out a number of new and outdated transformations, however additionally they consider there’s a lot room for creating much more distinctive options.

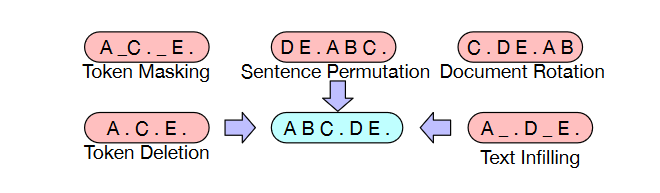

Within the following, we are going to define the transformations they carried out and supply some examples. Beneath is a abstract of the transformations they used, and an illustration of a number of the outcomes is supplied within the determine.

- Token Masking: Following BERT, random tokens are sampled and changed with MASK parts.

- Token Deletion: Random tokens are deleted from the enter. In distinction to token masking, the mannequin should predict which positions are lacking inputs.

- Textual content Infilling: A number of textual content spans are sampled, with span lengths drawn from a Poisson distribution (λ = 3). Every span is changed with a single MASK token. Textual content infilling teaches the mannequin to foretell what number of tokens are lacking from a span.

- Sentence Permutation: A doc is split into sentences based mostly on full stops, and these sentences are shuffled in random order.

- Doc Rotation: A token is chosen uniformly at random, and the doc is rotated to start with that token. This activity trains the mannequin to establish the beginning of the doc.

High-quality-tuning BART

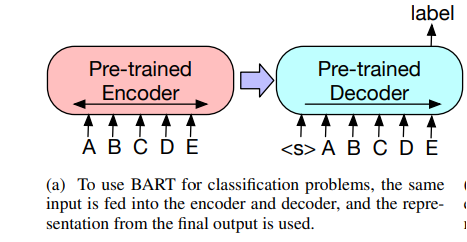

A number of potential makes use of for the representations BART generates in subsequent processing steps exist:

- Sequence Classification Duties: For sequence classification issues, the identical enter is equipped into the encoder and decoder, and the ultimate hidden states of the final decoder token is fed into the brand new multi-class linear classifier.

- Token Classification Duties: Each the encoder and decoder take the whole doc as enter, and from the decoder’s prime hidden state, a illustration of every phrase is derived. The token’s classification depends on its illustration.

- Sequence Technology Duties: For sequence-generating duties like answering summary questions and summarizing textual content, BART’s autoregressive decoder permits for direct fine-tuning. Each of those duties are associated to the pre-training aim of denoising since they contain the copying and subsequent manipulation of enter information. Right here, the enter sequence serves as enter to the encoder, whereas the decoder generates outputs in an autoregressive method.

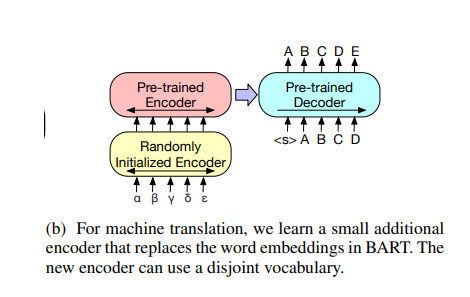

- Machine Translation: The researchers examine the feasibility of utilizing BART to boost machine translation decoders for translating into English. Utilizing pre-trained encoders has been confirmed to enhance fashions, whereas the advantages of incorporating pre-trained language fashions into decoders have been extra restricted. Utilizing a set of encoder parameters discovered from bitext, they show that the whole BART mannequin can be utilized as a single pretrained decoder for machine translation. Extra particularly, they swap out the embedding layer of BART’s encoder with a model new encoder utilizing random initialization. When the mannequin is skilled from begin to finish, the brand new encoder is skilled to map overseas phrases into an enter BART can then translate into English. In each phases of coaching, the cross-entropy loss is backpropagated from the BART mannequin’s output to coach the supply encoder. Within the first stage, they repair most of BART’s parameters and solely replace the randomly initialized supply encoder, the BART positional embeddings, and the self-attention enter projection matrix of BART’s encoder first layer. Second, they carry out a restricted variety of coaching iterations on all mannequin parameters.

BART Mannequin for Textual content Summarization

It takes a lot time for a researcher or journalist to sift by all of the long-form data on the web and discover what they want. It can save you time and vitality by skimming the highlights of prolonged literature utilizing a abstract or paraphrase synopsis.

The NLP activity of summarizing texts could also be automated with the assistance of transformer fashions. Extractive and abstractive methods exist to attain this aim. Summarizing a doc extractively includes discovering essentially the most important statements within the textual content and writing them down. One could classify this as a sort of knowledge retrieval. Tougher than literal summarizing is summary summarization, which seeks to understand the entire materials and supply paraphrased textual content to sum up the important thing factors. The second kind of abstract is carried out by transformer fashions akin to BART.

HuggingFace offers us fast and easy accessibility to 1000’s of pre-trained and fine-tuned weights for Transformer fashions, together with BART. You possibly can select a tailor-made BART mannequin for the textual content summarization project from the HuggingFace mannequin explorer web site. Every submitted mannequin features a detailed description of its configuration and coaching. The beginner-friendly bart-large-cnn mannequin deserves a glance, so let’s take a look at it. Both use the HuggingFace Set up web page or run pip set up transformers to get began. Subsequent, we’ll observe these three simple steps to create our abstract:

from transformers import pipeline

summarizer = pipeline("summarization", mannequin="fb/bart-large-cnn")Transformers mannequin pipeline must be loaded first. Module within the pipeline is outlined by naming the duty and the mannequin. The time period “summarization” is used, and the mannequin is known as “fb/bart-large-xsum.” If we need to try one thing completely different than the usual information dataset, we will use the Excessive Abstract (XSum) dataset. The mannequin was skilled to generate one-sentence summaries completely.

The final step is developing an enter sequence and placing it by its paces utilizing the summarizer() pipeline. By way of tokens, the abstract size will also be adjusted utilizing the perform’s non-compulsory max_length and min_length arguments.

from transformers import pipeline

summarizer = pipeline("summarization", mannequin="fb/bart-large-cnn")

ARTICLE = """ New York (CNN)When Liana Barrientos was 23 years outdated, she bought married in Westchester County, New York.

A 12 months later, she bought married once more in Westchester County, however to a unique man and with out divorcing her first husband.

Solely 18 days after that marriage, she bought hitched but once more. Then, Barrientos declared "I do" 5 extra occasions, typically solely inside two weeks of one another.

In 2010, she married as soon as extra, this time within the Bronx. In an utility for a wedding license, she acknowledged it was her "first and solely" marriage.

Barrientos, now 39, is going through two prison counts of "providing a false instrument for submitting within the first diploma," referring to her false statements on the

2010 marriage license utility, in accordance with court docket paperwork.

Prosecutors mentioned the marriages have been a part of an immigration rip-off.

On Friday, she pleaded not responsible at State Supreme Court docket within the Bronx, in accordance with her lawyer, Christopher Wright, who declined to remark additional.

After leaving court docket, Barrientos was arrested and charged with theft of service and prison trespass for allegedly sneaking into the New York subway by an emergency exit, mentioned Detective

Annette Markowski, a police spokeswoman. In whole, Barrientos has been married 10 occasions, with 9 of her marriages occurring between 1999 and 2002.

All occurred both in Westchester County, Lengthy Island, New Jersey or the Bronx. She is believed to nonetheless be married to 4 males, and at one time, she was married to eight males directly, prosecutors say.

Prosecutors mentioned the immigration rip-off concerned a few of her husbands, who filed for everlasting residence standing shortly after the marriages.

Any divorces occurred solely after such filings have been accredited. It was unclear whether or not any of the boys might be prosecuted.

The case was referred to the Bronx District Lawyer's Workplace by Immigration and Customs Enforcement and the Division of Homeland Safety's

Investigation Division. Seven of the boys are from so-called "red-flagged" international locations, together with Egypt, Turkey, Georgia, Pakistan and Mali.

Her eighth husband, Rashid Rajput, was deported in 2006 to his native Pakistan after an investigation by the Joint Terrorism Job Drive.

If convicted, Barrientos faces as much as 4 years in jail. Her subsequent court docket look is scheduled for Could 18.

"""

print(summarizer(ARTICLE, max_length=130, min_length=30, do_sample=False))Output:

[{'summary_text': 'Liana Barrientos, 39, is charged with two counts of "offering a false instrument for filing in the first degree" In total, she has been married 10 times, with nine of her marriages occurring between 1999 and 2002. She is believed to still be married to four men.'}]An alternative choice is to make use of BartTokenizer to generate tokens from textual content sequences and BartForConditionalGeneration for summarizing.

# Importing the mannequin

from transformers import BartForConditionalGeneration, BartTokenizer, BartConfigAs a pre-trained mannequin, ” bart-large-cnn” is optimized for the abstract job.

The from_pretrained() perform is used to load the mannequin, as seen beneath.

# Tokenizer and mannequin loading for bart-large-cnn

tokenizer=BartTokenizer.from_pretrained('fb/bart-large-cnn')

mannequin=BartForConditionalGeneration.from_pretrained('fb/bart-large-cnn')Assume it’s important to summarize the identical textual content as within the instance above. You can also make benefit of the tokenizer’s batch_encode_plus() function for this objective. When known as, this technique produces a dictionary that shops the encoded sequence or sequence pair and some other data supplied.

How can we limit the shortest potential sequence that may be returned?

In batch_encode_plus(), set the worth of the max_length parameter. To get the ids of the abstract output, we feed the input_ids into the mannequin.generate() perform.

# Transmitting the encoded inputs to the mannequin.generate() perform

inputs = tokenizer.batch_encode_plus([ARTICLE],return_tensors="pt")

summary_ids = mannequin.generate(inputs['input_ids'], num_beams=4, max_length=150, early_stopping=True)The abstract of the unique textual content has been generated as a sequence of ids by the mannequin.generate() technique. The perform mannequin.generate() has many parameters, amongst which:

- input_ids: The sequence used as a immediate for the era.

- max_length: The max size of the sequence to be generated. Between min_length and infinity. Default to twenty.

- min_length: The min size of the sequence to be generated. Between 0 and infinity. Default to 0.

- num_beams: Variety of beams for beam search. Should be between 1 and infinity. 1 means no beam search. Default to 1.

- early_stopping: if set to True beam search is stopped when not less than num_beams sentences completed per batch.

The decode() perform can be utilized to rework the ids sequence into plain textual content.

# Decoding and printing the abstract

abstract = tokenizer.decode(summary_ids[0], skip_special_tokens=True)

print(abstract)The decode() convert a listing of lists of token ids into a listing of strings. Its accepts a number of parameters amongst which we are going to point out two of them:

- token_ids: Listing of tokenized enter ids.

- skip_special_tokens : Whether or not or to not take away particular tokens within the decoding.

Consequently, we get this:

Liana Barrientos, 39, is charged with two counts of providing a false instrument for submitting within the first diploma. In whole, she has been married 10 occasions, with 9 of her marriages occurring between 1999 and 2002. At one time, she was married to eight males directly, prosecutors say.Summarizing Paperwork with BART utilizing ktrain

ktrain is a Python package deal that reduces the quantity of code required to implement machine studying. Wrapping TensorFlow and different libraries, it goals to make cutting-edge ML fashions accessible to non-experts whereas satisfying the wants of specialists within the subject. With ktrain’s streamlined interface, you’ll be able to deal with all kinds of issues with as little as three or 4 “instructions” or strains of code, no matter whether or not the info being labored with is textual, visible, graphical, or tabular.

Utilizing a pretrained BART mannequin from the transformers library, ktrain can summarize textual content. First, we’ll create TransformerSummarizer occasion to carry out the precise summarizing. (Please be aware that the set up of PyTorch is important to make use of this perform.)

from ktrain.textual content.summarization import TransformerSummarizer

ts = TransformerSummarizer()Let’s go forward and write up an article:

article = """ Saturn orbiter and Titan environment probe. Cassini is a joint

NASA/ESA mission designed to perform an exploration of the Saturnian

system with its Cassini Saturn Orbiter and Huygens Titan Probe. Cassini

is scheduled for launch aboard a Titan IV/Centaur in October of 1997.

After gravity assists of Venus, Earth and Jupiter in a VVEJGA

trajectory, the spacecraft will arrive at Saturn in June of 2004. Upon

arrival, the Cassini spacecraft performs a number of maneuvers to attain an

orbit round Saturn. Close to the tip of this preliminary orbit, the Huygens

Probe separates from the Orbiter and descends by the environment of

Titan. The Orbiter relays the Probe information to Earth for about 3 hours

whereas the Probe enters and traverses the cloudy environment to the

floor. After the completion of the Probe mission, the Orbiter

continues touring the Saturnian system for 3 and a half years. Titan

synchronous orbit trajectories will permit about 35 flybys of Titan and

focused flybys of Iapetus, Dione and Enceladus. The targets of the

mission are threefold: conduct detailed research of Saturn's environment,

rings and magnetosphere; conduct close-up research of Saturn's

satellites, and characterize Titan's environment and floor."""We will now summarize this text by utilizing TransformerSummarizer occasion:

ts.summarize(article)Conclusion

Earlier than diving into the BART structure and coaching information, this text outlined the problem BART is attempting to reply and the methodology that results in its excellent efficiency. We additionally checked out a demo inference instance utilizing HuggingFace, ktrain and BART’s Python implementation. This overview of concept and code will provide you with an incredible headstart by permitting you to construct a robust Transformer-based seq2seq mannequin in Python.

References

https://huggingface.co/transformers/v2.11.0/model_doc/bart.html

https://arxiv.org/abs/1910.13461

https://www.projectpro.io/article/transformers-bart-model-explained/553

https://github.com/amaiya/ktrain

https://www.machinelearningplus.com/nlp/text-summarization-approaches-nlp-example/