
Machine Learning (ML) is the new buzzword of the 21st century and is the foundation of A.I. and computer intelligence. ML uses predictive models that learn patterns from data to infer future trends or outcomes. Deep Learning is a sub-domain of ML, where the model is inspired by the neural network of the human brain. These models are capable of understanding the complex relationships between the inputs and the outputs. Deep Learning is an emerging technique and has been widely applied in the fields of Natural Language Processing, Transfer Learning, Computer Vision, and many more.

In this article, we will examine the Convolutional Neural Network (CNN), and dive deep to understand the concept of pooling to show why it's used in CNNs. We have also included a code demo that creates a CNN to classify objects from the CIFAR-10 dataset. In the concluding section of the article, we will explore some emerging trends in pooling layers aimed at addressing the drawbacks associated with traditional pooling methods.

Introduction to CNNs

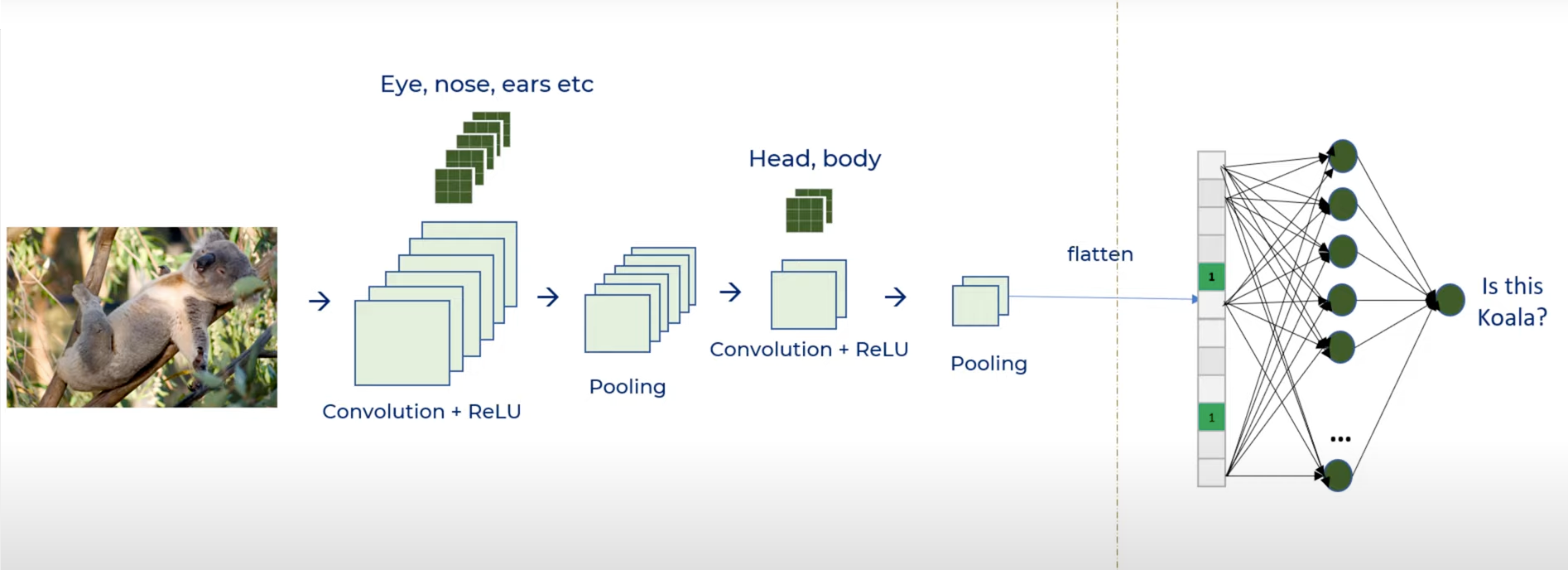

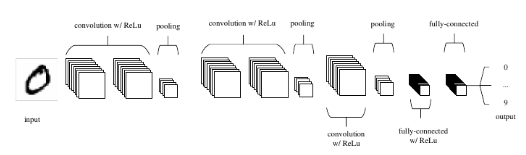

A Convolutional Neural Network (CNN) is a specialized type of Deep Neural Network (DNN) comprising multiple convolution layers, each followed by an activation function and a pooling layer. This type of DNN is mostly used in object detection, classification, or segmentation. A CNN is designed to analyze and classify input images into various predefined classes.

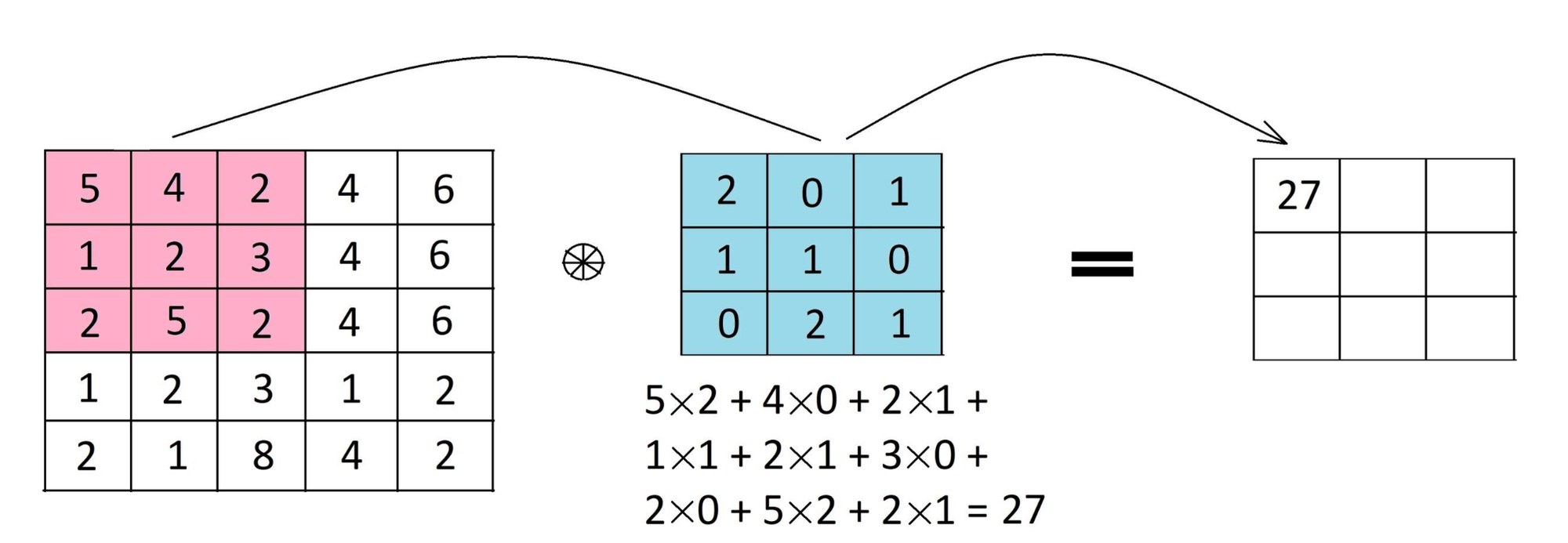

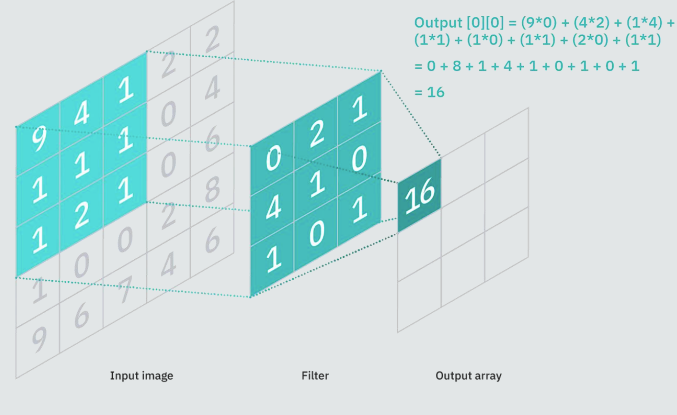

The image undergoes a sequential process involving different layers, including convolutional layers, pooling layers, and fully connected layers. The convolution layer uses specific filters (kernels) to extract features from an image. A filter, in simpler terms, is a feature detector. Hence, specific filters are used to detect specific features in an image. The filter is applied to the input data or image by sliding it over the entire input. At each position, element-wise multiplication is performed, and the results are summed up to produce a single value for that position. The filter then moves by one stride and the same operation is repeated until the entire image is covered. The output of this operation is known as a feature map.

Feature maps are responsible for capturing specific patterns depending on the filter used. As the filter strides across the input, it detects different local patterns, capturing spatial information.

This operation is known as the convolution operation in a CNN. Convolutional layers are crucial in image-related tasks because they allow the network to learn its feature detectors automatically from the data.
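The sliding-window computation described above can be sketched in a few lines of plain Python. This is a minimal illustration, not how a deep learning framework implements it: the function name `conv2d`, the toy 4×4 image, and the 2×2 edge-detecting kernel are all assumptions chosen for readability, and no padding is applied.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (lists of lists) and return the feature map."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = (len(image) - k_h) // stride + 1
    out_w = (len(image[0]) - k_w) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Element-wise multiply the window by the kernel, then sum to one value.
            total = sum(
                image[top + di][left + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy input: the right half of the image is bright (1), the left half dark (0).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Toy filter that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel, stride=1)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]: the edge is detected in the middle column
```

The feature map is large only where the window straddles the edge, which is the sense in which a filter acts as a feature detector.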

To understand the convolution operation in detail, feel free to check out some of our other tutorials, like this one or this one, on the Paperspace Blog.

The pooling layer is usually added after the convolution layer. An effective pooling method is expected to selectively extract relevant information while discarding irrelevant details.

Role of Pooling in Deep Learning

Pooling is a technique used in Convolutional Neural Networks (CNNs) to downsample the spatial dimensions of the input feature maps, reducing the amount of computation and the number of parameters in the network while retaining important information. Pooling is typically applied after convolutional layers and before fully connected layers in a CNN. Pooling layers are often used to learn invariant features. In simpler terms, the pooling layer takes in the output from the convolution layer, retains the important information of the input image, and discards the information that is unnecessary. This reduces the dimensionality of the feature map.

Pooling leads to a significant reduction in the spatial dimensions of the input, serving two main objectives. Firstly, it reduces the number of parameters or weights in the layers that follow, thereby lowering computational costs. Secondly, it helps control overfitting in the network because there are fewer parameters. Another advantage of pooling is that it makes the model more robust to object positions in the image, as pooling makes the model tolerant towards small variations or image distortions.
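The tolerance to small shifts mentioned above can be illustrated with a short sketch. It assumes 2×2 max pooling with a stride of 2 (covered later in the article); the helper `max_pool` and the two toy feature maps are hypothetical, made up for this illustration.

```python
def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window."""
    pooled = []
    for top in range(0, len(feature_map) - size + 1, stride):
        row = []
        for left in range(0, len(feature_map[0]) - size + 1, stride):
            window = [
                feature_map[top + di][left + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# A single strong activation in the top-left corner.
original = [
    [9, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
# The same activation moved one pixel down and one pixel right.
shifted = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

pooled_original = max_pool(original)
pooled_shifted = max_pool(shifted)
print(pooled_original == pooled_shifted)  # True: both are [[9, 0], [0, 0]]
```

Note the limit of this tolerance: the outputs match only because the shifted activation stays inside the same pooling window. A larger shift would move it into a neighbouring window and change the output.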

A few of the most common pooling methods in wide use are Average Pooling, Max Pooling, Min Pooling, Mixed Pooling, Global Pooling, and Lp Pooling; some of the novel pooling methods include Multi-scale order-less pooling (MOP), Super-pixel Pooling, and Compact Bilinear Pooling. The image below shows how a CNN works for detecting an object in an image.

How does Pooling work?

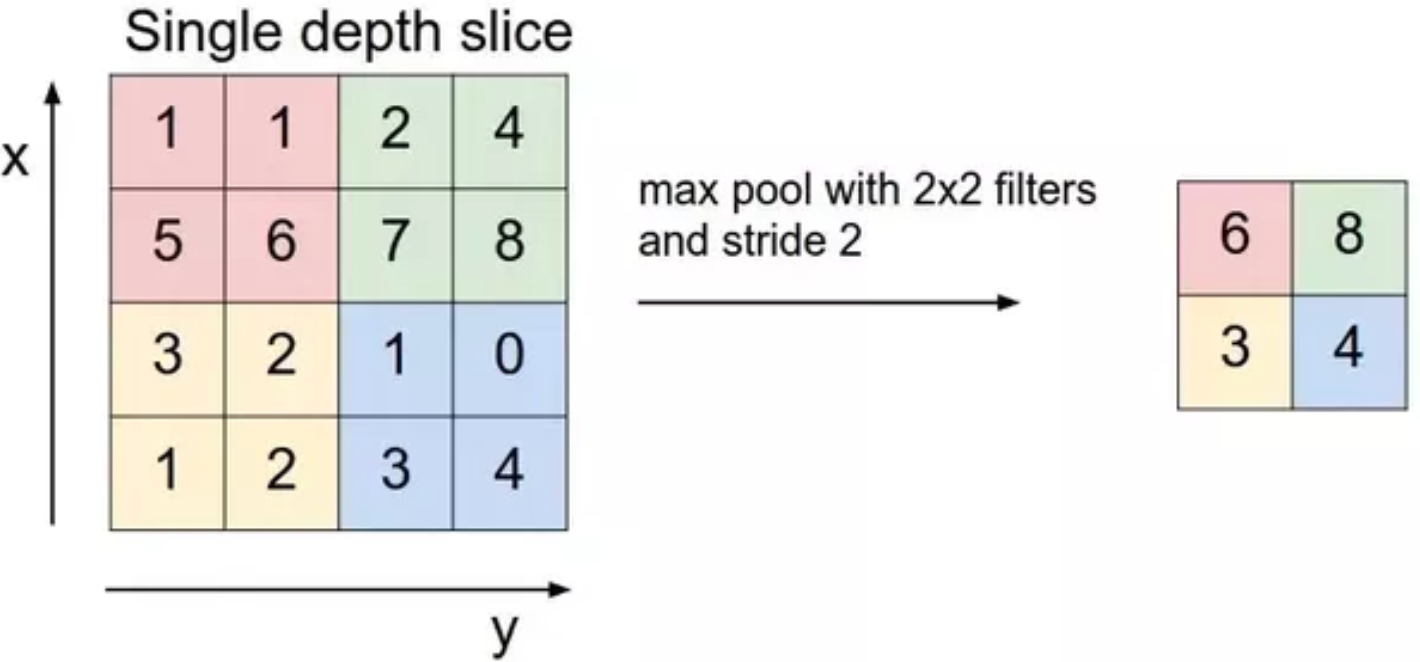

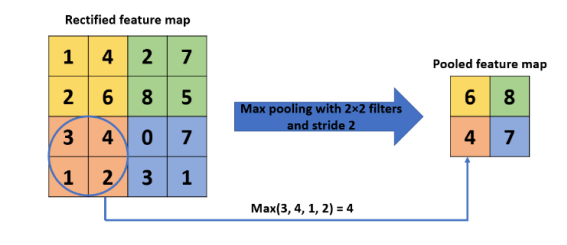

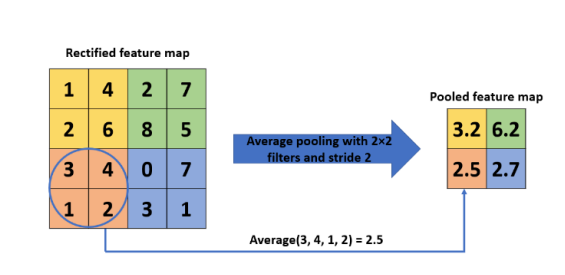

Pooling works much like the convolution operation: it requires a filter, and this filter slides over the feature map (output) obtained from the convolution operation.

A sliding window moves across the feature map during pooling. This sliding window defines the regions where the pooling function is applied, and the output value generated within each region replaces the original values contained in that region. Similar to a convolution operation, this process is repeated until the entire feature map is processed. Unlike convolution, pooling has no weights; instead, it uses a pooling operation that generates a single value as the output. The specific pooling operation chosen determines how this calculation is carried out. In the majority of cases, either Max Pooling or Average Pooling is used. Max Pooling takes the largest value, whereas Average Pooling calculates the average value.
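As a rough sketch of the mechanism just described, the function below slides a window over a feature map and reduces each window to one value. The name `pool2d` and its `reduce_fn` parameter are assumptions made for this example; passing `max` gives max pooling and passing `mean` gives average pooling.

```python
from statistics import mean


def pool2d(feature_map, size=2, stride=2, reduce_fn=max):
    """Apply `reduce_fn` to every size x size window of a 2-D feature map."""
    pooled = []
    for top in range(0, len(feature_map) - size + 1, stride):
        row = []
        for left in range(0, len(feature_map[0]) - size + 1, stride):
            # Collect the values covered by the window at this position.
            window = [
                feature_map[top + di][left + dj]
                for di in range(size)
                for dj in range(size)
            ]
            # No weights are involved: the window is reduced to a single value.
            row.append(reduce_fn(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 4],
    [5, 7, 6, 8],
    [4, 2, 0, 0],
    [2, 0, 4, 4],
]

max_pooled = pool2d(feature_map, reduce_fn=max)   # largest value per window: 7, 8, 4, 4
avg_pooled = pool2d(feature_map, reduce_fn=mean)  # average per window: 4, 5, 2, 2
print(max_pooled)
print(avg_pooled)
```

Making the reduction a parameter keeps the sliding logic in one place, so other pooling variants only need a different `reduce_fn`.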

Typically, a 2×2 filter is employed and moved across the input with a stride of 2. This means the pooling layer reduces each spatial dimension of the feature map by a factor of 2. In simpler terms, the height and width each become half of their previous size, so each feature map is reduced to one-quarter of its original number of values. For instance, if the initial feature map is [4×4], the applied pooling layer would output a feature map of size [2×2]. Similarly, a feature map of [16x16x10], with a filter of [2×2] and a stride of 2, would yield an output of [8x8x10].
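The arithmetic above follows the usual no-padding rule, output = floor((input - window) / stride) + 1, applied to height and width while the channel count is left unchanged. A minimal sketch, with the hypothetical helpers `pooled_size` and `pooled_shape` named only for this example:

```python
def pooled_size(n, pool=2, stride=2):
    """Output length along one spatial dimension, assuming no padding."""
    return (n - pool) // stride + 1


def pooled_shape(shape, pool=2, stride=2):
    """Pooling shrinks height and width; the number of channels is unchanged."""
    height, width, channels = shape
    return (
        pooled_size(height, pool, stride),
        pooled_size(width, pool, stride),
        channels,
    )


print(pooled_shape((4, 4, 1)))     # (2, 2, 1)
print(pooled_shape((16, 16, 10)))  # (8, 8, 10)

# The number of values drops to one quarter: 16*16*10 = 2560 becomes 8*8*10 = 640.
before = 16 * 16 * 10
after = 8 * 8 * 10
print(after / before)  # 0.25
```

With an odd input size, the last row and column that do not fill a complete window are dropped under this rule; for example, a dimension of 15 becomes 7.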

Most of the time, convolution and pooling layers are stacked alternately until the output feature map has shrunk sufficiently. This output then gets fed into a fully connected neural network.

There are several frameworks that can be used to implement pooling in a CNN. TensorFlow, Keras, and PyTorch are a few of the most popular deep learning frameworks widely used to develop a CNN from scratch.

Types of Pooling Layers

Let us discuss a few of the most commonly used pooling layers in the field of Deep Learning. Additionally, we strongly encourage reading research papers that introduce novel pooling approaches. Several of these papers are provided as links at the conclusion of the article.

Max Pooling

Max Pooling is the most common technique. The filter selects the maximum value from the specified window of the feature map, thus reducing the output dimensionality. Max Pooling retains the most prominent features from the feature map, since it selects the highest value. The resulting feature map emphasizes the strongest activations, so it tends to look sharper than the input. One of the major drawbacks of this technique is that it discards every other value in the window, which can throw away valuable information.

Average Pooling

Average Pooling, a.k.a. mean pooling, down-samples the input by computing the average value from the specified window of the feature map.

Global Pooling

Another type of pooling frequently employed is known as global pooling. Instead of downsampling patches of the input feature map, global pooling reduces the entire feature map to a single value.

Global pooling is used in models to summarize the presence of a feature in an image. It is also employed at times as an alternative to using a fully connected layer for transitioning from feature maps to the output prediction of the model. Global pooling can be further categorized into two types: global average pooling and global max pooling.

Both global average pooling and global max pooling are supported in Keras via the GlobalAveragePooling2D and GlobalMaxPooling2D classes, respectively.
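To make the idea concrete without depending on Keras, here is a plain-Python sketch of what global pooling computes. The helper names `global_pool` and `average` are assumptions for this example, and the feature maps are stored one channel at a time for readability (Keras itself defaults to a channels-last layout).

```python
def average(values):
    return sum(values) / len(values)


def global_pool(feature_maps, reduce_fn):
    """Reduce each 2-D channel to a single value.

    feature_maps: a list of channels, each a list of rows.
    """
    return [
        reduce_fn([value for row in channel for value in row])
        for channel in feature_maps
    ]


feature_maps = [
    [[1, 2], [3, 6]],  # channel 0
    [[0, 0], [8, 0]],  # channel 1
]

global_avg = global_pool(feature_maps, average)  # one average per channel
global_max = global_pool(feature_maps, max)      # one maximum per channel
print(global_avg)  # [3.0, 2.0]
print(global_max)  # [6, 8]
```

However large the feature maps are, the result has exactly one value per channel, which is why global pooling can stand in for a flatten-plus-dense transition.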

We have already covered Global Pooling in another blog post. Please feel free to access the provided link for an in-depth explanation.

Code Demo


Let's now take a closer look at the concept of CNNs using a simple demo for image classification in Python. We will use the CIFAR-10 dataset, which has 10 different categories of images, namely:

Airplane, Automobile, Bird, Cat, Deer, Dog, Frog, Horse, Ship, Truck.

The dataset has 60,000 color images (RGB) at 32px x 32px belonging to 10 different classes (6,000 images/class). The dataset is divided into 50,000 training and 10,000 testing images.

Importing the Libraries

Let's start by importing the required libraries and loading the dataset.

#import the required libraries

import tensorflow as tf

from tensorflow.keras import datasets, layers, models

import matplotlib.pyplot as plt

import numpy as np

#load the dataset and check the shape of the dataset

(X_train, y_train), (X_test,y_test) = datasets.cifar10.load_data()

X_train.shape, y_train.shape, X_test.shape, y_test.shape

Next, convert the labels from a 2-D array to a 1-D array,

#convert the 2-D array to 1-D array

y_train = y_train.reshape(-1,)

y_test = y_test.reshape(-1,)

define a list named 'classes' that contains the names of the different categories present in the CIFAR-10 dataset,

#different categories present in the dataset

classes = ["airplane","automobile","bird","cat","deer","dog","frog","horse","ship","truck"]

and plot the image using a defined function.

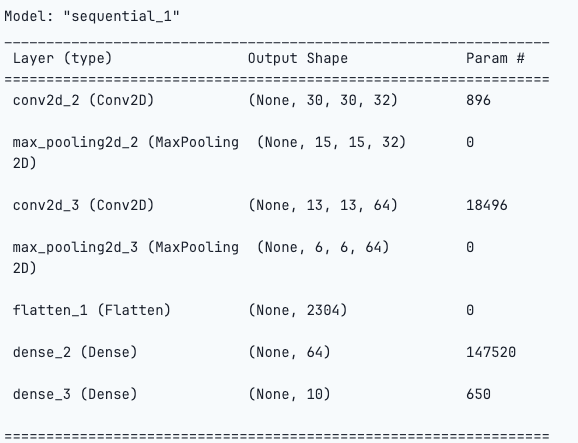

The code provided below builds a Convolutional Neural Network (CNN) using the Keras library.

#build a CNN to train on the image dataset; here we are using max pooling

model = models.Sequential([

layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu', input_shape=(32, 32, 3)),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu'),

layers.MaxPooling2D((2, 2)),

layers.Flatten(),

layers.Dense(64, activation='relu'),

layers.Dense(10, activation='softmax')

])

Let us break down the code for better understanding:

- Sequential Model:

  - The Sequential model is a linear stack of layers, allowing for the creation of the neural network layer by layer.

- Convolutional Layers:

  - layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu', input_shape=(32, 32, 3)): This is the first convolutional layer with 32 filters, a kernel size of (3, 3), a ReLU activation function, and an input shape of (32, 32, 3), indicating images with a size of 32×32 pixels and three color channels (RGB).

  - layers.MaxPooling2D((2, 2)): This is the first max pooling layer with a pool size of (2, 2), which reduces the dimensions of the feature maps.

  - layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu'): This is the second convolutional layer with 64 filters and a kernel size of (3, 3).

  - layers.MaxPooling2D((2, 2)): This is the second max pooling layer.

- Flatten Layer:

  - layers.Flatten(): This layer flattens the output from the previous layer into a one-dimensional array. It prepares the data for the fully connected layers.

- Dense (Fully Connected) Layers:

  - layers.Dense(64, activation='relu'): A fully connected layer with 64 neurons and ReLU activation.

  - layers.Dense(10, activation='softmax'): The final dense layer with 10 neurons (corresponding to the number of classes in the dataset) and a softmax activation function, which is typical for multi-class classification problems. The softmax activation normalizes the output to represent class probabilities.

This CNN architecture consists of convolutional layers for feature extraction, max pooling layers for spatial reduction, and fully connected layers for classification.
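To see how pooling shrinks the feature maps in this particular network, the sketch below traces the output shape and parameter count of each layer by hand. It assumes the Keras defaults for the layers as written above: no padding, a convolution stride of 1, and a pooling stride equal to the pool size. The helper `window_output_size` and the layer labels are made up for this example, so treat the result as a cross-check against `model.summary()` rather than a replacement for it.

```python
def window_output_size(n, window, stride):
    """Output length along one dimension with no padding."""
    return (n - window) // stride + 1


height, width, channels = 32, 32, 3
summary = []  # (layer label, output shape, trainable parameters)

for label, filters in [("conv_1", 32), ("conv_2", 64)]:
    # 3x3 convolution, stride 1: one 3x3xchannels kernel plus one bias per filter.
    conv_params = 3 * 3 * channels * filters + filters
    height = window_output_size(height, 3, 1)
    width = window_output_size(width, 3, 1)
    channels = filters
    summary.append((label, (height, width, channels), conv_params))

    # 2x2 max pooling, stride 2: roughly halves height and width, no parameters.
    height = window_output_size(height, 2, 2)
    width = window_output_size(width, 2, 2)
    summary.append((label.replace("conv", "pool"), (height, width, channels), 0))

# Flatten, then the two dense layers (weights plus one bias per neuron).
flat = height * width * channels
summary.append(("flatten", (flat,), 0))
summary.append(("dense_1", (64,), flat * 64 + 64))
summary.append(("dense_2", (10,), 64 * 10 + 10))

for label, shape, n_params in summary:
    print(f"{label:8s} {str(shape):14s} {n_params:>8d}")

total_params = sum(n_params for _, _, n_params in summary)
print("total parameters:", total_params)
```

Most of the parameters sit in the first dense layer, whose size depends directly on the flattened feature map. Without the two pooling layers, that flattened input would be far larger, which is the parameter saving the article attributes to pooling.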

Compile the model for training by specifying the optimizer, loss function, and metrics for model evaluation.

model.compile(optimizer="adam",

loss="sparse_categorical_crossentropy",

metrics=['accuracy'])

- Optimizer (optimizer="adam"):

  - The optimizer is responsible for updating the weights of the neural network during training. 'adam' is a popular optimization algorithm that adapts the learning rate for each parameter individually.

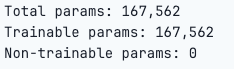


- Loss Function (loss="sparse_categorical_crossentropy"):

  - The loss function is a measure of how well the model performs. 'sparse_categorical_crossentropy' is commonly used for classification problems with multiple classes. It calculates the cross-entropy loss between the predicted probabilities and the true labels. In this case, the true labels are provided as integers (e.g., 0, 1, 2) without the need for one-hot encoding.

- Metrics (metrics=['accuracy']):

  - Metrics are used to evaluate the performance of the model. Here, 'accuracy' is specified as the metric, which calculates the percentage of correctly classified samples. It is a common metric for classification tasks.
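The loss and metric described in the list above can be written out by hand for a tiny batch. This is a simplified sketch in plain Python, not the Keras implementation (which, among other things, guards against taking the log of zero); the function names and the three made-up predictions are assumptions for this example.

```python
import math


def sparse_categorical_crossentropy(probabilities, labels):
    """Mean of -log(probability assigned to the true class).

    labels are integer class indices, so no one-hot encoding is needed.
    """
    losses = [-math.log(p[label]) for p, label in zip(probabilities, labels)]
    return sum(losses) / len(losses)


def accuracy(probabilities, labels):
    """Fraction of samples whose most probable class matches the label."""
    predictions = [max(range(len(p)), key=p.__getitem__) for p in probabilities]
    correct = sum(pred == label for pred, label in zip(predictions, labels))
    return correct / len(labels)


# Made-up softmax outputs for three samples and three classes.
probabilities = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
]
labels = [0, 1, 0]  # integer labels, as in the CIFAR-10 demo

loss = sparse_categorical_crossentropy(probabilities, labels)
acc = accuracy(probabilities, labels)
print(round(loss, 4))  # about 0.5946
print(round(acc, 4))   # 0.6667: the third sample is misclassified
```

The third sample shows why the two numbers carry different information: it counts as simply wrong for accuracy, while the loss also reflects that the model still gave the true class a probability of 0.3.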

Finally, fit the model using the training dataset; in this case, we're using 10 epochs.

model.fit(X_train, y_train, epochs=10)

For 10 epochs we can see the output as follows:

As we can see, the loss decreases with every epoch, which is a good sign. However, it starts fluctuating towards the end, which could mean the model is overfitting the training data.

Once training is complete, use the test data to evaluate the model's performance.

model.evaluate(X_test,y_test)

313/313 [==============================] - 1s 2ms/step - loss: 0.9071 - accuracy: 0.7040

Here, the model's accuracy is slightly lower on the test data than on the training data.

We've successfully built a Convolutional Neural Network using TensorFlow and Keras from the ground up!

#predict class probabilities for the test images

y_pred = model.predict(X_test)

#getting the predicted classes

y_classes = [np.argmax(pred_class) for pred_class in y_pred]

Please feel free to click the link provided to view the complete notebook.

Emerging Trends and Innovations

Pooling layers play a crucial role in Convolutional Neural Networks (CNNs). They contribute to reducing dimensionality, introducing translation invariance, and aiding in the extraction of features. Several novel approaches are emerging these days as well. Still, a good pooling method should be able to extract useful information and also reduce overfitting.

A recently proposed method, known as Avg-TopK, calculates the weighted average of the dominant features. Through extensive experiments, it has been shown that the Avg-TopK pooling method consistently attains higher accuracy in image classification compared to conventional pooling methods. This approach has proven to be highly effective at addressing the drawbacks caused by the max and average pooling layers in CNNs.
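The general idea of averaging only the dominant values in each window can be sketched as follows. This is a simplified illustration under stated assumptions, not the published Avg-TopK method: it takes a plain, equally weighted mean of the K largest values in each window, and the function name `avg_topk_pool` and its parameters are invented for this example.

```python
def avg_topk_pool(feature_map, size=2, stride=2, k=2):
    """Average the k largest values in each size x size window.

    Assumption: equal weights for the top-k values (a simplification).
    """
    pooled = []
    for top in range(0, len(feature_map) - size + 1, stride):
        row = []
        for left in range(0, len(feature_map[0]) - size + 1, stride):
            window = [
                feature_map[top + di][left + dj]
                for di in range(size)
                for dj in range(size)
            ]
            # Keep only the k strongest activations, then average them.
            strongest = sorted(window, reverse=True)[:k]
            row.append(sum(strongest) / len(strongest))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 4],
    [5, 7, 6, 8],
    [4, 2, 0, 0],
    [2, 0, 4, 4],
]

pooled = avg_topk_pool(feature_map, k=2)
print(pooled)  # [[6.0, 7.0], [3.0, 4.0]]
```

In this simplified form, `k=1` reduces to max pooling and `k=size*size` reduces to average pooling, so K acts as a dial between the two traditional methods.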

The results from this pooling approach have been shown to overcome the information loss that was a major drawback of traditional pooling layers.

Researchers are increasingly exploring novel pooling techniques beyond the traditional max and average pooling methods. Techniques like Inter-map Pooling, Rank-based Average Pooling, Per Pixel Pyramid Pooling, Weighted Pooling, and Genetic-based Pooling are gaining prominence for their efficiency and ability to capture global context.

These evolving trends in pooling reflect a continuous effort to enhance the robustness, interpretability, and generalization capabilities of CNNs across diverse applications, ranging from image classification to object detection and segmentation.

Conclusion

In this article, we learned how convolution operations take place in a CNN. We also covered pooling, an important concept in the CNN architecture, and how it works. Finally, we built a CNN model from scratch using Keras and the TensorFlow framework.

In conclusion, pooling layers in Convolutional Neural Networks (CNNs) play a major role in enhancing computational efficiency, managing complexity, and extracting crucial features from input data.

While traditional max and average pooling methods remain fundamental, a few of the emerging trends, such as global pooling, attention mechanisms, and Avg-TopK, indicate a dynamic evolution in the field.

As research in this area progresses, these innovative approaches contribute significantly to the advancement of CNN architectures, leading to more robust and sophisticated models.

We hope you enjoyed the article! Thanks for reading!