I love interactive things, but I’m not a fan of expensive devices, especially the ones we have to buy year after year to have the latest hardware in our hands!

I’m a fan of accessible technology!

With that in mind, today I’m going to show you how to control elements in a 3D scene using just your hands, a webcam, and a web browser. The key focus here is converting a 2D screen into a 3D space, with full depth control. I’ll keep the focus on that!

To achieve this result, we will use MediaPipe and Three.js.

So let the fun begin!

Setup

First, we need to create our @mediapipe/hands instance to detect the hand landmarks.

import { Hands } from "@mediapipe/hands";
import { Camera } from "@mediapipe/camera_utils";

export class MediaPipeHands {
  constructor(videoElement, onResultsCallback) {
    // Load the model files from the CDN
    const hands = new Hands({
      locateFile: (file) => {
        return `https://cdn.jsdelivr.net/npm/@mediapipe/hands/${file}`;
      },
    });
    hands.setOptions({
      maxNumHands: 1,
      modelComplexity: 1,
      minDetectionConfidence: 0.5,
      minTrackingConfidence: 0.5,
    });
    // Called with the detection results of every processed frame
    hands.onResults(onResultsCallback);
    // Feed each webcam frame to the hand detector
    this.camera = new Camera(videoElement, {
      async onFrame() {
        await hands.send({ image: videoElement });
      },
      width: 1280,
      height: 720,
    });
  }

  start() {
    if (this.camera) this.camera.start();
  }
}

I’ve created a class called MediaPipeHands that contains this implementation, so we can easily add it to the code and reuse it anywhere.

Like this:

this.mediaPipeHands = new MediaPipeHands(videoElement, (landmarks) =>
  this.onMediaPipeHandsResults(landmarks)
);
this.mediaPipeHands.start();

onMediaPipeHandsResults is the callback that receives the landmarks mentioned above; it is called by hands.onResults.
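The callback can also fire on frames where no hand is visible, so it may help to guard before reading any landmark. A minimal sketch, assuming the results object exposes multiHandLandmarks as it does in the code later in this article; getFirstHand is a made-up helper name, not part of the library:

```javascript
// Hypothetical helper: returns the 21 landmarks of the first detected hand,
// or null when the frame contains no hand.
function getFirstHand(results) {
  const hands = results && results.multiHandLandmarks;
  if (!hands || hands.length === 0) return null;
  return hands[0];
}

// Usage with a mocked results object (one hand, 21 dummy landmarks).
const mockResults = {
  multiHandLandmarks: [
    Array.from({ length: 21 }, (_, i) => ({ x: i / 21, y: 0.5, z: 0 })),
  ],
};

const hand = getFirstHand(mockResults);
console.log(hand ? hand.length : "no hand"); // logs 21
console.log(getFirstHand({ multiHandLandmarks: [] })); // logs null
```

Returning null keeps the rest of the callback simple: one early return and no nested checks.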

Now, let’s create our WebGL scene with Three.js, just to have some elements we can interact with.

Nothing special, a simple Three.js scene 😉

Here’s another class for quick prototyping, a plug-and-play static class that can be accessed anywhere in your code. Keep in mind that it doesn’t follow any standard, it’s just to make my life easier.

import {
  Scene,
  PerspectiveCamera,
  Color,
  WebGLRenderer,
  AmbientLight,
  DirectionalLight,
  SpotLight,
  Clock,
} from "three";

export class ScenesManager {
  static scene;
  static camera;
  static renderer;
  static clock;

  static setup() {
    ScenesManager.scene = new Scene();
    ScenesManager.scene.background = new Color(0xcccccc);

    ScenesManager.camera = new PerspectiveCamera(
      45,
      window.innerWidth / window.innerHeight,
      0.01,
      100
    );
    ScenesManager.camera.position.set(0, 0, 2);

    ScenesManager.clock = new Clock();

    ScenesManager.renderer = new WebGLRenderer({ antialias: true });
    ScenesManager.renderer.setSize(window.innerWidth, window.innerHeight);
    ScenesManager.renderer.setPixelRatio(window.devicePixelRatio);
    ScenesManager.renderer.shadowMap.enabled = true;

    const ambLight = new AmbientLight(0xffffff, 1);
    ScenesManager.scene.add(ambLight);

    // Attach the canvas to the page
    document.body.appendChild(ScenesManager.renderer.domElement);
  }

  static render() {
    ScenesManager.renderer.render(ScenesManager.scene, ScenesManager.camera);
  }
}

Just import it and initialize it to have a scene:

import { ScenesManager } from "./ScenesManager.js";
// ...
ScenesManager.setup();

Creating the controls

Let’s start using our landmarks, the Cartesian coordinates that come from @mediapipe/hands, as I mentioned.

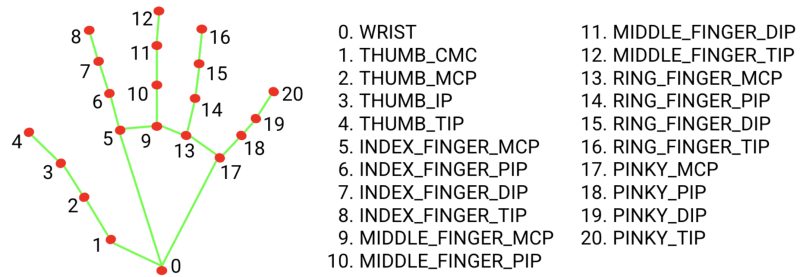

Here is the reference so you know what I’m talking about and understand the values we will use.

To move our elements on the x/y-axis I’ll pick only one point, 9. MIDDLE_FINGER_MCP. My choice is based only on the position of this coordinate: it’s at the center of everything, so it makes sense.

At least for me 😅

We’re using only one hand in this exploration, so only the first 21 indexes of the landmarks matter.

If you want to use two hands, set maxNumHands to 2. MediaPipe then returns the second hand as multiHandLandmarks[1], so in a flattened array its values start at index 21.
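One way to work with a single array for both hands is to flatten the per-hand lists, so the second hand starts at index 21. A minimal sketch, assuming MediaPipe reports each hand as its own list of 21 landmarks inside multiHandLandmarks and that maxNumHands is set to 2; flattenHands and handOffset are hypothetical helpers:

```javascript
const LANDMARKS_PER_HAND = 21;

// Hypothetical helper: turns [[hand0 landmarks], [hand1 landmarks]]
// into one flat array of landmarks.
function flattenHands(multiHandLandmarks) {
  return multiHandLandmarks.flat();
}

// Index where a given hand starts inside the flat array.
function handOffset(handIndex) {
  return handIndex * LANDMARKS_PER_HAND;
}

// Usage with two mocked hands; each landmark remembers its hand for clarity.
const makeHand = (hand) =>
  Array.from({ length: LANDMARKS_PER_HAND }, (_, i) => ({
    hand,
    index: i,
    x: 0.5,
    y: 0.5,
    z: 0,
  }));

const flat = flattenHands([makeHand(0), makeHand(1)]);
const secondHandMcp = flat[handOffset(1) + 9]; // 9. MIDDLE_FINGER_MCP of hand 1
console.log(flat.length, secondHandMcp.hand, secondHandMcp.index); // logs 42 1 9
```

For a single hand, only the first entry is needed: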

if (landmarks.multiHandLandmarks.length === 1) {
  for (let l = 0; l < 21; l++) {
    // Landmarks are normalized (0..1); flip and center them around the origin
    this.handsObj.children[l].position.x = -landmarks.multiHandLandmarks[0][l].x + 0.5;
    this.handsObj.children[l].position.y = -landmarks.multiHandLandmarks[0][l].y + 0.5;
    this.handsObj.children[l].position.z = -landmarks.multiHandLandmarks[0][l].z;
  }
}

This is the result:

As you can see, the landmark points keep the same scale even in a 3D space. That’s because @mediapipe/hands has no proper z-axis (its z value is only a depth relative to the wrist, not a distance from the camera), so the hand is effectively 2D. Here the magic starts to happen! ⭐️

Yes, you’re right, it isn’t magic, but I like the term! 😅

Z-Depth: 2D to 3D conversion

My idea is to take 2 landmark points, calculate the distance between them in 2D space, then apply it as depth.

As you can see in the video above, the cursor (big sphere) already moves in the correct direction, opposite to the landmarks.

To make it happen I selected points 0. WRIST and 10. MIDDLE_FINGER_PIP from the landmarks.

// Project both reference points to 2D screen space
this.refObjFrom.position.copy(this.gestureCompute.depthFrom);
const depthA = this.to2D(this.refObjFrom);
this.depthPointA.set(depthA.x, depthA.y);

this.refObjTo.position.copy(this.gestureCompute.depthTo);
const depthB = this.to2D(this.refObjTo);
this.depthPointB.set(depthB.x, depthB.y);

// The bigger the hand appears on screen, the bigger the distance
const depthDistance = this.depthPointA.distanceTo(this.depthPointB);

this.depthZ = THREE.MathUtils.clamp(
  THREE.MathUtils.mapLinear(depthDistance, 0, 1000, -3, 5),
  -2,
  4
);

I limit this value between -2 and 4 to make it friendly, but it isn’t necessary; it’s all about how it feels to you as a user.
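To see what this mapping does with concrete numbers, here is a sketch in plain JavaScript. It assumes to2D (not shown in this article) returns pixel coordinates; ndcToPixels illustrates one common way to get them from a point that was already projected to normalized device coordinates, for example with Three.js's Vector3.project. mapLinear and clamp re-implement the MathUtils formulas so the snippet runs without Three.js, and all function names here are for illustration only:

```javascript
// Assumed conversion: normalized device coordinates (-1..1, y up)
// to pixel coordinates (origin top-left, y down).
function ndcToPixels(ndc, width, height) {
  return {
    x: ((ndc.x + 1) / 2) * width,
    y: ((1 - ndc.y) / 2) * height,
  };
}

// Same formula as THREE.MathUtils.mapLinear.
function mapLinear(x, a1, a2, b1, b2) {
  return b1 + ((x - a1) * (b2 - b1)) / (a2 - a1);
}

// Same behavior as THREE.MathUtils.clamp.
function clamp(value, min, max) {
  return Math.max(min, Math.min(max, value));
}

// Pixel distance between the two reference landmarks -> depth value.
function depthFromDistance(pixelDistance) {
  return clamp(mapLinear(pixelDistance, 0, 1000, -3, 5), -2, 4);
}

// Two projected points on a 1280x720 viewport.
const pointA = ndcToPixels({ x: 0, y: 0 }, 1280, 720); // { x: 640, y: 360 }
const pointB = ndcToPixels({ x: 0, y: 0.5 }, 1280, 720); // { x: 640, y: 180 }
const pixelDistance = Math.hypot(pointB.x - pointA.x, pointB.y - pointA.y);

console.log(pixelDistance); // logs 180
console.log(depthFromDistance(0)); // logs -2 (mapped to -3, then clamped)
console.log(depthFromDistance(500)); // logs 1
console.log(depthFromDistance(1000)); // logs 4 (mapped to 5, then clamped)
```

A hand far from the webcam gives a short pixel distance and a low depth value; a hand close to the webcam gives a long distance and a high value.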

To move in the correct direction, we need to negate this value, so that when the hand is near the camera the cursor is far from the camera.

Like this:

this.target.position.set(
  this.gestureCompute.from.x,
  this.gestureCompute.from.y,
  -this.depthZ
);

Gestures

I’ve used the same logic to compute the closed_fist gesture.

As it is the only gesture we need to grab an object, we don’t need to import another dependency like GestureRecognizer. This saves load time and memory.

So I took 9. MIDDLE_FINGER_MCP and 12. MIDDLE_FINGER_TIP and applied the same approach: based on the distance between them, my hand is closed or not!

this.gestureCompute.from.set(
  -landmarks.multiHandLandmarks[0][9].x + 0.5,
  -landmarks.multiHandLandmarks[0][9].y + 0.5,
  -landmarks.multiHandLandmarks[0][9].z
).multiplyScalar(4);

this.gestureCompute.to.set(
  -landmarks.multiHandLandmarks[0][12].x + 0.5,
  -landmarks.multiHandLandmarks[0][12].y + 0.5,
  -landmarks.multiHandLandmarks[0][12].z
).multiplyScalar(4);

const pointsDist = this.gestureCompute.from.distanceTo(
  this.gestureCompute.to
);

this.closedFist = pointsDist < 0.35;

Collision Test

Now we need to make it more fun (for me, all of this is fun lol), so let’s calculate the collision between our cursor and the objects and start grabbing them.

I wrote a simple AABB test because it was more accurate and performant in my testing than using a raycaster.

But remember, every case is different, so another algorithm, or even a Raycaster, may perform better for you!
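For intuition, an AABB (axis-aligned bounding box) test only compares the min and max corners of two boxes on each axis. A minimal sketch in plain JavaScript, independent of Three.js; the { min, max } box shape and the helper names are assumptions for illustration:

```javascript
// Two boxes overlap only if their ranges overlap on x, y and z.
// Boxes that merely touch are counted as intersecting here.
function aabbIntersects(a, b) {
  return (
    a.min.x <= b.max.x && a.max.x >= b.min.x &&
    a.min.y <= b.max.y && a.max.y >= b.min.y &&
    a.min.z <= b.max.z && a.max.z >= b.min.z
  );
}

// Hypothetical helper: builds a cube-shaped box around a center point.
function boxAround(center, halfSize) {
  return {
    min: { x: center.x - halfSize, y: center.y - halfSize, z: center.z - halfSize },
    max: { x: center.x + halfSize, y: center.y + halfSize, z: center.z + halfSize },
  };
}

const cursorBox = boxAround({ x: 0, y: 0, z: 0 }, 0.1);
const nearBox = boxAround({ x: 0.15, y: 0, z: 0 }, 0.1);
const farBox = boxAround({ x: 1, y: 0, z: 0 }, 0.1);

console.log(aabbIntersects(cursorBox, nearBox)); // logs true
console.log(aabbIntersects(cursorBox, farBox)); // logs false
```

In the scene itself, the same check is done with Box3: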

this.targetBox3.setFromObject(this.target); // Target is my cursor

this.objects.forEach((obj) => {
  this.objectBox3.setFromObject(obj);
  const targetCollision = this.targetBox3.intersectsBox(this.objectBox3);
  if (targetCollision) {
    // Do something... I did a drag and drop interaction.
  }
});

So that’s our result:

You can preview it and browse through the code here.

Final words

I hope you enjoy it as much as I did! It was a lot of fun and enriching for me.

We finally have good hand tracking in the browser. This wasn’t possible for a long time. I’m not talking about the technology itself, which has been around for years, but its performance wasn’t as good as it is nowadays!

With this, we have a universe of possibilities to explore and the chance to create interesting experiences for any audience. I believe in and defend technology for everyone.

Here you can try a nice implementation of this controller, an Endless Spaceship Game.

Thanks very much for reading this and see you next time!