Three years ago, I started developing a lightweight interpolation library called Interpol, a low-level engine for handling value tweening and smooth motion on the web. You may have already come across it on my social channels, where I’ve shared a few experiments. In this article, I’ll explain the origins of the library and how it has become a key part of my animation workflow.

The library is published as @wbe/interpol. Interpol is designed to interpolate sets of values using a GSAP-like API. Its main difference is that it isn’t a “real” animation library, it’s an interpolation engine.

My observation was that in many projects, I didn’t need all the power of GSAP or anime.js, but just a simple function to interpolate values, imported from a much lighter library. This is a mechanism that I recreated several times by hand, so I decided to make a library out of it.

Requirements

My requirements for this library were as follows:

- Lightweight: the bundle size should be around 3.5kB

- Low-level: maintainable and predictable, no magic, no DOM API, only interpolation of values

- Performant: Interpol instances should be batched and updated on a single Ticker loop instance

- Multiple interpolations: need to interpolate a set of values per instance, not only one

- Chaining interpolations: need to create Timelines, with instance offsets

- Close to the GSAP and anime.js API: should look like what I’m already used to, in order to not have to adapt myself to a new API

- Strongly typed: written in TypeScript with strong types

- Optional RAF: give the possibility to not use the internal requestAnimationFrame. The global update logic should be callable manually in a custom loop

Interpolation, what are we talking about?

To summarize, a linear interpolation (called lerp) is a mathematical function that finds a value between two others. It returns a weighted average of the two values, depending on a given amount between 0 and 1:

function lerp(start: number, end: number, amount: number): number {
  return start + (end - start) * amount
}

And we can use it like this:

lerp(0, 100, 0.5) // 50
lerp(50, 100, 0.1) // 55
lerp(10, 130432, 0.74) // 96522.28

For now, nothing special. The magic happens when we use it in a loop. The main feature we can exploit from the browser API for creating animations is the requestAnimationFrame function, which we call “RAF”. With a strong knowledge of RAF, we can do wonderful things. And with RAF and lerp combined, we can create smoothy-floppy-buttery-coolify-cleanex transitions between two values (yes, all of that).

The most classic usages of lerp in a RAF are lerp damping and tweening. Interpol is a time-based interpolation engine, so it uses the tweening approach. Its time value is normalized between 0 and 1, and that is what represents the progression of our interpolation.

const loop = (): void => {
  // current timestamp in ms
  const now = performance.now()
  const value = lerp(from, to, easing((now - start) / duration))
  // Do something with the value...
  requestAnimationFrame(loop)
}
requestAnimationFrame(loop)

All this logic is essentially the same as that used by animation libraries like GSAP or anime.js. Many other features are added to those libraries, of course, but this constitutes the core of a tween engine.
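To make the loop above a little more concrete, here is a minimal, self-contained sketch of a time-based tween. The names `tweenAt` and `easeInQuad`, as well as the choice to clamp the progress, are assumptions made for this example and are not taken from Interpol’s source. The value depends only on the elapsed time, so the function can be called from a RAF callback or from any custom loop.

```typescript
type Easing = (t: number) => number

const lerp = (start: number, end: number, amount: number): number =>
  start + (end - start) * amount

// Hypothetical easing used for the example
const easeInQuad: Easing = (t) => t * t

// Returns the tweened value for a given elapsed time in ms.
// The progress is clamped to [0, 1] so the value never goes past `to`.
function tweenAt(
  from: number,
  to: number,
  durationMs: number,
  elapsedMs: number,
  easing: Easing = (t) => t
): number {
  const progress = Math.min(Math.max(elapsedMs / durationMs, 0), 1)
  return lerp(from, to, easing(progress))
}

// Sample the same tween at a few points in time
const samples = [0, 250, 500, 1000, 1500].map((elapsedMs) =>
  tweenAt(0, 100, 1000, elapsedMs, easeInQuad)
)
// samples is [0, 6.25, 25, 100, 100]
```

Past the 1000ms boundary the value stays at 100, which is the time-bound behavior that characterizes a tween.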

Tweening vs Damping

A brief detour on this subject, which seems important to me to understand well before going further. Initially, it took me some time to build a mental model of how the lerp function works in the context of tweening versus damping. I finally found a way to explain it with these graphs.

Tweening

With tweening, interpolation is strictly time-bound. When we tween a value over 1000ms, that duration defines an absolute boundary. The animation completes precisely at that moment.

In a second step, we can add an easing function that controls how the value progresses within that fixed window, but the window itself remains constant. Each colored curve represents an interpolation of our value, from 0 to 100, in 1000ms, with a different easing function.

const from = 0
const to = 100
const duration = 1000
const easing = (t: number) => t * t
const progress = easing((now - start) / duration)

// in a tween context, what we update on each frame
// is the last argument: the progress (the amount)
const value = lerp(from, to, progress)

Damping

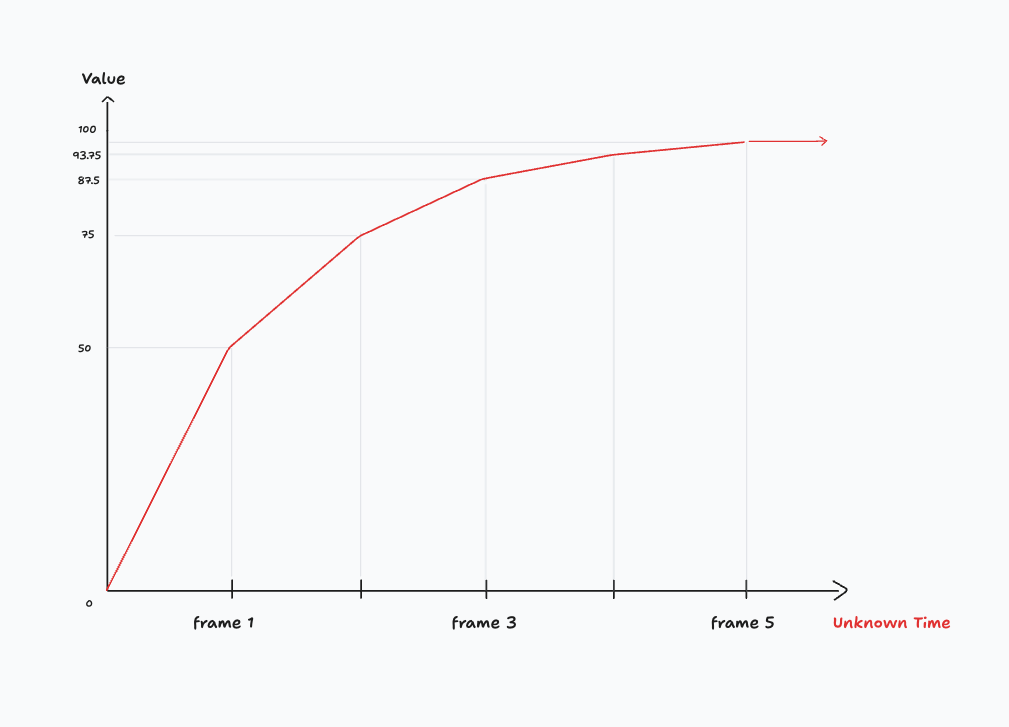

When we talk about “lerp”, we often refer to the “damping lerp” approach. However, this isn’t how Interpol works, since its interpolations are time-based. With “damped lerp” (or “damping lerp”), there is no concept of time involved. It’s purely frame-based. This technique updates values on each frame, without considering elapsed time. See the graph below for a visual explanation.

let current = 0
let target = 100
let amount = 0.05

// in a damping lerp, what we update on each frame
// is the first argument: the current value
current = lerp(current, target, amount)

I intentionally renamed the properties passed to the lerp function to make them fit better in this context. Here, it’s better to refer to current and target, since these are values that will be mutated.
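As a small illustration of what “frame-based” means here, the sketch below applies the damping lerp a fixed number of times and records each step. The helper name `dampOverFrames` is made up for this example. Because nothing in the computation depends on time, one second of animation produces a different result at 60 FPS than at 120 FPS.

```typescript
const lerp = (start: number, end: number, amount: number): number =>
  start + (end - start) * amount

// Applies the damping lerp once per frame and returns every intermediate value
function dampOverFrames(
  initial: number,
  target: number,
  amount: number,
  frameCount: number
): number[] {
  const steps: number[] = []
  let current = initial
  for (let i = 0; i < frameCount; i++) {
    current = lerp(current, target, amount)
    steps.push(current)
  }
  return steps
}

// The same wall-clock second, rendered at two different frame rates
const at60fps = dampOverFrames(0, 100, 0.05, 60)
const at120fps = dampOverFrames(0, 100, 0.05, 120)

// The value gets closer to the target on every frame without ever reaching it,
// and the 120 FPS run ends closer to 100 than the 60 FPS run.
const end60 = at60fps[at60fps.length - 1]
const end120 = at120fps[at120fps.length - 1]
```

This frame-rate dependency is the practical difference with a tween, where the elapsed time alone decides the value.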

To conclude this aside, the lerp function is the basis for several web animation techniques. Lerp can be used on its own, while tweening is a technique that depends on the lerp function. This distinction took me a while to formulate and I felt it was important to share.

That being said, let’s return to Interpol (a tween library, if we can call it that). What does the API for this tool look like?

Dive inside the API

Interpol constructor

Here is the constructor of an Interpol instance:

import { Interpol } from "@wbe/interpol"

new Interpol({
  x: [0, 100],
  y: [300, 200],
  duration: 1300,
  onUpdate: ({ x, y }, time, progress, instance) => {
    // Do something with the values...
  },
})

First, we define a set of values to interpolate. Key names can be anything (x, y, foo, bar, etc.). Because we’re not referencing any DOM element, we need to explicitly define “where we start” and “where we want to go”. This is the first main difference with animation libraries that can read information from the DOM and implicitly define the from value.

// [from, to] array
x: [0, 100],
// Use an object instead of an array (with optional specific ease)
foo: { from: 50, to: 150, ease: "power3.in" },
// Implicit from is 0, like [0, 200]
bar: 200,
// Use computed values that can be re-evaluated on demand
baz: [100, () => Math.random() * 500],

In a second step, we define options like the common duration, paused on init, immediateRender & ease function.

// in ms, but can be configured from the global settings
duration: 1300,
// start paused
paused: true,
// Execute the onUpdate method once when the instance is created
immediateRender: true,
// easing function or typed string 'power3.out' etc.
ease: (t) => t * t,

Then, we have callbacks that are called at different moments of the interpolation lifecycle. The most important one is onUpdate. This is where the magic happens.

onUpdate: ({ x, y }, time, progress, instance) => {
  // Do something with the x and y values
  // time is the elapsed time in ms
  // progress is the normalized progress between 0 and 1
},
onStart: ({ x, y }, time, progress, instance) => {
  // the initial values
},
onComplete: ({ x, y }, time, progress, instance) => {
  // done!
}

In terms of developer experience, I prefer merging props, options & callbacks at the same level in the constructor object. It reduces mental overhead when reading an animation, especially in a complex timeline. On the other hand, the Web Animations API separates keyframes & options; motion.dev did something similar. It’s probably to stay as close as possible to the native API, which is a smart move too.

It’s just a matter of getting used to it, but it’s also the kind of question that keeps library developers awake at night. Once the API is set, it’s difficult to change it. In the case of Interpol, I don’t have this kind of problem, as long as the library remains relatively niche. But for API design purposes, and because I like to procrastinate on this kind of detail, these are important questions.
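To picture what a flat constructor object implies internally, here is a minimal sketch of how value keys could be told apart from options and callbacks, and how the different value shapes could be normalized. The `RESERVED_KEYS` list, `normalizeValue` and `splitConfig` are hypothetical names for this example and are not taken from Interpol’s source.

```typescript
type Computed = () => number
type Bound = number | Computed

type ValueInput =
  | number
  | [Bound, Bound]
  | { from: Bound; to: Bound; ease?: string }

interface NormalizedValue {
  from: Bound
  to: Bound
  ease?: string
}

// Keys treated as options or callbacks in this sketch (assumed list)
const RESERVED_KEYS = new Set<string>([
  "duration", "paused", "immediateRender", "ease", "delay",
  "onUpdate", "onStart", "onComplete",
])

// Turns every accepted shape into a { from, to } object
function normalizeValue(input: ValueInput): NormalizedValue {
  if (typeof input === "number") return { from: 0, to: input }
  if (Array.isArray(input)) return { from: input[0], to: input[1] }
  return input.ease !== undefined
    ? { from: input.from, to: input.to, ease: input.ease }
    : { from: input.from, to: input.to }
}

// Splits a flat config object into interpolated values and everything else
function splitConfig(config: Record<string, unknown>): {
  values: Record<string, NormalizedValue>
  options: Record<string, unknown>
} {
  const values: Record<string, NormalizedValue> = {}
  const options: Record<string, unknown> = {}
  for (const [key, entry] of Object.entries(config)) {
    if (RESERVED_KEYS.has(key)) options[key] = entry
    else values[key] = normalizeValue(entry as ValueInput)
  }
  return { values, options }
}

const parsed = splitConfig({ x: [0, 100], bar: 200, duration: 1300 })
// parsed.values holds x and bar, parsed.options holds duration
```

The trade-off of a flat object is visible here: option names become reserved words that can no longer be used as value keys.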

Interpol methods

Now, what about methods? I kept the API surface to a minimum, and I explain why below.

itp.play()
itp.reverse()
itp.pause()
itp.resume()
itp.stop()
itp.refresh()
itp.progress()

Then, we can play with them in this sandbox!

The GUI works thanks to computed properties, defined as functions that return the latest GUI_PARAMS values each time you update the x or scale sliders during the animation.

const itp = new Interpol({
  x: [0, () => GUI_PARAMS.x]
})

// call refresh to recompute all computed values
itp.refresh()
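The idea behind computed values and `refresh()` can be sketched as follows: functions are resolved once into plain numbers, and those cached numbers are used until a refresh is requested. The `ComputedRange` class and its methods are hypothetical and only illustrate the principle; they are not Interpol’s implementation.

```typescript
type MaybeComputed = number | (() => number)

const resolve = (input: MaybeComputed): number =>
  typeof input === "function" ? input() : input

// Hypothetical stand-in for the GUI state used in the sandbox
const GUI_PARAMS = { x: 100 }

class ComputedRange {
  private source: [MaybeComputed, MaybeComputed]
  private from = 0
  private to = 0

  constructor(source: [MaybeComputed, MaybeComputed]) {
    this.source = source
    this.refresh()
  }

  // Re-evaluates computed inputs; until then the cached numbers are used
  refresh(): void {
    this.from = resolve(this.source[0])
    this.to = resolve(this.source[1])
  }

  valueAt(progress: number): number {
    return this.from + (this.to - this.from) * progress
  }
}

const range = new ComputedRange([0, () => GUI_PARAMS.x])
const before = range.valueAt(1) // 100

GUI_PARAMS.x = 300
const stale = range.valueAt(1) // still 100, nothing was recomputed

range.refresh()
const refreshed = range.valueAt(1) // 300
```

Caching the resolved numbers keeps the per-frame work to a single lerp, at the cost of an explicit refresh call when the inputs change.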

So far, there shouldn’t be any surprise if you are used to animation libraries. The best is coming with Timelines.

Timeline & algorithm

Interpolating a set of values is one thing. Building a sequencing engine on top of it is a completely different challenge. The constructor and methods look like what we already know:

import { Timeline } from "@wbe/interpol"

const tl = new Timeline()

// Pass Interpol constructor options to add()
tl.add({
  x: [-10, 100],
  duration: 750,
  ease: t => t * t,
  onUpdate: ({ x }, time, progress) => {},
})

tl.add({
  foo: [100, 50],
  bar: [0, 500],
  duration: 500,
  ease: "power3.out",
  onUpdate: ({ foo, bar }, time, progress) => {},
})

// Second possibility: pass a callback function as the parameter
tl.add(() => {
  // Reach this point at 50ms via the absolute offset
}, 50)

tl.play(.3) // start at 30% of the timeline progress, why not?

This API allows us to sequence Interpol instances via add() methods. It’s one of the most challenging parts of the library because it requires maintaining an array of Interpol instances internally, with some programmatic properties, like their current position, current progress, the duration of the current instance, their possible offset, etc.

In the Timeline algorithm, we basically can’t call the play() method of each “add”. It would be a nightmare to control. On the other hand, we can calculate a timeline progress (our percent) as long as we know the time position of each of the Interpol instances it holds. This algorithm is based on the fact that a progress lower than 0 or higher than 1 is not animated. In this case, the Interpol instance is not playing at all, but is “progressed” lower than 0 or higher than 1.

Take this screen capture: it displays four animated elements in four adds (meaning Interpol instances in the Timeline).

As said before, we calculate a global timeline progress used to modify the progression value of each internal Interpol. All adds are impacted by the global progress, even if it’s not yet their turn to be animated. For example, we know that the green square should start its own interpolation at a relative timeline progress of .15 (approximately), or 15% of the total timeline, but it will be asked to progress as soon as the Timeline is playing.

updateAdds(tlTime: number, tlProgress: number) {
  this.adds.forEach((add) => {
    // calc the progress of each add; a value below 0 or above 1
    // means the add is not currently animating
    add.progress.current = (tlTime - add.time.start) / add.instance.duration
    add.instance.progress(add.progress.current)
  })
}

tick(delta: number) {
  // calc the timeline time and progress spent from the start
  // and update all the interpolations
  this.updateAdds(tlTime, tlProgress)
}

It’s then up to each add.instance.progress() to handle its own logic and to execute its own onUpdate() when needed. Check the logs on the same animation: onUpdate is called only during the interpolation, and that’s what we want.
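The mechanism described above can be reduced to a small runnable sketch. `MiniTimeline` is a hypothetical class written for this example, not the library’s Timeline. It assumes that each add notifies its callback only when its clamped progress actually changes, which is one possible way to keep callbacks silent outside of an add’s own window.

```typescript
interface TimelineAdd {
  startMs: number
  durationMs: number
  lastProgress: number
  onUpdate: (progress: number) => void
}

const clamp01 = (n: number): number => Math.min(Math.max(n, 0), 1)

class MiniTimeline {
  private adds: TimelineAdd[] = []

  add(startMs: number, durationMs: number, onUpdate: (progress: number) => void): this {
    this.adds.push({ startMs, durationMs, lastProgress: 0, onUpdate })
    return this
  }

  get totalDurationMs(): number {
    return this.adds.reduce((max, a) => Math.max(max, a.startMs + a.durationMs), 0)
  }

  // Moves the whole timeline to a normalized progress between 0 and 1
  progress(tlProgress: number): void {
    const tlTime = clamp01(tlProgress) * this.totalDurationMs
    for (const add of this.adds) {
      // Raw progress is below 0 before the add starts and above 1 once it is done
      const raw = (tlTime - add.startMs) / add.durationMs
      const clamped = clamp01(raw)
      // Only notify when the clamped progress actually changed
      if (clamped !== add.lastProgress) {
        add.lastProgress = clamped
        add.onUpdate(clamped)
      }
    }
  }
}

const calls: string[] = []
const timeline = new MiniTimeline()
  .add(0, 100, (p) => { calls.push(`a:${p}`) })
  .add(100, 100, (p) => { calls.push(`b:${p}`) })

timeline.progress(0.25) // tlTime is 50ms: only "a" is updated
timeline.progress(0.75) // tlTime is 150ms: "a" completes and "b" is halfway
// calls is ["a:0.5", "a:1", "b:0.5"]
```

Every add receives the global time on each call, yet “b” stays silent until the timeline reaches its start.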

Try it yourself!

Offsets

Another topic that is of particular interest to me is offsets. In fact, when animating something with a timeline, we always need this functionality. It consists of repositioning a tween in the timeline, either relative to its natural position or absolutely. All the timeline examples below use offsets in order to overlap instances. Too many sequential animations feel weird to me.

Technically, offsets are about recalculating the start and end of every add, depending on its own offset.

tl.add({}, 110) // start absolutely at 110ms
tl.add({}, "-=110") // start relatively at -110ms
tl.add({}, "+=110") // start relatively at +110ms

The main challenge I encountered with this topic was testing. To ensure the offset calculations work correctly, I had to write numerous unit tests. This was far from wasted time, as I still refer to them today to remember how offset calculations should behave in certain edge cases.

Example: What happens if an animation is added with a negative offset and its start is positioned before the timeline’s start? All the answers to this kind of question are covered in this timeline.offset.test.

it("absolute offset should work with number", () => {
  return Promise.all([
    // when absolute offset of the second add is 0
    /**
      0             100           200           300
      [- itp1 (100) -]
      [ ------- itp2 (200) ------ ]
      ^
      offset start at absolute 0 (number)
                                  ^
                                  total duration is 200
    */
    testTemplate([[100], [200, 0]], 200),
  ])
})

When properly written, tests are my guarantee that my code aligns with my project’s business rules. This is a crucial point for a library’s evolution. I would also say that I have learned to protect myself from myself by “fixing” strict rules through tests, which prevent me from breaking everything when trying to add new features. And when you need to cover multiple topics in your API, this gives you room to breathe.
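Coming back to the offset calculation itself, the sketch below shows one way to resolve a list of `[duration, offset]` pairs into absolute start and end times. Everything here is an assumption made for illustration: the `placeAdds` helper, the choice to treat the end of the previous add as the “natural position”, and the decision to clamp a start time to 0. The real rules are the ones encoded in the library’s own tests.

```typescript
type Offset = number | string

interface PlacedAdd {
  startMs: number
  endMs: number
}

// Resolves [duration, offset] pairs into absolute start and end times
function placeAdds(entries: Array<[number, Offset?]>): PlacedAdd[] {
  const placed: PlacedAdd[] = []
  let cursorMs = 0 // end of the previously placed add
  for (const [durationMs, offset] of entries) {
    let startMs = cursorMs
    if (typeof offset === "number") {
      startMs = offset // absolute position
    } else if (typeof offset === "string") {
      const match = /^([+-])=(\d+(?:\.\d+)?)$/.exec(offset)
      if (!match) throw new Error(`Invalid offset: ${offset}`)
      const delta = Number(match[2])
      startMs = cursorMs + (match[1] === "-" ? -delta : delta)
    }
    // Assumption: an add never starts before the timeline itself
    startMs = Math.max(startMs, 0)
    const endMs = startMs + durationMs
    placed.push({ startMs, endMs })
    cursorMs = endMs
  }
  return placed
}

const totalDuration = (adds: PlacedAdd[]): number =>
  adds.reduce((max, add) => Math.max(max, add.endMs), 0)

// Same scenario as the test above: 100ms, then 200ms placed at absolute 0
const absoluteCase = totalDuration(placeAdds([[100], [200, 0]])) // 200

// A relative offset pulls the second add 50ms before the end of the first
const relativeCase = placeAdds([[100], [200, "-=50"]])
// second add starts at 50 and ends at 250
```

Writing the expected positions down as small cases like these is also a convenient starting point for unit tests.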

Make choices & find some workarounds

Back to the “lightweight library” topic, which required me to make several choices: first, by not developing any functionality that I don’t currently need; and second, by filtering out API features that I can already express using existing tools.

For example, there’s no repeat feature in Interpol. But we can simply implement it by calling play in a loop, as long as the method returns a promise:

const repeat = async (n: number): Promise<void> => {
  for (let i = 0; i < n; i++) await itp.play();
};

Another example is the missing seek method, which would move the animation to a specific point via a time parameter; for example: “Move directly to 1.4s of the animation”. I mostly use the same type of method with a streamlined percentage parameter, progress, which allows me to move “relatively” across the entire animation rather than depending on a time factor. But if needed, it remains easy to “seek” with progress as follows:

const duration = 1430 // ms

const itp = new Interpol({
  duration,
  //...
})

// We want to move the animation to 1000ms
const targetTime = 1000
const percent = targetTime / duration // 0.69...

// progress takes a value between 0 and 1
itp.progress(percent)
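If this conversion is needed in several places, it can be wrapped in a small helper. The sketch below is only a suggestion: `timeToProgress`, `seek` and the `Progressable` interface are hypothetical names, and the stand-in object exists only so the example runs without the library.

```typescript
// Minimal shape needed by the helper: any object exposing progress() works
interface Progressable {
  progress(value: number): void
}

// Converts a time in ms to a normalized progress, clamped to [0, 1]
function timeToProgress(targetTimeMs: number, durationMs: number): number {
  if (durationMs <= 0) return 1
  return Math.min(Math.max(targetTimeMs / durationMs, 0), 1)
}

function seek(target: Progressable, targetTimeMs: number, durationMs: number): void {
  target.progress(timeToProgress(targetTimeMs, durationMs))
}

// Stand-in object used only to show the call; a real instance would go here
let lastProgress = -1
const fakeInstance: Progressable = {
  progress: (value) => {
    lastProgress = value
  },
}

seek(fakeInstance, 715, 1430) // lastProgress is now 0.5
```

Clamping keeps a time beyond the duration from producing a progress above 1.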

And what about staggers? Again, we can use delay for Interpol, or offsets for Timeline, to cover this need.

for (let i = 0; i < elements.length; i++) {
  const el = elements[i];
  const itp = new Interpol({
    // create the stagger with a relative delay
    delay: i * 20,
    x: [innerWidth / 2, () => random(0, innerWidth)],
    y: [innerHeight / 2, () => random(0, innerHeight)],
    scale: [0, 5],
    // assumed range, so that onUpdate receives an opacity value
    opacity: [0, 1],
    onUpdate: ({ x, y, scale, opacity }) => {
      el.style.transform = `translate3d(${x}px, ${y}px, 0px) scale(${scale})`
      el.style.opacity = `${opacity}`
    },
  });
}

Similarly, we can easily create our own yoyo function and control what happens on each recursive call. The refresh() method is invoked each time we play the Interpol again to recompute all dynamic values.

const yoyo = async () => {
  await itp.play()
  itp.refresh()
  yoyo()
}

yoyo()

And here is an example of what we can achieve by combining these last two techniques.

Digging into performance questions

Batching callbacks

The first major optimization for this kind of library is to batch all callback (onUpdate) executions into a single sequential queue. A naive implementation would trigger a separate requestAnimationFrame (RAF) for each instance. Instead, the most performant approach is to externalize and globalize a single RAF, to which all Interpol instances subscribe.

// Global Ticker instance (which contains the unique RAF)
// Used by all Interpol & Timeline instances
const ticker = new Ticker()

class Interpol {
  // ...
  play() {
    ticker.add(this.update)
  }
  stop() {
    ticker.remove(this.update)
  }
  update = () => {
    // Do everything you want to do on each frame
  }
}

The Ticker instance works like a publish-subscribe pattern, adding and removing callbacks from its own unique RAF. This strategy significantly improves performance, ensuring that all property updates (whether DOM, GL properties, or others) occur exactly on the same frame.
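For reference, a ticker of this kind can be sketched in a few lines. The class below is a simplified, hypothetical version written for this article’s purposes, not the one shipped in the library. It exposes a `tick()` method that is called by hand here, so the example runs anywhere; in a browser, `tick()` would be called from one single requestAnimationFrame loop.

```typescript
type TickCallback = (timeMs: number) => void

class Ticker {
  private callbacks = new Set<TickCallback>()

  add(callback: TickCallback): void {
    this.callbacks.add(callback)
  }

  remove(callback: TickCallback): void {
    this.callbacks.delete(callback)
  }

  // Runs every subscribed callback once; meant to be called from a single loop
  tick(timeMs: number): void {
    // Iterate over a copy so that callbacks can unsubscribe themselves safely
    for (const callback of Array.from(this.callbacks)) callback(timeMs)
  }
}

const sharedTicker = new Ticker()
const log: string[] = []

const first: TickCallback = (t) => { log.push(`first@${t}`) }
const second: TickCallback = (t) => { log.push(`second@${t}`) }

sharedTicker.add(first)
sharedTicker.add(second)
sharedTicker.tick(16) // both callbacks run in the same pass

sharedTicker.remove(first)
sharedTicker.tick(32) // only the remaining subscriber runs
// log is ["first@16", "second@16", "second@32"]
```

Keeping `tick()` public is also what makes an optional RAF possible: the same method can be driven by a custom loop instead of the internal one.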

Batching DOM updates

Another important optimization is to batch all DOM writes within the same frame.

When multiple elements are updated independently (for example, setting style.transform or style.opacity across different animation instances), each modification can trigger separate layout and paint operations. By synchronizing all updates through a shared ticker, you ensure that these style changes occur together, minimizing layout thrashing and reducing reflow costs.

This is actually a good example of what Interpol can’t do.

Interpol instances don’t know what happens inside their own onUpdate, unlike a traditional animation library that directly manages DOM targets and can optimize updates globally. On this kind of optimization, Interpol will never be able to compete. It’s part of the “low-level” philosophy of the library. It’s important to keep this in mind.

Stress test

I won’t dive too deep into benchmarks, because in most real-world cases, the difference is barely noticeable in my personal usage. Still, I built a small sandbox to test how different engines behave when animating hundreds of particles on a shared loop. Both GSAP and Interpol stay at a stable 60 FPS in this example with a grid of 6^4 elements. With 7^4, GSAP starts to win. This is purely a stress test because, in a real-world scenario, you wouldn’t build a particle system with DOM elements anyway 🙂

Conclusion

So the final question is: Why use a tool that doesn’t cover every need? And this is a valid question!

Interpol is my personal research project. I use it on my own projects, even if that’s only 50% of why I continue to maintain it. The other 50% is that the library allows me to ask deep questions about mechanisms and implementation choices, and to understand performance issues. It’s a playground that deserves to be explored beyond its simple usefulness and coolness in the world of open-source JS libraries. I’ll always encourage reinventing the wheel for the purpose of learning and understanding the underlying concepts; this is exactly how I learned to develop.

For sure, I continue to use GSAP and anime.js on many projects, for reasons that should be clear after reading this article. They’re so easy to use, and the work behind them is phenomenal.

About me

Because there are humans behind the code: I’m Willy Brauner, senior front-end developer, from Lyon (France). Previously lead front-end developer at Cher-ami, I have been back to freelancing for almost two years. I mostly work with agencies on creative projects, building front-end architectures, development workflows, and animations. I write about my research on my journal and publish open-source code on GitHub. You can reach out to me on Bluesky, LinkedIn or email.

Thanks for reading along.

Willy