In this tutorial, you’ll learn how we at ARKx crafted the audio-reactive visuals for Coala Music’s website. We’ll walk through the concepts and techniques used to synchronize audio frequencies and tempo, creating a dynamic visualizer with procedural particle animations.

Getting Started

We’ll initialize our Three.js scene only after the user interacts; this way, we can let the audio autoplay and avoid the autoplay-blocking policy of the major browsers.

import * as THREE from 'three'

export default class App {

constructor() {

this.onClickBinder = () => this.init()

document.addEventListener('click', this.onClickBinder)

}

init() {

document.removeEventListener('click', this.onClickBinder)

//BASIC THREEJS SCENE

this.renderer = new THREE.WebGLRenderer()

this.camera = new THREE.PerspectiveCamera(70, window.innerWidth / window.innerHeight, 0.1, 10000)

this.scene = new THREE.Scene()

}

}

Analyzing Audio Data

Next, we initialize our Audio and BPM Managers. They’re responsible for loading the audio, analyzing it, and synchronizing it with the visual elements.

async createManagers() {

App.audioManager = new AudioManager()

await App.audioManager.loadAudioBuffer()

App.bpmManager = new BPMManager()

App.bpmManager.addEventListener('beat', () => {

this.particles.onBPMBeat()

})

await App.bpmManager.detectBPM(App.audioManager.audio.buffer)

}

The AudioManager class then loads the audio from a URL (we’re using a Spotify Preview URL) and analyzes it to break down the audio signals into frequency bins in real time.

const audioLoader = new THREE.AudioLoader();

audioLoader.load(this.song.url, buffer => {

this.audio.setBuffer(buffer);

})

Frequency Data

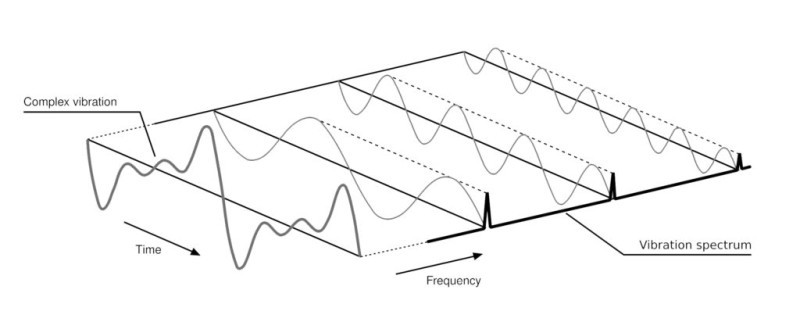

We have to split the frequency spectrum into low, mid, and high bands to calculate the amplitudes.

To segment the bands, we need to define start and end points (e.g., the low band range starts at the lowFrequency value and ends at the midFrequency start value). Each boundary frequency is converted into an index of the frequency array by multiplying it by the buffer length and dividing by the sample rate. To get the average amplitude of a band, we average the values between its start and end indices and normalize the result to a 0-1 scale.
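The calculateAverage and normalizeValue helpers are not shown in this article. As a rough sketch, assuming the frequency array holds byte values from 0 to 255 (which is what THREE.AudioAnalyser's getFrequencyData returns) and that normalizing simply means dividing by 255, they might look something like this. The frequencyToIndex name and the sample numbers are hypothetical and only there for illustration.

```javascript
// Hypothetical helpers: a sketch, not the project's actual implementation.

// Convert a frequency in Hz to an index in the frequency array,
// using the same formula as the article's snippet.
function frequencyToIndex(frequency, bufferLength, sampleRate) {
  return Math.floor((frequency * bufferLength) / sampleRate);
}

// Average the values in [start, end] (inclusive), guarding against empty ranges.
function calculateAverage(array, start, end) {
  const last = Math.min(end, array.length - 1);
  if (last < start) return 0;
  let sum = 0;
  for (let i = start; i <= last; i++) sum += array[i];
  return sum / (last - start + 1);
}

// Assumption: byte data in the 0-255 range is scaled down to 0-1.
function normalizeValue(value) {
  return value / 255;
}

// Example with made-up numbers: 8 bins, the first four at full level.
const frequencyArray = new Uint8Array([255, 255, 255, 255, 0, 0, 0, 0]);
const lowAvg = normalizeValue(calculateAverage(frequencyArray, 0, 3));
console.log(lowAvg); // 1
```

The same three calls would be repeated with the mid and high boundaries to get the other two band averages.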

this.lowFrequency = 10;

this.frequencyArray = this.audioAnalyser.getFrequencyData();

const lowFreqRangeStart = Math.floor((this.lowFrequency * this.bufferLength) / this.audioContext.sampleRate)

const lowFreqRangeEnd = Math.floor((this.midFrequency * this.bufferLength) / this.audioContext.sampleRate)

const lowAvg = this.normalizeValue(this.calculateAverage(this.frequencyArray, lowFreqRangeStart, lowFreqRangeEnd));

//THE SAME FOR MID AND HIGH

Detecting Tempo

The amplitude of the frequencies isn’t enough to align the music beat with the visual elements. Detecting the BPM (Beats Per Minute) is essential to make the elements react in sync with the pulse of the music. In Coala’s project, we feature many songs from their artists’ label, and we don’t know the tempo of each piece of music. Therefore, we’re detecting the BPM asynchronously using the amazing web-audio-beat-detector module, by simply passing the audioBuffer.

const { bpm } = await guess(audioBuffer);

Dispatching the Signals

After detecting the BPM, we can dispatch the event signal using setInterval.
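One thing to keep in mind is that setInterval callbacks are not guaranteed to fire exactly on schedule, so beats may drift slightly over a long track. As a hedged alternative (not what the project does), the beat count could be derived from elapsed time instead. The bpmToInterval and beatsElapsed names below are hypothetical.

```javascript
// Hypothetical helpers for illustration only.

// Milliseconds between beats: 60000 ms per minute divided by beats per minute.
function bpmToInterval(bpm) {
  return 60000 / bpm;
}

// Number of whole beats that have passed after elapsedMs milliseconds.
function beatsElapsed(elapsedMs, bpm) {
  return Math.floor(elapsedMs / bpmToInterval(bpm));
}

console.log(bpmToInterval(120)); // 500
console.log(beatsElapsed(2250, 120)); // 4
```

In a render loop, you could compare beatsElapsed against the last value seen and dispatch the beat event whenever it increases.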

this.interval = 60000 / bpm; // Convert BPM to interval

this.intervalId = setInterval(() => {

this.dispatchEvent({ type: 'beat' })

}, this.interval);

Procedural Reactive Particles (The fun part 😎)

Now, we’re going to create our dynamic particles that will soon respond to audio signals. Let’s start with two new functions that create basic geometries (Box and Cylinder) with random segments and properties; this approach results in a unique structure each time.
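As a rough illustration of the random part, the sketch below only generates the segment counts for a box. The randInt and randomBoxSegments names and the 5 to 20 range are assumptions made for this example, not values taken from the project.

```javascript
// Hypothetical sketch: random segment counts for a box geometry.

// Random integer in [min, max], both ends inclusive.
function randInt(min, max) {
  return min + Math.floor(Math.random() * (max - min + 1));
}

// The 5-20 default range is an arbitrary choice for illustration.
function randomBoxSegments(min = 5, max = 20) {
  return {
    widthSeg: randInt(min, max),
    heightSeg: randInt(min, max),
    depthSeg: randInt(min, max),
  };
}

const { widthSeg, heightSeg, depthSeg } = randomBoxSegments();
console.log(widthSeg, heightSeg, depthSeg);
```

These values can then be passed to the BoxGeometry constructor as its segment arguments, so every call produces a different point density.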

Next, we’ll add this geometry to a THREE.Points object with a simple ShaderMaterial.

const geometry = new THREE.BoxGeometry(1, 1, 1, widthSeg, heightSeg, depthSeg)

const material = new THREE.ShaderMaterial({

side: THREE.DoubleSide,

vertexShader: vertex,

fragmentShader: fragment,

transparent: true,

uniforms: {

size: { value: 2 },

},

})

const pointsMesh = new THREE.Points(geometry, material)

Now, we can begin creating our meshes with random attributes in a specified interval:

Adding noise

We drew inspiration from Akella’s FBO Tutorial and included curl noise in the vertex shader to create organic, natural-looking movements and add fluid, swirling motions to the particles. I won’t delve deeply into the explanation of Curl Noise and FBO Particles, as Akella did an amazing job in his tutorial. You can check it out to learn more about it.

Animating the particles

To summarize, in the vertex shader, we animate the points to achieve dynamic effects that dictate the particles’ behavior and appearance. Starting with newpos, which is the original position of each point, we create a target. This target adds curl noise along the point’s normal vector, varying based on the frequency and amplitude uniforms. The position is then interpolated toward the target by a power of the distance d between them. This process creates a smooth transition, easing out as the point approaches the target.
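To get a feel for how raising d to the fourth power shapes the blend, here is a plain JavaScript sketch of a linear interpolation (what GLSL calls mix) on a single coordinate. The mix function is a hand-written stand-in for the GLSL built-in, and the numbers are made up.

```javascript
// Stand-in for GLSL's mix(a, b, t): linear interpolation between a and b.
function mix(a, b, t) {
  return a * (1 - t) + b * t;
}

// d is the normalized distance to the target.
// Raising it to the 4th power keeps the blend weight small unless d is close to 1.
for (const d of [0.25, 0.5, 1]) {
  const weight = Math.pow(d, 4);
  console.log(d, weight.toFixed(4), mix(0, 10, weight).toFixed(3));
}
// 0.25 0.0039 0.039
// 0.5 0.0625 0.625
// 1 1.0000 10.000
```

Halving the distance cuts the weight by a factor of 16, which is why points close to their target barely move.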

vec3 newpos = position;

vec3 target = position + (normal * .1) + curl(newpos.x * frequency, newpos.y * frequency, newpos.z * frequency) * amplitude;

float d = length(newpos - target) / maxDistance;

newpos = mix(position, target, pow(d, 4.));

We also add a wave motion to newpos.z, adding an extra layer of liveliness to the animation.

newpos.z += sin(time) * (.1 * offsetGain);

Moreover, the size of each point adjusts dynamically based on how close the point is to its target and its depth in the scene, making the animation feel more three-dimensional.

gl_PointSize = size + (pow(d,3.) * offsetSize) * (1./-mvPosition.z);

Here it is:

Adding Colors

In the fragment shader, we’re masking out the point with a circle shape function and interpolating the startColor and endColor uniforms according to the point’s vDistance defined in the vertex shader:

vec3 circ = vec3(circle(uv,1.));

vec3 color = mix(startColor,endColor,vDistance);

gl_FragColor=vec4(color,circ.r * vDistance);

Bringing Audio and Visuals Together

Now, we can use our creativity to assign the audio data and beat to all the properties, both in the vertex and fragment shader uniforms. We can also add some random animations to the scale, position and rotation using GSAP.
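The method that follows relies on THREE.MathUtils.mapLinear and THREE.MathUtils.clamp. For readers unfamiliar with them, here is a standalone sketch of equivalent functions, written by hand so that it runs without Three.js. The timeStep name and the sample inputs are made up for illustration.

```javascript
// Hand-written equivalents of THREE.MathUtils.mapLinear and clamp.

// Linearly remap x from the range [a1, a2] to the range [b1, b2].
function mapLinear(x, a1, a2, b1, b2) {
  return b1 + ((x - a1) * (b2 - b1)) / (a2 - a1);
}

// Restrict value to the range [min, max].
function clamp(value, min, max) {
  return Math.max(min, Math.min(max, value));
}

// Time increment for a given low-band level, mirroring the article's snippet.
function timeStep(low) {
  return clamp(mapLinear(low, 0.6, 1, 0.2, 0.5), 0.2, 0.5);
}

console.log(timeStep(0.3).toFixed(2)); // 0.20 (below 0.6, so clamped to the minimum)
console.log(timeStep(0.8).toFixed(2)); // 0.35
console.log(timeStep(1).toFixed(2)); // 0.50
```

Because the clamp range matches the output range of mapLinear, any low-band value under 0.6 simply advances time at the minimum rate, and only stronger bass speeds the animation up.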

update() {

// Dynamically update amplitude based on the high frequency data from the audio manager

this.material.uniforms.amplitude.value = 0.8 + THREE.MathUtils.mapLinear(App.audioManager.frequencyData.high, 0, 0.6, -0.1, 0.2)

// Update offset gain based on the mid frequency data for subtle effect changes

this.material.uniforms.offsetGain.value = App.audioManager.frequencyData.mid * 0.6

// Map low frequency data to a range and use it to increment the time uniform

const t = THREE.MathUtils.mapLinear(App.audioManager.frequencyData.low, 0.6, 1, 0.2, 0.5)

this.time += THREE.MathUtils.clamp(t, 0.2, 0.5) // Clamp the value to ensure it stays within the desired range

this.material.uniforms.time.value = this.time

}

Conclusion

This tutorial has shown you how to synchronize sound with engaging visual particle effects using Three.js.

Hope you enjoyed it! If you have questions, let me know on Twitter.

Credits