With OpenAI now supporting models up to GPT-4 Turbo, Python developers have an incredible opportunity to explore advanced AI functionalities. This tutorial provides an in-depth look at how to integrate the ChatGPT API into your Python scripts, guiding you through the initial setup stages and leading to effective API usage.

The ChatGPT API refers to the programming interface that allows developers to interact with and utilize GPT models for generating conversational responses. But it’s actually just OpenAI’s universal API that works for all their models.

As GPT-4 Turbo is more advanced and three times cheaper than GPT-4, there’s never been a better time to leverage this powerful API in Python, so let’s get started!

Setting Up Your Environment

To start off, we’ll guide you through setting up your environment to work with the OpenAI API in Python. The initial steps include installing the necessary libraries, setting up API access, and handling API keys and authentication.

Installing necessary Python libraries

Before you begin, make sure you have Python installed on your system. We recommend using a virtual environment to keep everything organized. You can create a virtual environment with the following command:

    python -m venv chatgpt_env

Activate the virtual environment by running the command for your platform. On Windows:

    chatgpt_env\Scripts\activate

On macOS or Linux:

    source chatgpt_env/bin/activate

Next, you’ll need to install the required Python libraries, which include the OpenAI Python client library for interacting with the OpenAI API, and the python-dotenv package for handling configuration. To install both packages, run the following command:

    pip install openai python-dotenv
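If you’re unsure whether the virtual environment is actually active before installing packages, a quick check from Python can help. This is a minimal sketch using only the standard library; the helper name `in_virtualenv` is our own and not part of any package.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base Python install.
    return sys.prefix != sys.base_prefix


print("virtual environment active:", in_virtualenv())
```

If this prints `False` after activation, the shell is probably still using the system-wide interpreter.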

Setting up OpenAI API access

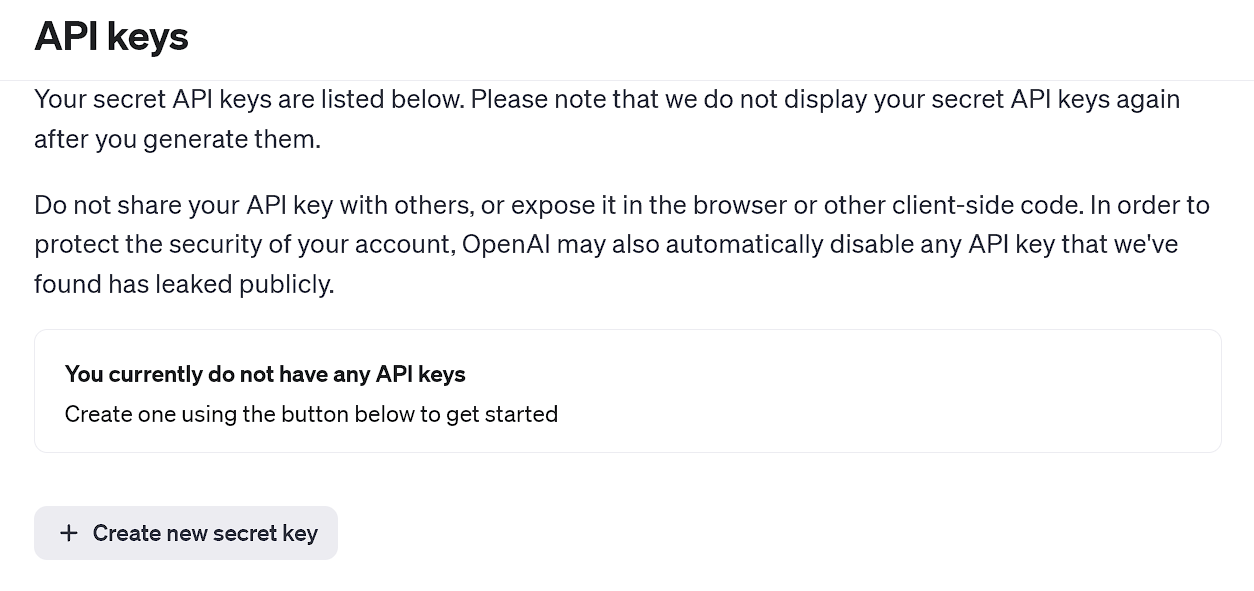

To make an OpenAI API request, you must first sign up on OpenAI’s platform and generate your unique API key. Follow these steps:

- Go to OpenAI’s API Key page and create a new account, or log in if you already have an account.

- Once logged in, navigate to the API keys section and click on Create new secret key.

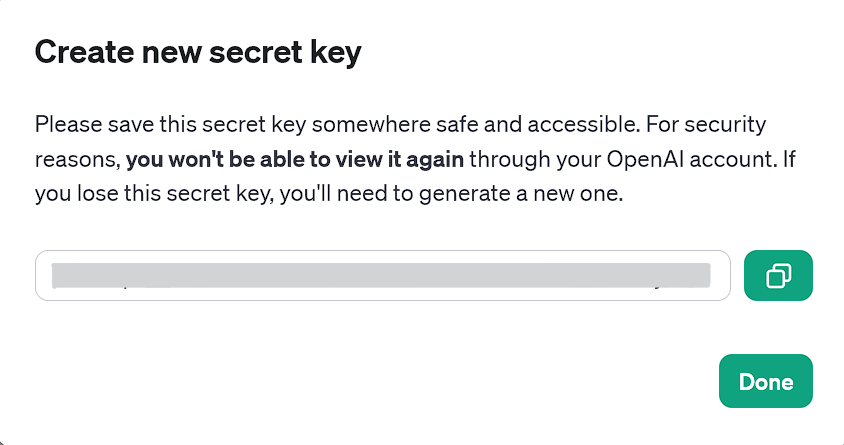

- Copy the generated API key and store it somewhere safe for later use. You won’t be able to view it again on the OpenAI website, so if you lose it you’ll have to generate a new one.

OpenAI’s API keys page

Generated API key that can be used now

API Key and Authentication

After obtaining your API key, we recommend storing it as an environment variable for security purposes. To manage environment variables, use the python-dotenv package. To set up an environment variable containing your API key, follow these steps:

- Create a file named .env in your project directory.

- Add the following line to the .env file, replacing your_api_key with the actual API key you copied earlier: CHAT_GPT_API_KEY=your_api_key.

- In your Python code, load the API key from the .env file using the load_dotenv function from the python-dotenv package:

    import openai
    from openai import OpenAI
    import os
    from dotenv import load_dotenv

    # Read the variables defined in .env into the process environment
    load_dotenv()

    client = OpenAI(api_key=os.environ.get("CHAT_GPT_API_KEY"))

Note: In the latest version of the OpenAI Python library, you need to instantiate an OpenAI client to make API calls, as shown above. This is a change from previous versions, where you’d directly use global methods.

Now you’ve added your API key, and your environment is set up and ready for using the OpenAI API in Python. In the next sections of this article, we’ll explore interacting with the API and building chat apps using this powerful tool.

Remember to add the above code snippet to every code section below before running it.
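Because `os.environ.get` quietly returns `None` when the variable is missing, a typo in the .env file may only surface later as an authentication error. One way to fail early is a small guard like the sketch below. The helper name `require_api_key` and its `env` parameter are our own illustration, not part of the OpenAI or python-dotenv libraries.

```python
import os


def require_api_key(name="CHAT_GPT_API_KEY", env=None):
    """Return the API key stored under `name`, or raise if it is missing."""
    # `env` defaults to the real process environment; passing a dict
    # makes the function easy to try out without touching os.environ.
    env = os.environ if env is None else env
    key = (env.get(name) or "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set; add it to your .env file or shell environment"
        )
    return key


# Example with a stand-in environment (hypothetical key value):
print(require_api_key(env={"CHAT_GPT_API_KEY": "sk-example"}))
```

In the setup snippet, you could then call `require_api_key()` after `load_dotenv()` and pass the result as `api_key` when creating the client.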

Using the OpenAI API in Python

After loading the API key from the .env file, we can actually start using it within Python. To use the OpenAI API in Python, we make API calls using the client object. We pass a series of messages as input to the API and receive a model-generated message as output.

Creating a simple ChatGPT request

- Make sure you have done the previous steps: creating a virtual environment, installing the necessary libraries, and generating your OpenAI secret key and .env file in the project directory.

- Use the following code snippet to set up a simple ChatGPT request:

    chat_completion = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": "query"}]
    )

    # The generated text is in the first choice's message
    print(chat_completion.choices[0].message.content)

Here, client.chat.completions.create is a method call on the client object. The chat attribute accesses the chat-specific functionalities of the API, and completions.create is a method that requests the AI model to generate a response or completion based on the input provided.

Replace query with the prompt you wish to run, and feel free to use any supported GPT model instead of the GPT-4 selected above.
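The `messages` argument is a plain list of dictionaries, each with a `role` and a `content` key. Besides `user`, the API also accepts a `system` role for setting the overall behavior. The sketch below shows one way to assemble that list; the helper name `build_messages` is our own.

```python
def build_messages(user_prompt, system_prompt=None):
    """Build the `messages` list passed to client.chat.completions.create."""
    messages = []
    if system_prompt:
        # A "system" message sets the overall behavior for the conversation.
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages(
    "Explain what an API key is in one sentence.",
    system_prompt="You are a concise technical assistant.",
)
print(messages)
```

The resulting list can be passed as the `messages` argument in the request shown earlier.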

Handling errors

While making requests, various issues might occur, including network connectivity problems, exceeded rate limits, or other non-standard response status codes. Therefore, it’s essential to handle these cases properly. We can use Python’s try and except blocks to maintain program flow and improve error handling:

    try:
        chat_completion = client.chat.completions.create(
            model="gpt-4",
            messages=[{"role": "user", "content": "query"}],
            temperature=1,
            max_tokens=150
        )
        print(chat_completion.choices[0].message.content)
    except openai.APIConnectionError as e:
        # Network problem: the request never reached the API
        print("The server could not be reached")
        print(e.__cause__)
    except openai.RateLimitError as e:
        print("A 429 status code was received; we should back off a bit.")
    except openai.APIStatusError as e:
        # Any other error response from the API
        print("Another non-200-range status code was received")
        print(e.status_code)
        print(e.response)
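The rate-limit branch above only prints a message. If you’d rather retry automatically, exponential backoff is a common approach: wait a little longer after each failed attempt. The following is a minimal, library-agnostic sketch; the function name `call_with_backoff` and its parameters are our own, and the delays are illustrative rather than recommended values.

```python
import random
import time


def call_with_backoff(func, retryable=(Exception,), max_attempts=4,
                      base_delay=1.0, sleep=time.sleep):
    """Call func(), retrying on `retryable` errors with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retryable:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the error
            # Wait 1x, 2x, 4x ... the base delay, plus a little jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
```

With the client from this tutorial, you might pass `retryable=(openai.RateLimitError, openai.APIConnectionError)` and wrap the `client.chat.completions.create(...)` call in a lambda as `func`.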

Note: you need to have available credit grants to be able to use any model of the OpenAI API. If more than three months have passed since your account creation, your free credit grants have likely expired, and you’ll have to buy more credits (a minimum of $5).

Now here are some ways you can further configure your API requests:

- Max Tokens. Limit the maximum possible output length according to your needs by setting the max_tokens parameter. This can be a cost-saving measure, but do note that it simply cuts off the generated text at the limit; it doesn’t make the model write a shorter answer.

- Temperature. Adjust the temperature parameter to control the randomness. (Higher values make responses more diverse, while lower values produce more consistent answers.)

If a parameter isn’t set manually, the API falls back to its default value. For temperature, OpenAI’s API reference lists a default of 1.
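To keep these settings in one place, you can build the request arguments with a small helper and only include the parameters you actually set, so the API’s defaults apply to the rest. This is a sketch under our own naming (`build_request_kwargs`); the 0 to 2 range for temperature follows OpenAI’s API reference.

```python
def build_request_kwargs(prompt, model="gpt-4", temperature=None, max_tokens=None):
    """Assemble keyword arguments for client.chat.completions.create."""
    kwargs = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    if temperature is not None:
        # The API reference documents temperature as a value between 0 and 2.
        if not 0 <= temperature <= 2:
            raise ValueError("temperature must be between 0 and 2")
        kwargs["temperature"] = temperature
    if max_tokens is not None:
        if max_tokens < 1:
            raise ValueError("max_tokens must be a positive integer")
        kwargs["max_tokens"] = max_tokens
    return kwargs


print(build_request_kwargs("Summarize this tutorial.", temperature=0.2, max_tokens=100))
```

The result can be unpacked into the call, as in `client.chat.completions.create(**kwargs)`.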

Aside from the above parameters, there are numerous other parameters and configurations you can use to take advantage of GPT’s capabilities exactly the way you want to. Studying OpenAI’s API documentation is recommended for reference.

Nevertheless, effective and contextual prompts are still necessary, no matter how many parameters you configure.

Advanced Techniques in API Integration

In this section, we’ll explore advanced techniques to integrate the OpenAI API into your Python projects, focusing on automating tasks, using Python requests for data retrieval, and managing large-scale API requests.

Automating tasks with the OpenAI API

To make your Python project more efficient, you can automate various tasks using the OpenAI API. For instance, you might want to automate the generation of email responses, customer support answers, or content creation.

Here’s an example of how to automate a task using the OpenAI API:

    def automated_task(prompt):
        try:
            chat_completion = client.chat.completions.create(
                model="gpt-4",
                messages=[{"role": "user", "content": prompt}],
                max_tokens=250
            )
            return chat_completion.choices[0].message.content
        except Exception as e:
            # On failure, the error message is returned instead of generated text
            return str(e)

    generated_text = automated_task("Write a short note that is less than 50 words to the development team asking for an update on the current status of the software update")
    print(generated_text)

This function takes in a prompt and returns the generated text as output.
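Automation usually means running the same kind of prompt over many inputs. The sketch below fills a prompt template for each item and records failures separately from generated text, so an error message is never mistaken for a real answer. The names `run_batch` and `stand_in_generate` are our own, and the generator here is a stand-in so the example runs without an API key. In practice, you would pass a function that calls the API and raises on failure.

```python
def run_batch(generate, template, items):
    """Fill `template` with each item and collect the generated text."""
    results = {}
    for item in items:
        prompt = template.format(item=item)
        try:
            results[item] = {"ok": True, "text": generate(prompt)}
        except Exception as exc:  # keep going if one item fails
            results[item] = {"ok": False, "error": str(exc)}
    return results


def stand_in_generate(prompt):
    """Placeholder for a real API call; it just echoes the prompt."""
    return f"[draft for: {prompt}]"


replies = run_batch(
    stand_in_generate,
    "Write a short reply to a customer asking about {item}.",
    ["delivery times", "refunds"],
)
for topic, result in replies.items():
    print(topic, "->", result)
```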

Using Python requests for data retrieval

You can use the popular requests library to interact with the OpenAI API directly, without relying on the OpenAI library. This method gives you more control over the HTTP request and more flexibility over your API calls.

The following example requires the requests library (if you don’t have it, run pip install requests first):

    import os
    import requests
    from dotenv import load_dotenv

    # Load the key directly, since this example doesn't use the OpenAI client
    load_dotenv()
    api_key = os.environ.get("CHAT_GPT_API_KEY")

    headers = {
        'Content-Type': 'application/json',
        'Authorization': f'Bearer {api_key}',
    }
    data = {
        'model': 'gpt-4',
        'messages': [{'role': 'user', 'content': 'Write an interesting fact about Christmas.'}]
    }
    response = requests.post('https://api.openai.com/v1/chat/completions', headers=headers, json=data)
    print(response.json())

This code snippet demonstrates making a POST request to the OpenAI API, with headers and data as arguments. The JSON response can be parsed and used in your Python project.
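Parsing the JSON response mostly means reaching into the `choices` list, or reading the `error` object when the request failed. The sketch below works on a plain dictionary such as the one returned by `response.json()`. The helper name `extract_reply` is our own, and the sample payload is hand-written to mimic the response shape rather than real API output.

```python
def extract_reply(payload):
    """Return the assistant text from a chat completions JSON payload."""
    if "error" in payload:
        # Failed requests carry an "error" object instead of "choices".
        raise RuntimeError(payload["error"].get("message", "unknown API error"))
    return payload["choices"][0]["message"]["content"]


# Hand-written sample payload that mimics the response shape.
sample = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "Hello!"},
            "finish_reason": "stop",
        }
    ]
}
print(extract_reply(sample))
```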

Managing large-scale API requests

When working with large-scale projects, it’s important to manage API requests efficiently. This can be achieved by incorporating techniques like batching, throttling, and caching.

- Batching. Request several responses to the same prompt in a single API call, using the n parameter in the OpenAI library: n = number_of_responses_needed.

- Throttling. Implement a system to limit the rate at which API calls are made, avoiding excessive usage or overloading the API.

- Caching. Store the results of completed API requests to avoid redundant calls for similar prompts or requests.

To effectively manage API requests, keep track of your usage and adjust your config settings accordingly. Consider using the time library to add delays or timeouts between requests if necessary.
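Throttling and caching can be combined in a thin wrapper around whatever function makes the API call. The sketch below is one possible shape, not a production implementation: the class name `ThrottledCache` is our own, the cache only matches identical prompts (not merely similar ones), and the one-second interval is an arbitrary example value.

```python
import time


class ThrottledCache:
    """Wrap a text-generating callable with a cache and a minimum call interval."""

    def __init__(self, generate, min_interval=1.0,
                 clock=time.monotonic, sleep=time.sleep):
        self._generate = generate
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._cache = {}
        self._last_call = None

    def __call__(self, prompt):
        if prompt in self._cache:
            return self._cache[prompt]  # cache hit: no API call, no delay
        if self._last_call is not None:
            wait = self._min_interval - (self._clock() - self._last_call)
            if wait > 0:
                self._sleep(wait)  # throttle: space out real calls
        self._last_call = self._clock()
        result = self._generate(prompt)
        self._cache[prompt] = result
        return result
```

You could wrap a function such as `automated_task` from earlier, as in `ask = ThrottledCache(automated_task, min_interval=1.0)`, and then call `ask(prompt)` wherever you previously called the function directly.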

Applying these advanced techniques in your Python projects will help you get the most out of the OpenAI API while ensuring efficient and scalable API integration.

Practical Applications: OpenAI API in Real-world Projects

Incorporating the OpenAI API into your real-world projects can provide numerous benefits. In this section, we’ll discuss two specific applications: integrating ChatGPT in web development and building chatbots with ChatGPT and Python.

Integrating ChatGPT in web development

The OpenAI API can be used to create interactive, dynamic content tailored to user queries or needs. For instance, you could use ChatGPT to generate personalized product descriptions, create engaging blog posts, or answer common questions about your services. With the power of the OpenAI API and a little Python code, the possibilities are endless.

Consider this simple example of using an API call from a Python backend:

    def generate_content(prompt):
        try:
            response = client.chat.completions.create(
                model="gpt-4",
                messages=[{"role": "user", "content": prompt}]
            )
            return response.choices[0].message.content
        except Exception as e:
            return str(e)

    description = generate_content("Write a short description of a hiking backpack")

You can then write code to integrate description with your HTML and JavaScript to display the generated content on your website.
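As a rough illustration of that last step, the sketch below turns a generated description into an HTML fragment. Since model output is free-form text, it’s escaped before being embedded in markup. The function name `render_description`, the CSS class, and the sample strings are all our own placeholders.

```python
import html


def render_description(title, description):
    """Return an HTML fragment with the generated text safely escaped."""
    # Model output is untrusted text, so escape it before embedding in markup.
    return (
        '<section class="product">'
        f"<h2>{html.escape(title)}</h2>"
        f"<p>{html.escape(description)}</p>"
        "</section>"
    )


print(render_description("Trail Pack 40", "Light & tough <40L> backpack"))
```

A web framework’s template engine would normally handle this escaping for you; the point here is only that generated text should be treated like any other user-supplied input.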

Building chatbots with ChatGPT and Python

Chatbots powered by artificial intelligence are beginning to play an important role in enhancing the user experience. By combining ChatGPT’s natural language processing abilities with Python, you can build chatbots that understand context and respond intelligently to user inputs.

Consider this example for processing user input and obtaining a response:

    def get_chatbot_response(prompt):
        try:
            response = client.chat.completions.create(
                model="gpt-4",
                messages=[{"role": "user", "content": prompt}]
            )
            return response.choices[0].message.content
        except Exception as e:
            return str(e)

    user_input = input("Enter your prompt: ")
    response = get_chatbot_response(user_input)
    print(response)

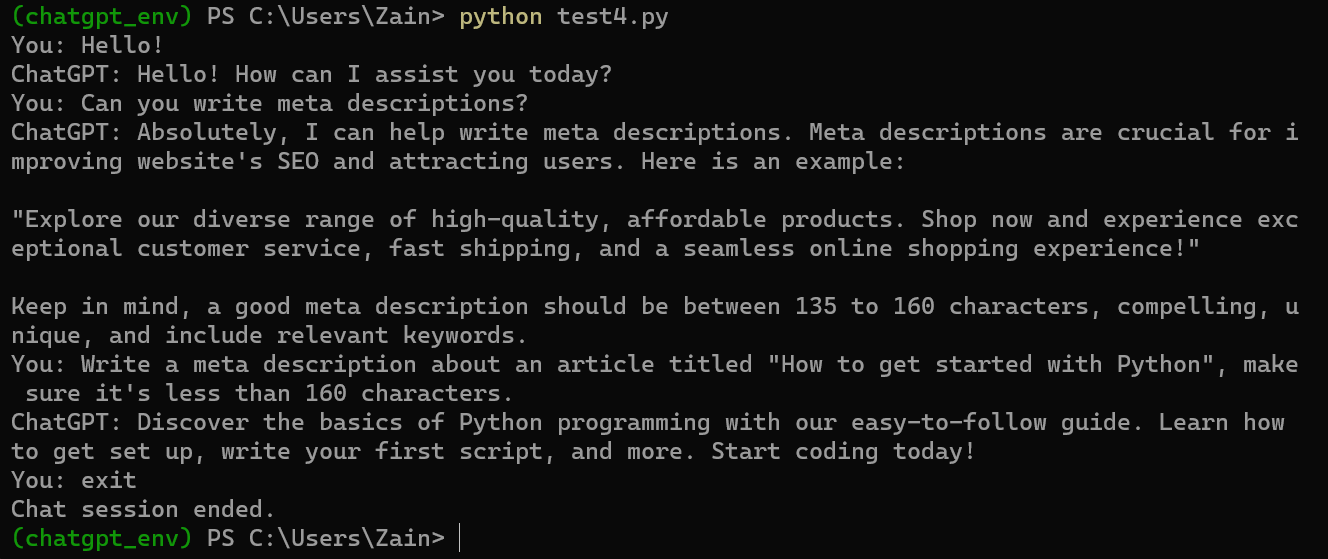

However, since there’s no loop, the script will end after running once, so consider adding conditional logic. For example, we added basic conditional logic so that the script keeps asking for user prompts until the user enters the stop phrase “exit” or “quit”.

With that logic in place, our complete final code for running a chatbot on the OpenAI API endpoint could look like this:

    from openai import OpenAI
    import os
    from dotenv import load_dotenv

    load_dotenv()

    client = OpenAI(api_key=os.environ.get("CHAT_GPT_API_KEY"))

    def get_chatbot_response(prompt):
        try:
            response = client.chat.completions.create(
                model="gpt-4",
                messages=[{"role": "user", "content": prompt}]
            )
            return response.choices[0].message.content
        except Exception as e:
            return str(e)

    # Keep chatting until the user types a stop phrase
    while True:
        user_input = input("You: ")
        if user_input.lower() in ["exit", "quit"]:
            print("Chat session ended.")
            break
        response = get_chatbot_response(user_input)
        print("ChatGPT:", response)

Here’s how it looks when run in the Windows Command Prompt.
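One thing to note about the loop above is that each request contains only the latest prompt, so the model has no memory of earlier turns. To let the chatbot follow the conversation, the full message history has to be sent each time. The sketch below shows one way to track it. The class name `Conversation` is our own, and `send` is any function that takes a list of messages and returns the reply text (here a stand-in, so the example runs without an API key).

```python
class Conversation:
    """Keep the running message history so each request includes prior turns."""

    def __init__(self, send, system_prompt=None):
        self._send = send  # callable: list of messages -> reply text
        self.messages = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, user_text):
        self.messages.append({"role": "user", "content": user_text})
        try:
            reply = self._send(list(self.messages))
        except Exception:
            self.messages.pop()  # drop the unanswered turn
            raise
        # Store the reply so the next request carries the full exchange.
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def stand_in_send(messages):
    """Placeholder for a real API call; reports how many messages it saw."""
    return f"(reply after seeing {len(messages)} messages)"


chat = Conversation(stand_in_send, system_prompt="You are a helpful assistant.")
print(chat.ask("Hello!"))
print(chat.ask("What did I just say?"))
```

With the real client, `send` could be a small function that calls `client.chat.completions.create` with the given messages and returns `choices[0].message.content`.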

Hopefully, these examples will help you get started on experimenting with the ChatGPT AI. Overall, OpenAI has opened massive opportunities for developers to create new, exciting products using their API, and the possibilities are endless.

OpenAI API limitations and pricing

While the OpenAI API is powerful, there are a few limitations:

- Data Storage. OpenAI retains your API data for 30 days, and using the API implies data storage consent. Be mindful of the data you send.

- Model Capacity. Chat models have a maximum token limit. (For example, GPT-3 supports 4096 tokens.) If an API request exceeds this limit, you’ll have to truncate or omit text.

- Pricing. The OpenAI API is not available for free and follows its own pricing scheme, separate from the model subscription fees. For more pricing information, refer to OpenAI’s pricing details. (Again, GPT-4 Turbo is three times cheaper than GPT-4!)
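For the model capacity limit, one simple strategy is to drop the oldest messages until the conversation fits a token budget. The sketch below illustrates the idea with a deliberately crude estimate of about four characters per token, which is only a rule of thumb for English text and an assumption on our part. For accurate counts you would use a real tokenizer, such as OpenAI’s tiktoken package. The function names are our own.

```python
def estimate_tokens(text):
    """Very rough token estimate: about four characters per token."""
    return max(1, len(text) // 4)


def trim_history(messages, max_tokens):
    """Drop the oldest non-system messages until the estimate fits the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    def total(msgs):
        return sum(estimate_tokens(m["content"]) for m in msgs)

    # Always keep the most recent message, even if it alone exceeds the budget.
    while len(rest) > 1 and total(system + rest) > max_tokens:
        rest.pop(0)
    return system + rest


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "First question " * 20},
    {"role": "assistant", "content": "First answer " * 20},
    {"role": "user", "content": "Latest question?"},
]
print(len(trim_history(history, max_tokens=80)))
```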

Conclusion

Exploring the potential of the ChatGPT model API in Python can bring significant advancements in various applications such as customer support, virtual assistants, and content generation. By integrating this powerful API into your projects, you can leverage the capabilities of GPT models seamlessly in your Python applications.
