Introduction

The rise of Giant Language Fashions (LLMs) has marked a major development within the period of Synthetic Intelligence (AI). Throughout this era, Cloud Graphic Processing Models (GPUs) supplied by Paperspace + DigitalOcean have emerged as pioneers in offering high-quality NVIDIA GPUs, pushing the boundaries of computational expertise.

NVIDIA, was based in 1993 by three visionary American pc scientists – Jen-Hsun (“Jensen”) Huang, former director at LSI Logic and microprocessor designer at AMD; Chris Malachowsky, an engineer at Solar Microsystems; and Curtis Priem, senior employees engineer and graphic chip designer at IBM and Solar Microsystems – launched into its journey with a deep concentrate on creating cutting-edge graphics {hardware} for the gaming trade. This dynamic trio’s experience and keenness set the stage for NVIDIA’s exceptional progress and innovation.

As tech evolution occurred, NVIDIA acknowledged the potential of GPUs past gaming and explored the potential for parallel processing. This led to the event of CUDA (initially Compute Unified Gadget Structure) in 2006, serving to builders across the globe to make use of GPUs for a wide range of heavy computational duties. This led to the stepping stone for a deep studying revolution, positioning NVIDIA because the chief within the subject of AI analysis and improvement.

NVIDIA’s GPUs have change into integral to AI, powering advanced neural networks and enabling breakthroughs in pure language processing, picture recognition, and autonomous techniques.

Introduction to the H100: The most recent development in NVIDIA’s lineup

The corporate’s dedication to innovation continues with the discharge of the H100 GPU, a powerhouse that represents the height of recent computing. With its cutting-edge Hopper structure, the H100 is about to revolutionize deep studying, providing unmatched efficiency and effectivity.

The NVIDIA H100 Tensor Core GPU, geared up with the NVIDIA NVLink™ Swap System, permits for connecting as much as 256 H100 GPUs to speed up processing workloads. This GPU additionally contains a devoted Transformer Engine designed to deal with trillion-parameter language fashions effectively. Thanks to those technological developments, the H100 can improve the efficiency of enormous language fashions (LLMs) by as much as 30 occasions in comparison with the earlier era, delivering cutting-edge capabilities in conversational AI.

💡

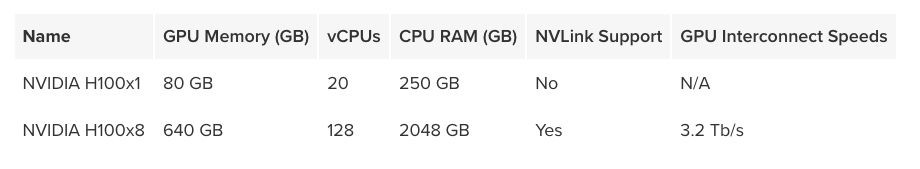

Paperspace now helps the NVIDIA H100 each with a single chip (NVIDIA H100x1) and with eight chips (NVIDIA H100x8), presently within the NYC2 datacenter.

The Structure of the H100

The NVIDIA Hopper GPU structure delivers high-performance computing with low latency and is designed to function at information heart scale. Powered by the NVIDIA Hopper structure, the NVIDIA H100 Tensor Core GPU marks a major leap in computing efficiency for NVIDIA’s information heart platforms. Constructed utilizing 80 billion transistors, the H100 is probably the most superior chip ever created by NVIDIA, that includes quite a few architectural enhancements.

As NVIDIA’s Ninth-generation information heart GPU, the H100 is designed to ship a considerable efficiency improve for AI and HPC workloads in comparison with the earlier A100 mannequin. With InfiniBand interconnect, it gives as much as 30 occasions the efficiency of the A100 for mainstream AI and HPC fashions. The brand new NVLink Swap System allows mannequin parallelism throughout a number of GPUs, concentrating on among the most difficult computing duties.

- Constructed on Hopper structure H100 GPUs is designed for high-performance computing and AI workloads.

- H100 gives fourth-generation tensor cores which permits quicker communications between chips when in comparison with A100.

- Combines software program and {hardware} optimizations to speed up Transformer mannequin coaching and inference, attaining as much as 9x quicker coaching and 30x quicker inference.

- Fourth-Era NVLink is liable for the rise in bandwidth for multi-GPU operations, offering 900 GB/sec whole bandwidth.

These architectural developments make the H100 GPU a major step ahead in efficiency and effectivity for AI and HPC functions.

Key Options and Improvements

Fourth-Era Tensor Cores:

- The fourth-gen tensor cores gives as much as 6x quicker than the A100 for chip-to-chip communication.

- Supplies 2x the Matrix Multiply-Accumulate (MMA) computational charges per Streaming Multiprocessor or SM for equal information varieties.

- Presents 4x the MMA fee utilizing the brand new FP8 information sort in comparison with earlier 16-bit floating-point choices.

- Features a Sparsity function that doubles the efficiency of normal Tensor Core operations by exploiting structured sparsity in deep studying networks.

New DPX Directions:

- Accelerates dynamic programming algorithms by as much as 7x over the A100 GPU.

- Helpful for algorithms like Smith-Waterman for genomics and Floyd-Warshall for optimizing routes in dynamic environments.

Improved Processing Charges:

- Achieves 3x quicker IEEE FP64 and FP32 processing charges in comparison with the A100, due to larger clocks and extra SM counts.

Thread Block Cluster Characteristic:

- Extends the CUDA programming mannequin to incorporate Threads, Thread Blocks, Thread Block Clusters, and Grids.

- Permits a number of Thread Blocks to synchronize and share information throughout completely different SMs.

Asynchronous Execution Enhancements:

- Encompasses a new Tensor Reminiscence Accelerator (TMA) unit for environment friendly information transfers between world and shared reminiscence.

- Helps asynchronous information copies between Thread Blocks in a Cluster.

- Introduces an Asynchronous Transaction Barrier for atomic information motion and synchronization.

New Transformer Engine:

- Combines software program with customized Hopper Tensor Core expertise to speed up Transformer mannequin coaching and inference.

- Robotically manages calculations utilizing FP8 and 16-bit precision, delivering as much as 9x quicker AI coaching and 30x quicker AI inference for big language fashions in comparison with the A100.

HBM3 Reminiscence Subsystem:

- Presents almost double the bandwidth of the earlier era.

- H100 SXM5 GPU is the primary to function HBM3 reminiscence, offering 3 TB/sec of reminiscence bandwidth.

Enhanced Cache and Multi-Occasion GPU Know-how:

- 50 MB L2 cache reduces reminiscence journeys by caching giant mannequin and dataset parts.

- Second-generation Multi-Occasion GPU (MIG) expertise gives about 3x extra compute capability and almost 2x extra reminiscence bandwidth per GPU occasion than the A100.

- MIG helps as much as seven GPU situations, every with devoted items for video decoding and JPEG processing.

Confidential Computing and Safety:

- New assist for Confidential Computing to guard person information and defend towards assaults.

- First native Confidential Computing GPU, providing higher isolation and safety for digital machines (VMs).

Fourth-Era NVIDIA NVLink®:

- Will increase bandwidth by 3x for all-reduce operations and by 50% for normal operations in comparison with the earlier NVLink model.

- Supplies 900 GB/sec whole bandwidth for multi-GPU IO, outperforming PCIe Gen 5 by 7x.

Third-Era NVSwitch Know-how:

- Connects a number of GPUs in servers and information facilities with improved change throughput.

- Enhances collective operations with multicast and in-network reductions.

NVLink Swap System:

- Allows as much as 256 GPUs to attach over NVLink, delivering 57.6 TB/sec of bandwidth.

- Helps an exaFLOP of FP8 sparse AI compute.

PCIe Gen 5:

- Presents 128 GB/sec whole bandwidth, doubling the bandwidth of Gen 4 PCIe.

- Interfaces with high-performing CPUs and SmartNICs/DPUs.

Further Enhancements:

- Enhancements to enhance sturdy scaling, cut back latency and overheads, and simplify GPU programming.

Information Middle Improvements:

- New H100-based DGX, HGX, Converged Accelerators, and AI supercomputing techniques are mentioned within the NVIDIA-Accelerated Information Facilities part.

- Particulars on H100 GPU structure and efficiency enhancements are lined in-depth in a devoted part.

Implications for the Way forward for AI

GPUs have change into essential on this ever evolving subject of AI and deep studying will proceed to develop. The parallel processing and accelerated computing are the important thing benefits of H100. The tensor cores and structure of H100 considerably will increase the efficiency of AI fashions significantly LLMs. The development is specifically through the coaching time and inferencing. This permits the builders and researchers to successfully work with advanced fashions.

The H100’s devoted Transformer Engine optimizes the coaching and inference of Transformer fashions, that are basic to many trendy AI functions, together with pure language processing and pc imaginative and prescient. This functionality helps speed up analysis and deployment of AI options throughout varied fields.

Being mentioned that blackwell is the successor to NVIDIA H100 and H200 GPUS the long run GPUs usually tend to concentrate on additional enhancing effectivity and lowering energy consumption. Therefore transferring a step in direction of sustainable environments. Additional future GPUs could supply even better flexibility in balancing precision and efficiency.

The NVIDIA H100 GPU has been thought-about to be a cutting-edge GPU in AI and computing, shaping the way forward for expertise and its functions throughout industries.

The function of the H100 in advancing AI capabilities

- Autonomous Automobiles and Robotics: The improved processing energy and effectivity may also help algorithms like YOLO to develop autonomous techniques, making self-driving vehicles and robots extra dependable and able to working in advanced environments.

- Monetary Companies: AI-driven monetary fashions and algorithms will profit from the H100’s efficiency enhancements, enabling quicker and extra correct threat assessments, fraud detection, and market predictions.

- Leisure and Media: The H100’s developments in AI can improve content material creation, digital actuality, and real-time rendering, resulting in extra immersive and interactive experiences in gaming and leisure.

- Analysis and Academia: The flexibility to deal with large-scale AI fashions and datasets will empower researchers to sort out advanced scientific challenges, driving innovation and discovery throughout varied disciplines.

- Integration of AI with Different Applied sciences: The H100’s improvements could pave the trail for edge computing, IoT, and 5G, enabling smarter, extra related gadgets and techniques.

Conclusion

NVIDIA H100 represents a large leap in AI and high-performance computing. The hopper structure and the transformer engine, has sucessfully arrange a brand new bar of effectivity and energy. As we glance to the long run, the H100’s influence on deep studying and AI will proceed to drive innovation, extra breakthroughs in fields comparable to healthcare, autonomous techniques, and scientific analysis, finally shaping the following period of technological progress.