Introduction

Apache Airflow is a robust platform that revolutionizes the administration and execution of Extracting, Reworking, and Loading (ETL) knowledge processes. It provides a scalable and extensible resolution for automating complicated workflows, automating repetitive duties, and monitoring knowledge pipelines. This text explores the intricacies of automating ETL pipelines utilizing Apache Airflow on AWS EC2. It demonstrates how Airflow can be utilized to design, deploy, and handle end-to-end knowledge pipelines effectively. The article makes use of a sensible instance of integrating a Climate API into an ETL pipeline, showcasing how Airflow orchestrates the retrieval, transformation, and loading of knowledge from various sources.

Studying Outcomes

- Understanding the pivotal function of environment friendly ETL processes in trendy knowledge infrastructure.

- Exploring the capabilities of Apache Airflow in automating complicated workflow administration.

- Harnessing the pliability and scalability of AWS EC2 for streamlined pipeline deployment.

- Demonstrating the applying of Airflow in automating knowledge extraction, transformation, and loading.

- Actual-world integration of a Climate API, showcasing Airflow’s function in driving data-driven selections.

What’s Apache Airflow?

Apache Airflow is an open-source platform that manages and displays Directed Acyclic Graphs (DAGs) workflows. It consists of a Scheduler, Executor, Metadata Database, and Internet Interface. The Scheduler manages duties, the Executor executes them on staff, and the Metadata Database shops metadata. The Internet Interface supplies a user-friendly dashboard for monitoring pipeline standing and managing workflows. Apache Airflow’s modular structure permits knowledge engineers to construct, automate, and scale knowledge pipelines with flexibility and management.

What are DAGs?

Directed Acyclic Graphs or DAGs outline the sequence of duties and their dependencies. They characterize the logical circulation of knowledge via the pipeline. Every node within the DAG represents a activity, whereas the perimeters denote the dependencies between duties. DAGs are acyclic, which implies they don’t have any cycles or loops, making certain a transparent and deterministic execution path. Airflow’s DAGs allow knowledge engineers to mannequin complicated workflows with ease, orchestrating the execution of duties in parallel or sequentially based mostly on their dependencies and schedule. By leveraging DAGs, customers can design strong and scalable knowledge pipelines that automate the extraction, transformation, and loading of knowledge with precision and effectivity.

What are Operators ?

Operators are basic constructing blocks inside Apache Airflow that outline the person models of labor to be executed inside a DAG. Every operator represents a single activity within the workflow and encapsulates the logic required to carry out that activity. Airflow supplies a variety of built-in operators, every tailor-made to particular use circumstances similar to transferring knowledge between methods, executing SQL queries, working Python scripts, sending emails, and extra. Moreover, Airflow permits customers to create customized operators to accommodate distinctive necessities not coated by the built-in choices. Operators play an important function in defining the performance and conduct of duties inside a DAG, enabling customers to assemble complicated workflows by orchestrating a sequence of operations seamlessly.

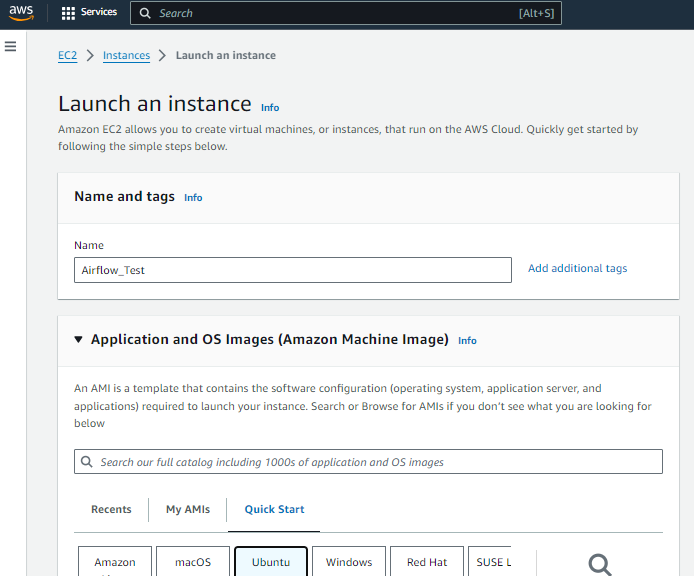

Launching EC2

Launching an EC2 occasion on AWS is a straightforward course of. It’s usually finished via the AWS Administration Console or command-line interfaces. To make sure Apache Airflow’s easy operation, configure inbound guidelines to permit visitors on port 8080, the default port utilized by Airflow’s net server. This enables safe entry to the Airflow net interface for monitoring and managing workflows. This streamlined setup balances useful resource allocation and performance, laying the groundwork for environment friendly workflow orchestration with Apache Airflow on AWS EC2.

Putting in Apache Airflow on EC2

To put in Apache Airflow on a working EC2 occasion, observe these steps:

Step1: Replace Package deal Lists

Replace package deal lists to make sure you have the most recent info on accessible packages:

sudo apt replaceStep2: Set up Python 3 pip Package deal Supervisor

Set up Python 3 pip package deal supervisor to facilitate the set up of Python packages:

sudo apt set up python3-pipStep3: Set up Python 3 Digital Surroundings Package deal

Set up Python 3 digital surroundings package deal to isolate the Airflow surroundings from the system Python set up:

sudo apt set up python3-venvStep4. Create Digital Surroundings for Airflow

python3 -m venv airflow_venvStep5. Activate the Digital Surroundings

supply airflow_venv/bin/activateStep6. Set up Required Python Packages for Airflow

pip set up pandas s3fs apache-airflowStep7. Begin Airflow Internet Server in Standalone Mode

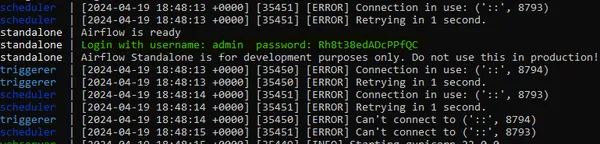

airflow webserver --port 8080With these instructions, you’ll have Apache Airflow put in and working in your EC2 occasion. You possibly can then entry the Airflow net interface by navigating to the occasion’s public IP tackle adopted by port 8080 in an online browser.

After navigating to port 8080 in your EC2 occasion’s public IP tackle, you’ll be directed to the Apache Airflow net interface. Upon your first go to, you’ll be prompted to enter the admin password. This password is generated and displayed in your terminal after working the `airflow standalone` command. Merely copy the password out of your terminal and paste it into the password area on the Airflow net interface to authenticate because the admin person. As soon as logged in, you’ll have entry to the total performance of Apache Airflow, the place you’ll be able to handle and monitor your workflows with ease.

Linking EC2 THrough SSH Extension

Let’s discover a brand new strategy by linking our EC2 occasion with VS Code via the SSH extension.

Step1. Set up Visible Studio Code on Your Native Machine

When you haven’t already, obtain and set up Visible Studio Code in your native machine from the official web site: [Visual Studio Code](https://code.visualstudio.com/).

Step2. Set up Distant – SSH Extension in VSCode

Open Visible Studio Code and set up the Distant – SSH extension. This extension permits you to connect with distant machines over SSH straight from inside VSCode.

Step3. Configure SSH on EC2 Occasion

Be sure that SSH is enabled in your EC2 occasion. You are able to do this through the occasion creation course of or by modifying the safety group settings within the AWS Administration Console. Ensure you have the important thing pair (.pem file) that corresponds to your EC2 occasion.

Step4. Retrieve EC2 Occasion’s Public IP Handle

Log in to your AWS Administration Console and navigate to the EC2 dashboard. Discover your occasion and word down its public IP tackle. You’ll want this to ascertain the SSH connection.

Step5. Hook up with EC2 Occasion from VSCode

In VSCode, press `Ctrl+Shift+P` (Home windows/Linux) or `Cmd+Shift+P` (Mac) to open the command palette. Sort “Distant-SSH: Hook up with Host” and choose it. Then, select “Add New SSH Host” and enter the next info:

- Hostname: Your EC2 occasion’s public IP tackle

- Consumer: The username used to SSH into your EC2 occasion (usually “ubuntu” for Amazon Linux or “ec2-user” for Amazon Linux 2)

- IdentityFile: The trail to the .pem file comparable to your EC2 occasion’s key pair

Step6. Join and Authenticate

After coming into the required info, VSCode will try to connect with your EC2 occasion over SSH. If prompted, select “Proceed” to belief the host. As soon as linked, VSCode will open a brand new window with entry to your EC2 occasion’s file system.

Step7. Confirm Connection

You possibly can confirm that you simply’re linked to your EC2 occasion by checking the bottom-left nook of the VSCode window. It ought to show the title of your EC2 occasion, indicating a profitable connection.

Writing DAG File

Now that you simply’ve linked your EC2 occasion to VSCode, you’re prepared to start out writing the code on your ETL pipeline utilizing Apache Airflow. You possibly can edit recordsdata straight in your EC2 occasion utilizing VSCode’s acquainted interface, making improvement and debugging a breeze.

Now we’ll enroll on the Climate API web site for API https://openweathermap.org/api and use it to get the climate knowledge.As soon as we entry our EC2 occasion in VS Code, we’ll discover the Airflow folder the place we beforehand put in the software program. Inside this listing, we’ll create a brand new folder named “DAG” to prepare our Directed Acyclic Graph (DAG) recordsdata. Right here, we’ll start writing our Python script for the DAG, laying the inspiration for our workflow orchestration.

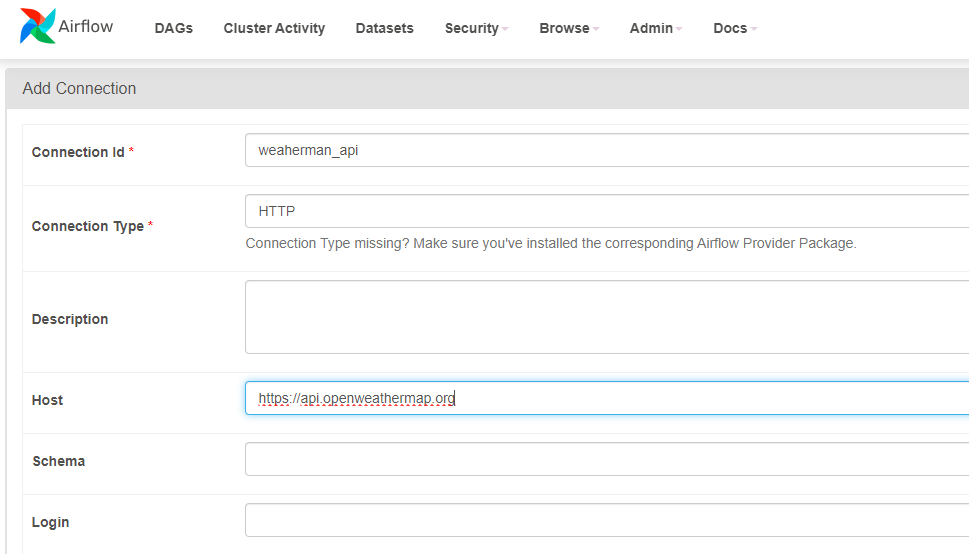

Making a Connection

To confirm the provision of the API, we’ll first navigate to the Airflow UI and entry the admin part. From there, we’ll proceed so as to add a brand new connection by clicking on the “Connections” tab. Right here, we’ll specify the connection ID as “weathermap_api” and set the sort to “HTTP”. Within the “Host” area, we’ll enter “https://api.openweathermap.org”. With these settings configured, we’ll set up the connection, making certain that our API is prepared to be used in our ETL pipeline.

So now we’re finished with step one of checking if API is obtainable we’ll extract climate knowledge from the API and retailer it in a S3 Bucket. So for Storing the information in S3 bucket we’ll want some permissions so as to add with ec2-instance for that we are going to go to our occasion click on on Actions after which choose “Safety” from the dropdown menu. Right here, you’ll discover the choice to switch the safety settings of your occasion. Click on on “Modify IAM Function” to connect an IAM function.

Attaching S3 Bucket to EC2

Within the IAM function administration web page, choose “Create new IAM function” should you haven’t already created a task for EC2 cases. Select the “EC2” service as the kind of trusted entity, then click on “Subsequent: Permissions”.

Within the permissions step, choose “Connect insurance policies straight”. Seek for and choose the insurance policies “AmazonS3FullAccess” to grant full entry to S3, and “AmazonEC2FullAccess” to offer full entry to EC2 sources.

You need to use this JSON additionally so as to add permission:

{

"Model": "2012-10-17",

"Assertion": [

{

"Effect": "Allow",

"Action": "s3:*",

"Resource": "*"

},

{

"Effect": "Allow",

"Action": "ec2:*",

"Resource": "*"

}

]

}Proceed to the following steps to evaluate and title your IAM function. As soon as created, return to the EC2 occasion’s safety settings. Within the IAM function dropdown, choose the function you simply created and click on “Save”.

With the IAM function connected, your EC2 occasion now has the mandatory permissions to work together with S3 buckets and different AWS sources, enabling seamless knowledge storage and retrieval as a part of your ETL pipeline.

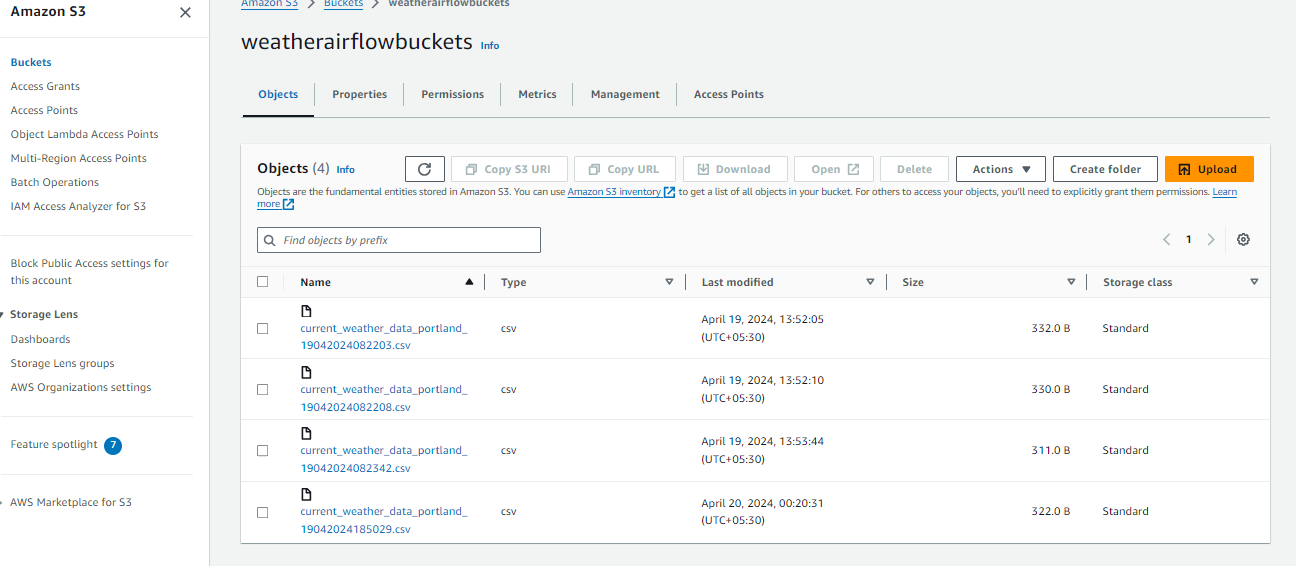

After granting the mandatory permissions, you’ll be able to proceed to create an S3 bucket to retailer the extracted climate knowledge from the API.

As soon as the bucket is created, you should use it in your DAG to retailer the extracted climate knowledge from the API. In your DAG script, you’ll have to specify the S3 bucket title in addition to the vacation spot path the place the climate knowledge will probably be saved. With the bucket configured, your DAG can seamlessly work together with the S3 bucket to retailer and retrieve knowledge as wanted.

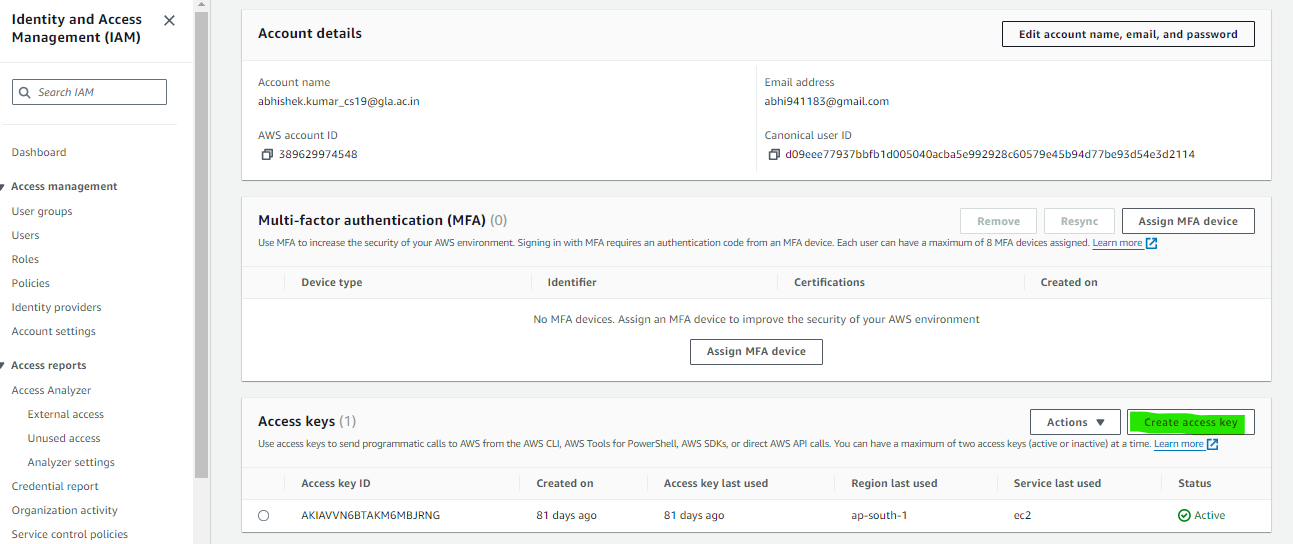

Entry Token Era

To acquire the mandatory entry token from AWS, navigate to the AWS Administration Console and click on in your account title or profile icon within the top-right nook. From the dropdown menu, choose “My Safety Credentials”. Within the “Entry keys” part, both generate a brand new entry key or retrieve an current one. Copy each the Entry Key ID and Secret Entry Key offered. These credentials will authenticate your requests to AWS providers. Guarantee to securely retailer the Secret Entry Key because it is not going to be displayed once more. With these credentials, you’ll be able to seamlessly combine AWS providers, similar to S3, into your Apache Airflow DAGs in your EC2 occasion.

And now lastly that is the DAG file with all 3 steps mixed.

from airflow import DAG

from datetime import timedelta, datetime

from airflow.suppliers.http.sensors.http import HttpSensor

import json

from airflow.suppliers.http.operators.http import SimpleHttpOperator

from airflow.operators.python import PythonOperator

import pandas as pd

# Operate to transform temperature from Kelvin to Fahrenheit

def kelvin_to_fahrenheit(temp_in_kelvin):

temp_in_fahrenheit = (temp_in_kelvin - 273.15) * (9/5) + 32

return temp_in_fahrenheit

# Operate to remodel and cargo climate knowledge to S3 bucket

def transform_load_data(task_instance):

# Extract climate knowledge from XCom

knowledge = task_instance.xcom_pull(task_ids="extract_weather_data")

# Extract related climate parameters

metropolis = knowledge["name"]

weather_description = knowledge["weather"][0]['description']

temp_farenheit = kelvin_to_fahrenheit(knowledge["main"]["temp"])

feels_like_farenheit = kelvin_to_fahrenheit(knowledge["main"]["feels_like"])

min_temp_farenheit = kelvin_to_fahrenheit(knowledge["main"]["temp_min"])

max_temp_farenheit = kelvin_to_fahrenheit(knowledge["main"]["temp_max"])

strain = knowledge["main"]["pressure"]

humidity = knowledge["main"]["humidity"]

wind_speed = knowledge["wind"]["speed"]

time_of_record = datetime.utcfromtimestamp(knowledge['dt'] + knowledge['timezone'])

sunrise_time = datetime.utcfromtimestamp(knowledge['sys']['sunrise'] + knowledge['timezone'])

sunset_time = datetime.utcfromtimestamp(knowledge['sys']['sunset'] + knowledge['timezone'])

# Remodel knowledge into DataFrame

transformed_data = {"Metropolis": metropolis,

"Description": weather_description,

"Temperature (F)": temp_farenheit,

"Feels Like (F)": feels_like_farenheit,

"Minimal Temp (F)": min_temp_farenheit,

"Most Temp (F)": max_temp_farenheit,

"Strain": strain,

"Humidity": humidity,

"Wind Pace": wind_speed,

"Time of Document": time_of_record,

"Dawn (Native Time)": sunrise_time,

"Sundown (Native Time)": sunset_time

}

transformed_data_list = [transformed_data]

df_data = pd.DataFrame(transformed_data_list)

# Retailer knowledge in S3 bucket

aws_credentials = {"key": "xxxxxxxxx", "secret": "xxxxxxxxxx"}

now = datetime.now()

dt_string = now.strftime("%dpercentmpercentYpercentHpercentMpercentS")

dt_string = 'current_weather_data_portland_' + dt_string

df_data.to_csv(f"s3://YOUR_S3_NAME/{dt_string}.csv", index=False,

storage_options=aws_credentials)

# Outline default arguments for the DAG

default_args = {

'proprietor': 'airflow',

'depends_on_past': False,

'start_date': datetime(2023, 1, 8),

'e mail': ['[email protected]'],

'email_on_failure': False,

'email_on_retry': False,

'retries': 2,

'retry_delay': timedelta(minutes=2)

}

# Outline the DAG

with DAG('weather_dag',

default_args=default_args,

schedule_interval="@day by day",

catchup=False) as dag:

# Examine if climate API is prepared

is_weather_api_ready = HttpSensor(

task_id='is_weather_api_ready',

http_conn_id='weathermap_api',

endpoint="/knowledge/2.5/climate?q=Portland&APPID=**********************"

)

# Extract climate knowledge from API

extract_weather_data = SimpleHttpOperator(

task_id='extract_weather_data',

http_conn_id='weathermap_api',

endpoint="/knowledge/2.5/climate?q=Portland&APPID=**********************",

methodology='GET',

response_filter=lambda r: json.hundreds(r.textual content),

log_response=True

)

# Remodel and cargo climate knowledge to S3 bucket

transform_load_weather_data = PythonOperator(

task_id='transform_load_weather_data',

python_callable=transform_load_data

)

# Set activity dependencies

is_weather_api_ready >> extract_weather_data >> transform_load_weather_data

Clarification

- kelvin_to_fahrenheit: This perform converts temperature from Kelvin to Fahrenheit.

- transform_load_data: This perform extracts climate knowledge from the API response, transforms it, and hundreds it into an S3 bucket.

- default_args: These are the default arguments for the DAG, together with proprietor, begin date, and e mail settings.

- weather_dag: That is the DAG definition with the title “weather_dag” and the desired schedule interval of day by day execution.

- is_weather_api_ready: This activity checks if the climate API is prepared by making an HTTP request to the API endpoint.

- extract_weather_data: This activity extracts climate knowledge from the API response utilizing an HTTP GET request.

- transform_load_weather_data: This activity transforms the extracted knowledge and hundreds it into an S3 bucket.

- Process Dependencies: The >> operator defines the duty dependencies, making certain that duties execute within the specified order.

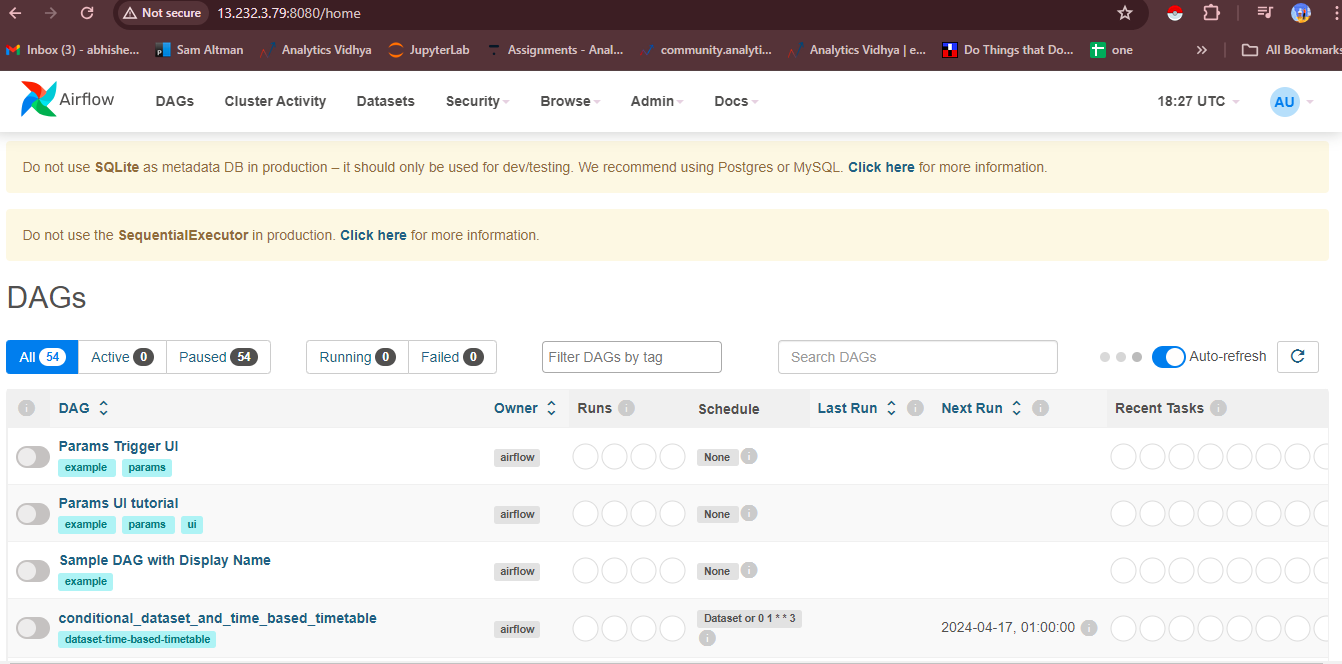

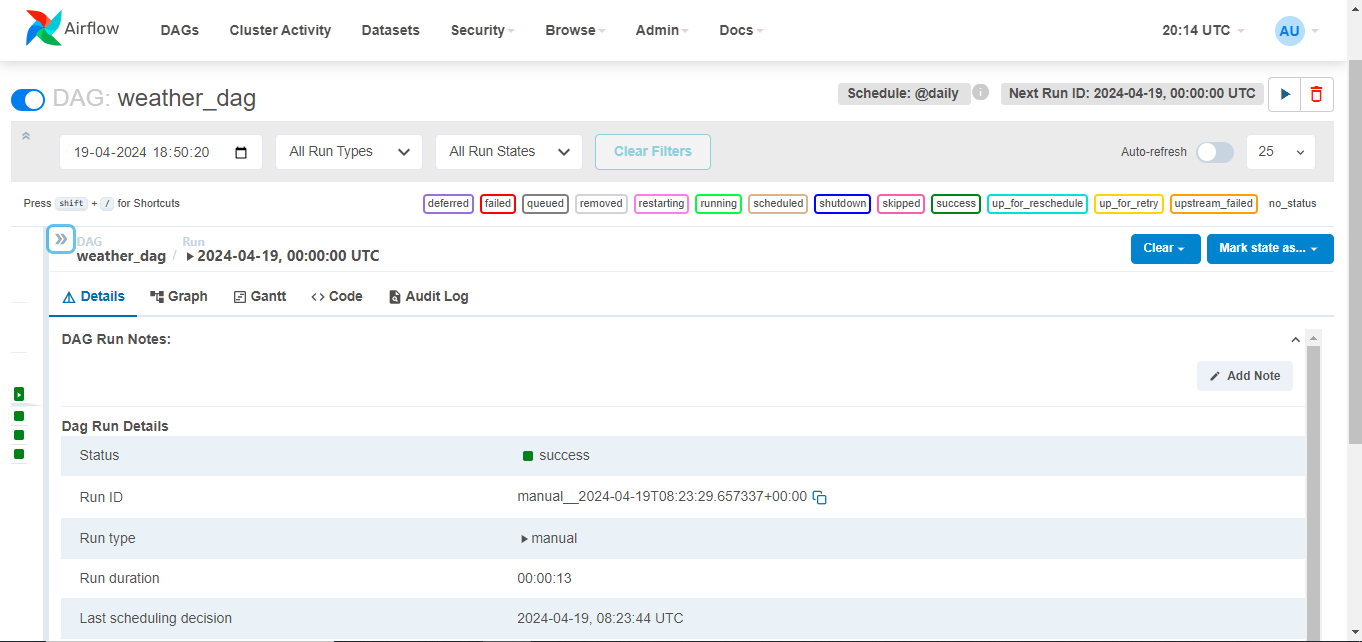

The DAG file is a device that automates the extraction, transformation, and loading of climate knowledge from the API into an S3 bucket utilizing Apache Airflow. It’s displayed within the Airflow UI, permitting customers to observe its standing, set off guide runs, and examine activity logs. To check the DAG, customers can set off a guide run, increase its particulars, and click on the “Set off DAG” button. Process logs will be considered to trace particular person duties and diagnose points. The Airflow UI simplifies the workflow orchestration course of.

After finishing the automated ETL pipeline, it’s essential to confirm the saved knowledge within the S3 bucket. Navigate to the AWS Administration Console and find the bucket the place the climate knowledge was configured. Confirm the information’s appropriate storage by exploring its contents, which ought to comprise recordsdata organized based on the desired vacation spot path. This confirms the automated ETL pipeline’s performance and safe storage within the designated S3 bucket, making certain the reliability and effectiveness of the automated knowledge processing workflow.

Conclusion

The mixing of Apache Airflow with AWS EC2 presents a strong resolution for automating ETL pipelines, facilitating environment friendly knowledge processing and evaluation. By our exploration of automating ETL processes with Airflow and leveraging AWS sources, we’ve highlighted the transformative potential of those applied sciences in driving data-driven decision-making. By orchestrating complicated workflows and seamlessly integrating with exterior providers just like the Climate API, Airflow empowers organizations to streamline knowledge administration and extract beneficial insights with ease.

Key Takeaways

- Effectivity in ETL processes is essential for organizations to derive actionable insights from their knowledge.

- Apache Airflow supplies a robust platform for automating ETL pipelines, providing flexibility and scalability.

- Leveraging AWS EC2 enhances the capabilities of Airflow, enabling seamless deployment and administration of knowledge workflows.

- Integration of exterior providers, such because the Climate API, demonstrates the flexibility of Airflow in orchestrating various knowledge sources.

- Automated ETL pipelines allow organizations to drive data-driven decision-making, fostering innovation and aggressive benefit in at the moment’s data-driven world.