Introduction

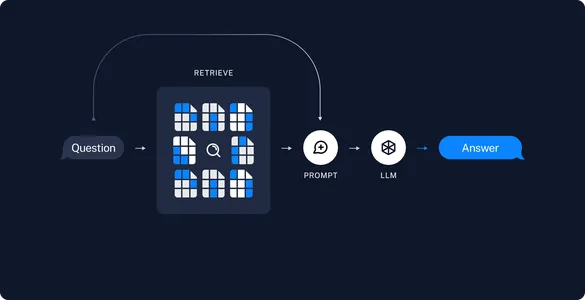

With the arrival of RAG (Retrieval Augmented Generation) and Large Language Models (LLMs), knowledge-intensive tasks like Document Question Answering have become much more efficient and robust, without the immediate need to fine-tune a costly LLM to solve downstream tasks. In this article, we'll dive into the world of RAG-powered document QnA using Google's Gemini AI and Langchain. There has also been a lot of discussion around preserving conversational memory while leveraging LLMs for QnA. With that in mind, we will also learn how to create a custom semantic cache and integrate it with our RAG pipeline to support a conversational interface where the user can ask follow-up questions and keep the chat going. With that, let's dig in!

Learning Objectives

- Read and store PDF documents in a vector store using Gemini Embeddings.

- Create a custom PDF reader that inserts metadata information based on our choice and use case.

- Generate responses to user queries with the help of the Gemini Pro model.

- Implement semantic caching to store LLM responses to queries and reuse them as responses to similar queries.

This article was published as a part of the Data Science Blogathon.

Conversing with Documents

Creating a Document Question Answering application is far easier now than it was a year ago. The OpenAI API has been the core choice for most RAG applications since its launch, but for small applications with little or no funding, OpenAI becomes an expensive choice. This is where Google's Gemini API catches attention. The free version of the API supports up to 60 queries per minute (QPM), which might seem low but is enough for non-customer-facing applications or for hobbyists who don't want to spend money on their projects.

Additionally, in this article we will implement semantic caching, which is helpful for applications that use the paid version of the OpenAI API. Now, before we jump into the hands-on part, let's understand what semantic caching is.

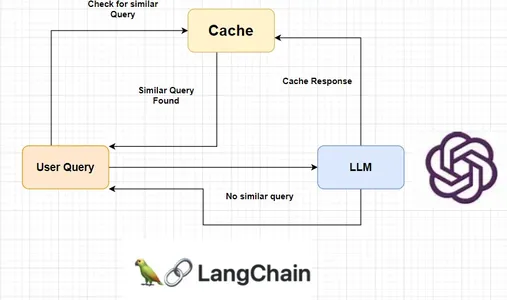

What is Semantic Caching?

Semantic caching is a form of caching that can be used to cache LLM responses. It stores the LLM response for a query and returns the same response when the same query, or a similar one, is asked again. This helps reduce unnecessary LLM calls and consequently reduces API costs.
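The idea can be shown with a small, self-contained sketch. It is not the cache built later in this article: to keep it runnable without any model, the "embedding" here is a toy bag-of-words vector (an assumption standing in for a real embedding model), and `SemanticCache`, `embed`, and `cosine` are hypothetical names. A cached response is returned when the cosine similarity between the new query and a stored query reaches the threshold.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Stores (query embedding, response) pairs; answers close-enough queries."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))

    def get(self, query: str) -> Optional[str]:
        q = embed(query)
        best_score, best_response = 0.0, None
        # Linear scan over cached queries; a vector store replaces this with an index
        for vec, response in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        # Only a sufficiently similar cached query counts as a hit
        return best_response if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.8)
cache.put("What were the Q2 highlights?", "New MacBook Pro and Mac mini models")
print(cache.get("What were the highlights for Q2?"))     # rephrased: cache hit
print(cache.get("How many employees does Apple have?"))  # unrelated: None
```

The rephrased question shares five of its six tokens with the cached one (similarity of about 0.91), so it is served from the cache without an LLM call, while the unrelated question falls through.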

Langchain provides a range of LLM cache tools like Redis Semantic Cache, GPTCache, AstraDB, etc. However, they are not always accurate at recognizing genuinely similar queries: queries that differ by just a number or a word can still receive high similarity scores. We will address this by creating our own semantic cache system based on the same concept.
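To make this failure mode concrete, here is a toy illustration. It again uses a bag-of-words vector as an assumed stand-in for a real embedding model (dense models behave differently in detail, and the article's claim concerns those models). Two questions that differ only in the quarter share 6 of their 7 tokens, so their cosine similarity is 6/7, about 0.857. With a threshold such as 0.8, a cache would wrongly return the Q2 answer for a Q3 question.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector: token -> count (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


cached = "What was the revenue in Q2 2023?"
incoming = "What was the revenue in Q3 2023?"  # a genuinely different question
score = cosine(embed(cached), embed(incoming))
print(f"similarity = {score:.3f}")  # 6 of 7 tokens shared -> 0.857
```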

Hands-On Document QnA with Langchain + Gemini Pro with Semantic Caching

The first step in building this RAG system is building the Ingestion pipeline. The Ingestion pipeline lets the user upload the document(s) for question answering. We initialize a vector store and then store the document contents in it: the text chunks, their embeddings, and the document metadata.

For the embeddings, we will use the embeddings model provided by Gemini. We will also create our own PDF reader to add extra information like page numbers, file names, etc., to the metadata. We will use the Deeplake vector store both for storing the documents for RAG and, later in the QA chain, for semantic caching.

Step-by-Step Guide to Document QnA with Langchain + Gemini Pro

Let's begin creating a document QnA system using Google Gemini Pro and Langchain with this detailed 4-step guide.

Step 1: Creating Gemini API Key

The first step is creating an API key for Gemini AI. You can skip this step if you already have a key. Otherwise, follow the steps below to create a new API key:

- Go to https://aistudio.google.com/app

- Click on Get API key -> Create API key.

- Click on `Create API key in new project` or `Search Google Cloud Projects` and select a project. Then wait for the key to be generated.

- Copy the generated API key.

Now put this key in the config.py file as the GOOGLE_API_KEY variable, and we are good to go. Create a virtual environment using the tool of your choice and use the provided requirements.txt file to install the required packages. Please avoid changing the pinned package versions, as that might break your final application.
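The code in this article reads four settings from config.py: GOOGLE_API_KEY, PDF_CHARSPLITTER_CHUNKSIZE, PDF_CHARSPLITTER_CHUNK_OVERLAP, and CACHE_THRESHOLD. The file itself isn't shown, so here is a minimal sketch of what it might contain. Reading the key from an environment variable instead of pasting it into the file is an assumption (it keeps the key out of version control). The chunk values are the 1000 and 200 suggested in Step 2. The CACHE_THRESHOLD value is a hypothetical placeholder that you should tune on your own queries.

```python
# config.py (a sketch; the variable names match those used by the code in this article)
import os

# Assumption: the key was exported in the shell, e.g. `export GOOGLE_API_KEY=...`
GOOGLE_API_KEY = os.environ.get("GOOGLE_API_KEY", "")

# Character-based chunking parameters used by PDFReader
PDF_CHARSPLITTER_CHUNKSIZE = 1000
PDF_CHARSPLITTER_CHUNK_OVERLAP = 200

# Minimum similarity score for a cached response to be reused (hypothetical value)
CACHE_THRESHOLD = 0.9
```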

Here are the contents of the requirements.txt file:

```text
deeplake==3.8.19
langchain==0.1.3
langchain-community==0.0.20
langchain-core==0.1.23
langchain-google-genai==0.0.11
langsmith==0.0.87
lxml==4.9.4
nltk==3.8.1
numpy==1.26.2
openai==0.28.0
pydantic==2.5.3
pydantic_core==2.14.6
PyMuPDF==1.23.21
pypdf==3.17.4
pypdfium2==4.25.0
scipy==1.12.0
sentence-transformers==2.3.1
tiktoken==0.5.2
transformers==4.36.2
```

Run the following command to install all the required packages:

```bash
# For Linux and macOS systems:
pip install -r requirements.txt

# For Windows systems:
python -m pip install -r requirements.txt
```

Below is the entire codebase for the Ingestion pipeline:

```python
# src/ingestion.py
import config as cfg
from langchain.vectorstores.deeplake import DeepLake
from src.pdf_reader import PDFReader
from langchain_google_genai import (
    GoogleGenerativeAIEmbeddings,
)


class Ingestion:
    """Ingestion class for ingesting documents into the vector store."""

    def __init__(self):
        # Placeholders (not used further in this article)
        self.text_vectorstore = None
        self.image_vectorstore = None
        self.text_retriever = None

        # Gemini embeddings model used to embed the document chunks
        self.embeddings = GoogleGenerativeAIEmbeddings(
            model="models/embedding-001",
            google_api_key=cfg.GOOGLE_API_KEY,
        )

    def ingest_documents(
        self,
        file: str,
    ):
        # Initialize the PDFReader and load the PDF as chunks
        loader = PDFReader()
        chunks = loader.load_pdf(file_path=file)

        # Initialize the vector store (overwrite=True replaces any previous contents)
        vstore = DeepLake(
            dataset_path="database/text_vectorstore",
            embedding=self.embeddings,
            overwrite=True,
            num_workers=4,
            verbose=False,
        )

        # Embed and ingest the chunks
        _ = vstore.add_documents(chunks)
```

Let's break down the code quickly. We have an Ingestion class that can be initialized. In its constructor, we initialize the Gemini Embeddings using the model name and the API key, which we import from the config file. Note that we can use any embeddings model here and are not limited to Gemini Embeddings, as long as the QAChain uses the same model to embed queries.

We then create an ingest_documents method that reads the document and dumps its content into the vector store. We do that by initializing our custom PDF reader, which loads the document and splits it into chunks. This is handled internally by our PDF reader, which is discussed in the following section.

Now that we have document chunks, we can ingest them into the vector database. We initialize the Deeplake vector store using the dataset path and the embeddings we created in the constructor. We set the overwrite parameter to True so that the previous contents of the vector store are overwritten, which means each call to ingest_documents replaces any previously ingested document. We then add the extracted chunks to the vector store.

Step 2: Reading, Loading, and Processing the PDF

The PDF reader that we initialized earlier is a custom PDF reader that we will create for our use case. We will use Langchain's PyPDFLoader to load the PDF document and CharacterTextSplitter to split it into smaller chunks. Before splitting, we attach our preferred metadata, like page number and filename, to each page. Below is the entire codebase for the PDF Reader tool:

```python
# src/pdf_reader.py
import os
import config as cfg
from langchain.document_loaders.pdf import PyPDFLoader
from langchain.text_splitter import (
    CharacterTextSplitter,
)
from langchain.schema import Document


class PDFReader:
    """Custom PDF loader that embeds metadata with the PDF chunks."""

    def __init__(self) -> None:
        self.file_name = ""
        self.total_pages = 0

    def load_pdf(self, file_path):
        # Get the filename from the file path
        self.file_name = os.path.basename(file_path)

        # Initialize Langchain's PyPDFLoader to load the PDF pages
        loader = PyPDFLoader(file_path)

        # Initialize the text splitter (split on single newlines)
        text_splitter = CharacterTextSplitter(
            separator="\n",
            chunk_size=cfg.PDF_CHARSPLITTER_CHUNKSIZE,
            chunk_overlap=cfg.PDF_CHARSPLITTER_CHUNK_OVERLAP,
        )

        # Load the pages from the document
        pages = loader.load()
        self.total_pages = len(pages)
        chunks = []

        # Loop through the pages
        for idx, page in enumerate(pages):
            # Append each page as a Document object with our own metadata
            chunks.append(
                Document(
                    page_content=page.page_content,
                    metadata={
                        "file_name": self.file_name,
                        "page_no": str(idx + 1),
                        "total_pages": str(self.total_pages),
                    },
                )
            )

        # Split the page documents into smaller chunks; each chunk keeps its page's metadata
        final_chunks = text_splitter.split_documents(chunks)
        return final_chunks
```

Let's break down the code quickly. The class constructor has two instance attributes, file_name and total_pages, which are initialized as an empty string and 0 respectively. The class has a main method called load_pdf, which loads the document contents and splits them into smaller chunks. We first initialize Langchain's PyPDFLoader using the file path. Then we initialize the CharacterTextSplitter object, which is used later to split the chunks. The splitter takes in 3 arguments: separator, chunk size, and chunk overlap.

The default separator is '\n\n' (paragraph breaks). Text extracted from PDFs often has few blank lines, so splitting on them can produce pieces too large for the chunk overlap to be applied meaningfully; we therefore set it to '\n' (a single newline). We can choose any chunk size and chunk overlap (measured in characters); for this example, we set them to 1000 and 200 respectively. Then we load the document using the loader instance and get the total number of pages.
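To see what chunk_size and chunk_overlap do, here is a simplified, pure-Python sketch of greedy merging with overlap. It is modeled loosely on the way LangChain's CharacterTextSplitter merges separator-split pieces, but it is an illustration, not LangChain's code (for instance, it does not strip whitespace or warn about oversized pieces), and `split_with_overlap` is a hypothetical name. Lengths are counted in characters.

```python
from typing import List


def split_with_overlap(text: str, separator: str = "\n", chunk_size: int = 1000,
                       chunk_overlap: int = 200) -> List[str]:
    """Greedily pack separator-split pieces into chunks of at most chunk_size
    characters, carrying trailing pieces of up to chunk_overlap characters over."""
    pieces = [p for p in text.split(separator) if p]
    sep_len = len(separator)
    chunks: List[str] = []
    current: List[str] = []
    total = 0  # always equals len(separator.join(current))
    for piece in pieces:
        extra = len(piece) + (sep_len if current else 0)
        if current and total + extra > chunk_size:
            chunks.append(separator.join(current))
            # Drop pieces from the front until the remainder fits the overlap budget
            # and leaves room for the incoming piece
            while current and (
                total > chunk_overlap
                or total + len(piece) + sep_len > chunk_size
            ):
                total -= len(current[0]) + (sep_len if len(current) > 1 else 0)
                current.pop(0)
        total += len(piece) + (sep_len if current else 0)
        current.append(piece)
    if current:
        chunks.append(separator.join(current))
    return chunks


lines = "\n".join(["aaaa", "bbbb", "cccc", "dddd"])
print(split_with_overlap(lines, chunk_size=9, chunk_overlap=4))
# ['aaaa\nbbbb', 'bbbb\ncccc', 'cccc\ndddd']
```

With a 9-character limit and a 4-character overlap budget, each chunk holds two lines, and consecutive chunks share one line. That shared text is what preserves context across chunk boundaries.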

We then iterate over the loaded pages and create a new list of Document objects from each page's page_content and our metadata. At this stage, we have one chunk per page with the additional metadata. Finally, we split these chunks with the text splitter (each resulting chunk inherits its page's metadata) and return them.
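The metadata step can be illustrated without LangChain. In this sketch, `Doc` is a hypothetical stand-in for LangChain's Document, and `split_docs` uses naive fixed-size slicing in place of CharacterTextSplitter. The point is only that every chunk carries its own copy of its page's file_name, page_no, and total_pages, which lets an answer be traced back to a page.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Doc:
    """Hypothetical stand-in for LangChain's Document: text plus a metadata dict."""
    page_content: str
    metadata: Dict[str, str] = field(default_factory=dict)


def pages_to_docs(pages: List[str], file_name: str) -> List[Doc]:
    """Wrap each page's text in a Doc with 1-based page numbers, as PDFReader does."""
    total = len(pages)
    return [
        Doc(
            page_content=text,
            metadata={
                "file_name": file_name,
                "page_no": str(idx + 1),
                "total_pages": str(total),
            },
        )
        for idx, text in enumerate(pages)
    ]


def split_docs(docs: List[Doc], size: int) -> List[Doc]:
    """Naive fixed-size split; every piece gets a copy of its page's metadata."""
    out = []
    for d in docs:
        for start in range(0, len(d.page_content), size):
            out.append(Doc(d.page_content[start:start + size], dict(d.metadata)))
    return out


for chunk in split_docs(pages_to_docs(["abcdef", "gh"], "report.pdf"), size=4):
    print(chunk.page_content, chunk.metadata["page_no"])
# abcd 1
# ef 1
# gh 2
```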

Step 3: Building the Semantic Cache

Next, let's build our semantic cache service for storing LLM responses and reusing them as responses to similar queries. Below is the code for the CustomGPTCache tool:

```python
# src/cache.py
from typing import List
import config as cfg
from langchain.schema import Document
from langchain.vectorstores.deeplake import DeepLake
from langchain.embeddings.sentence_transformer import SentenceTransformerEmbeddings


class CustomGPTCache:
    def __init__(self) -> None:
        # Initialize the embeddings model and the cache vector store
        self.embeddings = SentenceTransformerEmbeddings(
            model_name="all-MiniLM-L12-v2"
        )
        self.response_cache_store = DeepLake(
            dataset_path="database/cache_vectorstore",
            embedding=self.embeddings,
            read_only=False,
            num_workers=4,
            verbose=False,
        )

    def cache_query_response(self, query: str, response: str):
        # Create a Document object with the query as the content and its
        # response as metadata (only the query text gets embedded)
        doc = Document(
            page_content=query,
            metadata={"response": response},
        )

        # Insert the Document object into the cache vector store
        _ = self.response_cache_store.add_documents(documents=[doc])

    def find_similar_query_response(self, query: str, threshold: float) -> List[dict]:
        # Find the most similar cached query; any store error propagates to
        # the caller (QAChain.ask_question treats it as a cache miss)
        sim_response = self.response_cache_store.similarity_search_with_score(
            query=query, k=1
        )

        # Return the cached response only if its similarity score is above
        # the threshold
        return [
            {
                "response": res[0].metadata["response"],
            }
            for res in sim_response
            if res[1] > threshold
        ]
```

Let's walk through how we build the cache system. We use the all-MiniLM-L12-v2 model from Sentence Transformers to create embeddings for the cache. The motivation for this choice was that higher-dimensional embeddings tend to produce high similarity scores even for dissimilar queries, which makes the cache trigger when it shouldn't.

From extensive experimentation, I have observed that with lower-dimensional embeddings (all-MiniLM-L12-v2 produces 384-dimensional vectors), similarity scores spread over a wider range, roughly 0.3 to 1.0. Coming back to the code, we initialize the embeddings using Langchain's SentenceTransformerEmbeddings by specifying the model name. Next, we initialize the Deeplake vector store where the queries and responses will be stored.
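For reference, the score compared against the threshold is a similarity between query embeddings. Assuming the cache store scores with cosine similarity (the retriever in Step 4 sets `distance_metric` to `"cos"` explicitly; for the cache store this is an assumption about Deeplake's default), with $\mathbf{e}_q$ the embedding of the incoming query, $\mathbf{e}_c$ that of a cached query, and $\tau$ the `CACHE_THRESHOLD`:

$$
\operatorname{sim}(q, c) = \frac{\mathbf{e}_q \cdot \mathbf{e}_c}{\lVert \mathbf{e}_q \rVert \, \lVert \mathbf{e}_c \rVert} \in [-1, 1],
\qquad
\text{cache hit} \iff \operatorname{sim}(q, c) > \tau
$$

If unrelated queries already score close to 1, there is little room between related and unrelated pairs in which to place $\tau$. A wider spread of scores is what makes a single threshold workable.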

Note that when initializing the cache vector store, we don't set the overwrite parameter to True, because that would wipe the cache every time the class is initialized and defeat its purpose. We add two methods to the CustomGPTCache class: cache_query_response and find_similar_query_response. Let's discuss them.

- cache_query_response: This method is called in the QAChain when an LLM response is generated. A Document object is created with the query as the page_content and the response in the metadata field, so only the query text is embedded and later matched against new queries. Then that Document object is added to the vector store.

- find_similar_query_response: This method is called by the QAChain on every run with the user query, and returns a list of dictionaries containing responses. It uses the query to run a similarity search on the cache vector store and find the most similar cached query; we fetch only one entry (k=1). We then build the list of responses, keeping only entries whose similarity score exceeds the threshold, to avoid returning irrelevant responses.
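The threshold filter at the end of find_similar_query_response can be exercised on its own. In this sketch, `CachedDoc` and `filter_hits` are hypothetical names, and the input mimics the list of (document, score) pairs that a LangChain vector store's similarity_search_with_score returns. The `score > threshold` comparison only makes sense if the score is a similarity (higher means closer). With a distance metric such as L2, the comparison would have to be flipped.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class CachedDoc:
    """Stand-in for a cached Document: query text plus the response in metadata."""
    page_content: str
    metadata: Dict[str, str] = field(default_factory=dict)


def filter_hits(results: List[Tuple[CachedDoc, float]], threshold: float) -> List[dict]:
    """Keep only hits whose similarity score is strictly greater than threshold."""
    return [
        {"response": doc.metadata["response"]}
        for doc, score in results
        if score > threshold
    ]


# Shape of a k=1 search result: a list holding one (document, score) pair
results = [(CachedDoc("Second quarter highlights, FY2023.", {"response": "cached answer"}), 0.91)]
print(filter_hits(results, threshold=0.8))   # [{'response': 'cached answer'}]
print(filter_hits(results, threshold=0.95))  # []
```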

Step 4: Document Question Answering Using QAChain

Finally, let's look at how the QAChain uses the cache vector store and the document store to generate responses to user queries. In the class constructor, we initialize the Gemini embeddings (with task_type="retrieval_query", since at this stage they embed queries) and the Gemini chat model, using the required parameters like model name, API key, etc. We also initialize the cache here, which is used by the other methods. Then we add two methods to the QAChain class for handling user queries: generate_response and ask_question.

- generate_response: This method handles the LLM part of the QAChain. It takes a user query and generates a response using Langchain's RetrievalQA chain. After the response is generated, it is cached using the cache_query_response method of CustomGPTCache, and then returned.

- ask_question: This method is called for every user query. First, the find_similar_query_response method of CustomGPTCache is called with the user query. If it returns a non-empty list, the cached response is returned; otherwise, the generate_response method is called with the user query. The generate_response method is also called if any exception is raised on the cache side.

Below is the codebase for the QAChain pipeline for reference.

```python
# src/qachain.py
import config as cfg
from src.cache import CustomGPTCache
from langchain.chains import RetrievalQA
from langchain.prompts import PromptTemplate
from langchain.vectorstores.deeplake import DeepLake
from langchain_google_genai import (
    GoogleGenerativeAIEmbeddings,
    ChatGoogleGenerativeAI,
)


class QAChain:
    def __init__(self) -> None:
        # Initialize Gemini embeddings (query-side task type)
        self.embeddings = GoogleGenerativeAIEmbeddings(
            model="models/embedding-001",
            google_api_key=cfg.GOOGLE_API_KEY,
            task_type="retrieval_query",
        )

        # Initialize the Gemini chat model
        self.model = ChatGoogleGenerativeAI(
            model="gemini-pro",
            temperature=0.3,
            google_api_key=cfg.GOOGLE_API_KEY,
            convert_system_message_to_human=True,
        )

        # Initialize the semantic cache
        self.cache = CustomGPTCache()
        self.text_vectorstore = None
        self.text_retriever = None

    def ask_question(self, query):
        try:
            # Search the cache for a response to a similar query
            cached_response = self.cache.find_similar_query_response(
                query=query, threshold=cfg.CACHE_THRESHOLD
            )
        except Exception:
            # Treat any cache-side failure as a cache miss
            print("Cache lookup failed; treating it as a cache miss.")
            cached_response = []

        # If a similar query response is present, return it
        if cached_response:
            print("Using cache")
            return cached_response[0]["response"]

        # Otherwise, generate a response for the query
        print("Generating response")
        return self.generate_response(query=query)

    def generate_response(self, query: str):
        # Open the document vector store (read-only) and build a retriever
        vstore = DeepLake(
            dataset_path="database/text_vectorstore",
            embedding=self.embeddings,
            read_only=True,
            num_workers=4,
            verbose=False,
        )
        retriever = vstore.as_retriever(search_type="similarity")
        retriever.search_kwargs["distance_metric"] = "cos"
        retriever.search_kwargs["fetch_k"] = 20
        retriever.search_kwargs["k"] = 15

        # Prompt to guide the LLM; RetrievalQA fills {context} and {question}
        prompt_template = """
        <YOUR PROMPT HERE>

        Context: {context}

        Question: {question}

        Answer:
        """
        PROMPT = PromptTemplate(
            template=prompt_template, input_variables=["context", "question"]
        )
        chain_type_kwargs = {"prompt": PROMPT}

        # Create the RetrievalQA chain
        qa = RetrievalQA.from_chain_type(
            llm=self.model,
            retriever=retriever,
            verbose=False,
            chain_type_kwargs=chain_type_kwargs,
        )

        # Run the QA chain (input key "query", output key "result") and cache the response
        result = qa({"query": query})["result"]
        self.cache.cache_query_response(query=query, response=result)

        return result
```

Now that we have the entire pipeline ready, let's use it to test our application. The first step is to ingest the document. We initialize the Ingestion object and call the ingest_documents method with the file path of the document to be stored.

```python
from src.ingestion import Ingestion

ingestion = Ingestion()
file = "Apple 10k.pdf"
ingestion.ingest_documents(
    file=file
)
```

After the document is ingested, we initialize the QAChain object. Then we call the ask_question method with the user query to generate a response.

```python
from src.qachain import QAChain

qna = QAChain()
```

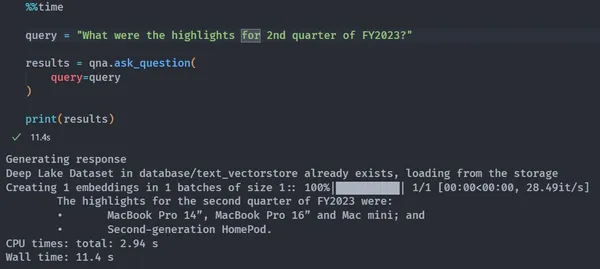

```python
%%time
query = "What were the highlights for 2nd quarter of FY2023?"
results = qna.ask_question(
    query=query
)
print(results)

# OUTPUT:
# Generating response
# The highlights for the second quarter of FY2023 were:
# • MacBook Pro 14”, MacBook Pro 16” and Mac mini; and
# • Second-generation HomePod.
# CPU times: total: 2.94 s
# Wall time: 11.4 s
```

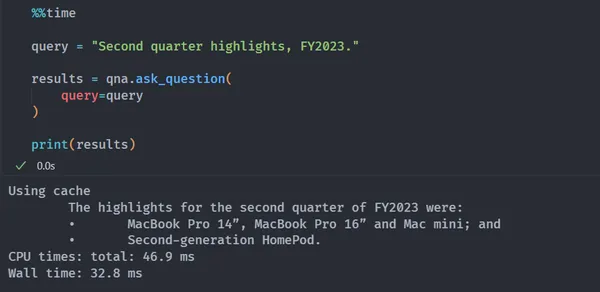

```python
%%time
query = "Second quarter highlights, FY2023."
results = qna.ask_question(
    query=query
)
print(results)

# OUTPUT:
# Using cache
# The highlights for the second quarter of FY2023 were:
# • MacBook Pro 14”, MacBook Pro 16” and Mac mini; and
# • Second-generation HomePod.
# CPU times: total: 46.9 ms
# Wall time: 32.8 ms
```
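The hit/miss pattern in the two runs above (a slow first call that prints "Generating response", then a fast cache hit for a rephrased query) comes from the cache-first control flow of ask_question. Below is a minimal offline sketch of that flow. `StubCache` and `ask_question` are hypothetical stand-ins: the stub uses an exact-match dictionary instead of the embedding-based CustomGPTCache, and a plain function replaces the Gemini-backed chain. Unlike the article's code, where generate_response writes to the cache, this sketch writes the cache inside ask_question so that the whole flow sits in one function.

```python
from typing import Callable, Dict, List


class StubCache:
    """Hypothetical exact-match stand-in for CustomGPTCache (no embeddings involved)."""

    def __init__(self) -> None:
        self.store: Dict[str, str] = {}

    def find_similar_query_response(self, query: str, threshold: float) -> List[dict]:
        # threshold is unused here; it is kept so the call matches CustomGPTCache
        return [{"response": self.store[query]}] if query in self.store else []

    def cache_query_response(self, query: str, response: str) -> None:
        self.store[query] = response


def ask_question(query: str, cache: StubCache, generate: Callable[[str], str],
                 threshold: float = 0.8) -> str:
    """Cache-first lookup; any cache failure is treated as a miss."""
    try:
        hits = cache.find_similar_query_response(query=query, threshold=threshold)
    except Exception:
        hits = []
    if hits:
        print("Using cache")
        return hits[0]["response"]
    print("Generating response")
    response = generate(query)
    cache.cache_query_response(query=query, response=response)
    return response


llm_calls = []


def fake_llm(query: str) -> str:
    llm_calls.append(query)
    return f"answer to: {query}"


cache = StubCache()
ask_question("Q2 highlights?", cache, fake_llm)  # prints "Generating response"
ask_question("Q2 highlights?", cache, fake_llm)  # prints "Using cache"
print(len(llm_calls))  # 1
```

Only the first call reaches the (fake) LLM. This is where the cost and latency savings of the semantic cache come from.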

Application Performance and Limitations

There are several RAG evaluation libraries that can be used to evaluate the performance of the application. Two of the most popular are RAGAS and Tonic Validate. Both offer comparable metrics that help evaluate application performance, such as answer similarity score, retrieval precision, augmentation precision, augmentation accuracy/relevance, and retrieval k-recall. These names follow Tonic Validate; RAGAS has counterparts such as context precision and context recall. Although a few exceptions are handled in the codebases above, there are several cases where the application may crash. For example, the PDFReader currently has no check on the input file type, which needs to be handled.
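The missing input check could look something like the following sketch. The helper `is_pdf` is hypothetical and not part of the article's code. It checks for the `.pdf` extension and for the `%PDF-` signature that PDF files begin with. PDFReader.load_pdf could call it before passing the path to PyPDFLoader. A check this strict on the first five bytes may reject some malformed files that lenient PDF readers would still open.

```python
from pathlib import Path


def is_pdf(file_path: str) -> bool:
    """Return True if the path is an existing file with a .pdf extension
    whose first bytes are the %PDF- signature."""
    path = Path(file_path)
    if path.suffix.lower() != ".pdf" or not path.is_file():
        return False
    with path.open("rb") as fh:
        return fh.read(5) == b"%PDF-"
```

In load_pdf, a guard such as `if not is_pdf(file_path): raise ValueError(f"Not a PDF: {file_path}")` would fail fast with a clear message instead of crashing deeper inside the loader.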

This application can be reused across a wide range of business use cases, such as medical, financial, industrial, and e-commerce. In the medical industry, RAG pipelines can help quickly troubleshoot instrument failures or support decisions about the right medication for a medical condition. RAG pipelines can answer queries over a large corpus of financial documents in the blink of an eye. Their fast retrieval and answering capabilities make them well suited to question answering over large datasets. RAG pipelines can also enable quick analysis of previous research developments in a field and suggest further research directions.

While our current pipeline is ready to be used for many use cases, it has certain limitations. The Gemini Pro model currently has a context length of 32k tokens, which limits how much retrieved content can be passed in a single prompt and might not suit large knowledge bases. An alternative is the Gemini 1.5 Pro model, which supports a context length of up to 1 million tokens. The current pipeline also has no chat memory, so the model has no context from previous turns of the conversation. Langchain provides several memory options (see the Langchain Memory docs) that can be integrated into the pipeline with little effort.
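As a rough illustration of what chat memory adds, here is a minimal sketch of a sliding window over the last few turns, prepended to the prompt. `ChatMemory` and `build_prompt` are hypothetical helpers written in plain Python, not LangChain classes (LangChain's `ConversationBufferWindowMemory` follows a similar windowed idea).

```python
from collections import deque
from typing import Deque, Tuple


class ChatMemory:
    """Hypothetical sliding-window chat memory: keeps only the last max_turns exchanges."""

    def __init__(self, max_turns: int = 3) -> None:
        # A deque with maxlen silently discards the oldest turn when full
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))

    def as_text(self) -> str:
        # Render the history as a simple User/Assistant transcript
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)


def build_prompt(memory: ChatMemory, context: str, question: str) -> str:
    """Combine chat history, retrieved context, and the new question into one prompt."""
    return (
        f"Chat history:\n{memory.as_text()}\n\n"
        f"Context: {context}\n\n"
        f"Question: {question}\n\n"
        "Answer:"
    )


memory = ChatMemory(max_turns=2)
memory.add("What were the Q2 highlights?", "New Macs and HomePod.")
memory.add("Which Macs?", "MacBook Pro 14/16 and Mac mini.")
print(build_prompt(memory, "<retrieved chunks>", "What about Q3?"))
```

One caveat if you combine this with the semantic cache: a follow-up such as "What about Q3?" depends on the history, so a cache keyed on the query text alone could return an answer produced in an unrelated conversation. For such turns, you may want to bypass the cache or include the history in the cached text.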

Code Reusability

- The Ingestion pipeline code can be reused to build ingestion systems for other applications that require document storage and retrieval.

- The PDF Reader tool can be repurposed for other applications that require processing PDF documents and extracting their metadata.

- The CustomGPTCache code can be used in other projects to implement efficient caching and retrieval of LLM responses, or for similar use cases.

- The QAChain class can be adapted for other question-answering systems that use document stores and caching mechanisms.

Conclusion

So, folks, that's how you can converse with your documents through RAG-powered question answering and semantic caching using Gemini Pro and Langchain!

In this article, we developed a pipeline that showcases a comprehensive approach to handling documents for intelligent QnA. By integrating Google's Gemini embeddings, a custom PDF reader, and semantic caching, this RAG system stays efficient and accurate when answering user queries. Moreover, the codebase is highly adaptable and scalable, and it can serve a variety of QnA use cases with minimal code changes!

Key Takeaways

- The RAG system involves building an Ingestion pipeline to upload and store documents for question answering.

- The Ingestion pipeline uses Gemini embeddings for document embeddings and a custom PDF reader for metadata extraction.

- A custom semantic cache service is implemented to efficiently store and retrieve LLM responses for similar queries.

- The QAChain class is responsible for generating responses to user queries, using both the cache and the vector store.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.