Artificial intelligence (AI) can solve some of the world's biggest problems, but only if everyone has the tools to use it. On June 27, 2024, Google, a leading player in AI technology, released Gemma 2 9B and 27B, a set of lightweight, state-of-the-art AI models. These models are built with the same technology as the well-known Gemini models. They make AI accessible to more people, marking a significant milestone in democratizing AI.

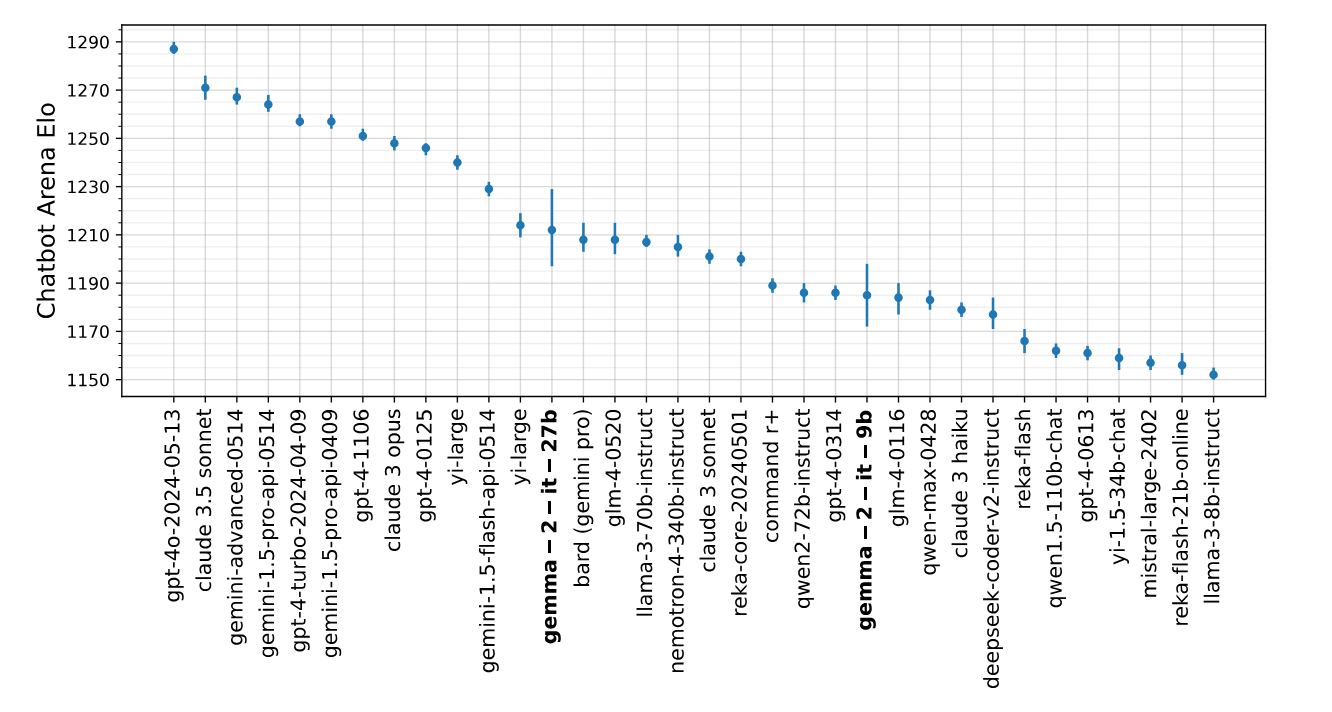

Gemma 2 comes in two sizes, 9 billion (9B) and 27 billion (27B) parameters, and has a context length of 8K tokens. Google claims the models perform better and are more efficient than the first Gemma models. Gemma 2 also includes significant safety improvements. According to Google, the 27B model competes with models twice its size. It can also run on a single NVIDIA H100 Tensor Core GPU or TPU host, which lowers its cost.
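A quick back-of-the-envelope calculation shows why a single GPU can be enough. The weights alone occupy roughly the parameter count times the bytes per parameter. This sketch makes three simplifying assumptions. It uses nominal parameter counts (9B and 27B). It compares 16-bit (bfloat16) weights with 4-bit quantized weights. It ignores the KV cache, activations, and framework overhead, all of which add to the real footprint. `weight_memory_gb` is our own illustrative helper.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# Illustrative comparison using nominal sizes; ignores KV cache and activations.
for name, params in [("Gemma 2 9B", 9e9), ("Gemma 2 27B", 27e9)]:
    for bits in (16, 4):
        print(f"{name} @ {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

At 16-bit precision, the 27B weights come to about 54 GB. That fits within an 80 GB H100 and is consistent with the single-GPU claim. The same arithmetic puts the 9B model at about 18 GB in 16-bit, which is more than a 16 GB card such as the RTX A4000 can hold. A quantized build, like the ones Ollama distributes, is what makes it fit there.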

Paperspace is revolutionizing AI development by providing affordable access to powerful GPUs like the H100 and A4000. This lets more researchers and developers run advanced, lightweight models. With its cloud-based platform, users can easily access high-performance NVIDIA GPUs at a fraction of the cost of traditional infrastructure.

This lowers the barrier to entry and puts advanced models like Gemma 2 within reach of more people, supporting more inclusive and faster progress in artificial intelligence.

The need for lightweight models

Lightweight AI models are essential for making advanced technology more accessible, efficient, cost-effective, and sustainable. They enable a wide range of applications, drive innovation, and help address diverse challenges worldwide.

There are several reasons why lightweight models are essential in many fields:

- Speed: Because of their reduced size and complexity, lightweight models usually have faster inference times. This is crucial for real-time or near-real-time data processing applications like video analysis, autonomous vehicles, or online recommendation systems.

- Low computational requirements: Lightweight models usually require fewer computational resources (such as memory and processing power) than larger models. This makes them suitable for deployment on devices with limited capabilities, such as smartphones, IoT devices, or edge devices.

- Scalability: Lightweight models are easier to scale across many devices or users. This is especially advantageous for applications with a broad user base, such as mobile apps, where deploying large models might not be feasible.

- Cost-effectiveness: Lightweight models can reduce the operational costs of deploying and maintaining AI systems. They consume less energy and can run on cheaper hardware, making them more accessible and economical for businesses and developers.

- Deployment in resource-constrained environments: Where internet connectivity is unreliable or bandwidth is limited, lightweight models can operate effectively without continuous access to cloud services.

Lightweight models like Gemma 2 are crucial because they let more people and organizations use advanced AI technology, drive innovation, and create solutions for diverse challenges, all while keeping costs and sustainability in mind.

Introducing Gemma 2

Gemma 2 is Google's latest iteration of open-source Large Language Models (LLMs). It includes models with 9 billion (gemma-2-9b) and 27 billion (gemma-2-27b) parameters, along with instruction fine-tuned variants. These models were trained on extensive datasets: 13 trillion tokens for the 27B version and 8 trillion tokens for the 9B version. The data includes web documents, English text, code, and mathematical content. With an 8K-token context length, Gemma 2 offers improved performance in tasks such as language understanding and text generation, which Google attributes to better data curation and larger training datasets.

Gemma 2 is released under a permissive license that allows redistribution, commercial use, fine-tuning, and derivative works, which encourages widespread adoption and innovation in AI applications. Its technical improvements include interleaved local-global attention and grouped-query attention. In addition, the 2B and 9B models are trained with knowledge distillation instead of plain next-token prediction. This gives them strong performance for their size and makes them competitive alternatives to models 2-3 times larger.
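The three architectural ideas named above can be sketched in plain Python. This is a toy illustration of the general techniques, not Gemma 2's implementation. The boolean masks show which earlier tokens each position may attend to in a local (sliding-window) layer versus a global layer. `kv_head_for` shows how grouped-query attention lets several query heads share one key/value head. The two loss functions contrast next-token prediction with distillation from a teacher's probabilities. Google's technical report describes a 4,096-token sliding window alternating with 8,192-token global layers. The tiny window, the head counts, which layer parity is local, and all function names below are illustrative assumptions.

```python
import math

def global_mask(seq_len):
    """Global (full causal) attention: query i may attend to every key j <= i."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

def local_mask(seq_len, window):
    """Sliding-window causal attention: query i sees only the last `window` keys, itself included."""
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]

def mask_for_layer(layer_idx, seq_len, window):
    # Interleaving: alternate local and global layers.
    # Assumption: even-numbered layers are local; the real parity may differ.
    if layer_idx % 2 == 0:
        return local_mask(seq_len, window)
    return global_mask(seq_len)

def kv_head_for(query_head, num_query_heads, num_kv_heads):
    """Grouped-query attention: each block of consecutive query heads shares one key/value head."""
    assert num_query_heads % num_kv_heads == 0
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size

def next_token_loss(student_probs, target):
    """Next-token prediction: cross-entropy against a single (one-hot) target index."""
    return -math.log(student_probs[target])

def distillation_loss(student_probs, teacher_probs):
    """Knowledge distillation: cross-entropy against the teacher's full distribution."""
    # Tokens the teacher assigns zero probability contribute nothing, so skip them.
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs) if t > 0)
```

With a one-hot teacher distribution, the distillation loss reduces exactly to the next-token loss. A soft teacher distribution gives the student a training signal over every token in the vocabulary, not just the one that actually appeared. Likewise, local layers cap how far back each token looks, while the interleaved global layers keep access to the full context.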

Gemma 2 performance

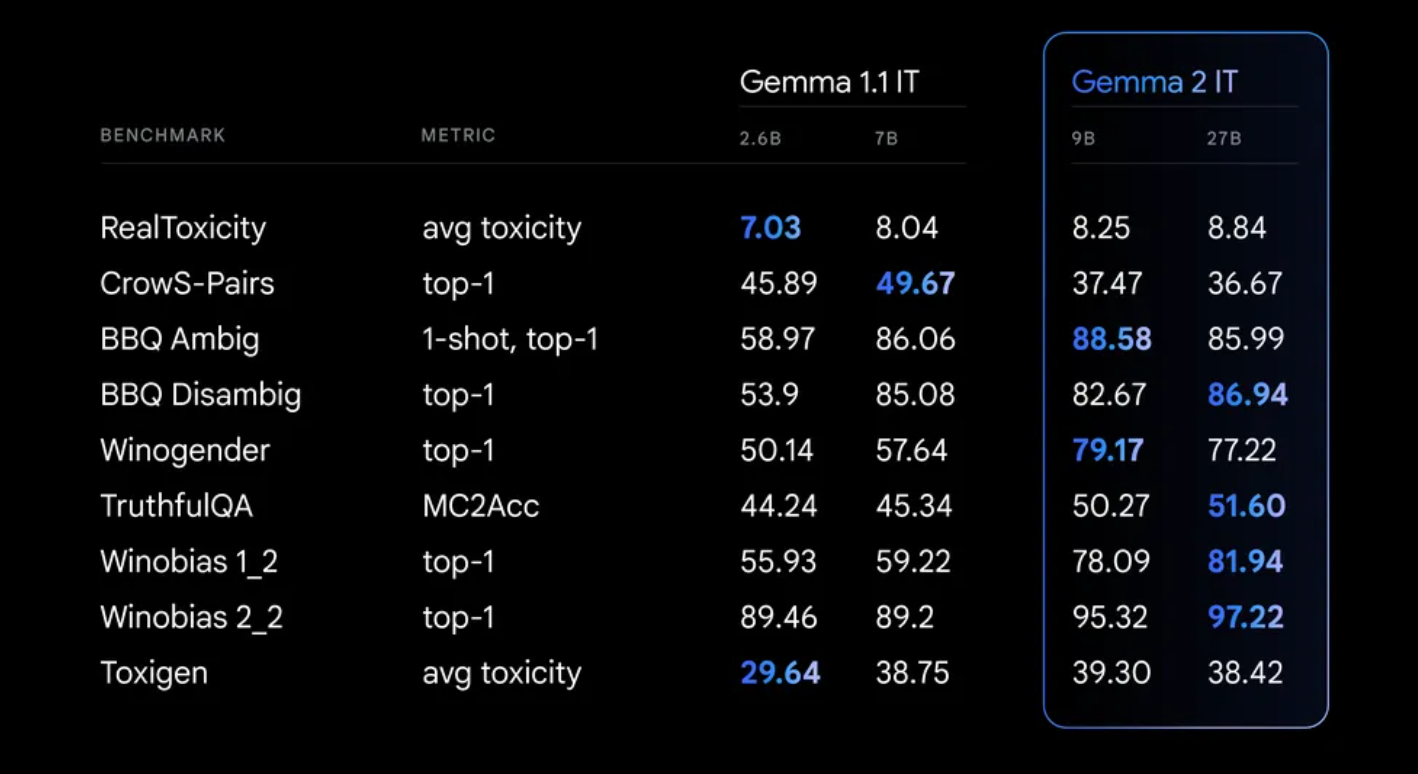

Strict safety protocols were followed throughout Gemma 2's training. These included filtering the pre-training data and testing thoroughly across a range of metrics to detect and address potential biases and risks.

Discover the power of Gemma 2: A Paperspace demo

We have successfully tested the model with Ollama on an NVIDIA RTX A4000! Check out our article on downloading Ollama and running any LLM. Gemma 2 works seamlessly with Ollama.

Ready to download the model and get started?


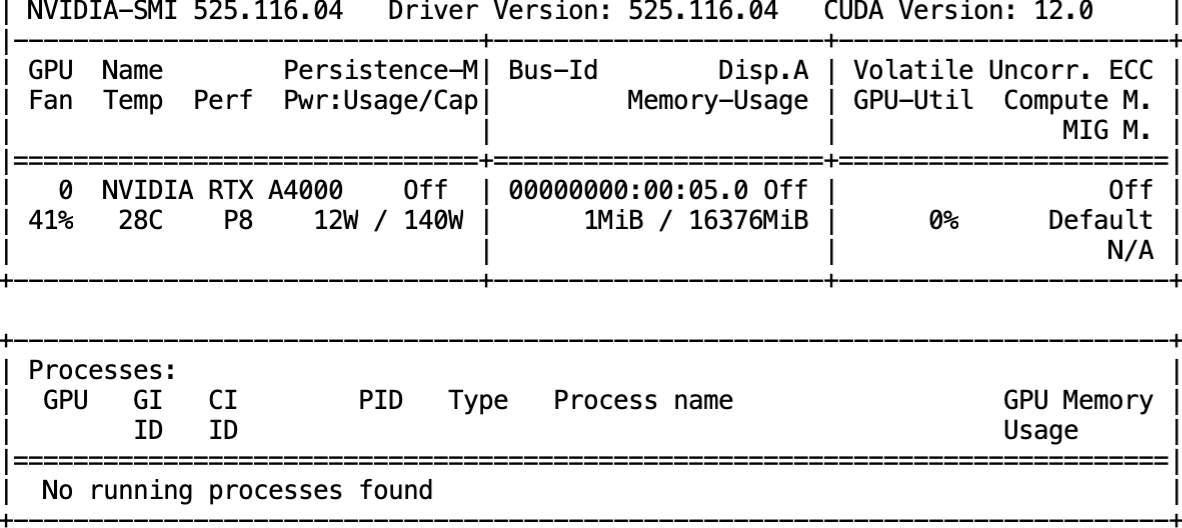

Before we get started, let's gather some information about the GPU configuration.

```bash
nvidia-smi
```
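The same GPU information can also be collected from a script. This sketch calls `nvidia-smi` with its `--query-gpu` and `--format=csv,noheader,nounits` options, which report memory in MiB. It assumes an NVIDIA driver is installed. `parse_gpu_csv` and `query_gpus` are our own illustrative helpers, and the sample line in the docstring uses made-up values.

```python
import subprocess

# Ask nvidia-smi for machine-readable output: one CSV line per GPU, no header, no units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,memory.used",
    "--format=csv,noheader,nounits",
]

def parse_gpu_csv(text):
    """Parse lines like 'NVIDIA RTX A4000, 16376, 1' (illustrative values) into dicts; memory is in MiB."""
    gpus = []
    for line in text.strip().splitlines():
        name, total, used = (field.strip() for field in line.split(","))
        gpus.append({"name": name, "memory_total_mib": int(total), "memory_used_mib": int(used)})
    return gpus

def query_gpus():
    """Run nvidia-smi (requires an NVIDIA driver) and return the parsed list of GPUs."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)
```

Calling `query_gpus()` on the Paperspace machine returns one dictionary per GPU. Its total memory is a quick check on which model sizes and quantization levels will fit.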

Then pull and run Gemma 2 with Ollama:

```bash
ollama run gemma2
```

You'll be amazed at how quickly the model downloads on the A4000 machine! In just seconds, you'll be ready to chat with the model.
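Besides the interactive prompt, Ollama serves a local REST API, by default at `http://localhost:11434`, so the same questions can be sent from a script. This sketch uses only the Python standard library. It assumes the Ollama server is running and `gemma2` has already been pulled. `build_request` and `ask` are our own helper names, not part of Ollama.

```python
import json
import urllib.request

# Ollama's default local endpoint for single-prompt generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt, model="gemma2"):
    """Build a POST request asking Ollama for one complete (non-streamed) reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt, model="gemma2"):
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `ask("How do neural networks work? Explain their key components.")` returns the model's reply as a string. With `"stream": False`, Ollama sends back a single JSON object whose `response` field holds the full text, instead of a stream of partial chunks.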

- Thought-provoking question: How do neural networks work? Explain their key components.

In the blink of an eye, the model generates the output.

- Dilemma question: Imagine you are a doctor with five patients who all need organ transplants to survive, but you have no organs. One day, a healthy person with compatible organs is admitted to the hospital for a routine check-up. If you use this healthy person's organs, you can save all five of your patients. However, this would mean sacrificing the healthy person who came in for a routine check-up. What should you do, and why?

- Coding: Write a function that solves a Sudoku puzzle by filling the empty cells. The Sudoku puzzle is a 9×9 grid, and the goal is to fill the grid so that each row, each column, and each of the nine 3×3 subgrids contains the digits from 1 to 9 exactly once. Empty cells are represented by the character '.'.

Output:

```python
def solveSudoku(puzzle):
    """
    Solves a Sudoku puzzle.

    Args:
        puzzle (list): A list of strings representing the Sudoku puzzle.

    Returns:
        list: A list of strings representing the solved Sudoku puzzle.
    """
    # Iterate over the rows, columns, and subgrids.
    for row in range(9):
        for col in range(9):
            if puzzle[row][col] == '.':
                # Check if the number is already in the row, column, or subgrid.
                for i in range(9):
                    if puzzle[row][i] != '.' and puzzle[i][col] != '.' and puzzle[row][col] == puzzle[i][col]:
                        continue

                # Find the possible numbers for the cell.
                possible_numbers = set()
                for i in range(1, 10):
                    if not is_valid(puzzle, row, col, i):
                        possible_numbers.add(i)

                # Try each possible number.
                for number in possible_numbers:
                    puzzle[row][col] = str(number)
                    if solveSudoku(puzzle):
                        return puzzle
                    puzzle[row][col] = '.'

    # If no solution is found, return the original puzzle.
    return puzzle

# Helper function to check if a number is valid for a cell.
def is_valid(puzzle, row, col, number):
    for i in range(9):
        if puzzle[row][i] == str(number) or puzzle[i][col] == str(number):
            return False
    for i in range(3):
        for j in range(3):
            if puzzle[row // 3 * 3 + i][col // 3 * 3 + j] == str(number):
                return False
    return True
```

**Explanation:**

* The `solveSudoku` function iterates over the rows, columns, and subgrids of the Sudoku puzzle.

* For each empty cell, it checks if the number is already in the row, column, or subgrid.

* It then finds the possible numbers for the cell and tries each one.

* If a solution is found, it returns the solved puzzle. Otherwise, it returns the original puzzle.

* The `is_valid` function checks if a number is valid for a cell by ensuring that it isn't already in the row, column, or subgrid.
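As generated, this code would not actually solve a puzzle. The `if not is_valid(...)` test collects exactly the digits that are *not* allowed in the cell. The first inner loop only runs `continue`, so it has no effect. Because `solveSudoku` always returns the (truthy) list, `if solveSudoku(puzzle)` never triggers backtracking. The docstring also promises a list of strings, but assigning to `puzzle[row][col]` requires a list of lists.

For comparison, here is a minimal backtracking sketch of our own; it is not model output. `solve_sudoku` and `can_place` are illustrative names chosen to avoid clashing with the generated functions.

```python
def can_place(board, row, col, digit):
    """Return True if `digit` (a string '1'-'9') is not already in the cell's row, column, or 3x3 box."""
    for i in range(9):
        if board[row][i] == digit or board[i][col] == digit:
            return False
    box_row, box_col = 3 * (row // 3), 3 * (col // 3)
    for r in range(box_row, box_row + 3):
        for c in range(box_col, box_col + 3):
            if board[r][c] == digit:
                return False
    return True

def solve_sudoku(board):
    """Fill `board` (a 9x9 list of lists holding '1'-'9' or '.') in place.

    Returns True if a solution was found, False if none exists.
    """
    for row in range(9):
        for col in range(9):
            if board[row][col] == '.':
                for digit in "123456789":
                    if can_place(board, row, col, digit):
                        board[row][col] = digit
                        if solve_sudoku(board):
                            return True
                        board[row][col] = '.'  # Undo and try the next digit.
                return False  # No digit fits this cell, so backtrack.
    return True  # No empty cells remain.
```

`solve_sudoku` fills the board in place and returns `True` if it finds a solution and `False` otherwise. It assumes the given digits do not already conflict with each other, and it needs all nine rows of the grid.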

**Example Usage:**

```python
# Example Sudoku puzzle.
puzzle = [
    ['5', '3', '.', '.', '7', '8', '.', '.', '.'],
    ['.', '7', '4', '6', '.', '5', '8', '9', '.'],
    ['.', '.', '8', '.', '9', '1', '.', '6', '.'],
    # ... Rest of the puzzle.
]

# Solve the Sudoku puzzle.
solution = solveSudoku(puzzle)

# Print the solved puzzle.
print(solution)
```

Conclusion

The advances showcased by the state-of-the-art (SOTA) Gemma 2 models are groundbreaking within the AI landscape. With 9 billion and 27 billion parameter configurations, Gemma 2 demonstrates improved performance, efficiency, and important safety enhancements. The 27B model can rival models twice its size and run cost-effectively on a single NVIDIA H100 Tensor Core GPU or TPU host, making advanced AI accessible to a wider range of developers and researchers. Gemma 2's open-source nature, extensive training, and technical improvements underscore its strong performance and make it a significant development in AI technology.