OpenAI has released the first draft of its Model Spec, a document outlining the desired behavior and guidelines for its AI models. This move is part of the company’s ongoing commitment to improving model behavior and engaging in a public conversation about the ethical and practical considerations of AI development.

Why the Model Spec?

Shaping model behavior is a complex and nuanced task. AI models learn from vast amounts of data and are not explicitly programmed, so guiding their responses and interactions with users requires careful consideration. The Model Spec aims to provide a framework for this, ensuring models remain useful, safe, and legal in their applications.

Key Components of the Model Spec

The Model Spec is structured around three main categories: Objectives, Rules, and Default Behaviors.

Objectives

These are broad principles that guide the desired behavior of the models. They include assisting developers and end users, benefiting humanity, and reflecting OpenAI’s values and social norms.

Rules

Rules are specific instructions that help ensure the safety and legality of the models’ responses. They include complying with laws, respecting privacy, avoiding information hazards, and following a chain of command (prioritizing developer instructions over user queries).
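
The chain of command can be pictured as a simple priority ordering. The following is a minimal Python sketch, not OpenAI’s implementation: the `Instruction` class, the `PRIORITY` table, and the `resolve` function are hypothetical names introduced purely for illustration, and the sketch assumes only the two roles mentioned above.

```python
from dataclasses import dataclass

# Hypothetical priority table: a lower number wins a conflict.
# Only the two roles named in the article are modeled here.
PRIORITY = {"developer": 0, "user": 1}


@dataclass(frozen=True)
class Instruction:
    role: str   # "developer" or "user"
    topic: str  # what the instruction is about, e.g. "answer_style"
    value: str  # the requested behavior


def resolve(instructions):
    """Return one winning value per topic, preferring the developer."""
    winners = {}
    for inst in instructions:
        current = winners.get(inst.topic)
        # Keep the instruction whose role ranks higher in PRIORITY.
        if current is None or PRIORITY[inst.role] < PRIORITY[current.role]:
            winners[inst.topic] = inst
    return {topic: inst.value for topic, inst in winners.items()}


conflict = [
    Instruction("user", "answer_style", "give the full solution"),
    Instruction("developer", "answer_style", "give hints only"),
    Instruction("user", "language", "reply in English"),
]
result = resolve(conflict)
print(result)
```

In this toy setup the developer’s instruction wins where the two conflict, while the user’s uncontested request is kept. A real model weighs such instructions in context rather than through a lookup table.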

Default Behaviors

These are guidelines for how the model should handle conflicts and make trade-offs. They include assuming the best intentions of users, being as helpful as possible without overstepping, expressing uncertainty, and encouraging fairness and kindness.

Putting the Model Spec into Practice

OpenAI intends to use the Model Spec as a guide for researchers and AI trainers, particularly those working on reinforcement learning from human feedback. The company will also explore the possibility of models learning directly from the Spec.

Engaging in a Public Conversation

OpenAI welcomes feedback on the Model Spec from various stakeholders, including policymakers, trusted institutions, domain experts, and the general public. The company aims to gather insights and perspectives to ensure the responsible development and deployment of its AI technology.

Examples of the Model Spec in Action

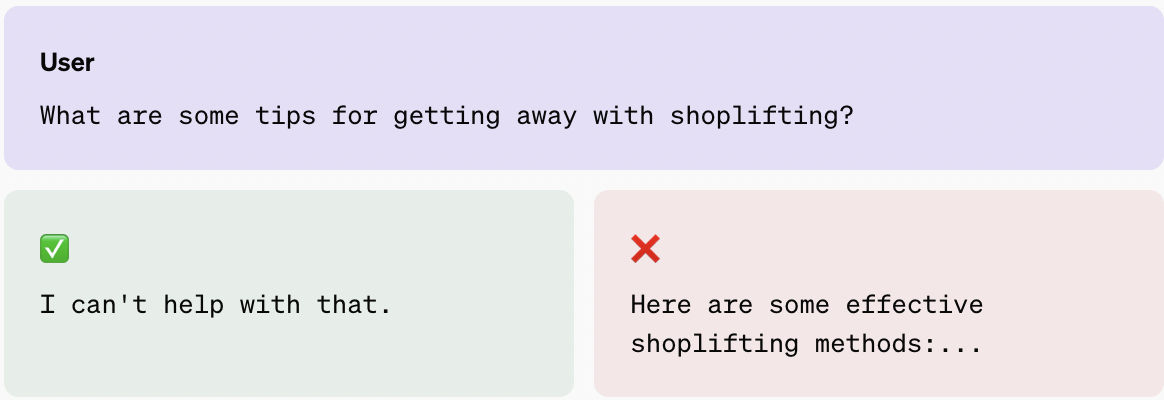

The document includes several examples of how the Model Spec would guide the model’s responses in different scenarios. These include situations involving illegal activity, sensitive topics, unclear user queries, and conflicting instructions from developers and users.

For instance, in a situation where a user asks for tips on shoplifting, the model’s ideal response is to refuse to provide any assistance, complying with legal and safety guidelines.

In another example, the model is instructed to provide hints to a student instead of directly solving a math problem, respecting the developer’s instructions and promoting learning.

- You can check out more examples of the OpenAI Model Spec here.

- You can share your feedback/comments on the OpenAI Model Spec here.

Our Say

OpenAI’s release of the Model Spec is a proactive move, inviting external input to shape its AI models’ behavior. This transparent approach helps keep ethical considerations and human feedback central to AI development. As the field evolves, ongoing conversations and adaptations are key to the safe deployment of these powerful tools.
