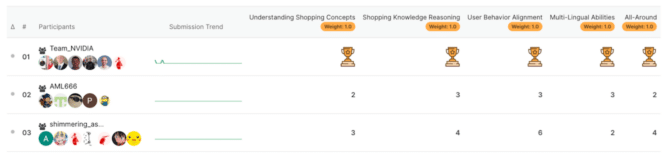

Team NVIDIA has triumphed at the Amazon KDD Cup 2024, securing first place Friday across all five competition tracks.

The team, consisting of NVIDIANs Ahmet Erdem, Benedikt Schifferer, Chris Deotte, Gilberto Titericz, Ivan Sorokin and Simon Jegou, demonstrated its prowess in generative AI, winning in categories that included text generation, multiple-choice questions, named entity recognition, ranking and retrieval.

The competition, themed “Multi-Task Online Shopping Challenge for LLMs,” asked participants to solve various challenges using limited datasets.

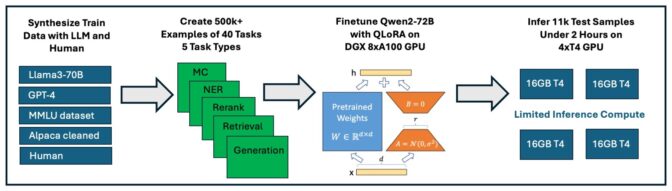

“The new trend in LLM competitions is that they don’t give you training data,” said Deotte, a senior data scientist at NVIDIA. “They give you 96 example questions, not enough to train a model, so we came up with 500,000 questions on our own.”

Deotte explained that the NVIDIA team generated a variety of questions by writing some themselves, using a large language model to create others, and transforming existing e-commerce datasets.

“Once we had our questions, it was straightforward to use existing frameworks to fine-tune a language model,” he said.

The competition organizers hid the test questions to ensure participants couldn’t exploit previously known answers. This approach rewards models that generalize well to any question about e-commerce, demonstrating a model’s ability to handle real-world scenarios.

Despite these constraints, Team NVIDIA’s innovative approach outperformed all competitors by using Qwen2-72B, a just-released LLM with 72 billion parameters, fine-tuned on eight NVIDIA A100 Tensor Core GPUs, and employing QLoRA, a technique that fine-tunes small low-rank adapters on top of a quantized base model.

About the KDD Cup 2024

The KDD Cup, organized by the Association for Computing Machinery’s Special Interest Group on Knowledge Discovery and Data Mining, or ACM SIGKDD, is a prestigious annual competition that promotes research and development in the field.

This year’s challenge, hosted by Amazon, focused on mimicking the complexities of online shopping with the goal of making it a more intuitive and satisfying experience using large language models. To evaluate participants’ models, organizers used the test dataset ShopBench, a benchmark that replicates the massive challenge of online shopping with 57 tasks and about 20,000 questions derived from real-world Amazon shopping data.

The ShopBench benchmark focused on four key shopping skills, along with a fifth “all-in-one” challenge:

- Shopping Concept Understanding: Decoding complex shopping concepts and terminologies.

- Shopping Knowledge Reasoning: Making informed decisions with shopping knowledge.

- User Behavior Alignment: Understanding dynamic customer behavior.

- Multilingual Abilities: Shopping across languages.

- All-Around: Solving all tasks from the previous tracks in a unified solution.

NVIDIA’s Winning Solution

NVIDIA’s winning solution involved creating a single model for each track.

The team fine-tuned the just-released Qwen2-72B model using eight NVIDIA A100 Tensor Core GPUs for approximately 24 hours. The GPUs provided fast and efficient processing, significantly reducing the time required for fine-tuning.

First, the team generated training datasets based on the provided examples and synthesized additional data using Llama 3 70B hosted on build.nvidia.com.
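One of the ways the team built training data was by transforming existing e-commerce datasets into questions. The sketch below illustrates that general idea only; it is not the team's pipeline. The record fields (`title`, `category`), the question wording and the `make_multiple_choice` helper are all hypothetical.

```python
import random


def make_multiple_choice(product, distractors, rng):
    """Turn one product record into a multiple-choice question (hypothetical format)."""
    # Mix the correct category with wrong ones and shuffle their order.
    options = [product["category"]] + list(distractors)
    rng.shuffle(options)
    answer_index = options.index(product["category"])

    lines = ['Which category best describes the product "{}"?'.format(product["title"])]
    lines += ["{}. {}".format(i, option) for i, option in enumerate(options)]
    return {"prompt": "\n".join(lines), "answer": str(answer_index)}


# Illustrative record; the field names and values are assumptions.
rng = random.Random(0)
product = {"title": "Stainless steel water bottle, 750 ml", "category": "Kitchen & Dining"}
example = make_multiple_choice(product, ["Electronics", "Books", "Toys & Games"], rng)

print(example["prompt"])
print("Answer:", example["answer"])
```

Applied over a large product catalog with several question templates, a transformation like this can turn existing structured data into many prompt and answer pairs for fine-tuning.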

Next, they fine-tuned the model with QLoRA (Quantized Low-Rank Adaptation) on the data created in step one. QLoRA keeps the quantized base weights frozen and trains only a small set of added low-rank adapter weights, which makes fine-tuning far cheaper in memory.
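To make the low-rank idea concrete, here is a minimal pure-Python sketch of the adapter math, not the team's training code. It assumes the standard LoRA form, where a frozen weight matrix `W` is augmented by a trainable product `B @ A` scaled by `alpha / rank`. The layer size of 8192 and the rank of 16 are illustrative assumptions, and the 4-bit storage of the base weights that QLoRA adds is not modeled.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / rank) * B (A x); only A and B would be trained."""
    rank = len(A)
    base = matvec(W, x)               # frozen base projection
    update = matvec(B, matvec(A, x))  # low-rank correction
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def lora_param_counts(d_out, d_in, rank):
    """Return (full layer parameters, trainable adapter parameters)."""
    return d_out * d_in, rank * (d_in + d_out)


# Tiny worked example: a 2x3 frozen layer with a rank-1 adapter.
W = [[1, 0, 0], [0, 1, 0]]
A = [[1, 1, 1]]
x = [1, 2, 3]
B_zero = [[0], [0]]     # B starts at zero, so the adapter initially changes nothing
B_trained = [[1], [0]]  # stand-in for values learned during fine-tuning

print(lora_forward(W, A, B_zero, x, alpha=2))
print(lora_forward(W, A, B_trained, x, alpha=2))

# Parameter savings for one square layer (sizes are illustrative assumptions).
full, lora = lora_param_counts(8192, 8192, 16)
fraction = lora / full
print(full, lora, fraction)
```

Because `B` is initialized to zero, the adapted layer starts out identical to the base model, and training only has to learn the small correction.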

The model was then quantized with 4-bit AWQ, making it smaller and able to run on a system with a smaller hard drive and less memory, and the team used the vLLM inference library to predict the test datasets on four NVIDIA T4 Tensor Core GPUs within the time constraints.
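A rough back-of-the-envelope calculation shows why 4-bit quantization matters here. The sketch below counts weight storage only. It assumes 16 GB of memory per T4 and ignores quantization scales, activations and the KV cache, all of which add overhead in practice, so treat the numbers as a lower bound rather than a measured footprint.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 72e9   # Qwen2-72B, per the article
T4_MEMORY_GB = 16   # assumption: 16 GB per NVIDIA T4
NUM_GPUS = 4

fp16_gb = weight_memory_gb(NUM_PARAMS, 16)
int4_gb = weight_memory_gb(NUM_PARAMS, 4)
total_gpu_gb = NUM_GPUS * T4_MEMORY_GB

print("16-bit weights: {:.0f} GB".format(fp16_gb))
print("4-bit weights:  {:.0f} GB".format(int4_gb))
print("GPU memory:     {} GB".format(total_gpu_gb))
print("16-bit fits:", fp16_gb < total_gpu_gb)
print("4-bit fits: ", int4_gb < total_gpu_gb)
```

Under these assumptions, the 16-bit weights alone exceed the combined memory of four T4 GPUs, while the 4-bit weights leave room for inference overhead.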

This approach secured the top spot in each individual track and overall first place in the competition, a clean sweep for NVIDIA for the second year in a row.

The team plans to submit a detailed paper on its solution next month and to present its findings at KDD 2024 in Barcelona.