
Overview

The prevailing trend in recent Large Language Model (LLM) development has focused attention on larger models, often neglecting the practical requirements of on-device processing, energy efficiency, low memory footprint, and response efficiency. These factors are critical for scenarios that prioritize privacy, security, and sustainable deployment. MobiLlama, a compact model, represents a shift toward “less is more” by tackling the challenge of crafting Small Language Models (SLMs) that are both accurate and efficient for resource-constrained devices.

MobiLlama is an open-source SLM with 0.5 billion (0.5B) parameters, released on 26 February 2024. It is specifically designed to meet the needs of resource-constrained computing, emphasizing enhanced performance while minimizing resource demands. The SLM design of MobiLlama starts from a larger model and applies a parameter-sharing scheme, reducing both pre-training and deployment costs.

Introduction

In recent years, there has been significant progress in Large Language Models (LLMs) like ChatGPT, Bard, and Claude. These models show impressive abilities in solving complex tasks, and there is a trend of making them larger for better performance. For example, the 70 billion (70B) parameter version of Llama-2 is preferred for handling dialogue and logical reasoning compared to its smaller counterparts.

However, a drawback of these large models is their size and the need for extensive computational resources. The Falcon 180B model, for instance, requires a substantial number of GPUs and high-performance servers. That said, we have a detailed article on how to get hands-on experience with falcon-70b using the Paperspace platform.

On the other hand, Small Language Models (SLMs), like Microsoft’s Phi-2 with 2.7 billion parameters, are gaining attention. These smaller models show decent performance with fewer parameters, offering advantages in efficiency, cost, flexibility, and customizability. SLMs are more resource-efficient, making them suitable for applications where efficient resource use is crucial, especially on low-powered hardware such as edge devices. They also support on-device processing, leading to enhanced privacy, security, response time, and personalization. This integration could result in advanced personal assistants, cloud-independent applications, and improved energy efficiency with a reduced environmental impact.

Architecture Brief Overview

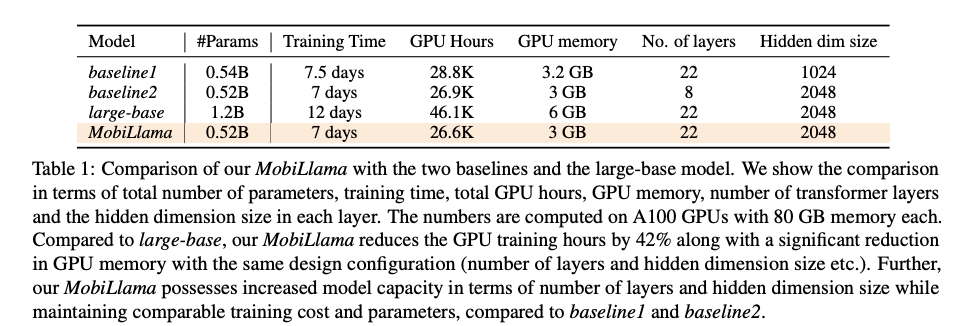

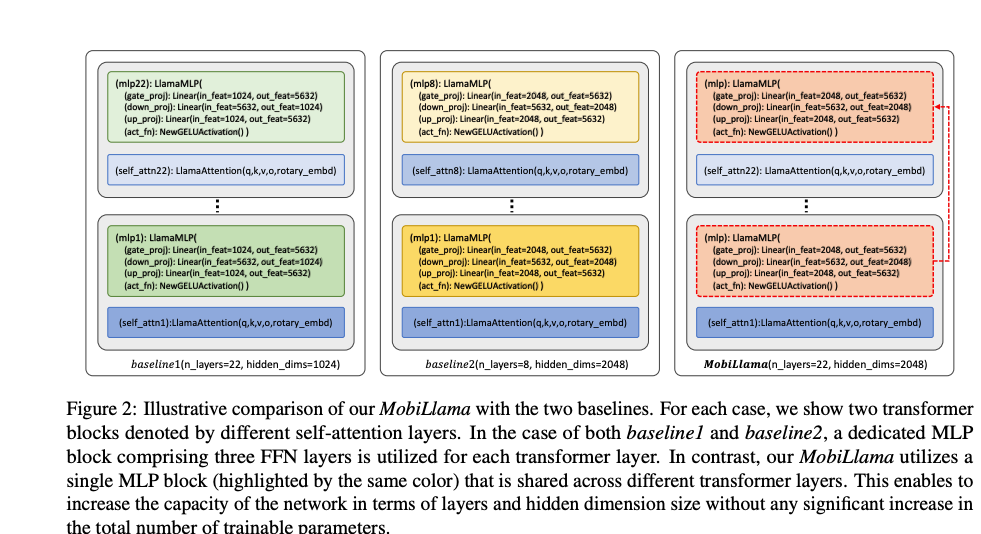

The MobiLlama baseline Small Language Model (SLM) architecture, with 0.5 billion (0.5B) parameters, is inspired by the TinyLlama and Llama-2 models. This baseline has N layers with a hidden dimension of M and an intermediate (MLP) size of 5632. The vocabulary size is 32,000, and the maximum context length is denoted as C.

- Baseline1: This has 22 layers with a hidden size of 1024.

- Baseline2: This has 8 layers with a hidden size of 2048.

Both baselines faced challenges in balancing accuracy and efficiency. Baseline1, with a smaller hidden size, enhances computational efficiency but may compromise the model’s ability to capture complex patterns. Baseline2, with fewer layers, limits the model’s depth and its capability for deep linguistic understanding.

To address these issues, combining the advantages of both baselines into a single model (22 layers and a hidden size of 2048) results in a larger 1.2 billion (1.2B) parameter model called “largebase,” with increased training costs.
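The parameter counts quoted above can be roughly reproduced with back-of-the-envelope arithmetic. The sketch below assumes a Llama-style decoder block (four hidden-by-hidden attention projections, a three-matrix gated MLP, two normalization weight vectors), untied input and output embeddings, and the same intermediate size of 5632 for every configuration. These assumptions and the helper name `estimate_params` are illustrative and not taken from the paper, so the totals are approximate.

```python
def estimate_params(num_layers, hidden, intermediate=5632, vocab=32_000):
    """Rough parameter count for a Llama-style decoder (assumed layout)."""
    attention = 4 * hidden * hidden      # q, k, v, o projections (no grouped-query attention assumed)
    mlp = 3 * hidden * intermediate      # gate, up, down projections
    norms = 2 * hidden                   # two normalization weight vectors per block
    per_block = attention + mlp + norms
    embeddings = 2 * vocab * hidden      # input embedding + untied output head (assumption)
    final_norm = hidden
    return num_layers * per_block + embeddings + final_norm


# (layers, hidden size) for the three configurations described in the text
configs = {
    "baseline1": (22, 1024),
    "baseline2": (8, 2048),
    "largebase": (22, 2048),
}

for name, (layers, hidden) in configs.items():
    print(f"{name}: {estimate_params(layers, hidden) / 1e9:.2f}B parameters")
```

Under these assumptions both baselines land near 0.5B and largebase lands a little above 1.2B, which is in line with the figures in the text.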

The authors then introduce their proposed MobiLlama 0.5B model design, which aims to maintain the hidden dimension size and the total number of layers while ensuring comparable training efficiency. This new design seeks to achieve a balance between computational efficiency and the model’s capacity to understand complex language patterns.
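As the conclusion of this article notes, MobiLlama shares a single feed-forward network (FFN) across all transformer blocks. The sketch below estimates how much that could save when the hidden size (2048) and layer count (22) are kept. It assumes Llama-style blocks, an intermediate size of 5632, and untied embeddings; the function name and the exact layout are hypothetical and are not the authors' implementation.

```python
def estimate_params_shared_ffn(num_layers, hidden, intermediate=5632,
                               vocab=32_000, share_ffn=True):
    """Rough count for a decoder whose FFN is optionally shared by all blocks."""
    attention = 4 * hidden * hidden      # per-block q, k, v, o projections
    norms = 2 * hidden                   # per-block normalization weights
    ffn = 3 * hidden * intermediate      # gate, up, down projections
    ffn_copies = 1 if share_ffn else num_layers
    embeddings = 2 * vocab * hidden      # untied input/output embeddings (assumption)
    return num_layers * (attention + norms) + ffn_copies * ffn + embeddings + hidden


unshared = estimate_params_shared_ffn(22, 2048, share_ffn=False)
shared = estimate_params_shared_ffn(22, 2048, share_ffn=True)
print(f"separate FFN per block: {unshared / 1e9:.2f}B")
print(f"one shared FFN:         {shared / 1e9:.2f}B")
```

With these assumed shapes, sharing the FFN brings a 22-layer, 2048-wide model from roughly 1.26B back down to roughly 0.53B parameters, because the FFN weights dominate each block.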

Paperspace Demo & Code Explanation


Let us jump to the most interesting part: getting the model running on a powerful Paperspace A6000 GPU.

- Begin by installing and updating the required packages, then import the tokenizer and model classes.

!pip install -U transformers

!pip install flash_attn

from transformers import AutoTokenizer, AutoModelForCausalLM

- Load the pre-trained tokenizer for the model called “MBZUAI/MobiLlama-1B-Chat.”

tokenizer = AutoTokenizer.from_pretrained("MBZUAI/MobiLlama-1B-Chat", trust_remote_code=True)

- Next, load the pre-trained causal language model “MBZUAI/MobiLlama-1B-Chat” using the Hugging Face Transformers library, then move the model to the CUDA device.

model = AutoModelForCausalLM.from_pretrained("MBZUAI/MobiLlama-1B-Chat", trust_remote_code=True)

model.to('cuda')

- Define a prompt template. It contains one example exchange and a {prompt} placeholder for the new question.

template = "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.\n### Human: Got any creative ideas for a 10 year old’s birthday?\n### Assistant: Of course! Here are some creative ideas for a 10-year-old's birthday party:\n1. Treasure Hunt: Organize a treasure hunt in your backyard or nearby park. Create clues and riddles for the kids to solve, leading them to hidden treasures and surprises.\n2. Science Party: Plan a science-themed party where kids can engage in fun and interactive experiments. You can set up different stations with activities like making slime, erupting volcanoes, or creating simple chemical reactions.\n3. Outdoor Movie Night: Set up a backyard movie night with a projector and a large screen or white sheet. Create a cozy seating area with blankets and pillows, and serve popcorn and snacks while the kids enjoy a favorite movie under the stars.\n4. DIY Crafts Party: Arrange a craft party where kids can unleash their creativity. Provide a variety of craft supplies like beads, paints, and fabrics, and let them create their own unique masterpieces to take home as party favors.\n5. Sports Olympics: Host a mini Olympics event with various sports and games. Set up different stations for activities like sack races, relay races, basketball shooting, and obstacle courses. Give out medals or certificates to the participants.\n6. Cooking Party: Have a cooking-themed party where the kids can prepare their own mini pizzas, cupcakes, or cookies. Provide toppings, frosting, and decorating supplies, and let them get hands-on in the kitchen.\n7. Superhero Training Camp: Create a superhero-themed party where the kids can engage in fun training activities. Set up an obstacle course, have them design their own superhero capes or masks, and organize superhero-themed games and challenges.\n8. Outdoor Adventure: Plan an outdoor adventure party at a local park or nature reserve. Arrange activities like hiking, nature scavenger hunts, or a picnic with games. Encourage exploration and appreciation for the outdoors.\nRemember to tailor the activities to the birthday child's interests and preferences. Have a great celebration!\n### Human: {prompt}\n### Assistant:"

- Use the pre-trained model to generate a response to a prompt about practicing mindfulness. The generate method performs text generation; the max_length parameter caps the total sequence length (prompt plus generated text), and pad_token_id specifies the token ID used for padding.

prompt = "What are the key benefits of practicing mindfulness meditation?"

input_str = template.format(prompt=prompt)

input_ids = tokenizer(input_str, return_tensors="pt").to('cuda').input_ids

outputs = model.generate(input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id)

print(tokenizer.batch_decode(outputs[:, input_ids.shape[1]:-1])[0].strip())

Output:

Mindfulness meditation is a practice that helps individuals become more aware of their thoughts, emotions, and physical sensations. It has several key benefits, including:

1. Reduced stress and anxiety: Mindfulness meditation can help reduce stress and anxiety by allowing individuals to focus on the present moment and reduce their thoughts and emotions.

2. Improved sleep: Mindfulness meditation can help improve sleep quality by reducing stress and anxiety, which can lead to better sleep.

3. Improved focus and concentration: Mindfulness meditation can help improve focus and concentration by allowing individuals to focus on the present moment and reduce their thoughts and emotions.

4. Improved emotional regulation: Mindfulness meditation can help improve emotional regulation by allowing individuals to become more aware of their thoughts, emotions, and physical sensations.

5. Improved overall well-being: Mindfulness meditation can help improve overall well-being by allowing individuals to become more aware of their thoughts, emotions, and physical sensations.

We have added the link to the notebook; please feel free to check out the model and the Paperspace platform to experiment further.
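Two details of the generation code are easy to miss: max_length counts the prompt tokens as well as the generated ones, and the decode step slices the prompt (and the final token) off the output before printing. The sketch below illustrates both with plain Python lists standing in for token IDs, so it runs without a GPU or the model. The token values, the prompt length, and the helper name are made up for illustration.

```python
def strip_prompt_and_last(output_ids, prompt_len):
    """Mimic outputs[:, prompt_len:-1] for a single sequence of token IDs."""
    return output_ids[prompt_len:-1]


prompt_ids = [1, 101, 102, 103]          # hypothetical prompt tokens
generated_ids = [201, 202, 203, 2]       # hypothetical reply ending in an end-of-sequence token
output_ids = prompt_ids + generated_ids  # for decoder-only models, generate() returns prompt + continuation

reply_ids = strip_prompt_and_last(output_ids, len(prompt_ids))
print(reply_ids)

# max_length bounds the whole sequence, so the room left for the reply
# shrinks as the filled-in template grows.
max_length = 1000
prompt_len = 600                         # hypothetical token count of the filled-in template
reply_budget = max_length - prompt_len
print(reply_budget)
```

Note that dropping the last token is only harmless when generation stopped at an end-of-sequence token; if it stopped because max_length was reached, the final real token is dropped too. To bound only the reply, generate also accepts max_new_tokens in place of max_length.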

Conclusion

In this article, we experimented with a new SLM called MobiLlama, which makes part of the transformer block more efficient by reducing unnecessary redundancy. In MobiLlama, the authors propose a shared design for the feed-forward network (FFN) layers across all the blocks in the SLM. MobiLlama was evaluated on nine different tasks and was found to perform well compared to other models with fewer than 1 billion parameters. The model also handles text and images together efficiently, making it a versatile SLM.

However, there are some limitations. We think there is room to make MobiLlama even better at understanding context. While MobiLlama is designed to be fully transparent in how it works, more research is needed to make sure it does not unintentionally present incorrect or biased information.

We hope you enjoyed the article and the demo of MobiLlama!