Enhancing Japan’s AI sovereignty and strengthening its research and development capabilities, Japan’s National Institute of Advanced Industrial Science and Technology (AIST) will integrate thousands of NVIDIA H200 Tensor Core GPUs into its AI Bridging Cloud Infrastructure 3.0 supercomputer (ABCI 3.0). The HPE Cray XD system will feature NVIDIA Quantum-2 InfiniBand networking for superior performance and scalability.

ABCI 3.0 is the latest iteration of Japan’s large-scale Open AI Computing Infrastructure designed to advance AI R&D. This collaboration underlines Japan’s commitment to advancing its AI capabilities and fortifying its technological independence.

“In August 2018, we launched ABCI, the world’s first large-scale open AI computing infrastructure,” said AIST Executive Officer Yoshio Tanaka. “Building on our experience over the past several years managing ABCI, we’re now upgrading to ABCI 3.0. In collaboration with NVIDIA we aim to develop ABCI 3.0 into a computing infrastructure that will advance further research and development capabilities for generative AI in Japan.”

“As generative AI prepares to catalyze global change, it’s crucial to rapidly cultivate research and development capabilities within Japan,” said AIST Solutions Co. Producer and Head of ABCI Operations Hirotaka Ogawa. “I’m confident that this major upgrade of ABCI in our collaboration with NVIDIA and HPE will enhance ABCI’s leadership in domestic industry and academia, propelling Japan towards global competitiveness in AI development and serving as the bedrock for future innovation.”

ABCI 3.0: A New Era for Japanese AI Research and Development

ABCI 3.0 is constructed and operated by AIST, its business subsidiary, AIST Solutions, and its system integrator, Hewlett Packard Enterprise (HPE).

The ABCI 3.0 project follows support from Japan’s Ministry of Economy, Trade and Industry, known as METI, for strengthening its computing resources through the Economic Security Fund. It is part of a broader $1 billion initiative by METI that includes both ABCI efforts and investments in cloud AI computing.

NVIDIA is closely collaborating with METI on research and education following a visit last year by company founder and CEO, Jensen Huang, who met with political and business leaders, including Japanese Prime Minister Fumio Kishida, to discuss the future of AI.

NVIDIA’s Commitment to Japan’s Future

Huang pledged to collaborate on research, particularly in generative AI, robotics and quantum computing, to invest in AI startups and to provide product support, training and education on AI.

During his visit, Huang emphasized that “AI factories” (next-generation data centers designed to handle the most computationally intensive AI tasks) are crucial for turning vast amounts of data into intelligence.

“The AI factory will become the bedrock of modern economies across the world,” Huang said during a meeting with the Japanese press in December.

With its ultra-high-density data center and energy-efficient design, ABCI provides a robust infrastructure for developing AI and big data applications.

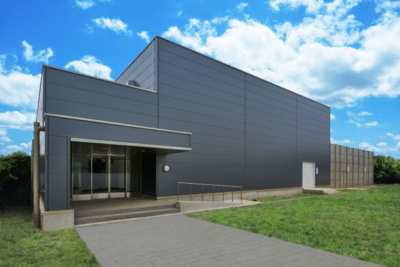

The system is expected to come online by the end of this year and offer state-of-the-art AI research and development resources. It will be housed in Kashiwa, near Tokyo.

Unmatched Computing Performance and Efficiency

The facility will offer:

- 6 AI exaflops of computing capacity, a measure of AI-specific performance without sparsity

- 410 double-precision petaflops, a measure of general computing capacity

- Quantum-2 InfiniBand connectivity for every node, at 200GB/s of bisectional bandwidth

NVIDIA technology forms the backbone of this initiative, with hundreds of nodes each equipped with 8 NVLink-connected H200 GPUs providing unprecedented computational performance and efficiency.
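As a rough sanity check on how the headline figures relate to "hundreds of nodes," the aggregate numbers can be divided by a per-GPU peak. The sketch below is a back-of-envelope estimate only: the system totals and the 8-GPUs-per-node figure come from the article, while the per-GPU peak values are assumptions taken to be typical published H200 peaks and are not stated in this article.

```python
# Back-of-envelope estimate of GPU and node counts from the headline figures.
# System totals and GPUs per node are from the article; per-GPU peaks are
# ASSUMED values for illustration and are not stated in the article.

AI_EXAFLOPS_TOTAL = 6.0        # article: AI performance without sparsity
FP64_PETAFLOPS_TOTAL = 410.0   # article: double-precision capacity
GPUS_PER_NODE = 8              # article: 8 NVLink-connected H200 GPUs per node

ASSUMED_DENSE_AI_TFLOPS_PER_GPU = 989.5  # assumption: dense 16-bit tensor peak
ASSUMED_FP64_TFLOPS_PER_GPU = 67.0       # assumption: FP64 tensor peak


def estimate_gpus(total_flops: float, per_gpu_flops: float) -> float:
    """Number of GPUs implied by an aggregate peak and a per-GPU peak."""
    return total_flops / per_gpu_flops


def estimate_nodes(gpus: float, gpus_per_node: int = GPUS_PER_NODE) -> float:
    """Number of nodes implied by a GPU count."""
    return gpus / gpus_per_node


gpus_from_ai = estimate_gpus(
    AI_EXAFLOPS_TOTAL * 1e18, ASSUMED_DENSE_AI_TFLOPS_PER_GPU * 1e12
)
gpus_from_fp64 = estimate_gpus(
    FP64_PETAFLOPS_TOTAL * 1e15, ASSUMED_FP64_TFLOPS_PER_GPU * 1e12
)

print(f"GPUs implied by AI figure:   {gpus_from_ai:,.0f}")
print(f"GPUs implied by FP64 figure: {gpus_from_fp64:,.0f}")
print(f"Nodes implied by AI figure:  {estimate_nodes(gpus_from_ai):,.0f}")
```

Under these assumed peaks, both headline figures point to roughly six thousand GPUs, which is consistent with the article's "thousands of GPUs" and "hundreds of nodes." The exact counts depend on the per-GPU values chosen.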

NVIDIA H200 is the first GPU to offer over 140 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s). The H200’s larger and faster memory accelerates generative AI and LLMs, while advancing scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership.
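One way to see why memory size and bandwidth matter for LLMs: during single-stream token generation, each new token typically requires reading all model weights from memory once, so memory bandwidth sets a ceiling on tokens per second. The sketch below illustrates that simplified model. The bandwidth and the 140 GB lower bound on capacity come from the article; the model sizes and precisions are hypothetical examples, and the estimate ignores the KV cache, batching and compute limits.

```python
# Simplified, bandwidth-bound ceiling on single-stream LLM decoding speed.
# Assumption: each generated token reads every model weight from HBM once.
# Ignores KV cache traffic, batching, and compute limits.

HBM_BANDWIDTH_BYTES_PER_S = 4.8e12  # article: 4.8 TB/s
HBM_CAPACITY_BYTES = 140e9          # article: "over 140 GB", used as a lower bound


def model_bytes(param_count: float, bytes_per_param: float) -> float:
    """Memory footprint of the weights alone."""
    return param_count * bytes_per_param


def fits_in_memory(param_count: float, bytes_per_param: float) -> bool:
    """True if the weights alone fit within the assumed capacity of one GPU."""
    return model_bytes(param_count, bytes_per_param) <= HBM_CAPACITY_BYTES


def max_decode_tokens_per_second(param_count: float, bytes_per_param: float) -> float:
    """Upper bound on tokens/s if decoding is limited only by weight reads."""
    return HBM_BANDWIDTH_BYTES_PER_S / model_bytes(param_count, bytes_per_param)


# Hypothetical example models (not from the article).
for name, params, width in [("13B @ 16-bit", 13e9, 2), ("70B @ 8-bit", 70e9, 1)]:
    print(
        f"{name}: fits={fits_in_memory(params, width)}, "
        f"ceiling={max_decode_tokens_per_second(params, width):.1f} tokens/s"
    )
```

In this simplified model, doubling bandwidth doubles the decoding ceiling, and a larger capacity lets a bigger model stay on a single GPU. Real throughput will differ.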

NVIDIA H200 GPUs are 15X more energy-efficient than ABCI’s previous-generation architecture for AI workloads such as LLM token generation.

The integration of advanced NVIDIA Quantum-2 InfiniBand with In-Network computing, where networking devices perform computations on data and offload the work from the CPU, ensures efficient, high-speed, low-latency communication, crucial for handling intensive AI workloads and vast datasets.

ABCI boasts world-class computing and data processing power, serving as a platform to accelerate joint AI R&D with industries, academia and governments.

METI’s substantial investment is a testament to Japan’s strategic vision to enhance AI development capabilities and accelerate the use of generative AI.

By subsidizing AI supercomputer development, Japan aims to reduce the time and costs of developing next-generation AI technologies, positioning itself as a leader in the global AI landscape.