Introduction

In at present’s data-driven panorama, companies should combine information from numerous sources to derive actionable insights and make knowledgeable selections. This significant course of, referred to as Extract, Rework, Load (ETL), includes extracting information from a number of origins, reworking it right into a constant format, and loading it right into a goal system for evaluation. With information volumes rising at an unprecedented fee, organizations face important challenges in sustaining their ETL processes’ pace, accuracy, and scalability. This information delves into methods for optimizing information integration and creating environment friendly ETL workflows.

Studying Targets

- Perceive ETL processes to combine and analyze information from various sources successfully.

- Consider and choose applicable ETL instruments and applied sciences for scalability and compatibility.

- Implement parallel information processing to reinforce ETL efficiency utilizing frameworks like Apache Spark.

- Apply incremental loading methods to course of solely new or up to date information effectively.

- Guarantee information high quality by way of profiling, cleaning, and validation inside ETL pipelines.

- Develop sturdy error dealing with and retry mechanisms to keep up ETL course of reliability and information integrity.

This text was revealed as part of the Information Science Blogathon.

Understanding Your Information Sources

Earlier than diving into ETL growth, it’s essential to have a complete understanding of your information sources. This contains figuring out the forms of information sources obtainable, corresponding to databases, recordsdata, APIs, and streaming sources, and understanding the construction, format, and high quality of the information inside every supply. By gaining insights into your information sources, you may higher plan your ETL technique and anticipate any challenges or complexities that will come up through the integration course of.

Deciding on the suitable instruments and applied sciences is crucial for constructing environment friendly ETL pipelines. Quite a few ETL instruments and frameworks can be found out there, every providing distinctive options and capabilities. Some standard choices embrace Apache Spark, Apache Airflow, Talend, Informatica, and Microsoft Azure Information Manufacturing unit. When selecting a device, contemplate scalability, ease of use, integration capabilities, and compatibility together with your current infrastructure. Moreover, consider whether or not the device helps the particular information sources and codecs it’s essential combine.

Parallel Information Processing

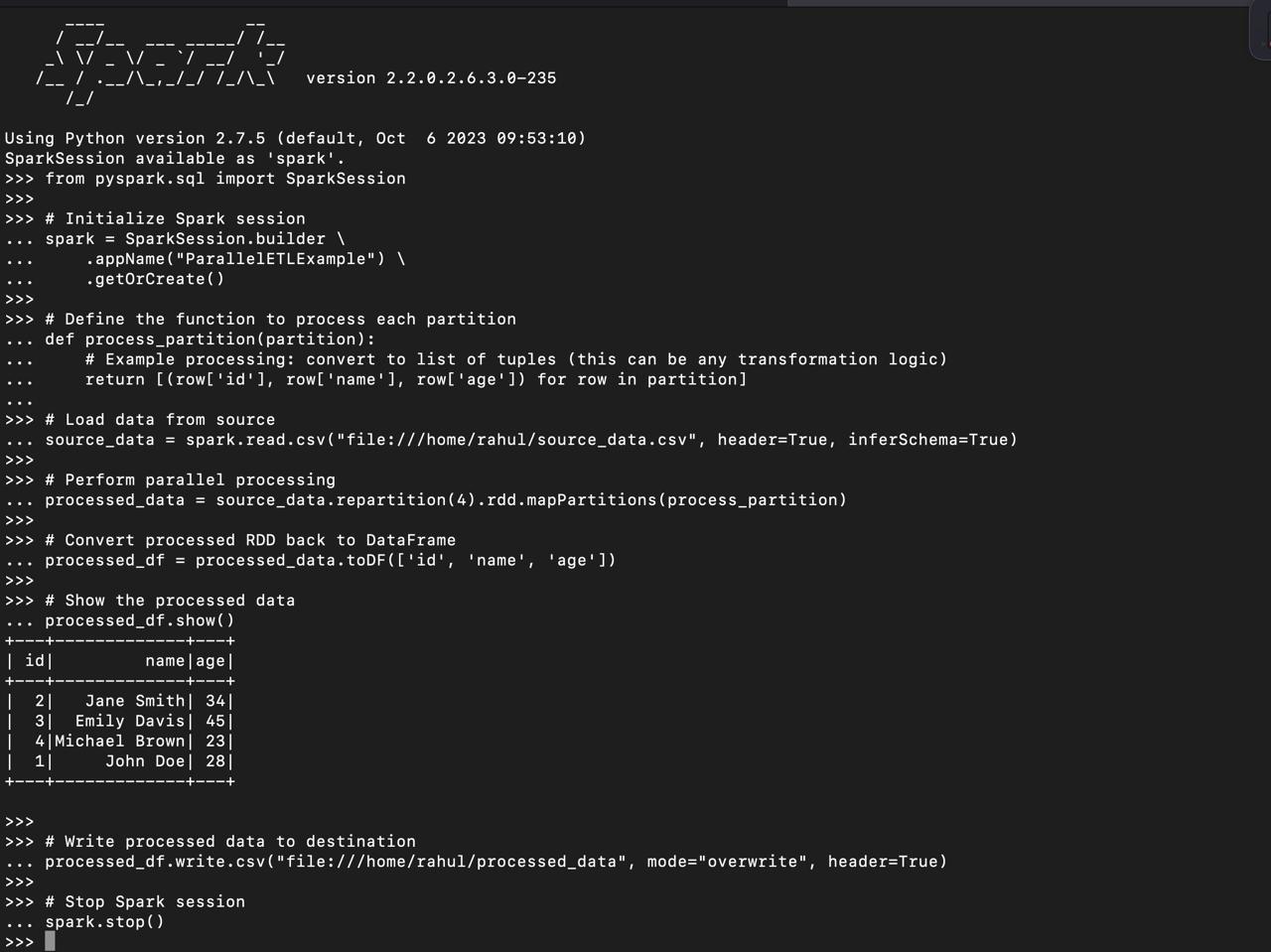

One extremely efficient strategy to improve ETL course of efficiency is by parallelizing information processing duties. This includes dividing these duties into smaller, unbiased models that may run concurrently throughout a number of processors or nodes. By harnessing the facility of distributed methods, parallel processing can dramatically scale back processing time. Apache Spark is a broadly used framework that helps parallel information processing throughout in depth clusters. By partitioning your information and using Spark’s capabilities, you may obtain substantial efficiency positive aspects in your ETL workflows.

To run the offered PySpark script, you could set up the required dependencies. Right here’s an inventory of the required dependencies and their set up instructions:

- PySpark: That is the first library for working with Apache Spark in Python.

- Pandas (non-obligatory if it’s essential manipulate information with Pandas earlier than or after Spark processing).

You may set up these dependencies utilizing pip:

pip set up pyspark pandasfrom pyspark.sql import SparkSession

# Initialize Spark session

spark = SparkSession.builder

.appName("ParallelETLExample")

.getOrCreate()

# Outline the operate to course of every partition

def process_partition(partition):

# Instance processing: convert to listing of tuples (this may be any transformation logic)

return [(row['id'], row['name'], row['age']) for row in partition]

# Load information from supply

source_data = spark.learn.csv("file:///house/rahul/source_data.csv", header=True, inferSchema=True)

# Carry out parallel processing

processed_data = source_data.repartition(4).rdd.mapPartitions(process_partition)

# Convert processed RDD again to DataFrame

processed_df = processed_data.toDF(['id', 'name', 'age'])

# Present the processed information

processed_df.present()

# Write processed information to vacation spot

processed_df.write.csv("file:///house/rahul/processed_data", mode="overwrite", header=True)

# Cease Spark session

spark.cease()

On this instance, we’re utilizing Apache Spark to parallelize information processing from a CSV supply. The repartition(4) technique distributes the information throughout 4 partitions for parallel processing, enhancing effectivity.

source_data.csv file, right here’s a small instance of how one can create it domestically:

id,title,age

1,John Doe,28

2,Jane Smith,34

3,Emily Davis,45

4,Michael Brown,23Implementing Incremental Loading

As a substitute of processing your complete dataset every time, think about using incremental loading methods to deal with solely new or up to date information. Incremental loading focuses on figuring out and extracting simply the information that has modified because the final ETL run, which reduces processing overhead and minimizes useful resource use. This method may be applied by sustaining metadata or utilizing change information seize (CDC) mechanisms to trace modifications in your information sources over time. By processing solely the incremental modifications, you may considerably increase the effectivity and efficiency of your ETL processes.

Detailed Steps and Instance Code

Let’s stroll by way of an instance to reveal how incremental loading may be applied utilizing SQL. We’ll create a easy situation with supply and goal tables and present learn how to load new information right into a staging desk and merge it into the goal desk.

Step 1: Create the Supply and Goal Tables

First, let’s create the supply and goal tables and insert some preliminary information into the supply desk.

sql

-- Create supply desk

CREATE TABLE source_table (

id INT PRIMARY KEY,

column1 VARCHAR(255),

column2 VARCHAR(255),

timestamp DATETIME

);

-- Insert preliminary information into supply desk

INSERT INTO source_table (id, column1, column2, timestamp) VALUES

(1, 'data1', 'info1', '2023-01-01 10:00:00'),

(2, 'data2', 'info2', '2023-01-02 10:00:00'),

(3, 'data3', 'info3', '2023-01-03 10:00:00');

-- Create goal desk

CREATE TABLE target_table (

id INT PRIMARY KEY,

column1 VARCHAR(255),

column2 VARCHAR(255),

timestamp DATETIME

);

On this SQL instance, we’re loading new information from a supply desk right into a staging desk primarily based on a timestamp column. Then, we use a merge operation to replace current data within the goal desk and insert new data from the staging desk.

Step 2: Create the Staging Desk

Subsequent, create the staging desk that quickly holds the brand new information extracted from the supply desk.

-- Create staging desk

CREATE TABLE staging_table (

id INT PRIMARY KEY,

column1 VARCHAR(255),

column2 VARCHAR(255),

timestamp DATETIME

);

Step 3: Load New Information into the Staging Desk

We’ll write a question to load new information from the supply desk into the staging desk. This question will choose data from the supply desk the place the timestamp is larger than the utmost timestamp within the goal desk.

-- Load new information into staging desk

INSERT INTO staging_table

SELECT *

FROM source_table

WHERE source_table.timestamp > (SELECT MAX(timestamp) FROM target_table);Step 4: Merge Information from Staging to Goal Desk

Lastly, we use a merge operation to replace current data within the goal desk and insert new data from the staging desk.

-- Merge staging information into goal desk

MERGE INTO target_table AS t

USING staging_table AS s

ON t.id = s.id

WHEN MATCHED THEN

UPDATE SET t.column1 = s.column1, t.column2 = s.column2, t.timestamp = s.timestamp

WHEN NOT MATCHED THEN

INSERT (id, column1, column2, timestamp)

VALUES (s.id, s.column1, s.column2, s.timestamp);

-- Clear the staging desk after the merge

TRUNCATE TABLE staging_table;Rationalization of Every Step

- Extract New Information: The INSERT INTO staging_table assertion extracts new or up to date rows from the source_table primarily based on the timestamp column. This ensures that solely the modifications because the final ETL run are processed.

- Merge Information: The MERGE INTO target_table assertion merges the information from the staging_table into the target_table.

- Clear Staging Desk: After the merge operation, the TRUNCATE TABLE staging_table assertion clears the staging desk to arrange it for the following ETL run.

Monitoring and Optimising Efficiency

Recurrently monitoring your ETL processes is essential for pinpointing bottlenecks and optimizing efficiency. Use instruments and frameworks like Apache Airflow, Prometheus, or Grafana to trace metrics corresponding to execution time, useful resource utilization, and information throughput. Leveraging these efficiency insights means that you can fine-tune ETL workflows, modify configurations, or scale infrastructure as wanted for steady effectivity enhancements. Moreover, implementing automated alerting and logging mechanisms may help you establish and handle efficiency points in actual time, guaranteeing your ETL processes stay clean and environment friendly

Information High quality Assurance

Making certain information high quality is essential for dependable evaluation and decision-making. Information high quality points can come up from numerous sources, together with inaccuracies, inconsistencies, duplicates, and lacking values. Implementing sturdy information high quality assurance processes as a part of your ETL pipeline may help establish and rectify such points early within the information integration course of. Information profiling, cleaning, validation guidelines, and outlier detection may be employed to enhance information high quality.

# Carry out information profiling

data_profile = source_data.describe()

# Determine duplicates

duplicate_rows = source_data.groupBy(source_data.columns).rely().the place("rely > 1")

# Information cleaning

cleaned_data = source_data.dropna()

# Validate information in opposition to predefined guidelines

validation_rules = {

"column1": lambda x: x > 0,

"column2": lambda x: isinstance(x, str),

}

invalid_rows = cleaned_data.filter ----(write Filter situations right here)...

On this Python instance, we carry out information profiling, establish duplicates, carry out information cleaning by eradicating null values, and validate information in opposition to predefined guidelines to make sure information high quality.

Error Dealing with and Retry Mechanisms

Regardless of finest efforts, errors can happen through the execution of ETL processes for numerous causes, corresponding to community failures, information format mismatches, or system crashes. Implementing error dealing with and retry mechanisms is crucial to make sure the robustness and reliability of your ETL pipeline. Logging, error notification, automated retries, and back-off methods may help mitigate failures and guarantee information integrity.

from tenacity import retry, stop_after_attempt, wait_fixed

@retry(cease=stop_after_attempt(3), wait=wait_fixed(2))

def process_data(information):

# Course of information

...

# Simulate potential error

if error_condition:

increase Exception("Error processing information")

attempt:

process_data(information)

besides Exception as e:

# Log error and notify stakeholders

logger.error(f"Error processing information: {e}")

notify_stakeholders("ETL course of encountered an error")

This Python instance defines a operate to course of information with retry and back-off mechanisms. If an error happens, the operate retries the operation as much as 3 times with a hard and fast wait time between makes an attempt.

Scalability and Useful resource Administration

As information volumes and processing necessities develop, guaranteeing the scalability of your ETL pipeline turns into paramount. Scalability includes effectively dealing with rising information volumes and processing calls for with out compromising efficiency or reliability. Implementing scalable architectures and useful resource administration methods permits your ETL pipeline to scale seamlessly with rising information masses and consumer calls for. Methods corresponding to horizontal scaling, auto-scaling, useful resource pooling, and workload administration may help optimize useful resource utilization and guarantee constant efficiency throughout various workloads and information volumes. Moreover, leveraging cloud-based infrastructure and managed providers can present elastic scalability and alleviate the burden of infrastructure administration, permitting you to concentrate on constructing sturdy and scalable ETL processes.

Conclusion

Environment friendly information integration is crucial for organizations to unlock the total potential of their information property and drive data-driven decision-making. By implementing methods corresponding to parallelizing information processing, incremental loading, and efficiency optimization, you may streamline your ETL processes and make sure the well timed supply of high-quality insights. Adapt these methods to your particular use case and leverage the appropriate instruments and applied sciences to realize optimum outcomes. With a well-designed and environment friendly ETL pipeline, you may speed up your information integration efforts and acquire a aggressive edge in at present’s fast-paced enterprise atmosphere.

Key Takeaways

- Understanding your information sources is essential for efficient ETL growth. It means that you can anticipate challenges and plan your technique accordingly.

- Selecting the best instruments and applied sciences primarily based on scalability and compatibility can streamline your ETL processes and enhance effectivity.

- Parallelizing information processing duties utilizing frameworks like Apache Spark can considerably scale back processing time and improve efficiency.

- Implementing incremental loading methods and sturdy error-handling mechanisms ensures information integrity and reliability in your ETL pipeline.

- Scalability and useful resource administration are important concerns to accommodate rising information volumes and processing necessities whereas sustaining optimum efficiency and value effectivity.

The media proven on this article should not owned by Analytics Vidhya and is used on the Writer’s discretion.

Steadily Requested Questions

A. ETL stands for Extract, Rework, Load. It’s a course of used to extract information from numerous sources, remodel it right into a constant format, and cargo it right into a goal system for evaluation. ETL is essential for integrating information from disparate sources and making it accessible for analytics and decision-making.

A. You may enhance the efficiency of your ETL processes by parallelizing information processing duties, implementing incremental loading methods to course of solely new or up to date information, optimizing useful resource allocation and utilization, and monitoring and optimizing efficiency frequently.

A. Frequent challenges in ETL growth embrace coping with various information sources and codecs, guaranteeing information high quality and integrity, gracefully dealing with errors and exceptions, managing scalability and useful resource constraints, and assembly efficiency and latency necessities.

A. A number of ETL instruments and applied sciences can be found, together with Apache Spark, Apache Airflow, Talend, Informatica, Microsoft Azure Information Manufacturing unit, and AWS Glue. The selection of device depends upon components corresponding to scalability, ease of use, integration capabilities, and compatibility with current infrastructure.

A. Making certain information high quality in ETL processes includes implementing information profiling to grasp the construction and high quality of information, performing information cleaning and validation to appropriate errors and inconsistencies, establishing information high quality metrics and guidelines, and monitoring information high quality constantly all through the ETL pipeline.