Introduction

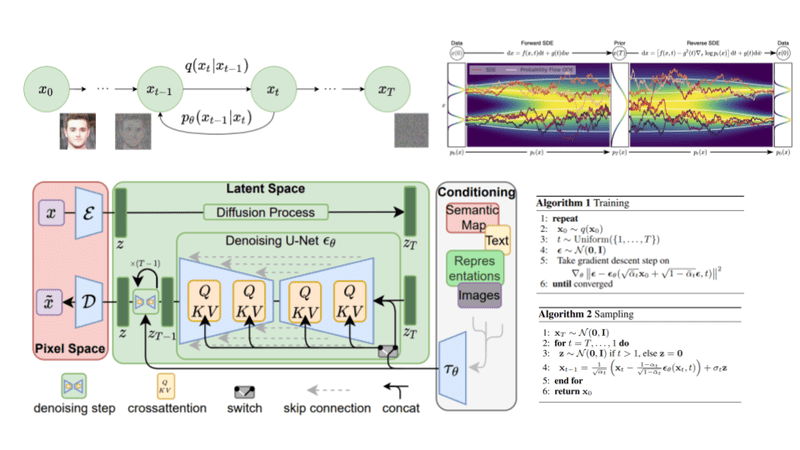

Diffusion-based generative models were first introduced in 2015, and the publication of “Denoising Diffusion Probabilistic Models” (DDPMs) by Ho et al. in 2020 was the catalyst for their widespread adoption. Diffusion probabilistic modeling is an active area of research that shows a lot of promise for image generation. The fundamental idea behind diffusion models is to break image generation into a sequential application of denoising autoencoders. Reverse diffusion then carries out the “denoising” iteratively, in a sequence of small steps that bring us back to the data subspace.

The Denoising Diffusion Probabilistic Model (DDPM) is a generative model. Its purpose is to produce new images that resemble an original collection of images by using denoising techniques. The DDPM operates as described below:

- Using a process called forward diffusion, the DDPM gradually adds Gaussian noise to the original image.

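- As the forward steps proceed, the image becomes progressively noisier. In the schedule used by Ho et al., the variance of the noise added at each step increases, and after enough steps the image is close to pure Gaussian noise.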

- The DDPM uses a neural network to predict the noise that was added to the image at each step of the forward diffusion process.

- The DDPM uses the predicted noise in what is called the “reverse process,” a sequence of steps that removes the noise from the image.

- Starting from pure noise, the reverse process generates a new image that resembles the original training images.

- The DDPM is trained to predict the noise added at each step of the forward diffusion process. The training objective is derived from a variational bound on the data likelihood.
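The forward process in the list above can be sketched in a few lines. This is a minimal illustration in plain Python (no PyTorch, to keep it dependency-free), not the package's implementation. It assumes the linear variance schedule from Ho et al. (per-step variances from 1e-4 to 0.02 over 1000 steps); the helper names `linear_beta_schedule`, `cumulative_alphas`, `q_sample`, and `estimate_x0` are made up for this example.

```python
import math
import random

def linear_beta_schedule(timesteps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that increase linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (timesteps - 1)
    return [beta_start + i * step for i in range(timesteps)]

def cumulative_alphas(betas):
    """alpha_bar_t: product of (1 - beta_s) over all steps s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def q_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t of the forward process for a list of pixels."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    xt = [signal_scale * x + noise_scale * e for x, e in zip(x0, noise)]
    return xt, noise

def estimate_x0(xt, noise, t, alpha_bars):
    """Invert q_sample when the noise is known (or predicted by a network)."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [(v - noise_scale * e) / signal_scale for v, e in zip(xt, noise)]

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)

x0 = [0.5, -0.25, 1.0, 0.0]            # a toy "image" of four pixel values
xt, noise = q_sample(x0, 999, alpha_bars, rng)
recovered = estimate_x0(xt, noise, 999, alpha_bars)
```

With this schedule the last entry of `alpha_bars` is close to zero, so the final noised sample is almost pure noise, yet knowing the exact noise lets `estimate_x0` recover the original values. This is the intuition for why predicting the noise is enough to denoise.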

Implementation with PyTorch

Installation

If you are using PyTorch, you can install the denoising_diffusion_pytorch package to get an implementation of the denoising diffusion model.

    $ pip install denoising_diffusion_pytorch

Usage

The code snippet below shows how to use the denoising diffusion model in PyTorch.

    import torch
    from denoising_diffusion_pytorch import Unet, GaussianDiffusion

    model = Unet(
        dim = 64,
        dim_mults = (1, 2, 4, 8)
    )

    diffusion = GaussianDiffusion(
        model,
        image_size = 128,
        timesteps = 1000    # number of steps
    )

    training_images = torch.rand(8, 3, 128, 128) # images are normalized from 0 to 1
    loss = diffusion(training_images)
    loss.backward()

    # after a lot of training

    sampled_images = diffusion.sample(batch_size = 4)
    sampled_images.shape # (4, 3, 128, 128)

- The above code uses the denoising_diffusion_pytorch package’s Unet and GaussianDiffusion classes.

- The GaussianDiffusion class wraps the denoising model and adds further parameters such as the image size and the number of timesteps. The Unet defined here has a base channel width of 64 (dim), and dim_mults = (1, 2, 4, 8) sets the channel multiplier at each resolution stage of the network.

- The training_images variable is a random tensor standing in for a batch of eight training images with values normalized to the range 0 to 1. Passing it to the diffusion object adds noise to the images and runs the denoising model on the result.

- The loss variable holds the denoising loss computed by the diffusion model.

- Calling backward() on the loss computes the gradients of the loss with respect to the model parameters.

- The sampled_images variable is a tensor containing four images generated by the diffusion model through the sample() function.
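To make the loss in the bullets above more concrete, here is a conceptual sketch of one loss evaluation, written in plain Python rather than PyTorch. It assumes a linear variance schedule and a plain mean squared error on the predicted noise; the package's actual objective may use a different prediction target and loss weighting. The names `make_alpha_bars`, `noise_prediction_loss`, `zero_predictor`, and `oracle_predictor` are hypothetical and exist only for this example.

```python
import math
import random

def make_alpha_bars(timesteps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fractions for a linear variance schedule (assumed)."""
    alpha_bars, running = [], 1.0
    for i in range(timesteps):
        beta = beta_start + (beta_end - beta_start) * i / (timesteps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_prediction_loss(x0, predict_noise, alpha_bars, rng):
    """One loss evaluation for a single flattened image x0."""
    t = rng.randrange(len(alpha_bars))             # pick a random timestep
    noise = [rng.gauss(0.0, 1.0) for _ in x0]      # the noise to be predicted
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    xt = [signal_scale * x + noise_scale * e for x, e in zip(x0, noise)]
    predicted = predict_noise(xt, t)               # stands in for the U-Net
    return sum((p - e) ** 2 for p, e in zip(predicted, noise)) / len(x0)

alpha_bars = make_alpha_bars()
x0 = [0.2, 0.8, 0.5, 0.1]

def zero_predictor(xt, t):
    """An untrained stand-in that always predicts 'no noise'."""
    return [0.0] * len(xt)

def oracle_predictor(xt, t):
    """A perfect predictor that cheats by knowing x0; used only as a check."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [(v - signal_scale * x) / noise_scale for v, x in zip(xt, x0)]

loss_untrained = noise_prediction_loss(x0, zero_predictor, alpha_bars, random.Random(0))
loss_oracle = noise_prediction_loss(x0, oracle_predictor, alpha_bars, random.Random(0))
```

The oracle drives the loss to essentially zero while the zero predictor does not. Training moves the network from the second case toward the first.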

Alternatively, the Trainer class makes it easy to train a model by simply passing in a folder name and the desired image dimensions.

    from denoising_diffusion_pytorch import Unet, GaussianDiffusion, Trainer

    model = Unet(
        dim = 64,
        dim_mults = (1, 2, 4, 8)
    )

    diffusion = GaussianDiffusion(
        model,
        image_size = 128,
        timesteps = 1000,           # number of steps
        sampling_timesteps = 250    # number of sampling timesteps (using DDIM for faster inference)
    )

    trainer = Trainer(
        diffusion,
        'path/to/your/images',
        train_batch_size = 32,
        train_lr = 8e-5,
        train_num_steps = 700000,         # total training steps
        gradient_accumulate_every = 2,    # gradient accumulation steps
        ema_decay = 0.995,                # exponential moving average decay
        amp = True,                       # turn on mixed precision
        calculate_fid = True              # whether to calculate FID during training
    )

    trainer.train()

- The Trainer class is where all of the training-specific settings are configured: the diffusion object, the path to the training images, the batch size, the learning rate, the number of training steps, the gradient accumulation steps, the exponential moving average decay, the mixed-precision toggle, and the Fréchet Inception Distance (FID) calculation toggle.

- To train the model, we call the train method on the trainer object.

- Keep in mind that you need to change 'path/to/your/images' to point to the directory that holds your training images.

- The gradient_accumulate_every parameter of the Trainer class sets the number of batches whose gradients are accumulated before the model parameters are updated.

- The ema_decay = 0.995 parameter of the Trainer class sets the decay rate of the exponential moving average (EMA) of the model parameters that is maintained during training.

- The Fréchet Inception Distance (FID) is commonly used to evaluate samples from Generative Adversarial Networks (GANs) and other generative models. It measures how similar the distribution of real images and the distribution of generated images are in feature space. A lower FID score indicates that the generated images more closely resemble the real ones, which suggests a better generative model.
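Two of the settings above reduce to simple arithmetic, sketched here in plain Python. This assumes the textbook EMA update and that each optimizer step accumulates gradients over `gradient_accumulate_every` batches; the package's own EMA handling may add details such as warm-up. The function name `ema_update` is hypothetical.

```python
def ema_update(ema_params, params, decay=0.995):
    """Textbook EMA: keep `decay` of the old average, blend in the new value."""
    return [decay * e + (1.0 - decay) * p for e, p in zip(ema_params, params)]

# Gradient accumulation: gradients from several batches feed one optimizer step.
train_batch_size = 32
gradient_accumulate_every = 2
effective_batch_size = train_batch_size * gradient_accumulate_every  # 64

# EMA of a single toy parameter whose "trained" value stays at 1.0.
ema = [0.0]
for _ in range(1000):
    ema = ema_update(ema, [1.0])
```

With a decay of 0.995 the average moves slowly: each update shifts it by only 0.5% of the gap to the current parameters, which smooths out step-to-step fluctuations in the weights.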

Conclusion

Overall, the DDPM is a powerful generative model that can produce excellent results when used to generate new images. During training, noise is added to the original images and the model learns to predict that noise at each step of the forward diffusion process. At generation time, the model removes noise step by step to produce a new image that resembles the training images. The DDPM has been used in numerous contexts thanks to its ability to produce high-quality images without the need for adversarial training.

References

Denoising Diffusion Probabilistic Models

We present high quality image synthesis results using diffusion probabilistic models, a class of latent variable models inspired by considerations from nonequilibrium thermodynamics. Our best results are obtained by training on a weighted variational bound designed according to a novel connection be…

Denoising Diffusion Probabilistic Models (DDPM)

PyTorch implementation and tutorial of the paper Denoising Diffusion Probabilistic Models (DDPM).

How diffusion models work: the math from scratch | AI Summer

A deep dive into the mathematics and the intuition of diffusion models. Learn how the diffusion process is formulated, how we can guide the diffusion, the main principle behind stable diffusion, and their connections to score-based models.

GitHub – lucidrains/denoising-diffusion-pytorch: Implementation of Denoising Diffusion Probabilistic Model in Pytorch