Bring this project to life

In our search for the best image synthesis models, Stable Diffusion has come up time and again as the best available open source model. Whether the task is text to image, image to image, video generation, or any of the wide variety of extended capabilities of diffusion modeling for image generation, versions of Stable Diffusion continue to be the best and most popular models for image synthesis tasks. This is largely due to the models' incredible versatility, which lets them handle all kinds of physical and abstract combinations of objects in the latent space, but also due to their widespread adoption by both the AI developer community and parts of the broader population.

We have talked frequently about our support for the AUTOMATIC1111 Stable Diffusion Web UI as the best platform to run Stable Diffusion in the cloud, and especially on Paperspace. The Stable Diffusion Web UI was built using the powerful Gradio library to bring a host of interactive capabilities for serving the models to the user, and it has a plethora of extensions that extend this utility further.

One topic we have not covered about the Stable Diffusion Web UI (and by extension our favorite fork of this project, Fast Stable Diffusion) is its built-in FastAPI functionality. FastAPI is a notable and powerful Python framework for creating RESTful APIs. Using the FastAPI backend of the Web UI, we can enable a whole new degree of input and output control over a generative workflow with Stable Diffusion, including integrating multiple computer systems, creating Discord chatbots, and much more.

In this article, we are going to run a short demonstration of how to interact with the Stable Diffusion Web UI's FastAPI endpoint. Readers should expect to be able to use this information to recreate the demo we make below and to integrate relevant content from it into their own applications. Before proceeding, check out some of our other tutorials like this one on training SD XL LoRA models.

Setting up Stable Diffusion

Setting up the model in a Paperspace Notebook is actually quite simple. All we need to do is click on this link (also found in the Run on Paperspace link at the start of this article). This will take us directly into a workspace with all the required files and packages at the click of the Start Notebook button.

This uses the Fast Stable Diffusion implementation of the AUTOMATIC1111 Stable Diffusion Web UI. Thanks go to the creator of the project, TheLastBen, who optimizes the PPS repo to work directly with Paperspace.

Once the Notebook has started running, we will want to open the PPS-A1111.ipynb notebook using the file navigator on the left side of the screen. From there, all we need to do is click the Run All button in the top right of the screen. This will sequentially execute the code cells therein, which download all the required packages and relevant updates, download any missing model checkpoint files for standard Stable Diffusion releases, give the option to download ControlNet models (these need to be changed manually), and then launch the web application. Scroll to the very bottom of the notebook to get the public Gradio application link, which will begin with https://tensorboard-.

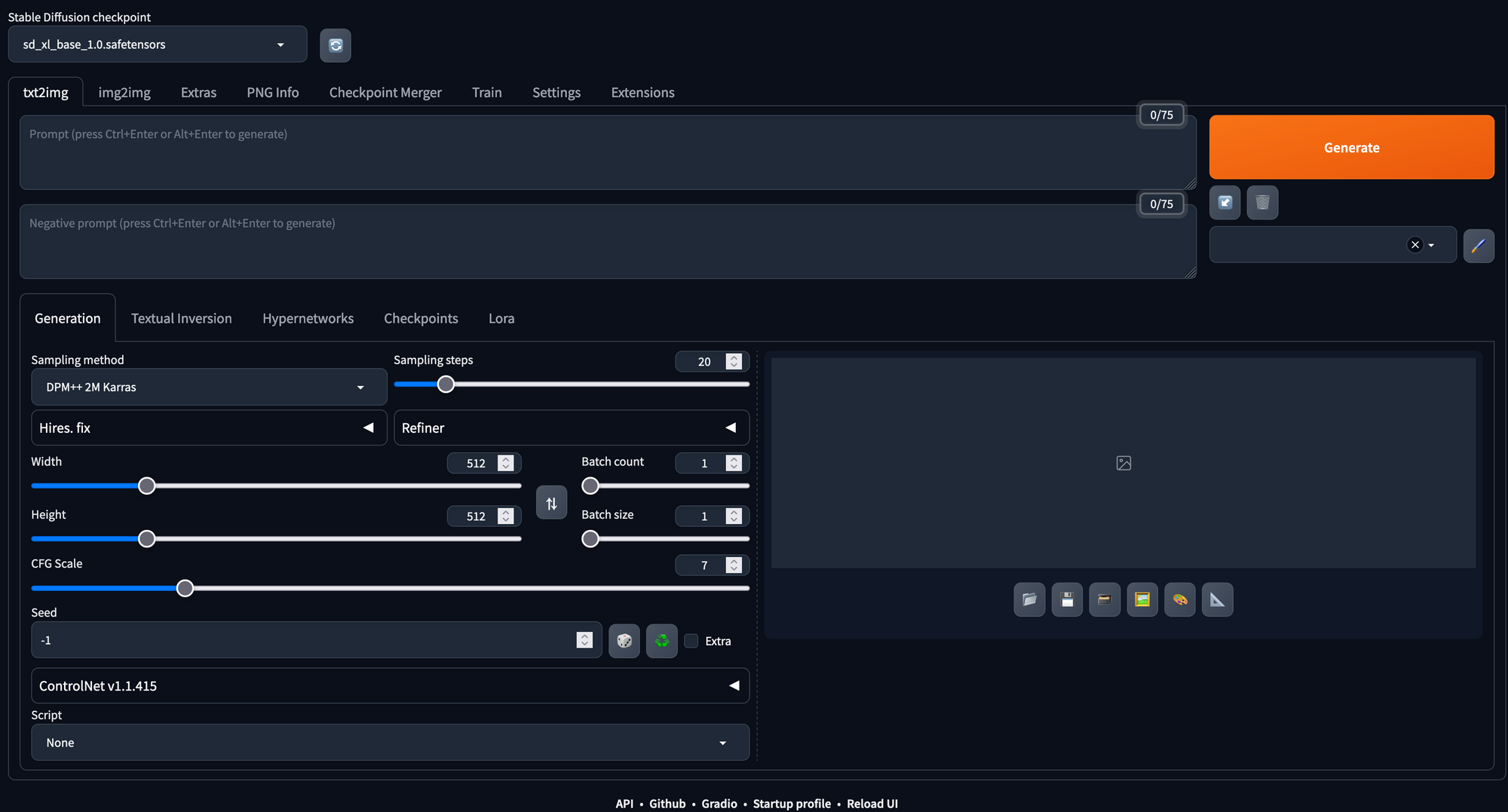

Using the Fast Stable Diffusion application from the web browser

Clicking the link will take us directly into the web app in a new tab. From here, we can begin generating images immediately. Hitting the Generate button will create a random image, but we can add text to the prompt and negative prompt fields to control this synthesis, just as we would add code in a Diffusers inference implementation with the model. All the hyperparameters can be adjusted using simple, easy-to-use sliders and text fields in the page.

The web app makes it easy to run text to image, image to image, and super resolution synthesis with Stable Diffusion with little to no hassle. Furthermore, it has a wide variety of additional capabilities like model merging, textual inversion embedding creation and training, and nearly 100 open source extensions that are compatible with the application.

For more details about how to run the application from the browser to generate AI artwork with Stable Diffusion, be sure to check out some of our earlier tutorials in the Stable Diffusion tab. Let's now take a look at the Gradio application's FastAPI documentation, and learn how we can treat the web UI as an API endpoint.
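One way to explore the API without leaving Python is to read the machine-readable schema that FastAPI applications usually publish. The sketch below assumes the Web UI serves FastAPI's default `/openapi.json` route; the helper names `fetch_schema` and `list_api_routes` are hypothetical and only for illustration.

```python
import json
import urllib.request

# HTTP verbs that may appear as keys of an OpenAPI path item.
HTTP_METHODS = {"get", "post", "put", "delete", "patch", "head", "options"}


def list_api_routes(schema: dict, prefix: str = "/sdapi/") -> list[str]:
    """Return 'METHOD /path' strings for every route under the given prefix."""
    routes = []
    for path, operations in sorted(schema.get("paths", {}).items()):
        if not path.startswith(prefix):
            continue
        for method in operations:
            # Skip non-method keys such as "parameters" or "summary".
            if method.lower() in HTTP_METHODS:
                routes.append(f"{method.upper()} {path}")
    return routes


def fetch_schema(base_url: str, timeout: float = 30.0) -> dict:
    """Download the schema, assuming it is served at /openapi.json."""
    schema_url = base_url.rstrip("/") + "/openapi.json"
    with urllib.request.urlopen(schema_url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a running Web UI, `list_api_routes(fetch_schema(url))` should list the available `/sdapi/` routes, provided the schema route is reachable on your deployment.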

Using the Stable Diffusion Web UI FastAPI endpoint


Now that we have our endpoint created for the Fast Stable Diffusion Web UI, we can begin using the endpoint for development in other scenarios. This can be used to plug our generated images in anywhere, such as chatbots and other application integrations. These allow users of external applications to take advantage of the Paperspace GPU speed to generate images without any setup or hassle. For example, this is likely how many of the popular Discord chatbots are integrated to provide images with Stable Diffusion to users on the platform.

From here, we will need to either open a new workspace or Notebook, or switch to our local machine, since the cell running the endpoint needs to be left undisturbed. Restarting it will change the URL.
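Because the URL changes whenever the endpoint cell is restarted, it can help to keep it in a single place instead of pasting it into every snippet. The following is a minimal sketch of that idea: the environment variable name `SD_WEBUI_URL` and the helper `endpoint` are assumptions made for illustration, not part of the Web UI.

```python
import os

# Fallback used when the (hypothetical) SD_WEBUI_URL variable is not set.
DEFAULT_URL = "https://tensorboard-nalvsnv752.clg07azjl.paperspacegradient.com"


def endpoint(base_url: str, route: str) -> str:
    """Join the Web UI base URL and an API route with exactly one slash."""
    return base_url.rstrip("/") + "/" + route.lstrip("/")


# Read the current URL once, then derive every API route from it.
base_url = os.environ.get("SD_WEBUI_URL", DEFAULT_URL)
txt2img_url = endpoint(base_url, "/sdapi/v1/txt2img")
print(txt2img_url)
```

After a restart, only the environment variable (or the fallback constant) needs updating.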

Open up a new IPython Notebook in your environment of choice, or use the link above to open this on a Paperspace Notebook running on a Free GPU. To get started with the API, use this sample code snippet from the API wiki, and replace the url value inside with your Web UI's URL:

import json

import requests

import io

import base64

from PIL import Image

# Replace this with the URL of your own running Web UI
url = "https://tensorboard-nalvsnv752.clg07azjl.paperspacegradient.com"

payload = {

"prompt": "puppy dog",

"steps": 50,

"seed": 5

}

# Send the generation request to the txt2img route
response = requests.post(url=f'{url}/sdapi/v1/txt2img', json=payload)

r = response.json()

# The first returned image is a base64 string; decode it into a PIL image
image = Image.open(io.BytesIO(base64.b64decode(r['images'][0])))

# image.save('output.png')

# display() is available inside IPython and Jupyter notebooks
display(image)

This uses the payload dictionary object to carry the prompt, steps, and seed values we want to the model through the endpoint, generate an image using these parameters, and return it to the local environment to be decoded and displayed. Below is an example image we made following these steps:

As we can see, it is very simple to query the model with the requests library and synthesize images just like we would using the buttons and fields in the application itself. Let's look at a more detailed potential payload for the model:

import json

import requests

import io

import base64

from PIL import Image

# Replace this with the URL of your own running Web UI
url = "https://tensorboard-nalvsnv752.clg07azjl.paperspacegradient.com"

payload = {

"prompt": "puppy dog",

"negative_prompt": "yellow fur",

"styles": [

"string"

],

"seed": 5, # we can re-use the value to make it repeatable

"subseed": -1, # add variation to the existing seed

"subseed_strength": 0, # strength of the variation

"seed_resize_from_h": -1, # generate as if using the seed at a different resolution

"seed_resize_from_w": -1, # generate as if using the seed at a different resolution

"sampler_name": "UniPC", # which sampler to use, e.g. DPM++ 2M Karras or UniPC

"batch_size": 1, # how many images to generate in each run

"n_iter": 1, # how many runs to generate images for

"steps": 50, # how many diffusion steps to take to generate the image (more is generally better, with diminishing returns after 50)

"cfg_scale": 7, # how strongly the prompt affects the generated image

"width": 512, # width of generated image

"height": 512, # height of generated image

"restore_faces": True, # use GFPGAN to restore generated faces

"tiling": True, # create a repeating pattern like a wallpaper

"do_not_save_samples": False,

"do_not_save_grid": False,

"eta": 0, # a noise multiplier that affects certain samplers; changing the setting allows variations of the image at the same seed, according to ClashSAN https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/4079#discussioncomment-4022543

"denoising_strength": 0, # how much noise to add to the input image before reverse diffusion, only relevant in img2img scenarios

"s_min_uncond": 0, # affects gamma, can make the outputs more or less stochastic by adding noise

"s_churn": 0, # affects gamma

"s_tmax": 0, # affects gamma

"s_tmin": 0, # affects gamma

"s_noise": 0, # affects gamma

"override_settings": {}, # new default settings

"override_settings_restore_afterwards": True,

"refiner_checkpoint": None, # which checkpoint to use as refiner in SD XL scenarios

"refiner_switch_at": 0, # which step to switch to the refiner on

"disable_extra_networks": False, # disables hypernetworks and LoRAs

"comments": {},

"enable_hr": False, # turns on high resolution

"firstphase_width": 0, # where to limit high resolution scaling

"firstphase_height": 0, # where to limit high resolution scaling

"hr_scale": 2, # how much to upscale

"hr_upscaler": None, # which upscaler to use, default latent diffusion with same model

"hr_second_pass_steps": 0, # how many steps to upscale with

"hr_resize_x": 0, # size to resize x to

"hr_resize_y": 0, # size to resize y to

"hr_checkpoint_name": None, # which checkpoint to use for the high resolution pass

"hr_sampler_name": None, # which sampler to use in upscaling

"hr_prompt": "", # high resolution prompt, same as input prompt

"hr_negative_prompt": "", # high resolution negative prompt, same as input negative prompt

"sampler_index": "Euler", # alias for sampler, redundancy

"script_name": None, # script to run generation through

"script_args": [],

"send_images": True, # send output to be received in response

"save_images": False, # save output to predesignated outputs destination

"alwayson_scripts": {} # additional scripts for affecting model outputs

}

# Send the generation request to the txt2img route
response = requests.post(url=f'{url}/sdapi/v1/txt2img', json=payload)

r = response.json()

# The first returned image is a base64 string; decode it into a PIL image
image = Image.open(io.BytesIO(base64.b64decode(r['images'][0])))

image.save('output.png')

# display() is available inside IPython and Jupyter notebooks
display(image)
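The snippets above decode only the first entry of the `images` list. When `batch_size` or `n_iter` is raised, the response is expected to carry several base64 strings, so a small helper that walks the whole list can be handy. This is a sketch using only the standard library: the function names, the `outputs` folder, and the file naming are hypothetical, and stripping a data-URL header is a defensive assumption rather than documented behavior.

```python
import base64
from pathlib import Path

# Every PNG file starts with these eight bytes.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def decode_images(response_json: dict) -> list[bytes]:
    """Return the raw bytes of every image in a txt2img-style response."""
    decoded = []
    for item in response_json.get("images", []):
        # Drop an optional "data:image/png;base64," header if one is present.
        b64_data = item.split(",", 1)[-1]
        decoded.append(base64.b64decode(b64_data))
    return decoded


def save_images(response_json: dict, out_dir: str = "outputs") -> list[Path]:
    """Write each decoded image to out_dir and return the written paths."""
    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, data in enumerate(decode_images(response_json)):
        # Use .png only when the bytes really look like a PNG file.
        suffix = ".png" if data.startswith(PNG_SIGNATURE) else ".bin"
        path = folder / f"image_{index}{suffix}"
        path.write_bytes(data)
        paths.append(path)
    return paths
```

Calling `save_images(r)` on the parsed response from either request would then write one file per returned image.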

Using the settings above, we were able to generate a different image. Notably, we added a negative prompt for “yellow fur” and changed the sampler to UniPC. With these adjusted settings, we get the image below:

Now that we have everything set up, we are good to go! It is now possible to integrate the Stable Diffusion Web UI with whatever project we want. For example, it is possible to integrate it with a chatbot application for Discord.
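For an integration such as a chatbot, it is usually more convenient to wrap the request in a function than to copy the full payload around. Below is a minimal sketch of one possible wrapper. It uses the standard library's `urllib` instead of `requests` so that it has no extra dependencies; the names `DEFAULTS`, `build_payload`, and `txt2img` are hypothetical, and the default values are simply the ones used earlier in this article.

```python
import json
import urllib.request

# Defaults for this sketch; any other payload key can be passed as an override.
DEFAULTS = {"steps": 50, "seed": 5, "sampler_name": "UniPC", "cfg_scale": 7}


def build_payload(prompt: str, **overrides) -> dict:
    """Merge the defaults, caller overrides, and the prompt into one payload."""
    payload = dict(DEFAULTS)  # copy so the module-level defaults stay untouched
    payload.update(overrides)
    payload["prompt"] = prompt
    return payload


def txt2img(base_url: str, payload: dict, timeout: float = 300.0) -> dict:
    """POST the payload to /sdapi/v1/txt2img and return the parsed JSON reply."""
    request = urllib.request.Request(
        url=base_url.rstrip("/") + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises an HTTPError for non-success status codes.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example call (needs a running Web UI, so it is left commented out):
# result = txt2img(url, build_payload("puppy dog", negative_prompt="yellow fur"))
```

A bot command handler could then call `build_payload` with the user's text and pass the result to `txt2img`, keeping the HTTP details in one place.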

Closing Thoughts

In this article, we revisited the Stable Diffusion Web UI by AUTOMATIC1111 and their contributors with the explicit goal of interacting with the Gradio application's FastAPI backend. To do this, we explored accessing the API and the adjustable settings available when POSTing a txt2img request, and then implemented it in Python to run with Paperspace or on your local machine.