Trendy giant language fashions (LLMs) are very highly effective and turning into extra so by the day. Nonetheless, their energy is generic: they’re good at answering questions, following directions, and so forth., on public content material on the web, however can quickly fall away when coping with specialised topics outdoors of what they have been educated on. They both have no idea what to output, or worse, fabricate one thing incorrect that may seem authentic.

For a lot of companies and purposes, it’s in exactly this realm of specialised info that an LLM can present its best value-add, as a result of it permits their customers to get the element info that they want from a product. An instance is answering questions on an organization’s documentation that will not in any other case be public or a part of a coaching set.

Retrieval-augmented technology (RAG) permits this worth to be enabled on LLMs by supplementing their generic coaching knowledge with additional or specialised info, together with:

- Details about a selected matter in higher element

- Information that was not in any other case public

- Info that’s new or has modified because the base mannequin was educated

RAG may be approached by doing issues in a extra low-code approach, reminiscent of utilizing a vendor product that abstracts away the LLM or different code getting used, or in a extra open however decrease degree approach by immediately utilizing numerous rising frameworks reminiscent of LangChain, LlamaIndex, and DSPy.

DigitalOcean’s Sammy AI Assistant chatbot in our documentation exposes the previous method to the consumer. Right here, we deal with the latter, displaying how a couple of traces of code mean you can increase a generic LLM with your individual webpages or paperwork.

Particularly, we instantiate:

- LangChain

- GPT-4o

- Webpages and PDFs

and we run them on an ordinary DigitalOcean+Paperspace Gradient Pocket book. We present how a mannequin with RAG offers considerably higher solutions on our new knowledge than the identical mannequin with out it. The chain formulation of LangChain makes this comparability of RAG versus base simple.

The ensuing fashions may then be deployed to manufacturing on an endpoint within the normal approach. Right here our focus is on the development of RAG versus a base mannequin, and the benefit of getting your individual knowledge into the method.

Lastly, it is price noting that different approaches to enhancing mannequin outputs moreover RAG can be found, reminiscent of mannequin finetuning. We’ve got different blogposts that includes this, reminiscent of right here. Finetuning is extra computationally intensive than RAG.

A few different current RAG posts on the Paperspace weblog are right here and right here.

Why this method?

What makes the actual method on this blogpost enticing is that it is rather normal. By beginning a DigitalOcean+Paperspace (DO+PS) Gradient Pocket book, you may:

- Use any LLM mannequin accessible by an API from LangChain (there are rather a lot, free or paid)

- Use any certainly one of a lot of supported vector databases for the mannequin embeddings

- Increase with any webpage or PDF that it will probably learn, both public or your individual (billions)

- Put the outcome into manufacturing and prolong the work in some ways: internet software, different LLM fashions reminiscent of code, and so forth

As a result of DO+PS is generic, the identical concept would additionally work with LlamaIndex, DSPy, or different rising frameworks.

Elements

LangChain

LangChain is certainly one of a number of rising frameworks that allow working with LLMs extra simply and placing them into manufacturing. Whereas which framework will win out long-term will not be but established, this one works properly for our functions right here of demonstrating working RAG on DO+PS.

GPT-4o

We wish one of the best solutions to our questions, and LangChain can name APIs, so we use one of the best mannequin: OpenAI’s GPT-4o.

This can be a paid mannequin, however different OpenAI fashions, paid, free or open supply fashions from elsewhere, may be substituted.

For instance, we regarded on the solutions from the older GPT-3.5 Turbo as properly. These are sometimes virtually pretty much as good as GPT-4o for the questions right here, though GPT-4o is best at recognizing context reminiscent of when it’s being requested a query that’s not answered inside our paperwork, however the immediate requested it to make use of the paperwork.

Chroma Vector Database

RAG works by discovering the shortest “distance” between your question and your added paperwork. Because of this the information must be saved in a kind for which distance between arbitrary textual content may be measured. This way is embeddings, and works properly in a vector database.

LangChain has assist for a lot of vector databases, and the instance right here instantiates one which makes use of Chroma.

DigitalOcean has present assist for integrations with databases, and so the usage of a vector one is a pure extension inside our infrastructure.

Setup

💡

All instructions proven on this blogpost are Python from the pocket book and never bash from the terminal.

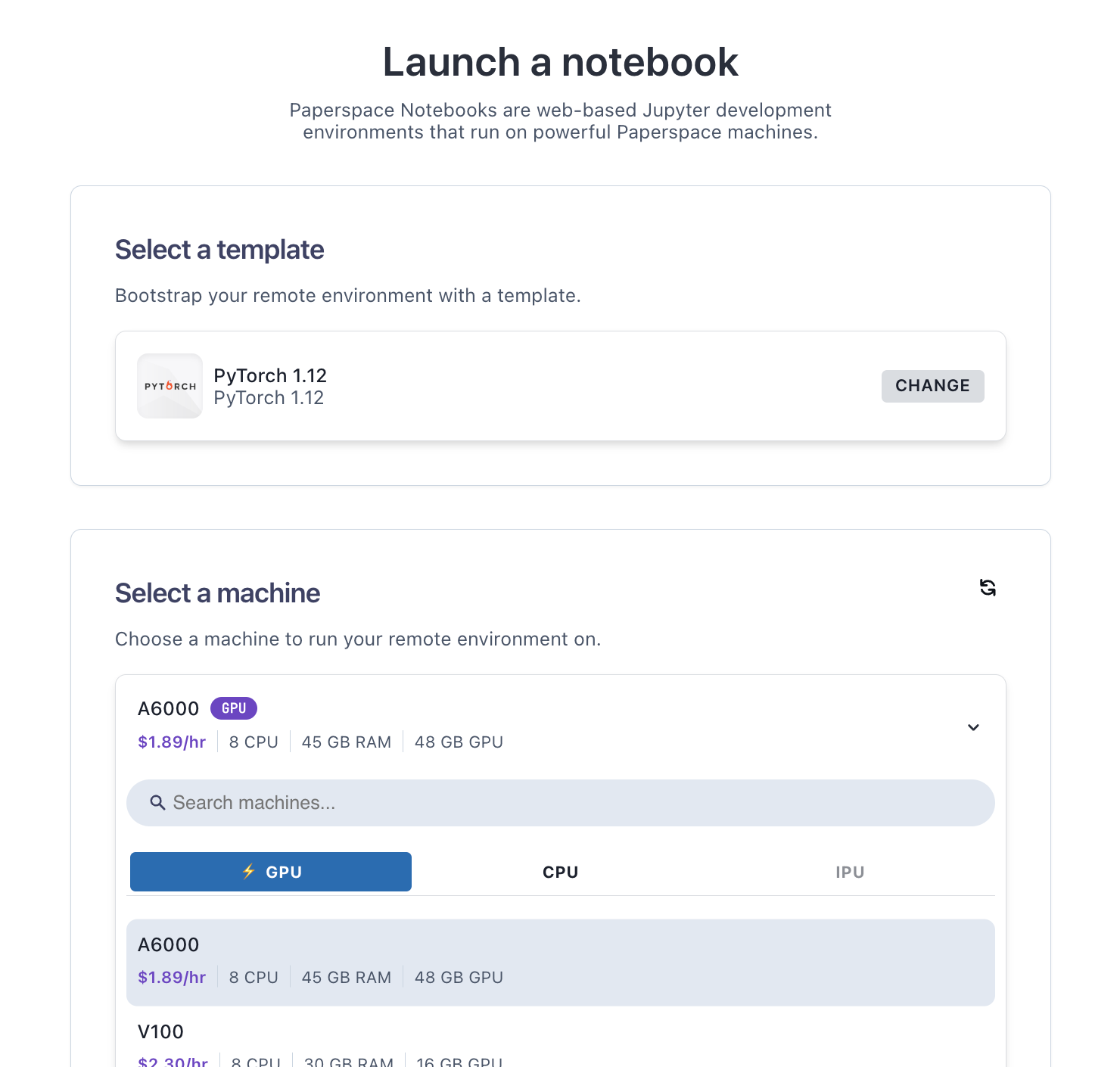

We use an ordinary Gradient Pocket book setup, launching on a single A6000 GPU on the PyTorch runtime, however different related GPUs will work.

We then add the software program onto PS+DO’s primary set that this use case wants, fixing the variations on a set that works (like a necessities.txt):

%pip set up --upgrade

langchain==0.2.8

langchain-community==0.2.7

langchainhub==0.1.20

langchain-openai==0.1.16

chromadb==0.5.3

bs4==0.0.2Some tweaks are wanted to make it work accurately on the actual stack that outcomes from our Gradient runtime plus these installs:

%pip set up --upgrade transformers==4.42.4

%pip set up pydantic==1.10.8(Python improve!)

!sudo apt replace

!sudo apt set up software-properties-common -y

!sudo add-apt-repository ppa:deadsnakes/ppa -y

!sudo apt set up Python3.11 -y

!rm /usr/native/bin/python

!ln -s /usr/bin/python3.11 /usr/native/bin/python%pip set up pysqlite3-binary==0.5.3

OpenAI API key

To entry GPT-4o, we want an OpenAI API key. This may be obtained out of your account after signing up and logging in, and setting the permissions to all-permissions and never learn solely.

Then set it as an atmosphere variable utilizing

import os

os.environ["OPENAI_API_KEY"] = "<Your API key>"or use, e.g., getpass to keep away from a key being within the code.

We at the moment are able to see LangChain and RAG in motion.

RAG outcomes

The sequence of steps right here is predicated on the LangChain query answering quickstart for webpages. We prolong the instance to PDFs.

Webpages

Webpages may be parsed utilizing BeautifulSoup.

Import what we want:

import bs4

from langchain import hub

from langchain_community.document_loaders import WebBaseLoader

from langchain_community.vectorstores import Chroma

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

from langchain_text_splitters import RecursiveCharacterTextSplitterThen the remainder is

loader = WebBaseLoader(

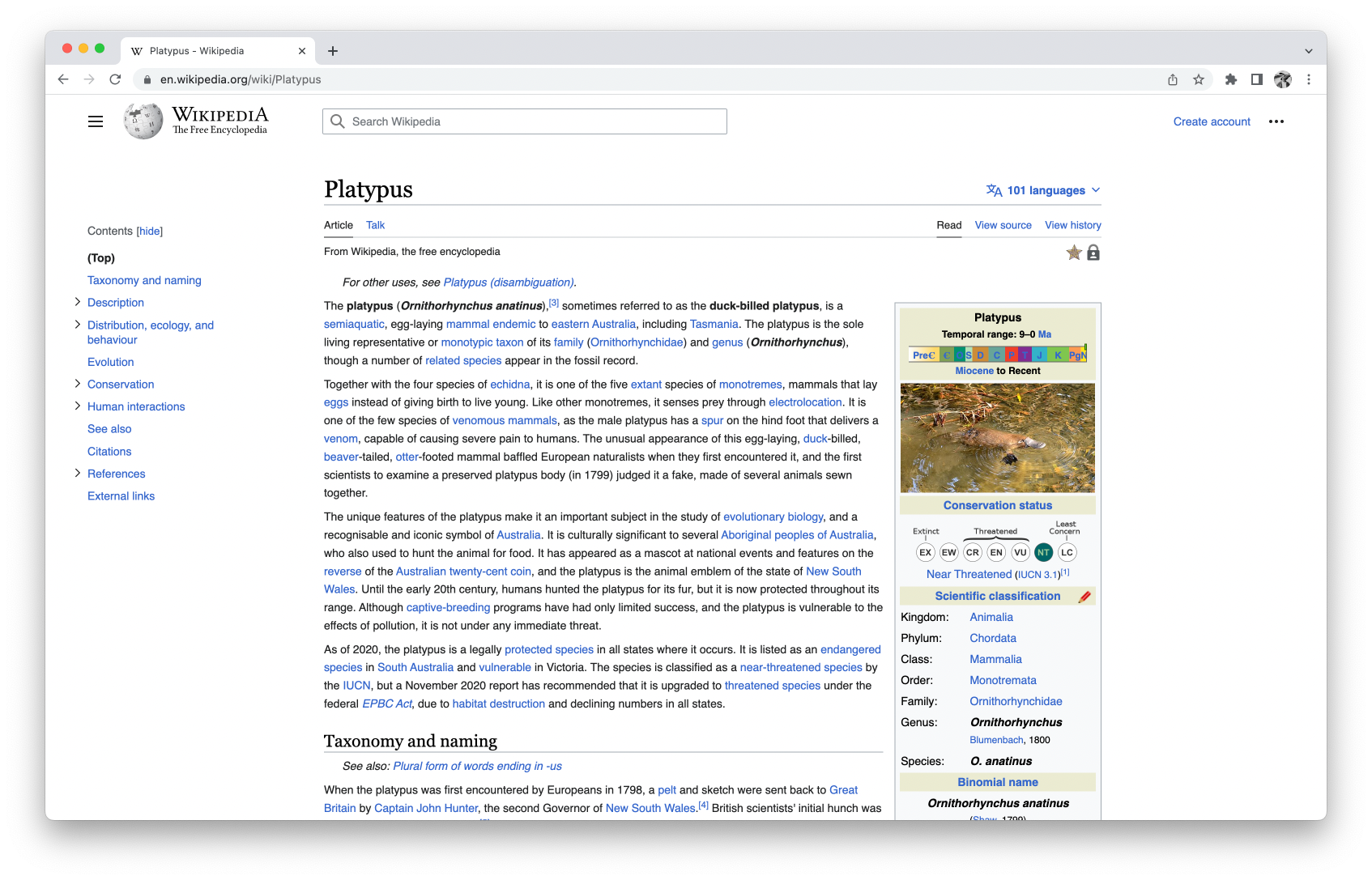

web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),

bs_kwargs=dict(

parse_only=bs4.SoupStrainer(

class_=("post-content", "post-title", "post-header")

)

),

)

docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

splits = text_splitter.split_documents(docs)

vectorstore = Chroma.from_documents(paperwork=splits, embedding=OpenAIEmbeddings())

retriever = vectorstore.as_retriever()

immediate = hub.pull("rlm/rag-prompt")

llm = ChatOpenAI(model_name="gpt-4o", temperature=0)

def format_docs(docs):

return "nn".be a part of(doc.page_content for doc in docs)

rag_chain = (

format_docs, "query": RunnablePassthrough()

| immediate

| llm

| StrOutputParser()

)Right here, the code begins by loading and parsing a webpage with loader = WebBaseLoader(...). This works for any web page that may be parsed so you may substitute your individual. We’re utilizing the identical blogpost because the LangChain quickstart: https://lilianweng.github.io/posts/2023-06-23-agent/ .

text_splitter and splits cut up the textual content into tokens for the LLM. They’re completed in chunks to higher slot in a mannequin context window, however with overlap in order that textual content context will not be misplaced.

vectorstore places the outcome right into a Chroma vector database as embeddings.

retriever is the perform to retrieve from the database.

immediate gives textual content that goes initially of the immediate to the mannequin to manage its habits, for instance, the textual content of the immediate we use right here is:

You're an assistant for question-answering duties. Use the next items of retrieved context to reply the query. If you do not know the reply, simply say that you do not know. Use three sentences most and preserve the reply concise.llm is the mannequin we wish to use, right here gpt-4o.

Then lastly rag_chain is constructed that represents the RAG course of we would like, with a perform format_docs to move them to the chain accurately.

The chain is run on the webpage utilizing, e.g.,

rag_chain.invoke("What's Activity Decomposition?")and right here we will ask it any query we would like.

An instance reply is:

rag_chain.invoke("What's self-reflection?")Self-reflection is a course of that enables autonomous brokers to iteratively enhance by refining previous motion choices and correcting earlier errors. It includes analyzing failed trajectories and utilizing supreme reflections to information future adjustments within the plan, that are then saved within the agent’s working reminiscence for future reference. This mechanism is essential for enhancing reasoning expertise and efficiency in real-world duties the place trial and error are inevitable.

We see that the reply is appropriate, and that it’s summarizing the content material from the paper, not simply repeating again blocks of textual content.

We are able to additionally view the information that now we have loaded from the webpage as a sanity examine to examine that the mannequin is utilizing the textual content that we predict it’s, e.g.,

len(docs[0].page_content)

43131print(docs[0].page_content[:500])

LLM Powered Autonomous Brokers

Date: June 23, 2023 | Estimated Studying Time: 31 min | Writer: Lilian Weng

Constructing brokers with LLM (giant language mannequin) as its core controller is a cool idea. A number of proof-of-concepts demos, reminiscent of AutoGPT, GPT-Engineer and BabyAGI, function inspiring examples. The potentiality of LLM extends past producing well-written copies, tales, essays and applications; it may be framed as a robust normal downside solver.

Agent System Overview#

Inwhich we see is identical textual content that the webpage begins with.

For extra info, the LangChain RAG Q&A quickstart has additional commentary and hyperlinks to the elements of its documentation coping with every part in full.

Two extensions to the above are to load a number of webpages into the augmentation on the identical time, and evaluate the RAG solutions to these from a base mannequin. We present each of those under for PDFs.

PDFs

As with webpages, any set of PDFs that the LangChain parser can learn can be utilized to RAG-augment the LLM. Right here, we use a few of the writer’s set of refereed astronomy publications from the arXiv preprint server. That is partly for curiosity, but in addition for the comfort that the right solutions are each fairly obscure however largely identified. This implies they’re unlikely to be answered properly by a non-RAG mannequin, and we see clearly how the method improves outcomes on a specialised matter. Questions outdoors the scope of the publications may also be simply requested as a management.

To work with PDFs, the method is much like above. For a single doc, we will use PyPDFLoader and level to its location as a URL or native file.

We wish to use a number of PDFs, so we use PyPDFDirectoryLoader and put the PDFs in an area listing /notebooks/PDFs first.

%pip set up pypdf==4.3.0The code is much like the webpage instance:

from langchain_community.document_loaders import PyPDFDirectoryLoader

loader = PyPDFDirectoryLoader("/notebooks/PDFs/")

docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=150)

splits = text_splitter.split_documents(docs)

vectorstore = Chroma.from_documents(paperwork=splits, embedding=OpenAIEmbeddings(disallowed_special=()))

retriever = vectorstore.as_retriever()

immediate = hub.pull("rlm/rag-prompt")

llm = ChatOpenAI(model_name="gpt-4o", temperature=0)

def format_docs(docs):

return "nn".be a part of(doc.page_content for doc in docs)

rag_chain = (

format_docs, "query": RunnablePassthrough()

| immediate

| llm

| StrOutputParser()

)and just like the webpages we will sanity examine the information with instructions like

len(docs)

97which is the right whole variety of pages, and

print(docs[0].page_content)

...

We evaluate the present state of knowledge mining and machine learni ng in astronomy. Information

Miningcan have a considerably combined connotation from the perspective o f a researcher in

this field. If used accurately, it may be a robust method, h olding the potential to totally

exploit the exponentially growing quantity of obtainable d ata, promising nice scientific

advance. Nonetheless, ifmisused, it may be little greater than the b lack-box software of com-

...which is the start textual content of the primary paper, though the parsing to ASCII will not be excellent.

For a extra superior system and a bigger variety of paperwork, they might be saved in a NoSQL database.

Comparability to base mannequin

Let’s evaluate our RAG solutions on the PDFs to these of a base non-RAG mannequin. The LangChain formulation makes this simple as a result of we will substitute the RAG chain:

rag_chain = (

format_docs, "query": RunnablePassthrough()

| immediate

| llm

| StrOutputParser()

)with one which merely factors to the bottom mannequin:

base_chain = base_prompt | llm | StrOutputParser()We have to alter the immediate when utilizing the bottom mannequin in order that we do not inform it to make use of a now non-existent RAG context. Prompts are handed utilizing a template, and the RAG one is

template = """Use the next items of context to reply the query on the finish.

If you do not know the reply, simply say that you do not know, do not attempt to make up a solution.

Use three sentences most and preserve the reply as concise as attainable.

{context}

Query: {query}

Useful Reply:"""

immediate = PromptTemplate.from_template(template)For the bottom, we modify this to

template = """You're an assistant for question-answering duties.

Use your present information to reply the query.

If you do not know the reply, simply say that you do not know.

Use three sentences most and preserve the reply concise.

Query: {query}

Useful Reply:"""

base_prompt = PromptTemplate.from_template(template)Observe that the {context} argument is eliminated, since there now is not one to move from.

Now we will ask questions and evaluate base GPT-4o to RAG-augmented GPT-4o with our PDFs.

A query to the bottom mannequin:

base_chain.invoke("What number of galaxies and stars have been the classifications correct for?")I haven’t got the precise numbers for what number of galaxies and stars have been precisely categorized. The accuracy of classifications can range relying on the strategies and applied sciences used, reminiscent of these in tasks just like the Sloan Digital Sky Survey or the Hubble Area Telescope. For exact figures, you would wish to consult with the newest knowledge from these particular astronomical surveys.

The identical query to the RAG mannequin:

rag_chain.invoke("What number of galaxies and stars have been the classifications correct for?")The classifications have been correct for 900,000 galaxies and 6.7 million stars.

which is the right reply for these PDFs.

Utilizing this and different questions, we will clearly see that the RAG-augmented mannequin offers higher solutions in regards to the particular new info within the PDFs. Like with the webpages, the solutions once more don’t simply duplicate textual content from the paper, however shorten and summarize it appropriately.

When requested management questions outdoors the scope of the PDFs, the solutions are good for the bottom mannequin.

For the RAG mannequin, the place in our specific immediate we’re asking it to make use of the PDFs and to say that it would not know if there isn’t any good reply from them, it says it doesn’t know:

base_chain.invoke("What are some good locations to go to within the San Francisco Bay Space?")Some good locations to go to within the San Francisco Bay Space embody the Golden Gate Bridge, Alcatraz Island, and Fisherman’s Wharf. Different well-liked spots are Golden Gate Park, the San Francisco Museum of Trendy Artwork (SFMOMA), and the tech hub of Silicon Valley. For pure magnificence, take into account Muir Woods Nationwide Monument and the scenic drive alongside Freeway 1.

rag_chain.invoke("What are some good locations to go to within the San Francisco Bay Space?")I do not know.

So now we have now a helpful system of RAG-augmentation of LLMs with our personal webpages and PDFs.

Clearly there’s extra that may be completed than this, and our blogpost right here doesn’t present the answer to every part. For instance, optimizing your prompts, stopping hallucinations, proving RAG all the time helps, and very best parsing of inputs.

However we do see clearly that higher solutions are given right here by RAG than with out it, and never a number of code is required. Analogous enchancment is probably going by yourself knowledge, and may be realized on DO+PS.

Conclusions and subsequent steps

We’ve got proven that we will add our personal paperwork to an LLM mannequin, and have it give helpful solutions to questions on them, for instance, explaining technical phrases or summarizing longer textual content into shorter kind.

Particularly, we instantiated:

- LangChain

- GPT-4o

- Webpages and PDFs

Different fashions, reminiscent of GPT-3.5 Turbo, or free and open supply fashions, can simply be substituted. The tactic works for any webpages and PDFs that the LangChain parser can learn.

You’ll be able to adapt the code to your individual knowledge, most well-liked LLM, and use case.

💡

Watch out when working fashions reminiscent of GPT-4o, as a result of they’re paid, and so prices could add up with prolonged utilization!

Whereas RAG improves your mannequin’s outcomes over a non-RAG mannequin, it doesn’t fully resolve the generic LLM downside of differentiation between fact and fiction. That is topic to ongoing analysis, and numerous approaches can be found. A great method at current is to encourage LLM use in contexts the place the data given both doesn’t must be “true”, reminiscent of technology of concepts and drafts, or the place it’s simply verifiable, reminiscent of answering questions on documentation {that a} consumer can observe up upon.

There are a selection of enhancements to the essential processes proven on this blogpost:

- Extra detailed webpage or PDF parsing

- Different doc varieties reminiscent of code

- Extra refined retrieval reminiscent of multi-query

- Alteration of tunable parameters such because the immediate, chunking, overlap, and mannequin temperature

- Add LangChain’s LangSmith to see traces of extra advanced chains

- Present the supply paperwork utilized in producing the reply to the consumer

- Add a deployed endpoint, consumer interface, chat historical past, and so forth.

The LangChain documentation exhibits many examples of those enhancements, and different frameworks moreover LangChain reminiscent of LlamaIndex and DSPy can be found that may work equally on our generic DO+PS infrastructure.

Offered customers are conscious that LLMs’ outputs could generally be incorrect, and particularly when working in regimes the place the LLM output info may be rapidly and simply verified, RAG provides a number of worth.

You’ll be able to run your individual by going to Paperspace + DigitalOcean, and our documentation.