Introduction

Instruction tuning is a technique for improving language models' performance on NLP applications. Instead of being trained to handle a single task, the language model is fine-tuned on a set of tasks defined by instructions. The goal of instruction tuning is to optimize the model for NLP problem solving. By teaching the model to carry out NLP tasks phrased as commands or instructions, as in the FLAN (Finetuned Language Net) model, researchers have seen improvements in the model's ability to process and interpret natural language.

Presentation of the Flan Collection 2022

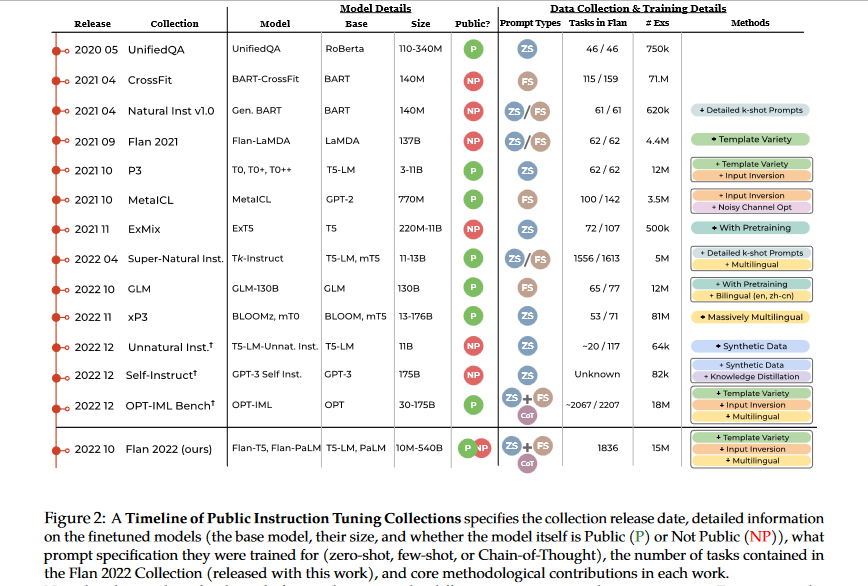

The Flan Collection 2022 is an open-source toolkit for analyzing and optimizing instruction tuning that includes a variety of tasks, templates, and methods. It is the most comprehensive publicly available collection of tasks and methods for instruction tuning, and it improves on earlier approaches. The Flan 2022 Collection includes new dialogue, program synthesis, and complex reasoning tasks in addition to those already present in the FLAN, P3/T0, and Natural Instructions collections. It also has hundreds of high-quality templates, improved formatting, and data augmentation.

The collection features models that have been fine-tuned across a variety of tasks, from zero-shot prompts to few-shot prompts and chain-of-thought prompts. The collection includes models of several sizes and accessibility levels.

To build the Flan Collection 2022, Google used a variety of input-output specifications: those that simply provided instructions (zero-shot prompting), those that provided examples of the task (few-shot prompting), and those that asked for an explanation along with the answer (chain-of-thought prompting). The resulting models outperform their predecessors.
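The three input-output specifications can be illustrated with a minimal sketch. The helper names, the `Input:`/`Output:` layout, and the sentiment example are assumptions made for illustration, not the formats actually used in the Flan Collection.

```python
def zero_shot_prompt(instruction: str, query: str) -> str:
    """Instruction plus the query, with no solved examples."""
    return f"{instruction}\n\nInput: {query}\nOutput:"


def few_shot_prompt(instruction: str, examples: list, query: str) -> str:
    """Instruction, then solved (input, output) examples, then the query."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {query}\nOutput:"


def chain_of_thought_prompt(instruction: str, query: str) -> str:
    """Instruction that also asks for an explanation along with the answer."""
    cot_instruction = f"{instruction} Explain your reasoning step by step before answering."
    return f"{cot_instruction}\n\nInput: {query}\nOutput:"


# Hypothetical task and data, used only to show the three prompt shapes.
instruction = "Classify the sentiment of the movie review as positive or negative."
examples = [
    ("A moving, beautifully shot film.", "positive"),
    ("Two hours I will never get back.", "negative"),
]
query = "The plot dragged, but the acting was superb."

zs = zero_shot_prompt(instruction, query)
fs = few_shot_prompt(instruction, examples, query)
cot = chain_of_thought_prompt(instruction, query)
print(fs)
```

The only difference between the three prompts is what surrounds the query: nothing, solved examples, or a request for reasoning.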

The Flan Collection 2022 was developed to perform well on a variety of language-related tasks, such as question answering, text classification, and machine translation. Because it has been fine-tuned on more than a thousand additional tasks covering more languages than T5 models, its performance is superior to that of T5 models. In addition, the Flan Collection 2022 is customizable, which allows developers to tailor it to their own requirements in a granular way.

Flan-T5 small, Flan-T5 base, Flan-T5 large, Flan-T5 XL, and Flan-T5 XXL are all part of the Flan Collection 2022. These models can be used as-is with no further fine-tuning, or the Hugging Face Trainer class can be used to fine-tune them for a given dataset. The FLAN Instruction Tuning Repository contains the code needed to load the FLAN instruction tuning dataset.

Difference between pretrain-finetune, prompting, and instruction tuning

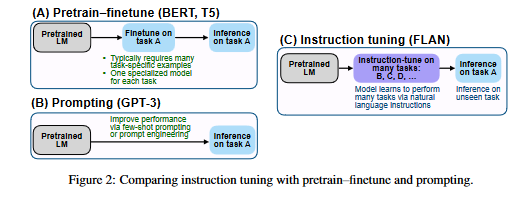

The diagram compares three distinct approaches to training language models: pretrain-finetune (illustrated by BERT and T5), prompting (illustrated by GPT-3), and instruction tuning (illustrated by FLAN).

The pretrain-finetune approach involves first training a language model on a large corpus of text (known as pretraining), and then fine-tuning the model for a specific task by providing input and output examples that are specific to that task.

When you prompt a language model, you give it a prompt, which is a short piece of text. The language model then uses this prompt to produce a longer piece of text that fulfills the needs of the task.

Instruction tuning is a technique that combines aspects of prompting and pretrain-finetune into a single approach. It involves fine-tuning a language model on a collection of tasks that are framed as instructions, where each instruction consists of a prompt and a set of input-output instances. Afterwards, the model is evaluated on unseen tasks.

FLAN: Instruction tuning improves zero-shot learning

Improving language models' ability to respond to natural language processing (NLP) instructions is the key motivation for instruction tuning. The idea is that, by using supervision to teach an LM to perform tasks described via instructions, the LM will learn to follow instructions and complete tasks without being shown what to do. To assess how well a model performs on tasks it hasn't seen before, the researchers cluster datasets according to task type and hold out individual clusters for evaluation, performing instruction tuning on the remaining clusters.
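The hold-out scheme described above can be sketched as a leave-one-cluster-out split. The cluster names and dataset lists below are a small illustrative subset chosen for this sketch, and `leave_one_cluster_out` is a hypothetical helper, not code from the FLAN repository.

```python
# Illustrative cluster -> datasets mapping (a placeholder subset, not the
# full set of task clusters and datasets used for FLAN).
CLUSTERS = {
    "nli": ["rte", "anli"],
    "reading_comprehension": ["boolq", "multirc"],
    "translation": ["wmt14_fr_en", "wmt16_de_en"],
    "sentiment": ["imdb", "sst2"],
}


def leave_one_cluster_out(clusters: dict, held_out: str):
    """Return (training datasets, evaluation datasets) for one held-out cluster."""
    if held_out not in clusters:
        raise KeyError(f"unknown cluster: {held_out}")
    train = sorted(
        dataset
        for name, datasets in clusters.items()
        if name != held_out
        for dataset in datasets
    )
    evaluation = sorted(clusters[held_out])
    return train, evaluation


# One instruction-tuning run per held-out cluster: the model never sees any
# dataset from the cluster it is later evaluated on.
for held_out in CLUSTERS:
    train, evaluation = leave_one_cluster_out(CLUSTERS, held_out)
    print(f"hold out {held_out}: tune on {len(train)} datasets, evaluate on {evaluation}")
```

Holding out a whole cluster, rather than a single dataset, keeps closely related tasks out of training, which is what makes the evaluation a test on unseen task types.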

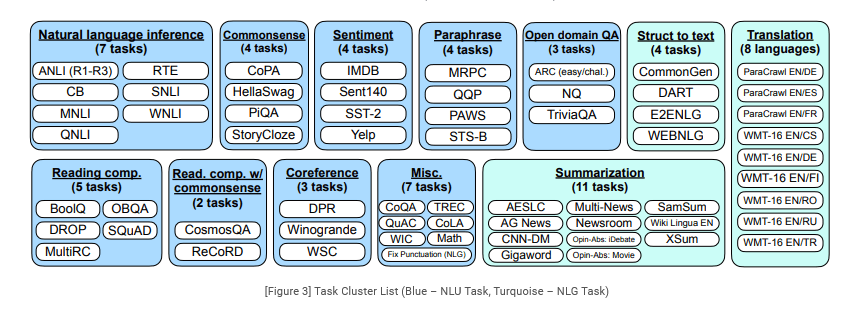

Since it would be time-consuming and resource-intensive to create an instruction tuning dataset with many tasks from scratch, the researchers convert existing datasets from the research community into an instructional format. They combine 62 text datasets from TensorFlow Datasets, including both language understanding and language generation tasks. These datasets are shown in Figure 3; they are divided into 12 task clusters, each containing datasets of the same task type.

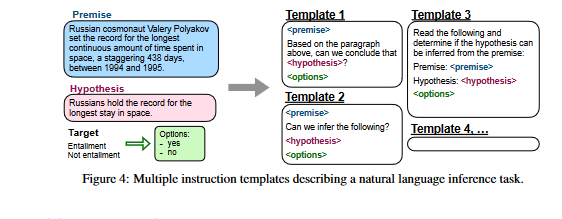

For each dataset, the researchers manually compose ten unique templates that use natural language instructions to describe the task for that dataset. While most of the ten templates describe the original task, to increase diversity they also include, for each dataset, up to three templates that "turn the task around" (e.g., for sentiment classification they include templates asking the model to generate a movie review).

They then instruction tune a pretrained language model on the mixture of all datasets, with the examples in each dataset formatted via a randomly selected instruction template for that dataset. Figure 4 shows several instruction templates for a natural language inference dataset.

The premise in this example is that the Russian cosmonaut Valery Polyakov spent 438 days in orbit between 1994 and 1995, setting the record for the longest continuous amount of time spent in space. The hypothesis is that the Russians hold the record for the longest stay in space.

The goal is to check whether the hypothesis can be inferred from the premise.

There are several templates for this task, depending on whether you are asked if the hypothesis can be inferred or not, or asked to create a new statement that follows from the premise and either supports or contradicts the hypothesis.
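Formatting an example with a randomly chosen template can be sketched as follows. The "Does ... mean that ...?" phrasing is the one quoted in the results discussion of this article; the other two templates and the `format_example` helper are hypothetical wording written for this sketch, not the actual FLAN templates.

```python
import random

# Illustrative templates for a natural language inference dataset.
# Only the first phrasing appears in the article; the rest are assumptions.
NLI_TEMPLATES = [
    'Does "{premise}" mean that "{hypothesis}"?',
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Can the hypothesis be inferred from the premise?",
    '{premise}\nBased on the paragraph above, can we conclude that "{hypothesis}"?',
]


def format_example(example: dict, templates: list, rng: random.Random) -> str:
    """Fill a randomly selected instruction template with one dataset example."""
    template = rng.choice(templates)
    return template.format(**example)


example = {
    "premise": (
        "Russian cosmonaut Valery Polyakov spent 438 days in orbit between "
        "1994 and 1995, setting the record for the longest continuous amount "
        "of time spent in space."
    ),
    "hypothesis": "Russians hold the record for the longest stay in space.",
}

rng = random.Random(0)  # seeded so that the chosen template is reproducible
prompt = format_example(example, NLI_TEMPLATES, rng)
print(prompt)
```

Drawing a fresh template for every example means the model sees the same task phrased in several ways, so it cannot rely on one fixed wording.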

Training

Model architecture and pretraining

In their experiments, the researchers use LaMDA-PT, a dense left-to-right decoder-only transformer language model with 137B parameters (Thoppilan et al., 2022). This model was pretrained on a variety of web pages, including those containing computer code, dialog data, and Wikipedia. These were tokenized into 2.49 trillion BPE tokens using the SentencePiece library with a vocabulary of 32,000 tokens (Kudo & Richardson, 2018). Roughly ten percent of the pretraining data was non-English. It is important to note that LaMDA-PT has only undergone language model pretraining, unlike LaMDA, which has been fine-tuned for dialogue.

Instruction tuning procedure

FLAN is the instruction-tuned version of LaMDA-PT. The instruction tuning process mixes all datasets and randomly samples from each of them. To balance the different dataset sizes, the researchers limit the number of training examples per dataset to 30k and follow the examples-proportional mixing scheme (Raffel et al., 2020) with a mixing rate maximum of 3k. With this maximum, a dataset receives no additional sampling weight for examples beyond 3,000.
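Examples-proportional mixing with a rate maximum can be sketched in a few lines. The function name and the dataset sizes are made up for illustration; the formula follows the description in Raffel et al. (2020), where each dataset's size is capped before the sizes are normalized into sampling probabilities.

```python
def mixing_rates(dataset_sizes: dict, max_rate: int = 3_000) -> dict:
    """Sampling probability per dataset: r_m = min(e_m, K) / sum_n min(e_n, K).

    e_m is the number of examples in dataset m and K is the mixing rate maximum.
    """
    capped = {name: min(size, max_rate) for name, size in dataset_sizes.items()}
    total = sum(capped.values())
    return {name: count / total for name, count in capped.items()}


# Hypothetical dataset sizes, already limited to at most 30k examples each.
sizes = {"small": 1_000, "medium": 3_000, "large": 30_000}
rates = mixing_rates(sizes)

for name, rate in rates.items():
    print(f"{name}: {rate:.3f}")
```

In this toy mixture, the 30k-example dataset is sampled exactly as often as the 3k-example one, because both are capped at 3,000; without the cap it would account for most of the training examples.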

They use the Adafactor optimizer (Shazeer & Stern, 2018) with a learning rate of 3e-5 to fine-tune all models for a total of 30k gradient steps, with a batch size of 8,192 tokens. The input and target sequence lengths used in tuning are 1024 and 256, respectively. They combine multiple training examples into a single sequence using a technique called packing (Raffel et al., 2020), separating inputs from targets with a special EOS token. This instruction tuning takes around 60 hours on a TPUv3 with 128 cores. For all evaluations, they report results on the final checkpoint trained for 30k steps.
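The idea behind packing can be shown with a simplified sketch. It is not the implementation used for FLAN: the EOS token id, the single `max_len` budget (instead of separate input and target lengths), the trailing EOS after each target, and the greedy in-order strategy are all assumptions of this example.

```python
EOS = 1  # hypothetical id of the special EOS token


def pack_examples(examples: list, max_len: int) -> list:
    """Greedily pack (input_ids, target_ids) pairs into sequences of at most max_len tokens."""
    packed = []
    current = []
    for input_ids, target_ids in examples:
        # EOS separates the input from its target. The second EOS, marking
        # the end of the example, is an assumption of this sketch.
        segment = list(input_ids) + [EOS] + list(target_ids) + [EOS]
        if len(segment) > max_len:
            raise ValueError("example is longer than max_len; truncate it first")
        if len(current) + len(segment) > max_len:
            # The current sequence is full: emit it and start a new one.
            packed.append(current)
            current = []
        current = current + segment
    if current:
        packed.append(current)
    return packed


# Toy token ids standing in for tokenized inputs and targets.
examples = [([5, 6, 7], [8]), ([9, 10], [11, 12]), ([13], [14])]
packed = pack_examples(examples, max_len=12)
print(packed)
```

Packing short examples together reduces the number of padding tokens per batch, so more of each training step is spent on real data.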

Results

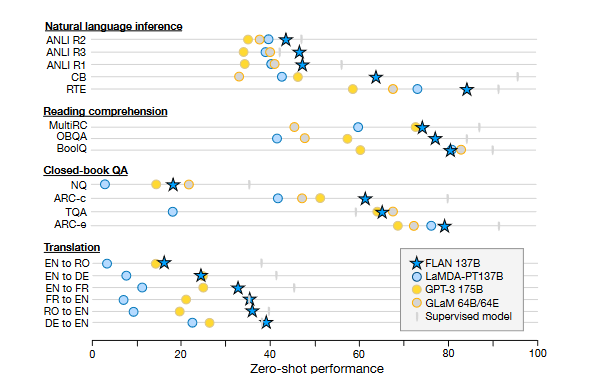

The researchers evaluate FLAN on natural language inference, reading comprehension, closed-book QA, translation, commonsense reasoning, coreference resolution, and struct-to-text. Results on natural language inference, reading comprehension, closed-book QA, and translation are summarized and described below:

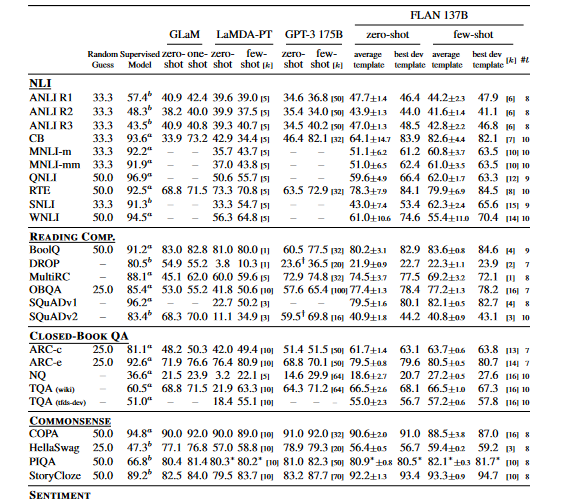

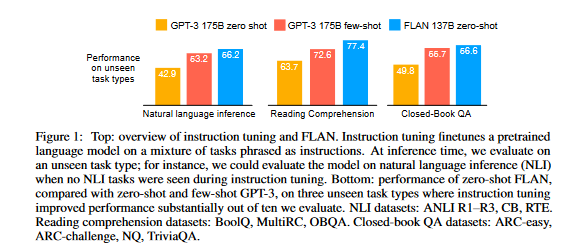

- Natural language inference (NLI): On five NLI datasets, where a model is asked to determine whether a hypothesis is true given a premise, FLAN outperforms all baselines. One possible explanation for GPT-3's difficulties with NLI is that NLI examples are often awkwardly phrased as the continuation of a sentence, so they are unlikely to have appeared naturally in an unsupervised training set (Brown et al., 2020). The researchers improve the performance of FLAN by phrasing NLI as the more natural "Does <premise> mean that <hypothesis>?".

- Reading comprehension: On reading comprehension tasks, in which models are asked to answer a question about a provided passage, FLAN outperforms the baselines on MultiRC (Khashabi et al., 2018) and OBQA (Mihaylov et al., 2018). On BoolQ (Clark et al., 2019a), FLAN significantly outperforms GPT-3, although LaMDA-PT already achieves high performance on this dataset.

- Closed-book QA: On closed-book QA, where models are asked to answer questions about the world without being given any information that might reveal the answer, FLAN outperforms GPT-3 on all four datasets. On ARC-e and ARC-c (Clark et al., 2018), FLAN outperforms GLaM, but on NQ (Lee et al., 2019; Kwiatkowski et al., 2019) and TQA (Joshi et al., 2017), FLAN performs slightly worse.

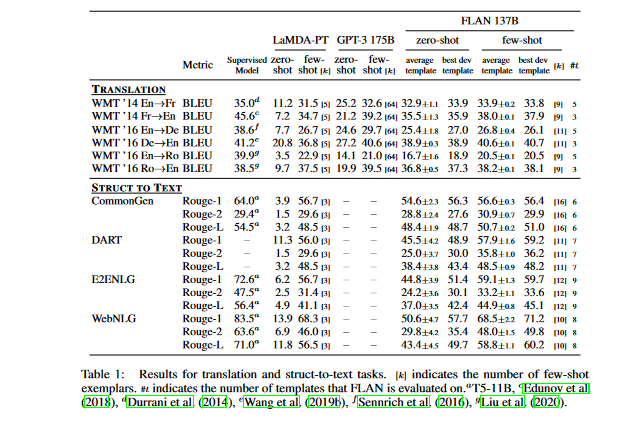

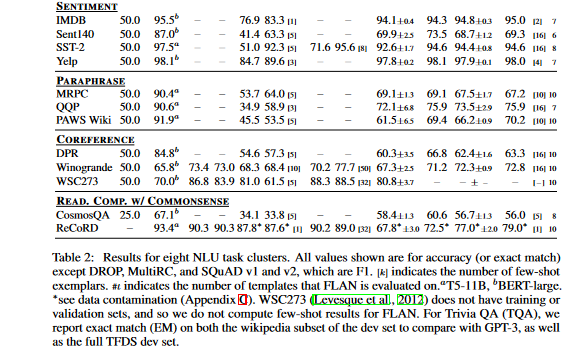

- Translation: LaMDA-PT's training data is similar to that of GPT-3 in that it is mostly English with a small amount of non-English text. FLAN's machine translation is evaluated on the three datasets used in the GPT-3 paper: French-English from WMT'14 (Bojar et al., 2014), and German-English and Romanian-English from WMT'16 (Bojar et al., 2016). FLAN outperforms zero-shot GPT-3 on all six evaluations; however, it usually performs worse than few-shot GPT-3. Like GPT-3, FLAN achieves strong results when translating into English and compares well against supervised translation baselines. However, FLAN uses an English SentencePiece tokenizer, and most of its pretraining data is in English, so its performance when translating from English into other languages is relatively weak.

- Additional tasks: While instruction tuning shows promise for the task clusters above, it is not as effective for many language modeling tasks (such as commonsense reasoning or coreference resolution tasks expressed as sentence completions). Table 2 shows that, of the seven coreference resolution and commonsense reasoning tasks, FLAN only performs better than LaMDA-PT on three. This negative result suggests that instruction tuning is not useful when the downstream task is identical to the original language modeling pretraining objective (i.e., in cases where instructions are largely redundant). Finally, in Table 2 and Table 1, the authors present the results of the sentiment analysis, paraphrase detection, and struct-to-text tasks, as well as other datasets for which GPT-3 results are not available. In general, zero-shot FLAN outperforms zero-shot LaMDA-PT and is on par with or even better than few-shot LaMDA-PT.

The figure below compares the performance of zero-shot FLAN with zero-shot and few-shot GPT-3 on three types of unseen tasks. Of the ten task types evaluated, these three showed a large improvement in performance due to instruction tuning.

Ablation studies involve systematically removing certain parts of the setup to assess their impact on performance. These studies show that the success of instruction tuning depends on key factors such as the number of finetuning datasets, model scale, and natural language instructions.

The critique of the paper

The critique of the paper "Finetuned Language Models are Zero-Shot Learners" sheds light on several key areas that could be important improvement points for future research. Here is a strategic plan for addressing these points.

- Better Evaluation Metrics: The paper has been criticized for ambiguity in the results obtained and the conclusions drawn. This could be improved by developing and using better evaluation metrics that can quantitatively rank the performance of these models. The metrics should be comprehensive enough to include accuracy, precision, and recall, and should also incorporate feedback from human annotators. This would help provide a more transparent comparison of the results obtained.

- Considering Real-World Context: Critiques mentioned that the tasks were designed with little real-world context. Future research should focus on using real-world, task-specific datasets when training the models. This could be achieved by crowd-sourcing language tasks that are more representative of real-world applications of AI.

- Addressing Overparameterization: Overparameterization was identified as a significant concern in this paper. It leads to increased compute and time requirements. Future research could focus on developing methods to reduce the number of parameters without significantly affecting performance. Techniques like pruning, knowledge distillation, or transformer variants with fewer parameters could be explored.

- Bias and Ethical Considerations: The paper fails to address potential biases in trained models and ethical issues linked to AI's language understanding capabilities. Future research should focus more on bias detection and mitigation techniques to ensure the credibility and fairness of these models. Researchers could also explore and implement ways to make the model more transparent and explainable.

- Resource Consumption: Fine-tuned language models are computation-heavy and consume a large amount of energy, making them less accessible. Future studies could focus on developing more resource-efficient models or techniques to make them more accessible and less power-hungry.

- Improved Documentation: The paper was criticized for unclear explanations of some concepts. Future research should place more emphasis on detailed explanations of every concept, idea, and result to ensure that a broad audience can follow them.

Conclusion

FLAN is a powerful resource for improving language model performance across a wide range of natural language processing (NLP) tasks. Developers who are trying to create NLP models that perform well on unseen tasks will find its zero-shot learning capabilities valuable. The number of tasks, the large size of the model, and the use of natural language instructions are all reasons why FLAN has been so successful.

References

Introducing FLAN: More generalizable Language Models with Instruction Fine-Tuning

Finetuned Language Models Are Zero-Shot Learners

This paper explores a simple method for improving the zero-shot learning abilities of language models. We show that instruction tuning (finetuning language models on a collection of tasks described via instructions) substantially improves zero-shot performance on unseen tasks. We take a 137B p…

The Flan Collection: Designing Data and Methods for Effective Instruction Tuning

We study the design decisions of publicly available instruction tuning methods, and break down the development of Flan 2022 (Chung et al., 2022). Through careful ablation studies on the Flan Collection of tasks and methods, we tease apart the effect of design decisions which enable Flan-T5 to outper…