Introduction

Let’s say you’re interacting with an AI that not only answers your questions but also understands the nuances of your intent. It crafts tailored, coherent responses that almost feel human. How does this happen? Most people don’t realize that the secret lies in LLM parameters.

If you’ve ever wondered how AI models like ChatGPT generate remarkably lifelike text, you’re in the right place. These models don’t just magically know what to say next. Instead, they rely on key parameters to determine everything from creativity to accuracy to coherence. Whether you’re a curious beginner or a seasoned developer, understanding these parameters can unlock new levels of AI potential in your projects.

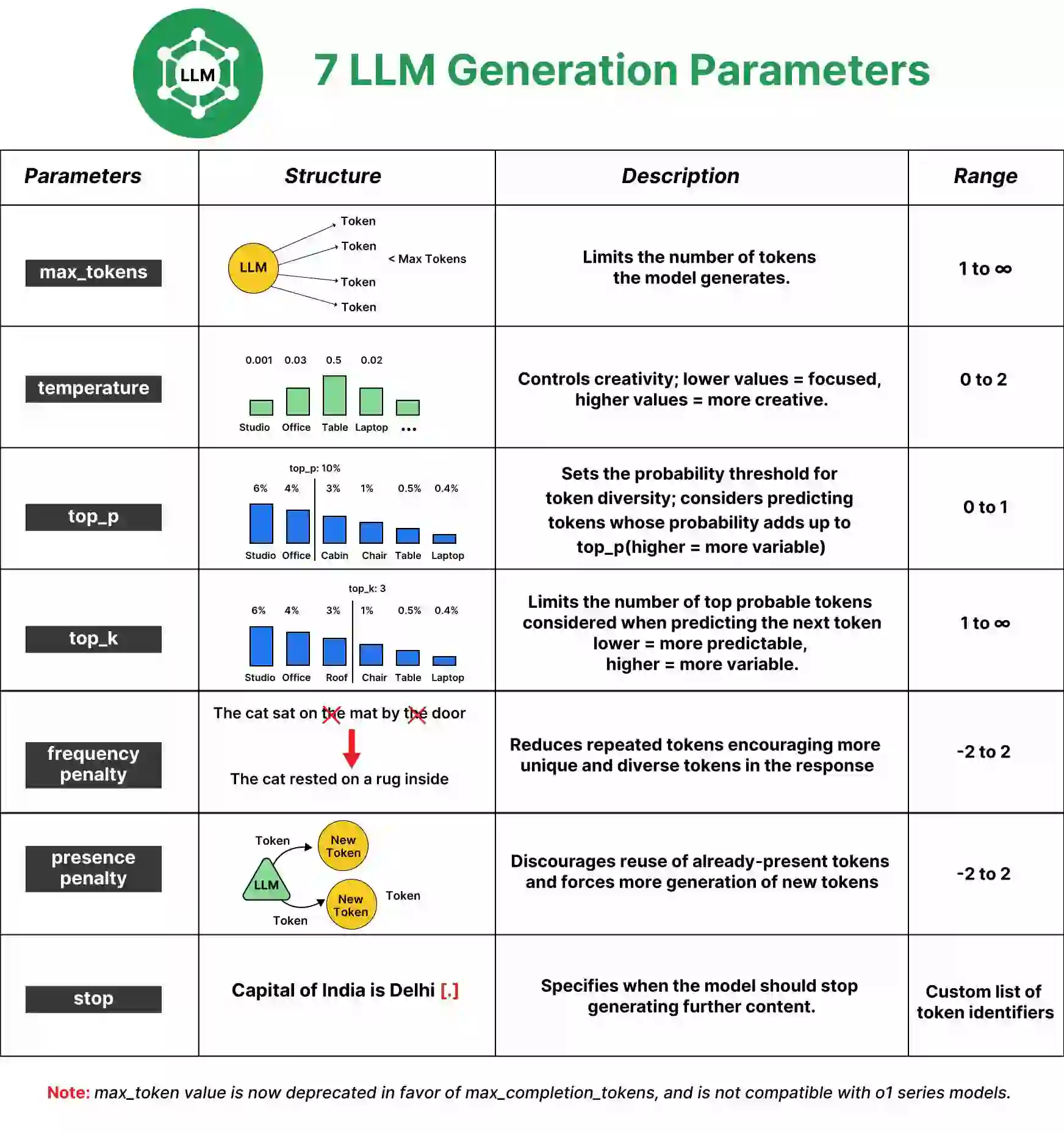

This article discusses the 7 essential generation parameters that shape how large language models (LLMs) like GPT-4o operate. From temperature settings to top-k sampling, these parameters act as the dials you can adjust to control the AI’s output. Mastering them is like taking the steering wheel to navigate the vast world of AI text generation.

Overview

- Learn how key parameters like temperature, max_tokens, and top-p shape AI-generated text.

- Discover how adjusting LLM parameters can enhance creativity, accuracy, and coherence in AI outputs.

- Master the 7 essential LLM parameters to customize text generation for any application.

- Fine-tune AI responses by controlling output length, diversity, and factual accuracy with these parameters.

- Avoid repetitive and incoherent AI outputs by tweaking frequency and presence penalties.

- Unlock the full potential of AI text generation by understanding and optimizing these crucial LLM settings.

What are LLM Generation Parameters?

In the context of Large Language Models (LLMs) like GPT-o1, generation parameters are settings or configurations that influence how the model generates its responses. These parameters help determine various aspects of the output, such as creativity, coherence, accuracy, and even length.

Think of generation parameters as the “control knobs” of the model. By adjusting them, you can change how the AI behaves when producing text. These parameters guide the model in navigating the vast space of possible word combinations to select the most suitable response based on the user’s input.

Without these parameters, the AI would be less flexible and often unpredictable in its behaviour. By fine-tuning them, users can either make the model more focused and factual or allow it to explore more creative and diverse responses.

Key Aspects Influenced by LLM Generation Parameters:

- Creativity vs. Accuracy: Some parameters control how “creative” or “predictable” the model’s responses are. Do you want a safe, factual response, or are you looking for something more imaginative?

- Response Length: These settings can influence how much or how little the model generates in a single response.

- Diversity of Output: The model can either focus on the most likely next words or explore a broader range of possibilities.

- Risk of Hallucination: Overly creative settings may lead the model to generate “hallucinations”, that is, plausible-sounding but factually incorrect responses. The parameters help balance that risk.

Each LLM generation parameter plays a unique role in shaping the final output, and by understanding them, you can better customize the AI to meet your specific needs or goals.

Practical Implementation of 7 LLM Parameters

Install Necessary Libraries

Before using the OpenAI API to control parameters like max_tokens, temperature, etc., you need to install the OpenAI Python client library. You can do this using pip:

```
!pip install openai
```

Once the library is installed, you can use the following code snippets for each parameter. Make sure to replace your_openai_api_key with your actual OpenAI API key.

Basic Setup for All Code Snippets

This setup will remain constant in all examples. You can reuse this section as your base setup for interacting with GPT models.

```python
import openai

# Set your OpenAI API key
openai.api_key = 'your_openai_api_key'

# Define a simple prompt that we can reuse in examples
prompt = "Explain the concept of artificial intelligence in simple terms"
```

1. Max Tokens

The max_tokens parameter sets an upper limit on the length of the output generated by the model. A “token” can be as short as one character or as long as one word, depending on the complexity of the text.

- Low Value (e.g., 10): Produces shorter responses, which may be cut off mid-sentence.

- High Value (e.g., 1000): Leaves room for longer, more detailed responses.

Why is it Important?

By setting an appropriate max_tokens value, you can control whether the response is a quick snippet or an in-depth explanation. This is especially important for applications where brevity is key, like text summarization, or where detailed answers are needed, like in knowledge-intensive dialogues.

Note: The max_tokens parameter is now deprecated in favor of max_completion_tokens and is not compatible with o1 series models.
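As a rough sketch of how the note above might be handled in code, the helper below picks the parameter name from the model name. The helper name `length_limit_kwargs` and the check on the `"o1"` prefix are assumptions made for illustration; they are not part of the OpenAI library.

```python
def length_limit_kwargs(model: str, limit: int) -> dict:
    """Return the keyword argument that caps output length for `model`.

    Hypothetical helper: the "o1" prefix check is an assumption used
    for illustration, not an official rule.
    """
    if model.startswith("o1"):
        # o1 series models do not accept max_tokens
        return {"max_completion_tokens": limit}
    return {"max_tokens": limit}


print(length_limit_kwargs("gpt-4o", 50))
print(length_limit_kwargs("o1-mini", 50))
```

The returned dictionary could then be unpacked into a request, for example `client.chat.completions.create(model=model, messages=messages, **length_limit_kwargs(model, 50))`.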

Implementation

Here’s how you can control the length of the generated output by using the max_tokens parameter with the OpenAI model:

```python
import openai

# Initialize the OpenAI client with your API key
client = openai.OpenAI(api_key='Your_api_key')

max_tokens = 10
temperature = 0.5

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "user",
         "content": "What is the capital of India? Give 7 places to Visit"}
    ],
    max_tokens=max_tokens,    # Upper limit on the length of the response
    temperature=temperature,
    n=1,                      # Generate a single completion
)

print(response.choices[0].message.content)
```

Output

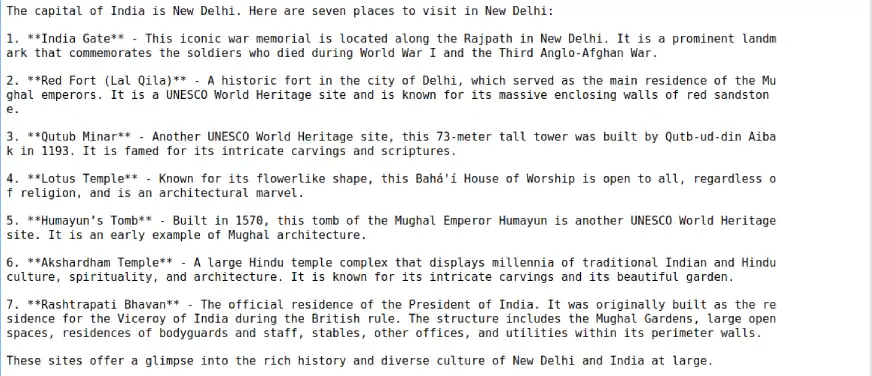

max_tokens = 10

- Output: ‘The capital of India is New Delhi. Here are’

- The response is very brief and incomplete, cut off because of the token limit. It provides basic information but doesn’t elaborate. The sentence begins but doesn’t finish, cutting off just before listing places to visit.

max_tokens = 20

- Output: ‘The capital of India is New Delhi. Here are seven places to visit in New Delhi:\n1.’

- With a slightly higher token limit, the response begins to list places but only manages to start the first item before being cut off again. It’s still too short to provide useful detail or even finish a single place description.

max_tokens = 50

- Output: ‘The capital of India is New Delhi. Here are seven places to visit in New Delhi:\n1. **India Gate**: This iconic monument is a war memorial located along the Rajpath in New Delhi. It is dedicated to the soldiers who died during World’

- Here, the response is more detailed, offering a complete introduction and the beginning of a description for the first location, India Gate. However, it’s cut off mid-sentence, which suggests the 50-token limit isn’t enough for a full list but can give more context and explanation for at least one or two items.

max_tokens = 500

- Output: (Full detailed response with seven places)

- With this larger token limit, the response is complete and provides a detailed list of seven places to visit in New Delhi. Each place includes a brief but informative description, offering context about its historical significance. The response is fully articulated and allows for more complex and descriptive text.
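One way to detect cut-off responses like the ones above is to inspect the `finish_reason` field of the returned choice, which the Chat Completions API sets to `"length"` when the token limit was reached. The sketch below uses a stand-in object built with `SimpleNamespace` so that it runs without an API call; `was_truncated` is a hypothetical helper name, and the stand-in only mirrors the parts of the response that the helper reads.

```python
from types import SimpleNamespace


def was_truncated(response) -> bool:
    """Return True if the first choice stopped because it hit the token limit."""
    return response.choices[0].finish_reason == "length"


# Stand-in for an API response (assumption: same attribute layout as the real object)
fake_response = SimpleNamespace(
    choices=[
        SimpleNamespace(
            finish_reason="length",
            message=SimpleNamespace(
                content="The capital of India is New Delhi. Here are"
            ),
        )
    ]
)

if was_truncated(fake_response):
    print("Response was cut off; consider raising max_tokens.")
```

With a real client, the same helper could be applied to the `response` object returned by `client.chat.completions.create(...)`.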

2. Temperature

The temperature parameter influences how random or creative the model’s responses are. It’s essentially a measure of how deterministic the responses should be:

- Low Temperature (e.g., 0.1): The model will produce more focused and predictable responses.

- High Temperature (e.g., 0.9): The model will produce more creative, varied, and even “wild” responses.
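To see why low values make the output more predictable, here is a minimal sketch of temperature scaling as it is commonly described: the raw token scores (logits) are divided by the temperature before being turned into probabilities. The scores below are made up for illustration, and the function is a simplified stand-in rather than the model’s actual implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after dividing by temperature.

    Temperature must be greater than 0 in this sketch.
    """
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens
logits = [2.0, 1.0, 0.5]

for t in (0.1, 0.5, 0.9):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a temperature of 0.1, almost all of the probability lands on the highest-scoring token; at 0.9, the lower-scoring tokens keep a meaningful share, so sampling becomes more varied.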

Why is it Important?

Temperature is well suited to controlling the tone of the output. Use low temperatures for tasks like generating technical answers, where precision matters, and higher temperatures for creative writing tasks, such as storytelling or poetry.

Implementation

The temperature parameter controls the randomness or creativity of the output. Here’s how to use it with the newer model:

```python
import openai

# Initialize the OpenAI client with your API key
client = openai.OpenAI(api_key='Your_api_key')

max_tokens = 500
temperature = 0.1

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "user",
         "content": "What is the capital of India? Give 7 places to Visit"}
    ],
    max_tokens=max_tokens,
    temperature=temperature,  # Lower values give more deterministic output
    n=1,
    stop=None
)

print(response.choices[0].message.content)
```

Output

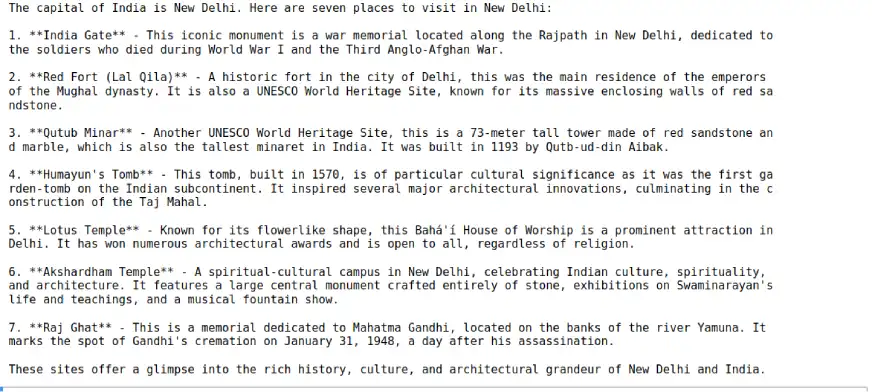

temperature=0.1

The output is strictly factual and formal, providing concise, straightforward information with minimal variation or embellishment. It reads like an encyclopedia entry, prioritizing clarity and precision.

temperature=0.5

This output retains factual accuracy but introduces more variability in sentence structure. It adds a bit more description, offering a slightly more engaging and creative tone, yet still grounded in facts. There is a little more room for rewording and additional detail compared to the 0.1 output.

temperature=0.9

The most creative version, with descriptive and vivid language. It adds subjective elements and colorful details, making it feel more like a travel narrative or guide that emphasizes atmosphere and cultural significance alongside the facts.

3. Top-p (Nucleus Sampling)

The top_p parameter, also known as nucleus sampling, helps control the diversity of responses. It sets a threshold for the cumulative probability distribution of token choices:

- Low Value (e.g., 0.1): The model samples only from the smallest set of most likely tokens whose cumulative probability reaches 10%, limiting variation.

- High Value (e.g., 0.9): The model considers a wider range of possible tokens, increasing variability.
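The cumulative-probability threshold can be sketched in a few lines. The function below keeps the most likely tokens until their combined probability reaches `top_p`, then renormalizes what is left. The token distribution is made up for illustration, and `top_p_filter` is a hypothetical name; this is a simplified picture of nucleus sampling, not the model’s internal code.

```python
def top_p_filter(probs, top_p):
    """Keep the smallest set of tokens whose cumulative probability
    reaches top_p, then renormalize the kept probabilities."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept = {}
    cumulative = 0.0
    for token, p in ranked:
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {token: p / total for token, p in kept.items()}


# Made-up next-token distribution
probs = {"Delhi": 0.6, "Mumbai": 0.25, "Agra": 0.1, "Goa": 0.05}

print(top_p_filter(probs, 0.1))  # only the single most likely token survives
print(top_p_filter(probs, 0.9))  # three tokens are needed to reach 0.9
```

Note that the number of surviving tokens depends on the shape of the distribution: a confident model may keep one token even at a fairly high top_p.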

Why is it Important?

This parameter helps balance creativity and precision. When paired with temperature, it can produce diverse and coherent responses. It’s great for applications where you want creative flexibility but still need some level of control.

Implementation

Here’s how to set top_p in a request:

```python
import openai

# Initialize the OpenAI client with your API key
client = openai.OpenAI(api_key='Your_api_key')

max_tokens = 500
temperature = 0.1
top_p = 0.5

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "user",
         "content": "What is the capital of India? Give 7 places to Visit"}
    ],
    max_tokens=max_tokens,
    temperature=temperature,
    n=1,
    top_p=top_p,  # Cumulative probability threshold for token choices
    stop=None
)

print(response.choices[0].message.content)
```

Output

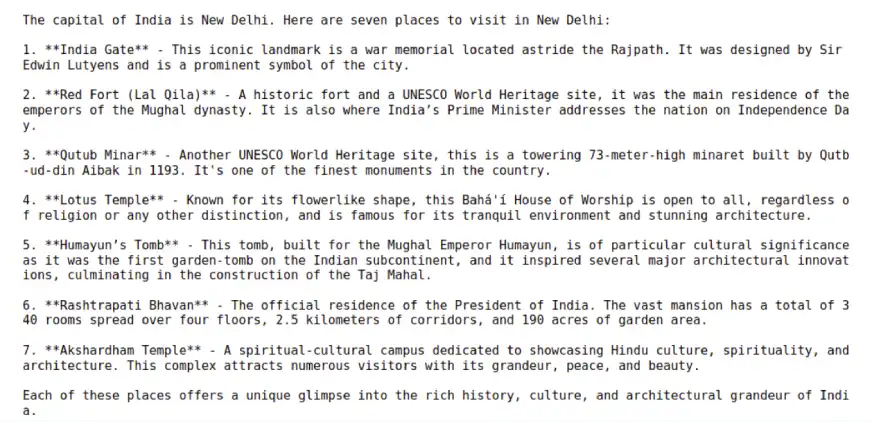

temperature=0.1

top_p=0.25

Highly deterministic and fact-driven: At this low top_p value, the model selects words from a narrow pool of highly probable options, leading to concise and accurate responses with minimal variability. Each location is described with strict adherence to core facts, leaving little room for creativity or added details.

For instance, the mention of India Gate focuses purely on its role as a war memorial and its historical significance, without extra details like the design or atmosphere. The language remains simple and formal, ensuring clarity without distractions. This makes the output ideal for situations requiring precision and a lack of ambiguity.

temperature=0.1

top_p=0.5

Balanced between creativity and factual accuracy: With top_p = 0.5, the model opens up slightly to more varied phrasing while still maintaining a strong focus on factual content. This level introduces additional contextual information that provides a richer narrative without drifting too far from the main facts.

For example, in the description of Red Fort, this output includes the detail about the Prime Minister hoisting the flag on Independence Day, a point that adds cultural significance but isn’t strictly necessary for the location’s historical description. The output is slightly more conversational and engaging, appealing to readers who want both facts and a bit of context.

- More relaxed but still factual in nature, allowing for slight variability in phrasing while remaining quite structured.

- The sentences are less rigid, and a wider range of facts is included, such as the hoisting of the national flag at Red Fort on Independence Day and the design of India Gate by Sir Edwin Lutyens.

- The wording is slightly more fluid compared to top_p = 0.25, though it remains quite factual and concise.

temperature = 0.5

top_p=1

Most diverse and creatively expansive output: At top_p = 1, the model allows for maximum variety, offering a more flexible and expansive description. This version includes richer language and additional, sometimes less expected, content.

For example, the inclusion of Raj Ghat in the list of notable places deviates from the standard historical or architectural landmarks and adds a human touch by highlighting its significance as a memorial to Mahatma Gandhi. Descriptions may also include sensory or emotional language, like how Lotus Temple has a serene setting that attracts visitors. This setting is ideal for producing content that is not only factually correct but also engaging and appealing to a broader audience.

4. Top-k (Token Sampling)

The top_k parameter limits the model to considering only the k most probable next tokens when predicting (generating) the next word.

- Low Value (e.g., 50): Limits the model to more predictable and constrained responses.

- High Value (e.g., 500): Allows the model to consider a larger number of tokens, increasing the variety of responses.
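The idea can be illustrated independently of any particular API with a small sketch: keep the k highest-probability tokens, discard the rest, and renormalize. The distribution is made up, and `top_k_filter` is a hypothetical name used only for this example.

```python
def top_k_filter(probs, k):
    """Keep only the k most probable tokens and renormalize."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in ranked)
    return {token: p / total for token, p in ranked}


# Made-up next-token distribution
probs = {"Delhi": 0.6, "Mumbai": 0.25, "Agra": 0.1, "Goa": 0.05}

print(top_k_filter(probs, 2))  # only "Delhi" and "Mumbai" remain candidates
```

Unlike a cumulative-probability threshold, this cut-off always keeps the same number of tokens, no matter how confident the model is.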

Why is it Important?

While similar to top_p, top_k explicitly limits the number of tokens the model can choose from, making it useful for applications that require tight control over output variability. If you want to generate formal, structured responses, using a lower top_k can help.

Implementation

The top_k parameter isn’t directly available in the OpenAI API. However, top_p offers a similar way to limit token choices, so you can use it as a proxy to control the randomness of token selection.

```python
import openai

# Initialize the OpenAI client with your API key
client = openai.OpenAI(api_key='Your_api_key')

max_tokens = 500
temperature = 0.1
top_p = 0.9  # Used as a proxy, since top_k is not exposed by the API

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "user", "content": "What is the capital of India? Give 7 places to Visit"}
    ],
    max_tokens=max_tokens,
    temperature=temperature,
    n=1,
    top_p=top_p,
    stop=None
)

print("Top-k Example Output (Using top_p as proxy):")
print(response.choices[0].message.content)
```

Output

Top-k Example Output (Using top_p as proxy):

The capital of India is New Delhi. Here are seven notable places to visit in New Delhi:

1. **India Gate** - This is a war memorial located astride the Rajpath, on the eastern edge of the ceremonial axis of New Delhi, India, formerly called Kingsway. It is a tribute to the soldiers who died during World War I and the Third Anglo-Afghan War.

2. **Red Fort (Lal Qila)** - A historic fort in the city of Delhi in India, which served as the main residence of the Mughal Emperors. Every year on India's Independence Day (August 15), the Prime Minister hoists the national flag at the main gate of the fort and delivers a nationally broadcast speech from its ramparts.

3. **Qutub Minar** - A UNESCO World Heritage Site located in the Mehrauli area of Delhi, Qutub Minar is a 73-meter-tall tapering tower of five storeys, with a 14.3 meters base diameter, reducing to 2.7 meters at the top of the peak. It was constructed in 1193 by Qutb-ud-din Aibak, founder of the Delhi Sultanate after the defeat of Delhi's last Hindu kingdom.

4. **Lotus Temple** - Notable for its flowerlike shape, it has become a prominent attraction in the city. Open to all, regardless of religion or any other qualification, the Lotus Temple is a wonderful place for meditation and obtaining peace.

5. **Humayun's Tomb** - Another UNESCO World Heritage Site, this is the tomb of the Mughal Emperor Humayun. It was commissioned by Humayun's first wife and chief consort, Empress Bega Begum, in 1569-70, and designed by Mirak Mirza Ghiyas and his son, Sayyid Muhammad.

6. **Akshardham Temple** - A Hindu temple, and a spiritual-cultural campus in Delhi, India. Also referred to as Akshardham Mandir, it displays millennia of traditional Hindu and Indian culture, spirituality, and architecture.

7. **Rashtrapati Bhavan** - The official residence of the President of India. Located at the western end of Rajpath in New Delhi, the Rashtrapati Bhavan is a vast mansion and its architecture is breathtaking. It incorporates various styles, including Mughal and European, and is a

5. Frequency Penalty

The frequency_penalty parameter discourages the model from repeating previously used words. It reduces the probability of tokens that have already appeared in the output, in proportion to how often they have appeared.

- Low Value (e.g., 0.0): The model won’t penalize repetition.

- High Value (e.g., 2.0): The model will heavily penalize repeated words, encouraging the generation of new content.
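A minimal sketch of the idea, following the commonly documented form in which each token’s score is lowered by its count so far multiplied by the penalty. The scores and the generated tokens are made up, and `apply_frequency_penalty` is a hypothetical name; the real adjustment happens inside the model’s sampling step.

```python
from collections import Counter


def apply_frequency_penalty(logits, generated_tokens, frequency_penalty):
    """Subtract count * penalty from the score of each already-used token."""
    counts = Counter(generated_tokens)
    return {
        token: score - counts[token] * frequency_penalty
        for token, score in logits.items()
    }


logits = {"temple": 2.0, "fort": 1.5, "garden": 1.0}  # made-up scores
generated = ["temple", "temple", "fort"]              # tokens produced so far

print(apply_frequency_penalty(logits, generated, 1.0))
```

Because "temple" has already appeared twice, it is penalized twice as much as "fort", while the unused "garden" keeps its original score.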

Why is it Important?

This is useful when you want the model to avoid repetitive outputs, like in creative writing, where redundancy might diminish quality. On the flip side, you might want lower penalties in technical writing, where repeated terminology can be helpful for clarity.

Implementation

The frequency_penalty parameter helps control repetitive word usage in the generated output. Here’s how to use it with gpt-4o:

```python
import openai

# Initialize the OpenAI client with your API key
client = openai.OpenAI(api_key='Your_api_key')

max_tokens = 500
temperature = 0.1
top_p = 0.25
frequency_penalty = 1

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "user", "content": "What is the capital of India? Give 7 places to Visit"}
    ],
    max_tokens=max_tokens,
    temperature=temperature,
    n=1,
    top_p=top_p,
    frequency_penalty=frequency_penalty,  # Penalizes tokens by how often they appear
    stop=None
)

print(response.choices[0].message.content)
```

Output

frequency_penalty=1

Balanced output with some repetition, maintaining natural flow. Ideal for contexts like creative writing where some repetition is acceptable. The descriptions are clear and cohesive, allowing for easy readability without excessive redundancy. Useful when both clarity and flow are required.

frequency_penalty=1.5

More varied phrasing with reduced repetition. Suitable for contexts where linguistic diversity enhances readability, such as reports or articles. The text maintains clarity while introducing more dynamic sentence structures. Helpful in technical writing to avoid excessive repetition without losing coherence.

frequency_penalty=2

Maximizes diversity but may sacrifice fluency and cohesion. The output becomes less uniform, introducing more variety but sometimes losing smoothness. Suitable for creative tasks that benefit from high variation, though it may reduce clarity in more formal or technical contexts due to inconsistency.

6. Presence Penalty

The presence_penalty parameter is similar to the frequency penalty, but instead of penalizing based on how often a word is used, it penalizes based on whether a word has appeared at all in the response so far.

- Low Value (e.g., 0.0): The model won’t penalize reusing words.

- High Value (e.g., 2.0): The model will strongly avoid reusing any word that has already appeared.
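The difference from a count-based penalty can be sketched as follows: every token that has appeared at least once receives the same flat deduction, regardless of how many times it was used. The scores and tokens are made up, and `apply_presence_penalty` is a hypothetical name used only for illustration.

```python
def apply_presence_penalty(logits, generated_tokens, presence_penalty):
    """Subtract a flat penalty from every token that has already appeared."""
    seen = set(generated_tokens)
    return {
        token: score - (presence_penalty if token in seen else 0.0)
        for token, score in logits.items()
    }


logits = {"temple": 2.0, "fort": 1.5, "garden": 1.0}  # made-up scores
generated = ["temple", "temple", "fort"]              # tokens produced so far

print(apply_presence_penalty(logits, generated, 0.5))
```

Here "temple" and "fort" are both lowered by 0.5 even though "temple" was used twice, which nudges the model toward tokens it has not used yet.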

Why is it Important?

Presence penalties help encourage more diverse content generation. They are especially useful when you want the model to continually introduce new ideas, as in brainstorming sessions.

Implementation

The presence_penalty discourages the model from repeating ideas or words it has already introduced. Here’s how to apply it:

```python
import openai

# Initialize the OpenAI client with your API key
client = openai.OpenAI(api_key='Your_api_key')

# Define parameters for the chat request
response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {
            "role": "user",
            "content": "What is the capital of India? Give 7 places to visit."
        }
    ],
    max_tokens=500,        # Max tokens for the response
    temperature=0.1,       # Controls randomness
    top_p=0.1,             # Controls diversity of responses
    presence_penalty=0.5,  # Encourages the introduction of new ideas
    n=1,                   # Generate only one completion
    stop=None              # Stop sequence, none in this case
)

print(response.choices[0].message.content)
```

Output

presence_penalty=0.5

The output is informative but somewhat repetitive, as it provides well-known facts about each site, emphasizing details that may already be familiar to the reader. For instance, the descriptions of India Gate and Qutub Minar don’t diverge much from common knowledge, sticking closely to conventional summaries. This demonstrates how a lower presence penalty encourages the model to stay within familiar and already established content patterns.

presence_penalty=1

The output is more varied in how it presents details, with the model introducing more nuanced information and restating facts in a less formulaic way. For example, the description of Akshardham Temple adds an extra sentence about millennia of Hindu culture, signaling that the higher presence penalty pushes the model toward slightly different phrasing and details to avoid redundancy, fostering diversity in content.

7. Stop Sequence

The stop parameter lets you define a sequence of characters or words that will signal the model to stop generating further content. This allows you to cleanly end the generation at a specific point.

- Example Stop Sequences: Could be periods (.), newlines (\n), or specific phrases like “The end”.
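The effect of a stop sequence can be approximated after the fact with a small sketch: cut the text at the earliest occurrence of any stop sequence and drop the sequence itself. The real model stops generating at that point rather than trimming finished text, so this is only a simulation; `truncate_at_stop` is a hypothetical name, and the sample text is made up.

```python
def truncate_at_stop(text, stop_sequences):
    """Cut text at the earliest occurrence of any stop sequence.

    The stop sequence itself is not included in the result.
    """
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


full_text = "The capital of India is New Delhi. Here are seven places to visit."
print(truncate_at_stop(full_text, [".", "End of list"]))
```

With a period as a stop sequence, only the first sentence survives, without its trailing period.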

Why is it Important?

This parameter is especially helpful when working on applications where you want the model to stop once it has reached a logical conclusion or after providing a certain number of ideas, such as in Q&A or dialogue-based models.

Implementation

The stop parameter lets you define a stopping point for the model when generating text. For example, you can stop it after generating a list of items.

```python
import openai

# Initialize the OpenAI client with your API key
client = openai.OpenAI(api_key='Your_api_key')

max_tokens = 500
temperature = 0.1
top_p = 0.1

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "user", "content": "What is the capital of India? Give 7 places to Visit"}
    ],
    max_tokens=max_tokens,
    temperature=temperature,
    n=1,
    top_p=top_p,
    stop=[".", "End of list"]  # Define stop sequences
)

print(response.choices[0].message.content)
```

Output

The capital of India is New Delhi

How do These LLM Parameters Work Together?

Now, the real magic happens when you start combining these parameters. For example:

- Use temperature and top_p together to fine-tune creative tasks.

- Pair max_tokens with stop to limit long-form responses effectively.

- Leverage frequency_penalty and presence_penalty to avoid repetitive text, which is particularly useful for tasks like poetry generation or brainstorming sessions.
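One possible way to organize such combinations is a small table of presets that is merged into each request. The preset names, the specific values, and the `request_kwargs` helper below are all illustrative assumptions, not recommended settings; tune them for your own application.

```python
# Illustrative presets (assumed values, not official recommendations)
PRESETS = {
    "factual": {
        "temperature": 0.1,
        "top_p": 0.25,
        "frequency_penalty": 0.0,
        "presence_penalty": 0.0,
    },
    "creative": {
        "temperature": 0.9,
        "top_p": 1.0,
        "frequency_penalty": 0.5,
        "presence_penalty": 0.5,
    },
}


def request_kwargs(preset, max_tokens, stop=None):
    """Combine a preset with a length limit and optional stop sequences."""
    kwargs = dict(PRESETS[preset])  # copy so the preset itself is not modified
    kwargs["max_tokens"] = max_tokens
    if stop is not None:
        kwargs["stop"] = stop
    return kwargs


print(request_kwargs("factual", 200, stop=["End of list"]))
```

The resulting dictionary could be unpacked into a call such as `client.chat.completions.create(model="gpt-4o", messages=messages, **request_kwargs("creative", 500))`.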

Conclusion

Understanding these LLM parameters can significantly improve how you interact with language models. Whether you’re developing an AI-based assistant, generating creative content, or performing technical tasks, knowing how to tweak these parameters helps you get the best output for your specific needs.

By adjusting LLM parameters like temperature, max_tokens, and top_p, you gain control over the model’s creativity, coherence, and length. Penalties like frequency and presence, in turn, ensure that outputs stay fresh and avoid repetitive patterns. Finally, the stop sequence ensures clean and well-defined completions.

Experimenting with these settings is key, because the optimal configuration depends on your application. Start by tweaking one parameter at a time and observe how the outputs shift. This will help you dial in the right setup for your use case!


Frequently Asked Questions

Ans. LLM generation parameters control how AI models like GPT-4 generate text, affecting creativity, accuracy, and length.

Ans. The temperature controls how creative or focused the model’s output is. Lower values make it more precise, while higher values increase creativity.

Ans. Max_tokens limits the length of the generated response, with higher values allowing longer and more detailed outputs.

Ans. Top-p (nucleus sampling) controls the diversity of responses by setting a threshold for the cumulative probability of token choices, balancing precision and creativity.

Ans. These penalties reduce repetition and encourage the model to generate more diverse content, improving overall output quality.