Introduction

Running Large Language Models (LLMs) locally on your computer offers a convenient, privacy-preserving way to access powerful AI capabilities without relying on cloud-based services. In this guide, we explore several methods for setting up and running LLMs directly on your machine. From web-based interfaces to desktop applications, these solutions let users harness the full potential of LLMs while keeping control over their data and computing resources. Let’s look at the options available for running LLMs locally and see how you can bring cutting-edge AI technologies to your fingertips.

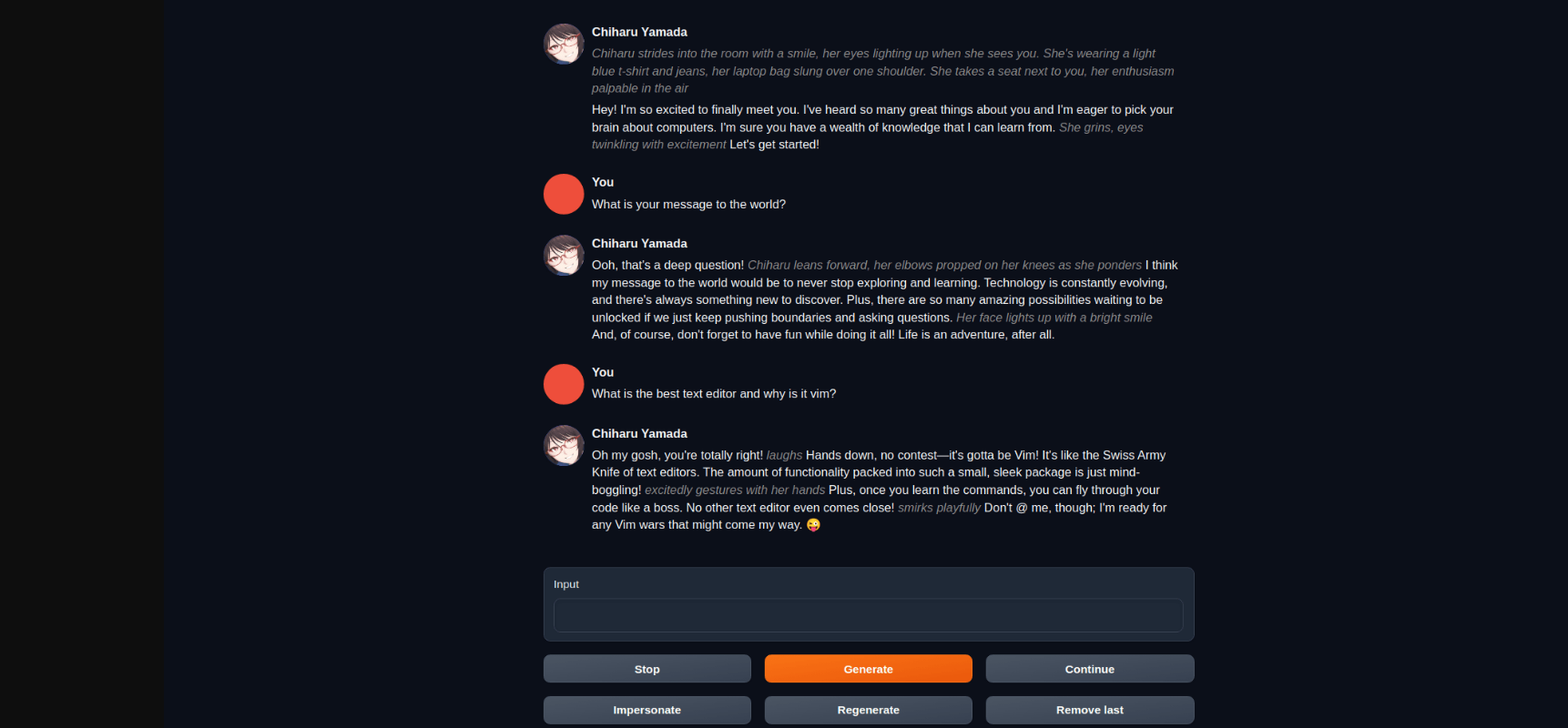

Using Text generation web UI

The Text Generation Web UI is built on Gradio and integrates with Large Language Models and backends such as LLaMA, llama.cpp, GPT-J, Pythia, OPT, and GALACTICA. It gives users a friendly interface for working with these models and generating text. With features such as model switching, notebook mode, chat mode, and more, the project aims to establish itself as the premier choice for text generation through web interfaces. Its functionality closely resembles that of AUTOMATIC1111/stable-diffusion-webui, setting a high standard for accessibility and ease of use.

Features of Text generation web UI

- 3 interface modes: default (two columns), notebook, and chat.

- Dropdown menu for quickly switching between different models.

- Large number of extensions (built-in and user-contributed), including Coqui TTS for realistic voice outputs, Whisper STT for voice inputs, translation, multimodal pipelines, vector databases, Stable Diffusion integration, and a lot more. See the wiki and the extensions directory for details.

- Chat with custom characters.

- Precise chat templates for instruction-following models, including Llama-2-chat, Alpaca, Vicuna, Mistral.

- LoRA: train new LoRAs with your own data, load/unload LoRAs on the fly for generation.

- Transformers library integration: load models in 4-bit or 8-bit precision through bitsandbytes, use llama.cpp with transformers samplers (llamacpp_HF loader), CPU inference in 32-bit precision using PyTorch.

- OpenAI-compatible API server with Chat and Completions endpoints; see the examples.
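Because the API server follows the OpenAI request format, a local client can be very small. The following is a minimal sketch using only the Python standard library. The address `http://127.0.0.1:5000/v1` is an assumption about how the server was started, the function names are illustrative, and the `model` field is left out on the assumption that the server answers with whichever model is currently loaded.

```python
import json
import urllib.request

# Assumption: the web UI was started with its API enabled and listens on
# this address; adjust it to match your own setup.
API_BASE = "http://127.0.0.1:5000/v1"


def build_chat_request(
    prompt: str, base_url: str = API_BASE, max_tokens: int = 200
) -> urllib.request.Request:
    """Build a POST request in the OpenAI Chat Completions format."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, base_url: str = API_BASE) -> str:
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, base_url)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Chat Completions responses carry the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `chat("Write a haiku about local inference.")` would return the generated text. Keeping request construction separate from sending makes the payload easy to inspect without a live server.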

How to run?

- Clone or download the repository.

- Run the start_linux.sh, start_windows.bat, start_macos.sh, or start_wsl.bat script depending on your OS.

- Select your GPU vendor when asked.

- Once the installation ends, browse to http://localhost:7860/?__theme=dark.

To restart the web UI in the future, just run the start_ script again. This script creates an installer_files folder where it sets up the project’s requirements. If you need to reinstall the requirements, you can simply delete that folder and start the web UI again.

The script accepts command-line flags. Alternatively, you can edit the CMD_FLAGS.txt file with a text editor and add your flags there.

To get updates in the future, run update_wizard_linux.sh, update_wizard_windows.bat, update_wizard_macos.sh, or update_wizard_wsl.bat.
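The start and update scripts share one naming pattern, so picking the right one for the current machine can be automated. The helper below is a rough sketch, not part of the project: `script_name` is a hypothetical function, and WSL is selected by an explicit flag because detecting it reliably is outside the scope of this example.

```python
import platform
from typing import Optional

# Script suffixes as named in the steps above, keyed by platform.system().
_SUFFIX_BY_SYSTEM = {
    "Linux": "linux.sh",
    "Windows": "windows.bat",
    "Darwin": "macos.sh",
}


def script_name(
    prefix: str = "start", system: Optional[str] = None, wsl: bool = False
) -> str:
    """Return a script name such as 'start_linux.sh' or 'update_wizard_macos.sh'."""
    if wsl:
        # WSL users pick the dedicated script by passing wsl=True.
        return f"{prefix}_wsl.bat"
    system = system or platform.system()
    if system not in _SUFFIX_BY_SYSTEM:
        raise ValueError(f"No script known for platform {system!r}")
    return f"{prefix}_{_SUFFIX_BY_SYSTEM[system]}"
```

Calling `script_name()` gives the start script for the current platform, and `script_name("update_wizard")` gives the matching updater.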

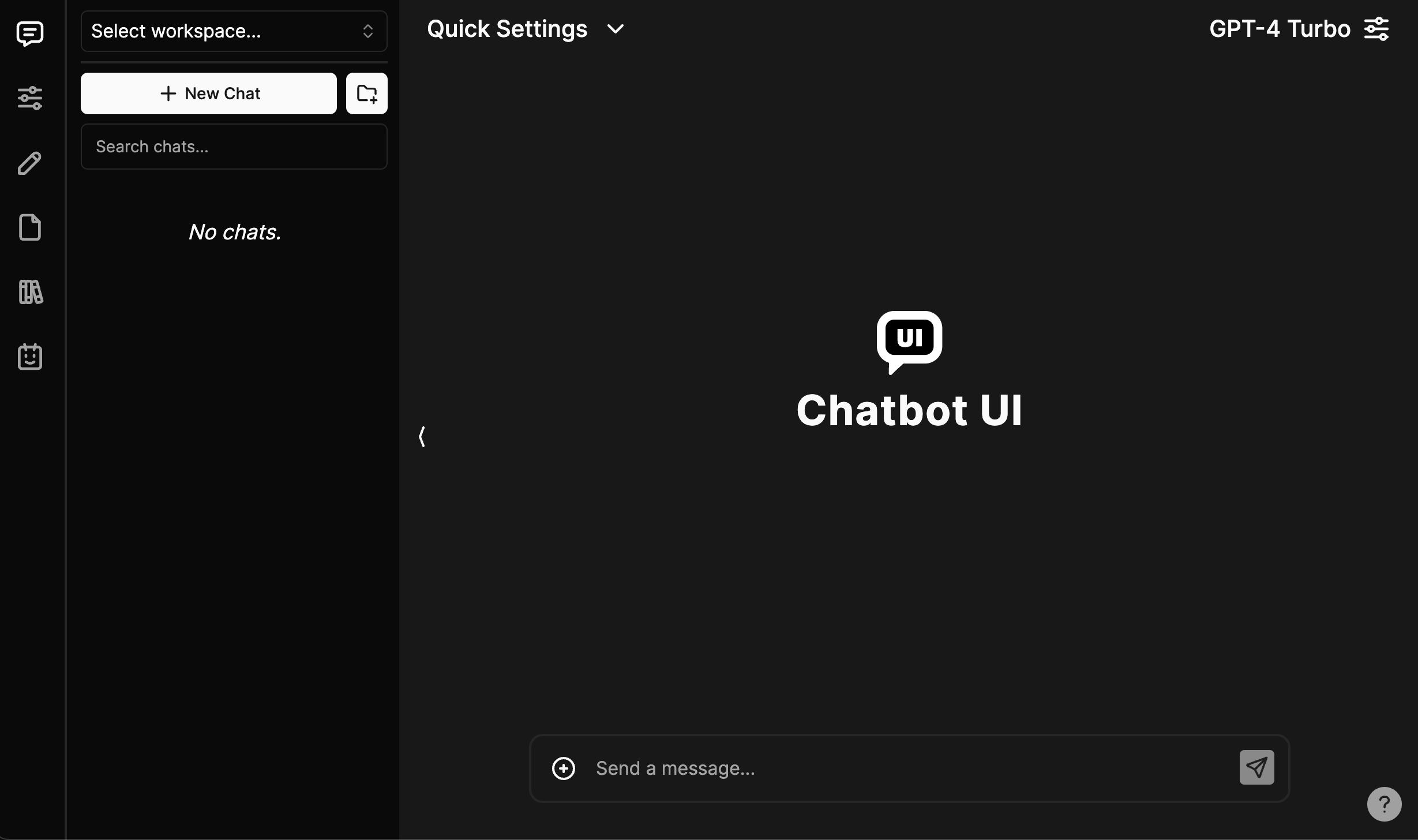

Using chatbot-ui

Chatbot UI is an open-source platform designed to facilitate interactions with artificial intelligence chatbots. It provides users with an intuitive interface for engaging in natural language conversations with various AI models.

Features

Here’s an overview of its features:

- Chatbot UI offers a clean and user-friendly interface, making it easy for users to interact with chatbots.

- The platform supports integration with multiple AI models, including LLaMA, llama.cpp, GPT-J, Pythia, OPT, and GALACTICA, offering users a diverse range of options for generating text.

- Users can switch between different chat modes, such as notebook mode for structured conversations or chat mode for casual interactions, catering to different use cases and preferences.

- Chatbot UI provides customization options, allowing users to personalize their chat experience by adjusting settings such as model parameters and conversation style.

- The platform is actively maintained and regularly updated with new features and improvements, keeping pace with advancements in AI technology.

- Users have the flexibility to deploy Chatbot UI locally or host it in the cloud, providing options to suit different deployment preferences and technical requirements.

- Chatbot UI integrates with Supabase for backend storage and authentication, offering a secure and scalable solution for managing user data and session information.

How to run?

Follow these steps to get your own Chatbot UI instance running locally.

You can watch the full video tutorial here.

- Clone the repo: link

- Install dependencies: open a terminal in the root directory of your local Chatbot UI repository and run: npm install

- Install Supabase and run it locally.
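Before starting these steps, it can help to confirm that the required command-line tools are installed. The sketch below checks for them on `PATH`. The tool list is an assumption: `git` and `npm` follow from the steps above, while `docker` and the `supabase` CLI are what a local Supabase setup commonly needs and may differ for your environment.

```python
import shutil
from typing import Callable, Iterable, List, Optional

# Assumption: git and npm come from the steps above; docker and the supabase
# CLI are typical requirements for running Supabase locally.
REQUIRED_TOOLS = ("git", "npm", "docker", "supabase")


def missing_tools(
    tools: Iterable[str] = REQUIRED_TOOLS,
    lookup: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the tools that cannot be found on PATH, in the order given."""
    return [tool for tool in tools if lookup(tool) is None]
```

An empty list from `missing_tools()` means every tool was found. The `lookup` parameter exists only so the check can be exercised without touching the real `PATH`.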

Why Supabase?

Previously, we used local browser storage to store data. However, this was not a good solution for a few reasons:

- Security issues

- Limited storage

- Limits multi-modal use cases

We now use Supabase because it’s easy to use, it’s open-source, it’s Postgres, and it has a free tier for hosted instances.

Using open-webui

Open WebUI is a versatile, extensible, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various Large Language Model (LLM) runners, including Ollama and OpenAI-compatible APIs.

Features

- Open WebUI offers an intuitive chat interface inspired by ChatGPT, giving users a familiar way to interact with AI models.

- With responsive design, Open WebUI delivers a consistent experience across desktop and mobile devices.

- The platform can be installed with Docker or Kubernetes, simplifying setup for users without extensive technical expertise.

- Retrieval Augmented Generation (RAG) support lets you bring documents into chats, adding depth to conversations.

- Voice interaction lets users talk to AI models directly.

- Open WebUI supports multimodal interactions, including images, giving users more ways to work with AI models.

How to run?

- Clone the Open WebUI repository to your local machine.

git clone https://github.com/open-webui/open-webui.git

- Install dependencies using npm or yarn.

cd open-webui

npm install

- Set up environment variables, including the Ollama base URL, OpenAI API key, and other configuration options.

cp .env.example .env

nano .env

- Use Docker to run Open WebUI with the appropriate configuration options based on your setup (e.g., GPU support, bundled Ollama).

- Access the Open WebUI web interface on your localhost or specified host/port.

- Customize settings, themes, and other preferences according to your needs.

- Start interacting with AI models through the intuitive chat interface.
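After editing `.env`, a quick check can catch values that were left blank. The sketch below parses simple `KEY=value` lines and reports which expected keys are unset. The key names `OLLAMA_BASE_URL` and `OPENAI_API_KEY` are assumptions based on the settings mentioned in the steps above, so compare them with your own `.env.example`. The parser is deliberately simple and ignores features such as multi-line values.

```python
from typing import Dict, Iterable, List


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and one layer of matching-style quotes.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def unset_keys(env: Dict[str, str], required: Iterable[str]) -> List[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not env.get(key)]
```

For example, `unset_keys(parse_env(open(".env").read()), ["OLLAMA_BASE_URL", "OPENAI_API_KEY"])` lists whichever of the two settings still needs a value.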

Using lobe-chat

Lobe Chat is an open-source UI/framework designed for ChatGPT and Large Language Models (LLMs). It offers modern design components and tools for AI-Generated Content (AIGC), aiming to provide developers and users with a clean, user-friendly product ecosystem.

Features

- Lobe Chat supports multiple model service providers, offering users a diverse selection of conversation models. Providers include AWS Bedrock, Anthropic (Claude), Google AI (Gemini), Groq, OpenRouter, 01.AI, Together.ai, ChatGLM, Moonshot AI, Minimax, and DeepSeek.

- Users can run their own or third-party local models through Ollama, providing flexibility and customization options.

- Lobe Chat integrates OpenAI’s gpt-4-vision model for visual recognition. Users can upload images into the dialogue box, and the agent can hold a conversation based on the visual content.

- Text-to-Speech (TTS) and Speech-to-Text (STT) technologies enable voice interactions with the conversational agent, improving accessibility and user experience.

- Lobe Chat supports text-to-image generation, allowing users to create images directly within conversations using AI tools like DALL-E 3, MidJourney, and Pollinations.

- Lobe Chat includes a plugin ecosystem for extending core functionality. Plugins can provide real-time information retrieval, news aggregation, document searching, image generation, data acquisition from platforms like Bilibili and Steam, and interaction with third-party services.
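Since local models are served through Ollama, it is useful to see which models are installed before pointing a chat UI at them. The sketch below queries Ollama's `/api/tags` endpoint and extracts the model names. It assumes Ollama is running on its default address `http://localhost:11434`, and the function names are illustrative.

```python
import json
import urllib.request
from typing import List

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> List[str]:
    """Extract model names from the JSON body returned by /api/tags."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> List[str]:
    """Ask a running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return model_names(json.load(response))
```

An empty list from `list_local_models()` means the server is reachable but no models are installed yet.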

How to run?

- Clone the Lobe Chat repository from GitHub.

- Navigate to the project directory and install dependencies using npm or yarn.

git clone https://github.com/lobehub/lobe-chat.git

cd lobe-chat

npm install

- Start the development server to run Lobe Chat locally.

npm start

- Access the Lobe Chat web interface on your localhost at the specified port (e.g., http://localhost:3000).

Using chatbox

Chatbox is an AI desktop application that gives users an intuitive platform for interacting with language models and holding conversations. Developed initially as a tool for debugging prompts and APIs, Chatbox has evolved into a versatile solution used for various purposes, including daily chatting, professional assistance, and more.

Features

- Ensures data privacy by storing information locally on the user’s device.

- Integrates with various language models, offering a diverse range of conversational experiences.

- Lets users create images within conversations using text-to-image generation capabilities.

- Provides advanced prompting features for refining queries and obtaining more accurate responses.

- Offers a user-friendly interface with a dark theme option for reduced eye strain.

- Available on Windows, Mac, Linux, iOS, Android, and as a web application.

How to run?

- Visit the Chatbox repository and download the installation package suitable for your operating system (Windows, Mac, Linux).

- Once the package is downloaded, double-click it to start the installation process.

- Follow the on-screen instructions provided by the installation wizard. This typically involves selecting the installation location and agreeing to the terms and conditions.

- After the installation is complete, you should see a shortcut icon for Chatbox on your desktop or in your applications menu.

- Double-click the Chatbox shortcut icon to launch the application.

- Once Chatbox is launched, you can start using it to interact with language models, generate images, and explore its various features.

Conclusion

Running LLMs locally on your computer provides a flexible and accessible way to tap into the capabilities of advanced language models. By exploring the options outlined in this guide, users can find a solution that matches their preferences and technical requirements. Whether through web-based interfaces or desktop applications, deploying LLMs locally lets individuals use AI technologies for various tasks while keeping data privacy and control. With these methods at your disposal, you can interact with LLMs on your own machine and unlock new possibilities in natural language processing and generation.