Computer vision is a field that enables machines to interpret and understand the visual world. Its applications are rapidly expanding, from healthcare and autonomous vehicles to security systems and retail.

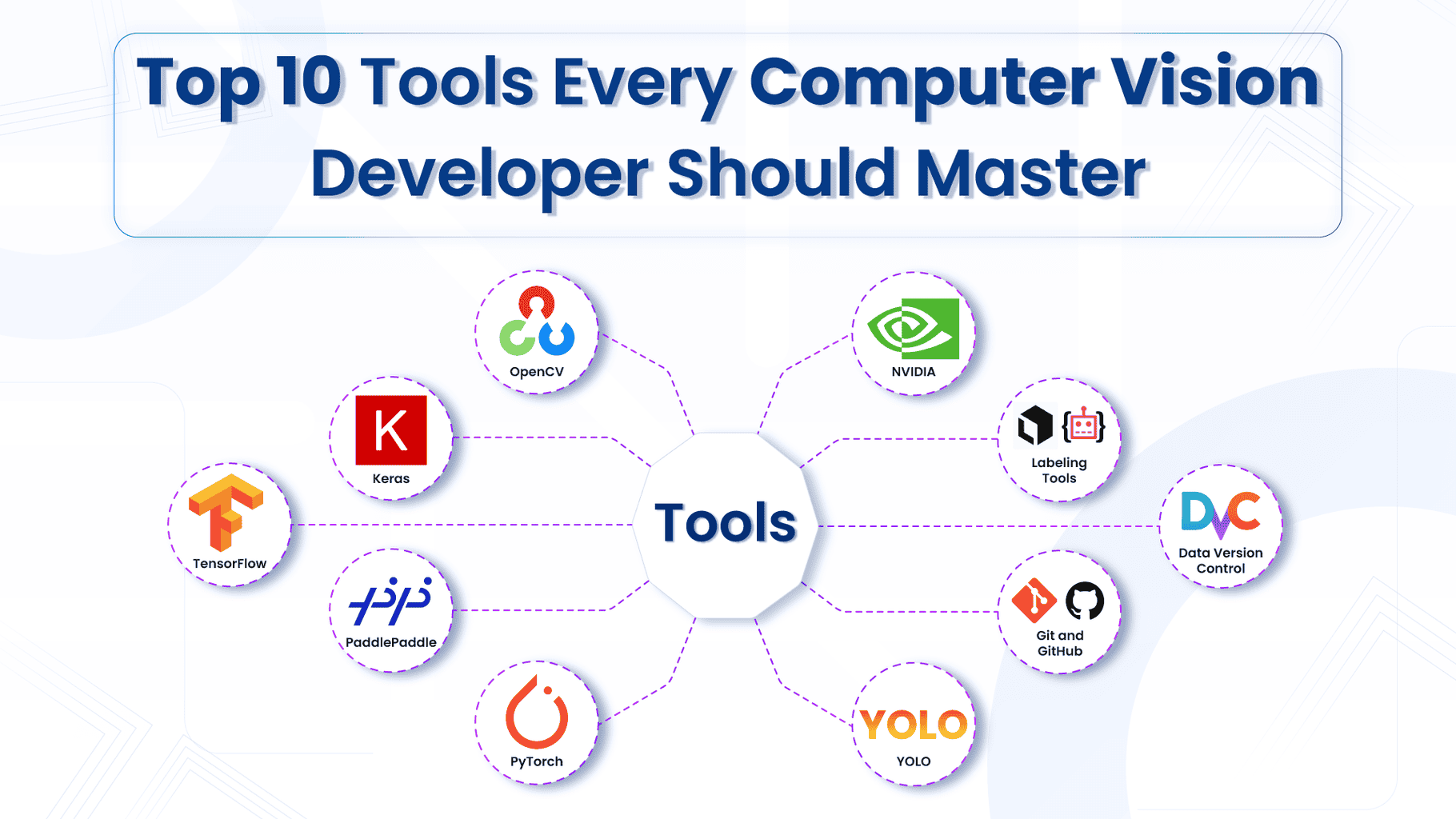

In this article, we'll go over ten essential tools that every computer vision developer, whether a beginner or an advanced user, should master. These tools range from image processing libraries to platforms that support machine learning workflows.

1. OpenCV

- Beginners:

OpenCV is a popular open-source library designed for computer vision tasks. It's a great starting point for beginners because it lets you easily perform tasks like image filtering, image manipulation, and basic feature detection. With OpenCV, you can start by learning fundamental image processing techniques such as resizing, cropping, and edge detection, which form the foundation for more complex tasks.

- Advanced:

As you progress, OpenCV offers extensive functionality for real-time video processing, object detection, and camera calibration. Advanced users can leverage OpenCV for high-performance applications, including integrating it with machine learning models or using it in real-time systems for tasks like facial recognition or augmented reality.

2. TensorFlow

- Beginners:

TensorFlow is a powerful framework developed by Google for building and training machine learning models, especially deep learning models. It's beginner-friendly thanks to its extensive documentation and tutorials. As a new developer, you can start with pre-built models for tasks like image classification and object detection, which helps you understand the basics of how models learn from data.

- Advanced:

For advanced users, TensorFlow's flexibility allows you to build complex neural networks, including Convolutional Neural Networks (CNNs) and Transformers for advanced image recognition tasks. Its ability to scale from small models to large production-level applications makes it an essential tool for any computer vision expert. Additionally, TensorFlow supports distributed training, making it well suited to large-scale datasets and high-performance applications.
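To give a feel for that flexibility, here is a small sketch that defines a CNN with the Keras layers bundled in TensorFlow and runs one step of a custom training loop with `tf.GradientTape`. It assumes TensorFlow 2.x; the 32x32 input size, the 10 classes, and the random data are placeholders, not a recommended setup.

```python
import tensorflow as tf

# A small CNN built from the Keras layers that ship with TensorFlow.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(16, 3, activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(32, 3, activation="relu"),
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(10),  # logits for 10 hypothetical classes
])
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
optimizer = tf.keras.optimizers.Adam(learning_rate=1e-3)


@tf.function  # trace the step into a TensorFlow graph for speed
def train_step(images, labels):
    # GradientTape records the forward pass so gradients can be computed
    # explicitly; this is where custom losses or gradient tweaks would go.
    with tf.GradientTape() as tape:
        logits = model(images, training=True)
        loss = loss_fn(labels, logits)
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return loss


# Random placeholder batch standing in for real images and labels.
images = tf.random.uniform((8, 32, 32, 3))
labels = tf.random.uniform((8,), maxval=10, dtype=tf.int32)
loss = train_step(images, labels)
print(float(loss))
```

For multi-GPU training on a single machine, TensorFlow provides `tf.distribute.MirroredStrategy`. With `model.fit`, creating and compiling the model inside `strategy.scope()` is usually enough, while a custom loop like this one also needs to dispatch its step through `strategy.run`.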

3. PyTorch

- Beginners:

PyTorch, developed by Facebook, is another deep learning framework widely used for building neural networks. Its simple, Pythonic style makes it easy for beginners to grasp the basics of model creation and training. Beginners will appreciate how easily PyTorch lets them create simple image classification models without much technical overhead.

- Advanced:

Advanced users can take advantage of PyTorch's dynamic computation graph, which allows greater flexibility when building complex architectures, custom loss functions, and optimizers. It's a great choice for researchers, as PyTorch makes it easy to experiment with cutting-edge approaches such as Vision Language Models, Generative Adversarial Networks (GANs), and deep reinforcement learning. Thanks to its efficient memory management and GPU support, it also excels at handling large datasets.
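The sketch below illustrates both points: a tiny CNN whose `forward` method uses ordinary Python control flow (possible because PyTorch builds the graph dynamically on each call), and a hand-written loss function. It assumes a recent PyTorch install; the architecture, the 32x32 input size, and the `smoothed_cross_entropy` helper are hypothetical illustrations. The loss is label-smoothed cross-entropy, which PyTorch also offers built in through `F.cross_entropy(..., label_smoothing=...)`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyCNN(nn.Module):
    """A minimal CNN for 3-channel images and 10 hypothetical classes."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 16, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.fc = nn.Linear(32, num_classes)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.relu(self.conv2(x))
        # Plain Python control flow works because the graph is rebuilt
        # dynamically on every forward pass.
        if x.shape[-1] > 8:
            x = F.max_pool2d(x, 2)
        x = x.mean(dim=(2, 3))  # global average pooling
        return self.fc(x)


def smoothed_cross_entropy(logits, targets, smoothing: float = 0.1):
    """Hypothetical custom loss: label-smoothed cross-entropy written by hand."""
    num_classes = logits.size(-1)
    log_probs = F.log_softmax(logits, dim=-1)
    with torch.no_grad():
        # Spread `smoothing` uniformly over all classes; the true class also
        # keeps the remaining 1 - smoothing probability mass.
        soft = torch.full_like(log_probs, smoothing / num_classes)
        soft.scatter_(1, targets.unsqueeze(1), 1.0 - smoothing + smoothing / num_classes)
    return -(soft * log_probs).sum(dim=-1).mean()


model = TinyCNN()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

images = torch.randn(8, 3, 32, 32)  # placeholder batch
labels = torch.randint(0, 10, (8,))

optimizer.zero_grad()
loss = smoothed_cross_entropy(model(images), labels)
loss.backward()  # autograd walks back through the graph built in forward()
optimizer.step()
print(loss.item())
```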

4. Keras

- Beginners:

Keras is a high-level neural network API that has traditionally run on top of TensorFlow (Keras 3 also supports JAX and PyTorch backends). It's perfect for beginners since it abstracts away much of the complexity involved in building deep learning models. With Keras, you can quickly prototype models for tasks like image classification, object detection, or even more complex tasks like segmentation without needing extensive knowledge of deep learning algorithms.
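A quick prototype in Keras can take only a few lines. This sketch assumes TensorFlow 2.x with its bundled Keras (`from tensorflow import keras`) and uses random arrays in place of a real dataset; the 28x28 grayscale input and the layer sizes are arbitrary choices.

```python
import numpy as np
from tensorflow import keras

# A quick image-classification prototype with the Sequential API.
model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),                # e.g. 28x28 grayscale images
    keras.layers.Conv2D(8, 3, activation="relu"),
    keras.layers.Flatten(),
    keras.layers.Dense(10, activation="softmax"),  # 10 hypothetical classes
])
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)

# Random placeholder data; swap in real images and integer labels.
x = np.random.rand(32, 28, 28, 1).astype("float32")
y = np.random.randint(0, 10, size=(32,))
history = model.fit(x, y, epochs=1, batch_size=8, verbose=0)
print(history.history["loss"])
```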

- Advanced:

For more experienced developers, Keras remains a useful tool for rapid prototyping before diving into deeper customization. While it simplifies the process, Keras also lets users scale their projects by integrating directly with TensorFlow, giving advanced users the control to fine-tune models and optimize performance on large datasets.

5. PaddlePaddle (PaddleOCR for Optical Character Recognition)

- Beginners:

PaddlePaddle, developed by Baidu, offers an easy way to tackle Optical Character Recognition (OCR) tasks through its PaddleOCR toolkit. Beginners can quickly set up OCR models to extract text from images with minimal code. The simple API makes it easy to apply pre-trained models to your own projects, such as scanning documents or reading text from images in real time.

- Advanced:

Experienced users can benefit from PaddleOCR's flexibility by customizing architectures and training models on their own datasets. The toolkit supports fine-tuning for specific OCR tasks, such as multilingual text recognition or handwritten text extraction.

PaddlePaddle also interoperates with other deep learning ecosystems (for example, models can be exported to ONNX with Paddle2ONNX), leaving room for advanced experimentation and development in complex pipelines.

6. Labeling Instruments (e.g., Labelbox, Supervisely)

- Beginners:

Labeling tools are essential for creating annotated datasets, especially for supervised learning tasks in computer vision. Tools like Labelbox and Supervisely simplify image annotation by offering intuitive user interfaces, making it easier for beginners to create training datasets. Whether you're working on simple object detection or more advanced segmentation tasks, these tools help you get started with accurate data labeling.

- Advanced:

For experienced professionals working with large-scale datasets, labeling tools like Supervisely offer automation features, such as pre-annotation or AI-assisted labeling, which significantly speed up the process. These tools also integrate with machine learning pipelines, enabling seamless collaboration across teams and annotation management at scale. Professionals can also take advantage of cloud-based tools for distributed labeling, version control, and dataset management.
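Plugging annotations into a training pipeline often means converting between formats. Export formats differ from tool to tool, so this sketch simply assumes a box exported as pixel corner coordinates `(x_min, y_min, x_max, y_max)` and converts it into the normalized `class x_center y_center width height` line used by YOLO-style training pipelines. The function name `to_yolo_line` and the example values are hypothetical.

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO label line.

    YOLO-style label files store one object per line as
    `class x_center y_center width height`, normalised to [0, 1]
    by the image width and height.
    """
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical exported annotation: one object of class 0 in a 640x480 image.
print(to_yolo_line(0, (100, 120, 300, 360), 640, 480))
# prints "0 0.312500 0.500000 0.312500 0.500000"
```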

7. NVIDIA CUDA and cuDNN

- Beginners:

CUDA is a parallel computing platform and programming model developed by NVIDIA, while cuDNN is a GPU-accelerated library of primitives for deep neural networks. These tools may seem technical to beginners, but their main purpose is to accelerate the training of deep learning models by harnessing GPU power. Setting up CUDA and cuDNN correctly in your training environment can significantly speed up model training, especially when working with frameworks like TensorFlow and PyTorch.

- Advanced:

Experts can harness the full power of CUDA and cuDNN to optimize performance in demanding applications. This includes writing custom CUDA kernels for specific operations, managing GPU memory efficiently, and tuning neural network training for maximum speed and scalability. These tools are essential for developers who work with large datasets and need top-tier performance from their models.
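Most developers use CUDA and cuDNN indirectly through a framework. A minimal sketch with PyTorch, assuming PyTorch is installed: it selects the GPU when CUDA is available (falling back to the CPU otherwise), enables cuDNN's autotuner, and runs a convolution on the chosen device. The layer and tensor sizes are arbitrary.

```python
import torch
import torch.nn as nn

# Pick the GPU if CUDA is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print("cuDNN available:", torch.backends.cudnn.is_available())

# Let cuDNN benchmark convolution algorithms and pick the fastest one for
# the input shapes it sees (most helpful when input shapes stay fixed).
torch.backends.cudnn.benchmark = True

# Move the model's weights and the input data to the same device.
model = nn.Conv2d(3, 16, kernel_size=3, padding=1).to(device)
x = torch.randn(4, 3, 64, 64, device=device)

with torch.no_grad():
    y = model(x)

print(device, tuple(y.shape))
if device.type == "cuda":
    # Memory currently occupied by tensors on this GPU, in MiB.
    print(torch.cuda.memory_allocated(device) / 1024**2, "MiB allocated")
```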

8. YOLO (You Only Look Once)

- Beginners:

YOLO is a fast object detection algorithm that's especially popular for real-time applications. Beginners can use pre-trained YOLO models to quickly detect objects in images or videos with relatively simple code. This ease of use makes YOLO a great entry point for anyone looking to explore object detection without building complex models from scratch.

- Advanced:

Advanced users can fine-tune YOLO models on custom datasets to detect specific objects more accurately. YOLO's smaller variants are lightweight enough to be deployed in resource-constrained environments, like mobile devices, making it a go-to solution for real-time applications. Professionals can also experiment with newer versions of YOLO, adjusting parameters to fit specific project needs.
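One parameter worth understanding when tuning a detector is the overlap threshold used in post-processing. YOLO-style detectors typically predict several overlapping boxes for the same object, and non-maximum suppression (NMS) keeps the highest-scoring box while discarding others that overlap it too much. Libraries ship optimized versions (for example `torchvision.ops.nms`); the NumPy sketch below only illustrates the greedy algorithm, with made-up boxes and scores in `(x1, y1, x2, y2)` format.

```python
import numpy as np


def iou(box, boxes):
    """Intersection-over-union between one box and an array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best box, drop boxes overlapping it too much, repeat."""
    order = np.argsort(scores)[::-1]  # indices sorted by score, highest first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep


boxes = np.array([[10, 10, 110, 110],
                  [12, 12, 112, 112],    # near-duplicate of the first box
                  [200, 200, 260, 260]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # [0, 2]
```

Raising `iou_threshold` keeps more overlapping boxes (useful for crowded scenes), while lowering it suppresses duplicates more aggressively.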

9. DVC (Knowledge Model Management)

- Beginners:

DVC is a version control system for machine learning projects. For beginners, it helps manage and track datasets, model files, and experiments, making it easy to keep everything organized. Git versions your code, while DVC makes sure the data and models you're working on are tracked consistently alongside it, sparing you the hassle of managing data files by hand.

- Advanced:

Expert users can leverage DVC on large-scale projects to enable reproducibility and collaboration across teams. DVC integrates well with existing workflows, making it easier to manage multiple experiments, track changes in large datasets, and compare model results across runs. For complex machine learning pipelines, DVC helps streamline the workflow by keeping everything under version control, ensuring consistency from data collection to model deployment.
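Under the hood, DVC identifies data by content hash: `dvc add` records an MD5 hash in a small `.dvc` file that Git tracks, while the data itself goes into a cache. The sketch below is a simplified, hypothetical illustration of that content-addressed idea in plain Python; it is not DVC's implementation, and the helper names `file_md5` and `add_to_cache` are made up.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large data files never need to fit in memory."""
    h = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def add_to_cache(path: Path, cache_dir: Path) -> str:
    """Copy a file into a content-addressed cache and return its hash.

    Hypothetical helper: the layout (first two hex characters as a
    subdirectory) shows the general idea, not DVC's exact on-disk format.
    """
    digest = file_md5(path)
    target = cache_dir / digest[:2] / digest[2:]
    if not target.exists():  # identical content is stored only once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
    return digest


# Usage: cache a tiny placeholder file inside a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    labels = root / "labels.csv"
    labels.write_text("image,label\nimg001.jpg,cat\n")
    digest = add_to_cache(labels, root / "cache")
    print(digest, (root / "cache" / digest[:2] / digest[2:]).exists())
```

Because the cache key is the content hash, a changed dataset gets a new key, while an unchanged one is never stored twice.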

10. Git and GitHub

- Beginners:

Git and GitHub are essential tools for version control and collaboration. Beginners will find Git useful for managing project history and tracking changes, while GitHub makes it easy to share code with others. If you're just starting out in computer vision, learning Git can help you keep your project workflows organized, collaborate on open-source projects, and become familiar with basic version control techniques.

- Advanced:

Experienced professionals can use Git and GitHub to manage complex research projects, handle contributions from multiple developers, and ensure version consistency in large repositories. GitHub Actions automates workflows such as testing and deploying models, which is especially useful for continuous integration and deployment (CI/CD) in machine learning pipelines. Advanced users can also use Git LFS (Large File Storage) to manage large datasets within their Git projects.

Roundup

Tools like OpenCV and Keras provide easy entry points for beginners, while more advanced options like PyTorch, TensorFlow, and DVC help experienced developers tackle more complex challenges.

GPU acceleration with CUDA, fast object detection with YOLO, and efficient data annotation with labeling tools help you build, train, and deploy powerful models effectively.