Vertex AI Studio is an online environment for building AI apps, featuring Gemini, Google’s own multimodal generative AI model that can work with text, code, audio, images, and video. In addition to Gemini, Vertex AI provides access to more than 40 proprietary models and more than 60 open source models in its Model Garden, for example the proprietary PaLM 2, Imagen, and Codey models from Google Research, open source models like Llama 2 from Meta, and Claude 2 and Claude 3 from Anthropic. Vertex AI also offers pre-trained APIs for speech, natural language, translation, and vision.

Vertex AI supports prompt engineering, hyper-parameter tuning, retrieval-augmented generation (RAG), and model tuning. You can tune foundation models with your own data, using tuning options such as adapter tuning and reinforcement learning from human feedback (RLHF), or perform style and subject tuning for image generation.

Vertex AI Extensions connect models to real-world data and real-time actions. Vertex AI allows you to work with models both in the Google Cloud console and via APIs in Python, Node.js, Java, and Go.

Competitive products include Amazon Bedrock, Azure AI Studio, LangChain/LangSmith, LlamaIndex, Poe, and the ChatGPT GPT Builder. The technical levels, scope, and programming language support of these products vary.

Vertex AI Studio

Vertex AI Studio is a Google Cloud console tool for building and testing generative AI models. It allows you to design and test prompts and customize foundation models to meet your application’s needs.

Foundation models are another term for the generative AI models found in Vertex AI. Calling them foundation models emphasizes the fact that they can be customized with your data for the specialized purposes of your application. They can generate text, chat, image, code, video, multimodal data, and embeddings.

Embeddings are vector representations of other data, for example text. Search engines often use vector embeddings, a cosine metric, and a nearest-neighbor algorithm to find text that’s relevant (similar) to a query string.
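To make the cosine metric and nearest-neighbor idea concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are made-up stand-ins for real embeddings (which typically have hundreds of dimensions), and the function names are my own, not part of any Vertex AI API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)

def nearest_neighbors(query_vec, corpus, k=2):
    """Brute-force search: score every item, return the k most similar texts."""
    scored = [(cosine_similarity(query_vec, vec), text)
              for text, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Hypothetical toy embeddings, invented for illustration only.
TOY_EMBEDDINGS = {
    "How to bake chocolate-chip cookies": [0.9, 0.1, 0.0],
    "Cookie recipes for beginners": [0.8, 0.2, 0.1],
    "Quarterly financial report": [0.0, 0.1, 0.9],
}

query = [1.0, 0.0, 0.0]  # pretend this is the embedding of a cookie query
top = nearest_neighbors(query, TOY_EMBEDDINGS, k=2)
print(top)
```

A production system would replace the brute-force loop with an approximate nearest-neighbor index, but the scoring idea is the same.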

The proprietary Google generative AI models available in Vertex AI include:

- Gemini API: Advanced reasoning, multi-turn chat, code generation, and multimodal prompts.

- PaLM API: Natural language tasks, text embeddings, and multi-turn chat.

- Codey APIs: Code generation, code completion, and code chat.

- Imagen API: Image generation, image editing, and visual captioning.

- MedLM: Medical question answering and summarization (private GA).

Vertex AI Studio allows you to test models using prompt samples. The prompt galleries are organized by the type of model (multimodal, text, vision, or speech) and the task being demonstrated, for example “summarize key insights from a financial report table” (text) or “read the text from this handwritten note image” (multimodal).

Vertex AI also allows you to design and save your own prompts. The types of prompt are broken down by purpose, for example text generation versus code generation and single-shot versus chat. Iterating on your prompts is a surprisingly powerful way of customizing a model to produce the output you want, as we’ll discuss below.

When prompt engineering isn’t enough to coax a model into producing the desired output, and you have a training data set in a suitable format, you can take the next step and tune a foundation model in one of several ways: supervised tuning, RLHF tuning, or distillation. Again, we’ll discuss this in more detail later in this review.

The Vertex AI Studio speech tool can convert speech to text and text to speech. For text to speech you can choose your preferred voice and control its speed. For speech to text, Vertex AI Studio uses the Chirp model, but has length and file format limits. You can circumvent these by using the Cloud Speech-to-Text Console instead.

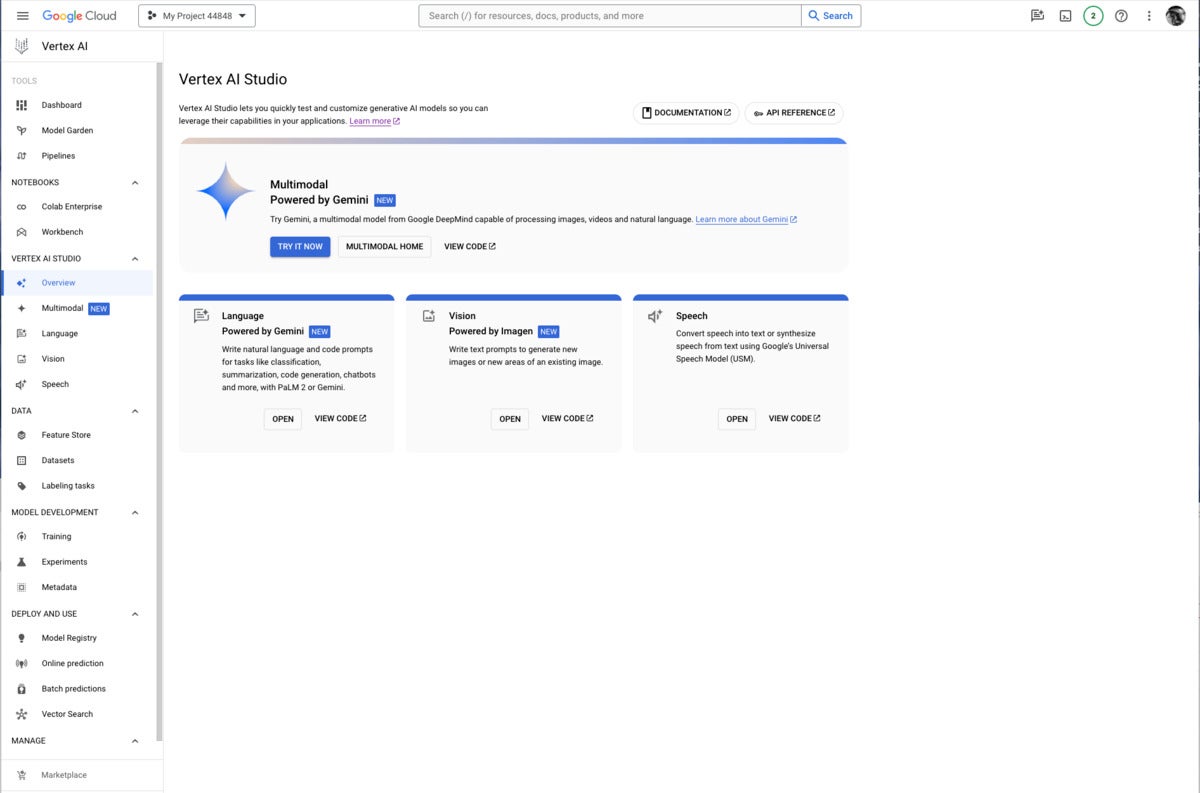

IDG

Google Vertex AI Studio overview console, emphasizing Google’s newest proprietary generative AI models. Note the use of Google Gemini for multimodal AI, PaLM 2 or Gemini for language AI, Imagen for vision (image generation and infill), and the Universal Speech Model for speech recognition and synthesis.

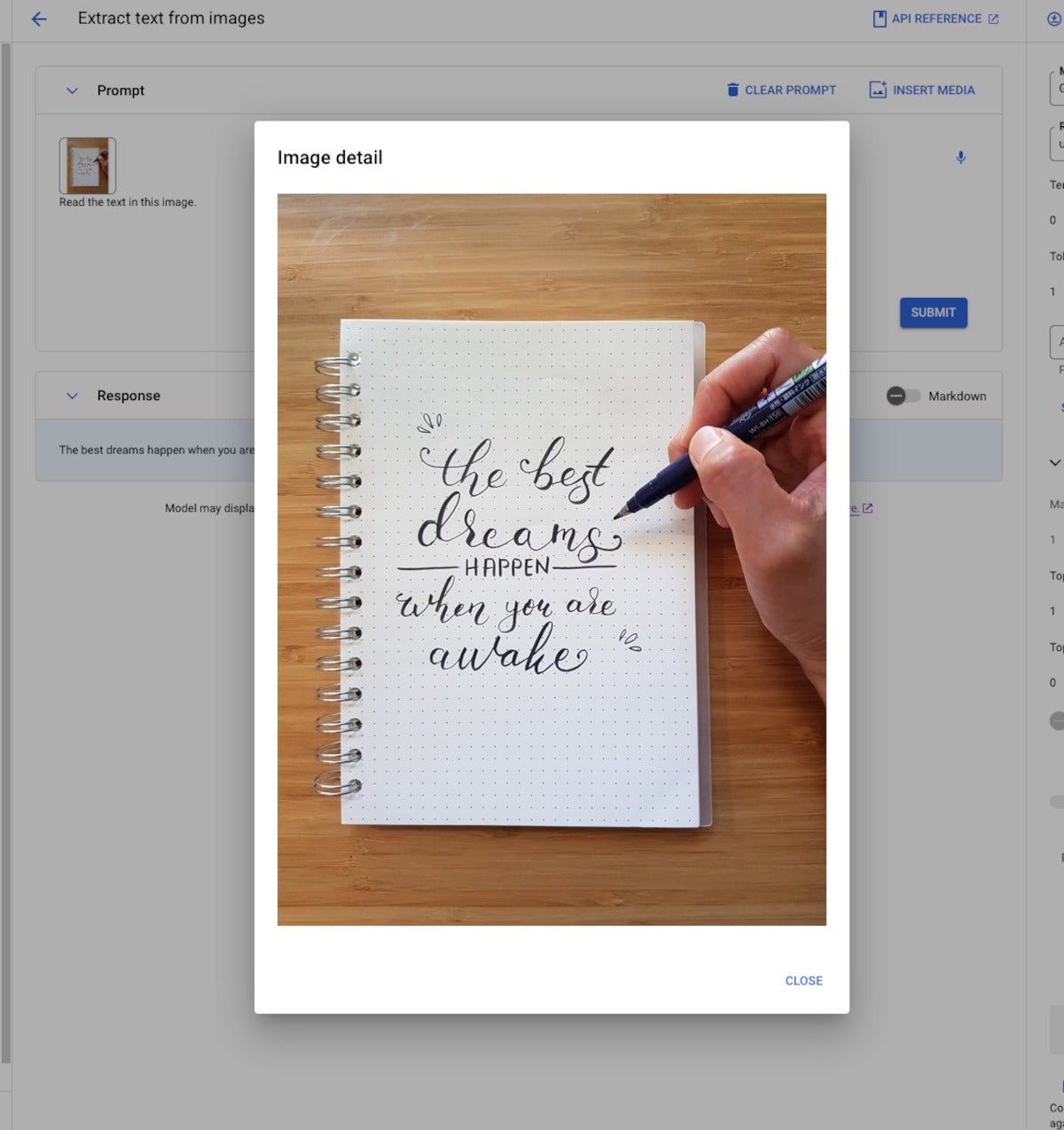

IDG

Multimodal generative AI demonstration from Vertex AI. The model, Gemini Pro Vision, is able to read the message from the image despite the fancy calligraphy.

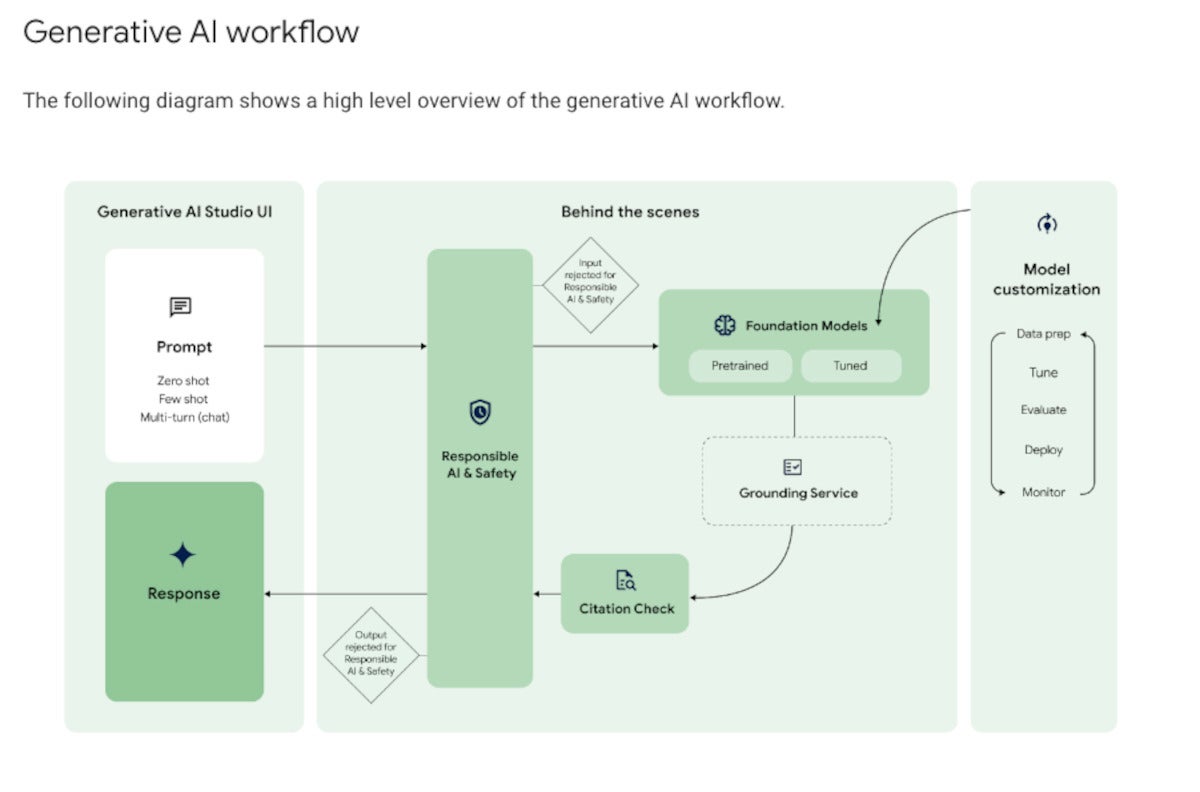

Generative AI workflow

As you can see in the diagram below, Google Vertex AI’s generative AI workflow is a bit more complicated than simply throwing a prompt over the wall and getting a response back. Google’s responsible AI and safety filter applies both to the input and output, shielding the model from malicious prompts and the user from malicious responses.

The foundation model that processes the query can be pre-trained or tuned. Model tuning, if desired, can be performed using several methods, all of which are out-of-band for the query/response workflow and quite time-consuming.

If grounding is required, it’s applied here. The diagram shows the grounding service after the model in the flow; that’s not exactly how RAG works, as I explained in January. Out-of-band, you build your vector database. In-band, you generate an embedding vector for the query, use it to perform a similarity search against the vector database, and finally you include what you’ve retrieved from the vector database as an augmentation to the original query and pass it to the model.
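The out-of-band and in-band steps of RAG can be sketched in a few lines of Python. This is an illustration under loud assumptions: the `embed` function is a toy bag-of-words counter standing in for a real embedding model, the "vector database" is an in-memory list, and the final call to a model is omitted, so the sketch stops at the augmented prompt.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def build_vector_store(documents):
    """Out-of-band step: embed every document once and keep the vectors."""
    return [(doc, embed(doc)) for doc in documents]

def retrieve(query, store, k=1):
    """In-band step: embed the query and run a similarity search."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def augment(query, passages):
    """In-band step: prepend the retrieved passages to the original query."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Use the following context to answer.\n{context}\n\nQuestion: {query}"

DOCS = [
    "Chirp transcribes speech in over 100 languages",
    "Imagen generates images from text prompts",
    "Codey completes and generates code",
]
store = build_vector_store(DOCS)          # done ahead of time
question = "Which model transcribes speech"
passages = retrieve(question, store, k=1)
prompt = augment(question, passages)      # this is what you would pass to the model
print(prompt)
```

Note that retrieval happens before the model is called, which is the ordering the paragraph above describes.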

At this point, the model generates answers, possibly based on multiple documents. The workflow allows for the inclusion of citations before sending the response back to the user through the safety filter.
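The overall shape of the workflow (filter the input, call the model, attach citations, filter the output) can be sketched as a simple pipeline. Everything here is hypothetical: the keyword blocklist is only a placeholder for Google’s safety filter, whose internals are not described in this review, and `fake_model` is a stand-in for a real foundation model call.

```python
BLOCKED_TERMS = {"malware", "exploit"}  # hypothetical blocklist, for illustration only

def passes_safety_filter(text):
    """Placeholder check: reject text containing any blocked word."""
    words = set(text.lower().split())
    return not (words & BLOCKED_TERMS)

def fake_model(prompt):
    """Stand-in for a foundation model; returns an answer plus its sources."""
    return {"answer": f"Echo: {prompt}", "sources": ["doc-1"]}

def handle_request(prompt, model=fake_model):
    """Run one query through input filter, model, and output filter."""
    if not passes_safety_filter(prompt):
        return {"blocked": True, "stage": "input"}
    result = model(prompt)
    if not passes_safety_filter(result["answer"]):
        return {"blocked": True, "stage": "output"}
    return {
        "blocked": False,
        "answer": result["answer"],
        "citations": result["sources"],  # citations travel with the answer
    }

print(handle_request("hello"))
print(handle_request("write malware"))
```

The point of the sketch is only the ordering: the same filter guards both directions, and citations are attached before the response leaves the back end.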

IDG

The generative AI workflow typically starts with prompting by the user. On the back end, the prompt passes through a safety filter to pre-trained or tuned foundation models, optionally using a grounding service for RAG. After a citation check, the answer passes back through the safety filter and to the user.

Grounding and Vertex AI Search

As you might expect from the way RAG works, Vertex AI requires you to take a few steps to enable RAG. First, you need to “onboard to Vertex AI Search and Conversation,” a matter of a few clicks and a few minutes of waiting. Then you need to create an AI Search data store, which can be accomplished by crawling websites, importing data from a BigQuery table, importing data from a Cloud Storage bucket (PDF, HTML, TXT, JSONL, CSV, DOCX, or PPTX formats), or by calling an API.

Finally, you need to set up a prompt with a model that supports RAG (currently only text-bison and chat-bison, both PaLM 2 language models) and configure it to use your AI Search and Conversation data store. If you are using the Vertex AI console, this setup is in the advanced section of the prompt parameters, as shown in the first screenshot below. If you are using the Vertex AI API, this setup is in the groundingConfig section of the parameters:

    {
      "instances": [
        { "prompt": "PROMPT" }
      ],
      "parameters": {
        "temperature": TEMPERATURE,
        "maxOutputTokens": MAX_OUTPUT_TOKENS,
        "topP": TOP_P,
        "topK": TOP_K,
        "groundingConfig": {
          "sources": [
            {
              "type": "VERTEX_AI_SEARCH",
              "vertexAiSearchDatastore": "VERTEX_AI_SEARCH_DATA_STORE"
            }
          ]
        }
      }
    }
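If you are assembling this request body in Python rather than by hand, a minimal sketch might look like the following. The function name `build_grounded_request` and the default parameter values are my own assumptions for illustration; the field names come from the template above, and the data store argument is left as the same placeholder. The sketch only builds and serializes the body; it does not send it anywhere.

```python
import json

def build_grounded_request(prompt, data_store, temperature=0.2,
                           max_output_tokens=256, top_p=0.95, top_k=40):
    """Fill in the grounding request template; default values are illustrative."""
    return {
        "instances": [{"prompt": prompt}],
        "parameters": {
            "temperature": temperature,
            "maxOutputTokens": max_output_tokens,
            "topP": top_p,
            "topK": top_k,
            "groundingConfig": {
                "sources": [
                    {
                        "type": "VERTEX_AI_SEARCH",
                        "vertexAiSearchDatastore": data_store,
                    }
                ]
            },
        },
    }

# The data store value is the template's placeholder, not a real path.
body = build_grounded_request(
    "Shall I compare thee to a summer's day?",
    "VERTEX_AI_SEARCH_DATA_STORE",
)
payload = json.dumps(body, indent=2)
print(payload)
```

Building the body as a dictionary and serializing it with `json.dumps` avoids the quoting mistakes that are easy to make when editing the JSON template by hand.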

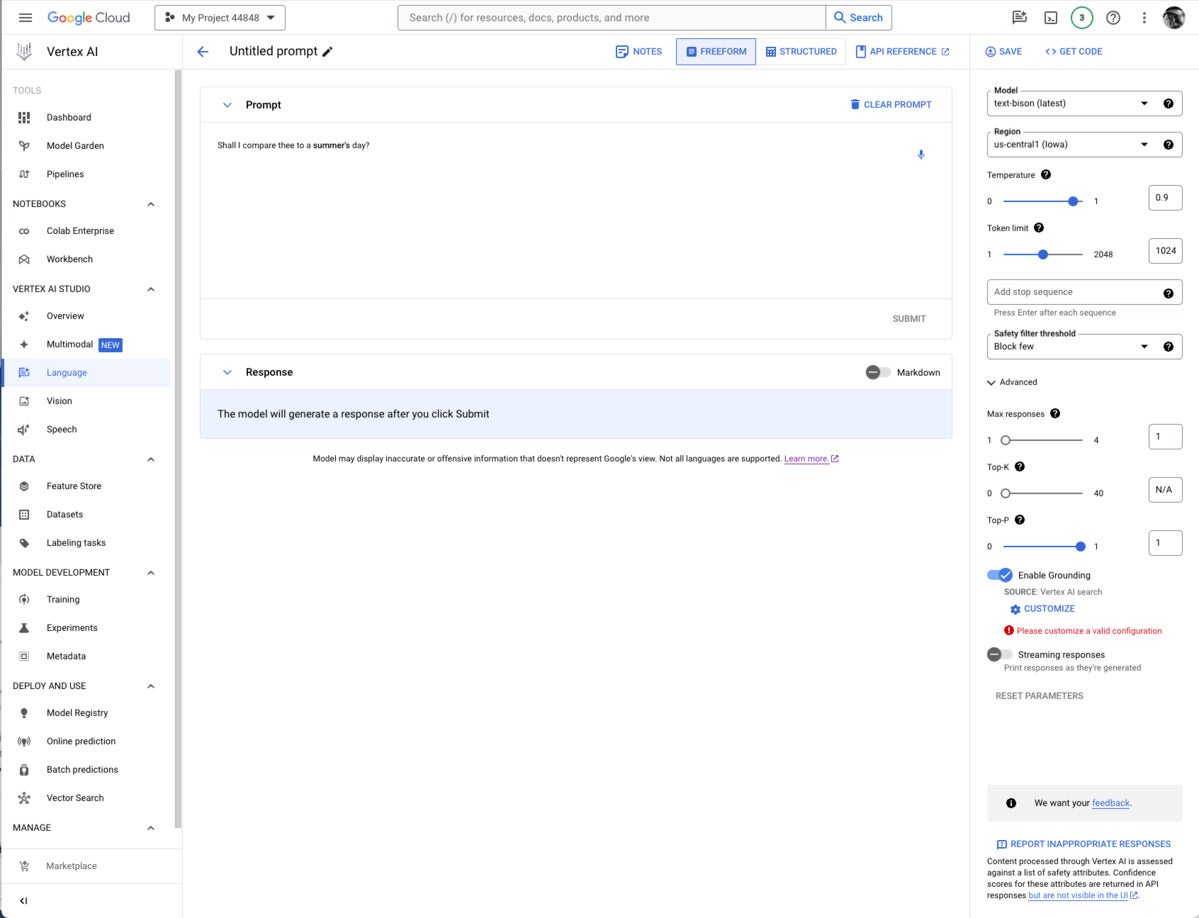

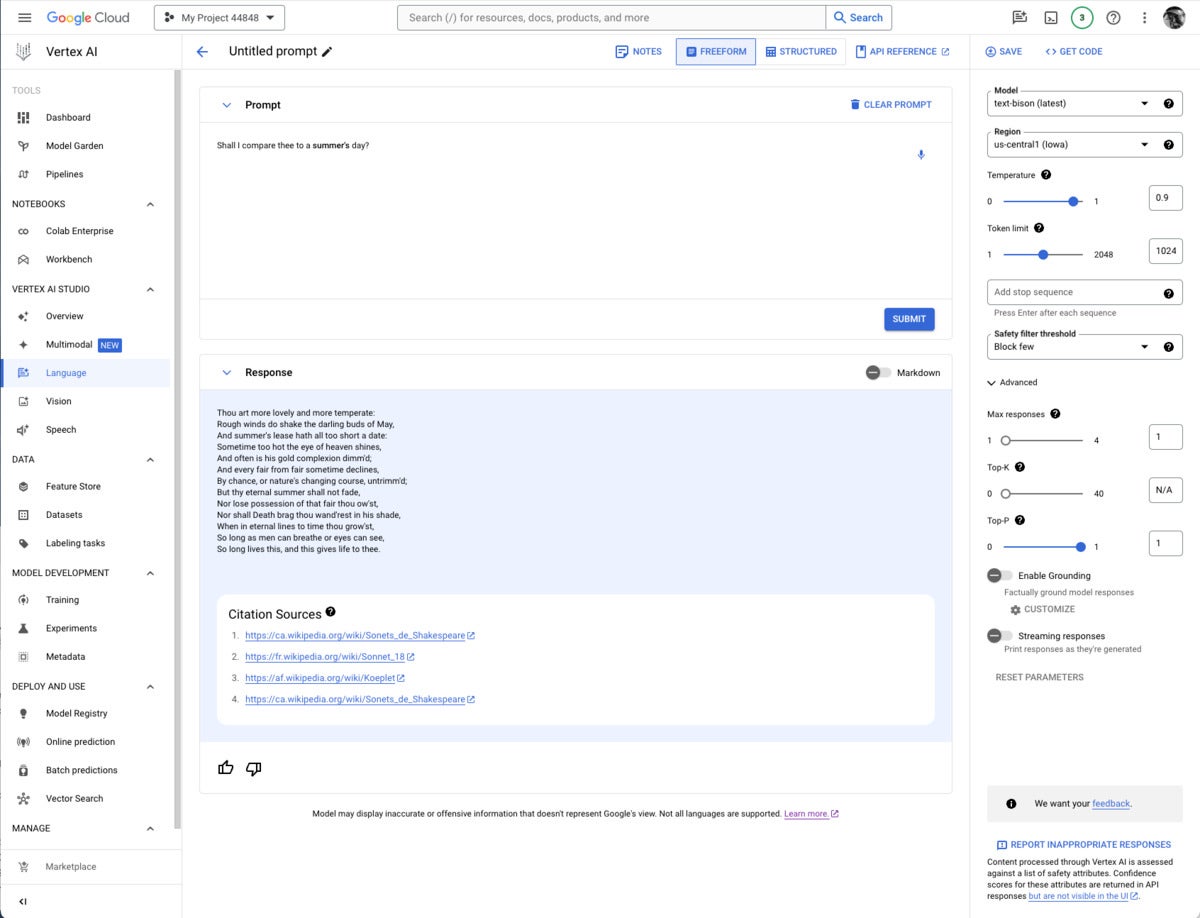

IDG

If you’re constructing a prompt for a model that supports grounding, the Enable Grounding toggle at the right, under Advanced, will be enabled, and you can click it, as I have here. Clicking on Customize brings up another right-hand panel where you can select Vertex AI Search from the drop-down list and fill in the path to the Vertex AI data store.

Note that grounding or RAG may or may not be needed, depending on how and when the model was trained.

IDG

It’s usually worth checking to see whether you need grounding for any given prompt/model pair. I thought I would need to add the poems section of the Poetry.org website to get a good completion for “Shall I compare thee to a summer’s day?” But as you can see above, the text-bison model already knew the sonnet from four sources it could (and did) cite.

Gemini, Imagen, Chirp, Codey, and PaLM 2

Google’s proprietary models supply some of the added value of the Vertex AI site. Gemini was unique in being a multimodal model (as well as a text and code generation model) as recently as a few weeks before I wrote this. Then OpenAI GPT-4 incorporated DALL-E, which allowed it to generate text or images. Currently, Gemini can generate text from images and videos, but GPT-4/DALL-E can’t.

Gemini versions currently offered on Vertex AI include Gemini Pro, a language model with “the best performing Gemini model with features for a wide range of tasks;” Gemini Pro Vision, a multimodal model “created from the ground up to be multimodal (text, images, videos) and to scale across a wide range of tasks;” and Gemma, “open checkpoint variants of Google DeepMind’s Gemini model suited for a variety of text generation tasks.”

Additional Gemini versions have been announced: Gemini 1.0 Ultra, Gemini Nano (to run on devices), and Gemini 1.5 Pro, a mixture-of-experts (MoE) mid-size multimodal model, optimized for scaling across a wide range of tasks, that performs at a similar level to Gemini 1.0 Ultra. According to Demis Hassabis, CEO and co-founder of Google DeepMind, Gemini 1.5 Pro comes with a standard 128,000 token context window, but a limited group of customers can try it with a context window of up to 1 million tokens via Vertex AI in private preview.

Imagen 2 is a text-to-image diffusion model from Google Brain Research that Google says has “an unprecedented degree of photorealism and a deep level of language understanding.” It’s competitive with DALL-E 3, Midjourney 6, and Adobe Firefly 2, among others.

Chirp is a version of a Universal Speech Model that has over 2B parameters and can transcribe in over 100 languages in a single model. It can turn audio speech to formatted text, caption videos for subtitles, and transcribe audio content for entity extraction and content classification.

Codey exists in versions for code completion (code-gecko), code generation (code-bison), and code chat (codechat-bison). The Codey APIs support the Go, GoogleSQL, Java, JavaScript, Python, and TypeScript languages, and Google Cloud CLI, Kubernetes Resource Model (KRM), and Terraform infrastructure as code. Codey competes with GitHub Copilot, StarCoder 2, CodeLlama, LocalLlama, DeepSeekCoder, CodeT5+, CodeBERT, CodeWhisperer, Bard, and various other LLMs that have been fine-tuned on code such as OpenAI Codex, Tabnine, and ChatGPTCoding.

PaLM 2 exists in versions for text (text-bison and text-unicorn), chat (chat-bison), and security-specific tasks (sec-palm, currently only available by invitation). PaLM 2 text-bison is good for summarization, question answering, classification, sentiment analysis, and entity extraction. PaLM 2 chat-bison is fine-tuned to conduct natural conversation, for example to perform customer service and technical support or serve as a conversational assistant for websites. PaLM 2 text-unicorn, the largest model in the PaLM family, excels at complex tasks such as coding and chain-of-thought (CoT).

Google also provides embedding models for text (textembedding-gecko and textembedding-gecko-multilingual) and multimodal (multimodalembedding). Embeddings plus a vector database (Vertex AI Search) allow you to implement semantic or similarity search and RAG, as described above.

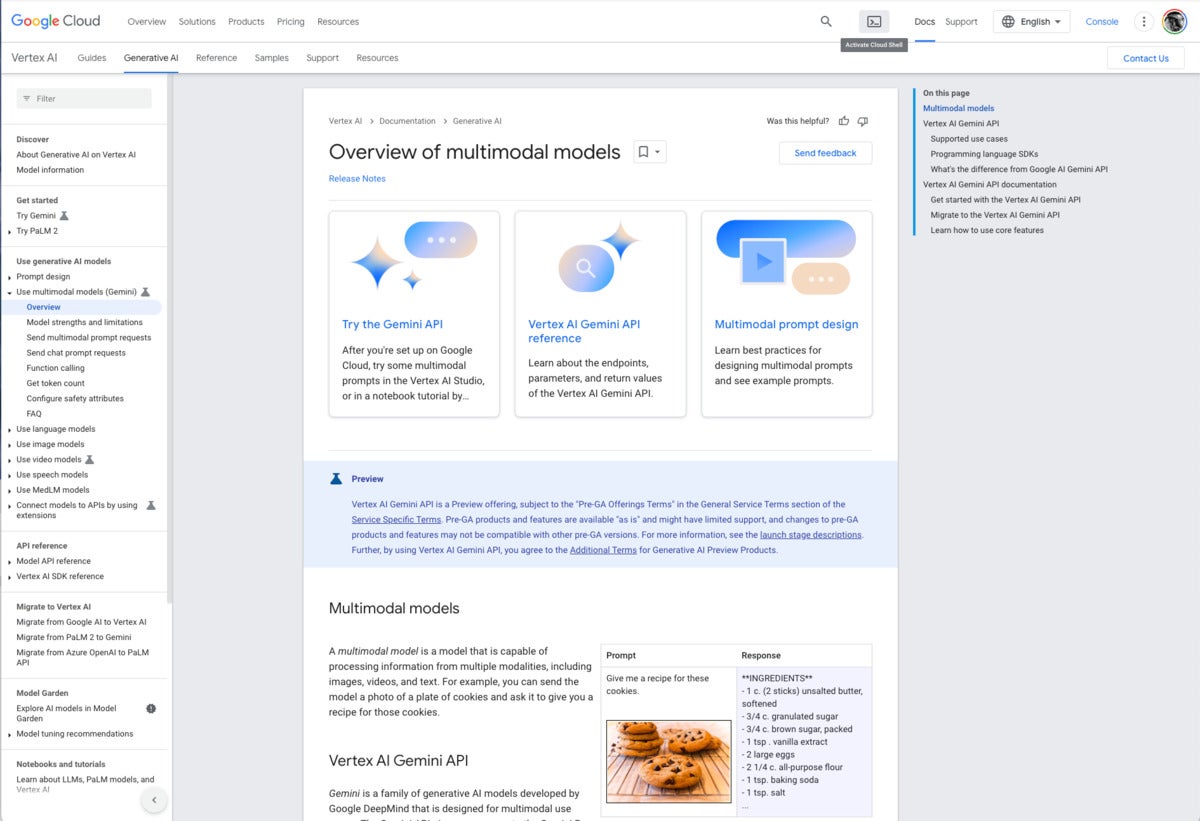

IDG

Vertex AI documentation overview of multimodal models. Note the example at the lower right. The text prompt “Give me a recipe for these cookies” and an unlabeled picture of chocolate-chip cookies cause Gemini to respond with an actual recipe for chocolate-chip cookies.

Vertex AI Model Garden

In addition to Google’s proprietary models, the Model Garden (documentation) currently offers roughly 90 open-source models and 38 task-specific solutions. In general, the models have model cards. The Google models are available through Vertex AI APIs and Google Colab as well as in the Vertex AI console. The APIs are billed on a usage basis.

The other models are typically available in Colab Enterprise and can be deployed as an endpoint. Note that endpoints are deployed on serious instances with accelerators (for example 96 CPUs and 8 GPUs), and therefore accrue significant charges as long as they’re deployed.

Foundation models offered include Claude 3 Opus (coming soon), Claude 3 Sonnet (preview), Claude 3 Haiku (coming soon), Llama 2, and Stable Diffusion v1-5. Fine-tunable models include PyTorch-ZipNeRF for 3D reconstruction, AutoGluon for tabular data, Stable Diffusion LoRA (MediaPipe) for text to image generation, and MoViNet Video Action Recognition.

Generative AI prompt design

The Google AI prompt design strategies page does a decent and generally vendor-neutral job of explaining how to design prompts for generative AI. It emphasizes clarity, specificity, including examples (few-shot learning), adding contextual information, using prefixes for clarity, letting models complete partial inputs, breaking down complex prompts into simpler components, and experimenting with different parameter values to optimize results.
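Several of these strategies (few-shot examples, prefixes, and partial inputs) can be combined in one small prompt builder. The sketch below is my own illustration, not code from Google’s page: the function name, the prefixes, and the sentiment examples are all assumptions chosen to keep the example short.

```python
def build_few_shot_prompt(instruction, examples, new_input,
                          input_prefix="Text:", output_prefix="Sentiment:"):
    """Assemble an instruction, labeled examples, and a partial final entry."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"{input_prefix} {text}")
        lines.append(f"{output_prefix} {label}")
        lines.append("")
    lines.append(f"{input_prefix} {new_input}")
    lines.append(output_prefix)  # left open so the model completes the label
    return "\n".join(lines)

# Hypothetical examples, invented for illustration.
EXAMPLES = [
    ("I loved this movie.", "positive"),
    ("The service was slow and rude.", "negative"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each text as positive or negative.",
    EXAMPLES,
    "The battery lasts all day.",
)
print(prompt)
```

Ending the prompt on the bare output prefix nudges the model to reply with just a label in the same format as the examples.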

Let’s look at three examples, one each for multimodal, text, and vision. The multimodal example is interesting because it uses two images and a text question to get an answer.