new_my_likes

Combine the new and old data:

deduped_my_likes
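As a minimal sketch of that combine-and-dedupe step, the example below stacks two small data frames and keeps one row per post. It assumes both data frames share a URL column that uniquely identifies each post; the toy data and the choice of bind_rows() plus distinct() are illustrative assumptions, not necessarily the exact code used to build deduped_my_likes.

```r
library(dplyr)

# Toy stand-ins for the saved likes and a freshly downloaded batch (illustrative only)
my_likes <- data.frame(
  URL  = c("https://bsky.app/post/a", "https://bsky.app/post/b"),
  Post = c("Older post A", "Older post B")
)
new_my_likes <- data.frame(
  URL  = c("https://bsky.app/post/c", "https://bsky.app/post/b"),
  Post = c("Newer post C", "Older post B")
)

# Stack the new rows on top of the old ones, then keep the first row seen for each URL
deduped_my_likes <- bind_rows(new_my_likes, my_likes) |>
  distinct(URL, .keep_all = TRUE)

deduped_my_likes
```

Putting the new rows first means the newest likes end up at the top of the combined data frame.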

And, finally, save the updated data by overwriting the old file:

rio::export(deduped_my_likes, 'my_likes.parquet')

Step 4. View and search your data the conventional way

I like to create a version of this data specifically to use in a searchable table. It includes a link at the end of each post’s text to the original post on Bluesky, letting me easily view any images, replies, parents, or threads that aren’t in a post’s plain text. I also remove some columns I don’t need in the table.

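my_likes_for_table <- deduped_my_likes |>
  mutate(
    # Append a ">>" link to the original Bluesky post
    Post = str_glue("{Post} <a target='_blank' href='{URL}'><strong> >></strong></a>"),
    # Show a shortened, clickable version of any external URL
    ExternalURL = ifelse(!is.na(ExternalURL), str_glue("<a target='_blank' href='{ExternalURL}'>{substr(ExternalURL, 1, 25)}...</a>"), "")
  ) |>
  select(Post, Name, CreatedAt, ExternalURL)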

Here’s one way to create a searchable HTML table of that data, using the DT package:

DT::datatable(my_likes_for_table, rownames = FALSE, filter = "top", escape = FALSE,
  options = list(
    pageLength = 25, autoWidth = TRUE, filter = "top",
    lengthMenu = c(25, 50, 75, 100), searchHighlight = TRUE,
    search = list(regex = TRUE)
  )
)

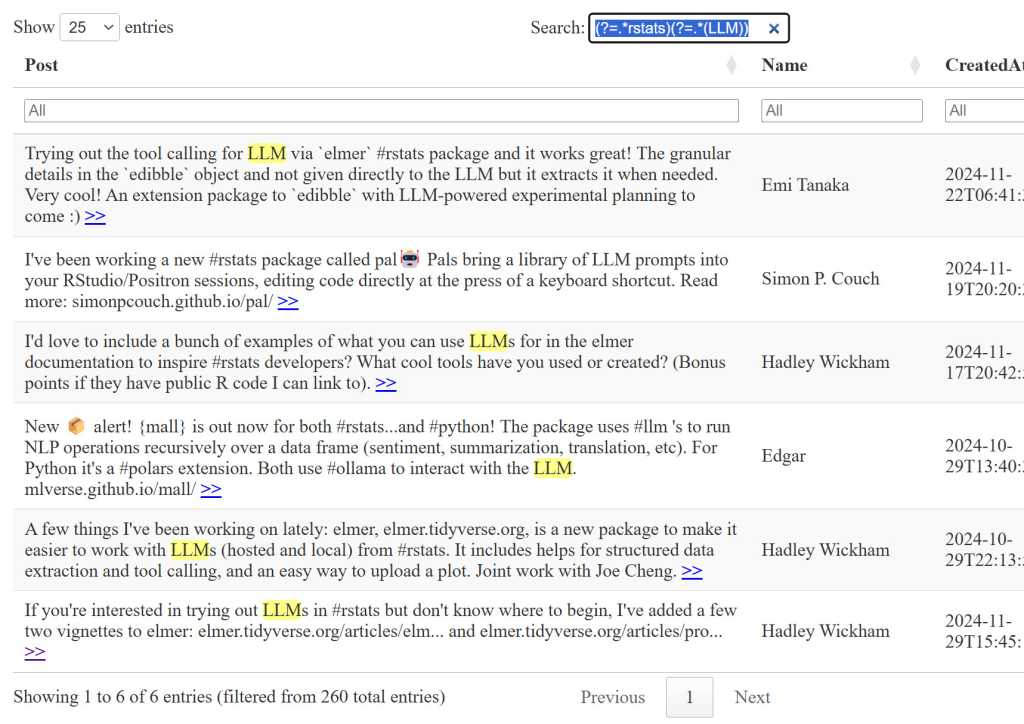

This table has a table-wide search box at the top right and search filters for each column, so I can search for two terms in my table, such as the #rstats hashtag in the main search bar and then any post where the text contains LLM (the table’s search isn’t case sensitive) in the Post column filter bar. Or, because I enabled regular expression searching with the search = list(regex = TRUE) option, I could use a single regexp lookahead pattern (?=.*rstats)(?=.*(LLM)) in the search box.

IDG

Generative AI chatbots like ChatGPT and Claude can be quite good at writing complex regular expressions. And with matching text highlights turned on in the table, it will be easy for you to see whether the regexp is doing what you want.
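If you want to check a lookahead pattern before typing it into the table, one option is to try it on a few strings in R first. This is only a rough stand-in: the DT search box runs in the browser’s JavaScript regex engine, while the sketch below uses base R’s grepl() with perl = TRUE, and the sample posts are made up for illustration.

```r
# Made-up sample posts (illustrative only)
posts <- c(
  "New #rstats package for working with an LLM",
  "An llm tutorial for Python users",
  "LLM evaluation tips for #RStats folks",
  "Plain #rstats plotting question"
)

# Each lookahead is checked from the same starting position, so both terms are
# required but can appear in either order; ".*" lets a term sit anywhere in the text
pattern <- "(?=.*rstats)(?=.*LLM)"

# perl = TRUE enables lookaheads; ignore.case mimics the table's case-insensitive search
matches <- grepl(pattern, posts, perl = TRUE, ignore.case = TRUE)
posts[matches]
```

Only the first and third posts contain both terms, so those are the two that come back.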

Query your Bluesky likes with an LLM

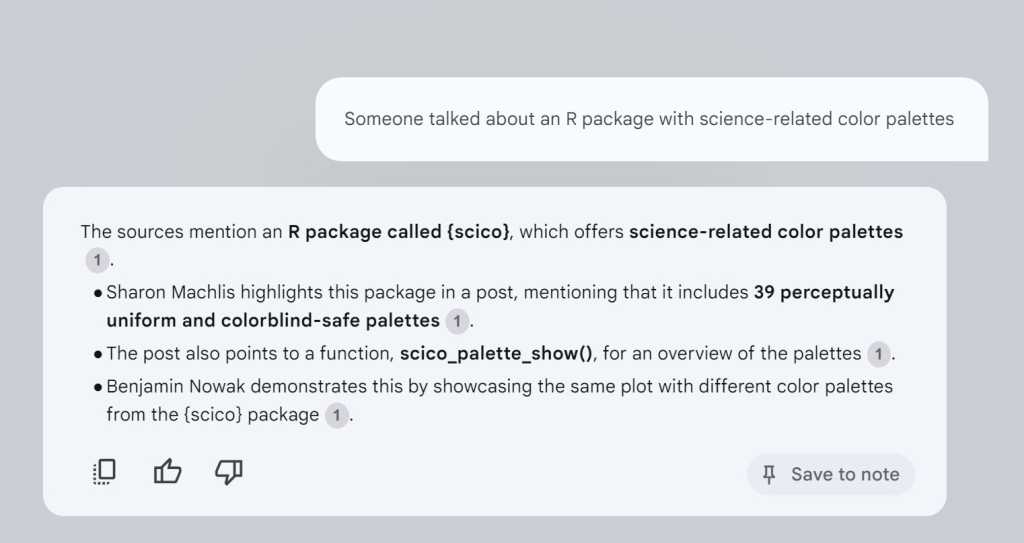

The simplest free way to use generative AI to query these posts is by uploading the data file to a service of your choice. I’ve had good results with Google’s NotebookLM, which is free and shows you the source text for its answers. NotebookLM has a generous file limit of 500,000 words or 200MB per source, and Google says it won’t train its large language models (LLMs) on your data.

The query “Someone talked about an R package with science-related color palettes” pulled up the exact post I was thinking of, one that I had liked and then re-posted with my own comments. And I didn’t have to give NotebookLM my own prompts or instructions to tell it that I wanted to 1) use only that document for answers, and 2) see the source text it used to generate its response. All I had to do was ask my question.


I formatted the data to be a bit more useful and less wasteful by limiting CreatedAt to dates without times, keeping the post URL as a separate column (instead of a clickable link with added HTML), and deleting the external URLs column. I saved that slimmer version as a .txt and not .csv file, since NotebookLM doesn’t handle .csv extensions.

my_likes_for_ai <- deduped_my_likes |>
  # Keep only the date portion (first 10 characters) of CreatedAt
  mutate(CreatedAt = substr(CreatedAt, 1, 10)) |>
  select(Post, Name, CreatedAt, URL)

rio::export(my_likes_for_ai, "my_likes_for_ai.txt")

After uploading your likes file to NotebookLM, you can ask questions right away once the file is processed.


If you really wanted to query the document within R instead of using an external service, one option is the Elmer Assistant, a project on GitHub. It should be fairly straightforward to modify its prompt and source info for your needs. However, I haven’t had great luck running this locally, even though I have a fairly robust Windows PC.

Update your likes by scheduling the script to run automatically

In order to be useful, you’ll need to keep the underlying “posts I’ve liked” data up to date. I run my script manually on my local machine periodically when I’m active on Bluesky, but you can also schedule the script to run automatically every day or once a week. Here are three options:

- Run a script locally. If you’re not too worried about your script always running on an exact schedule, tools such as taskscheduleR for Windows or cronR for Mac or Linux can help you run your R scripts automatically.

- Use GitHub Actions. Johannes Gruber, the author of the atrrr package, describes how he uses free GitHub Actions to run his R Bloggers Bluesky bot. His instructions can be modified for other R scripts.

- Run a script on a cloud server. Or you could use an instance on a public cloud such as DigitalOcean plus a cron job.

You may want a version of your Bluesky likes data that doesn’t include every post you’ve liked. Sometimes you may click like just to acknowledge you saw a post, or to encourage the author that people are reading, or because you found the post amusing but otherwise don’t expect you’ll want to find it again.

However, a caution: It can get onerous to manually mark bookmarks in a spreadsheet if you like a lot of posts, and you need to be committed to keeping it up to date. There’s nothing wrong with searching through your entire database of likes instead of curating a subset with “bookmarks.”

That said, here’s a version of the process I’ve been using. For the initial setup, I suggest using an Excel or .csv file.

Step 1. Import your likes into a spreadsheet and add columns

I’ll start by importing the my_likes.parquet file and adding empty Bookmark and Notes columns, and then saving that to a new file.

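likes_w_bookmarks <- rio::import("my_likes.parquet") |>
  # Add empty character columns; Bookmark ends up first, followed by Notes
  mutate(Notes = as.character(""), .before = 1) |>
  mutate(Bookmark = as.character(""), .before = 1)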

rio::export(likes_w_bookmarks, "likes_w_bookmarks.xlsx")

After some experimenting, I opted to have a Bookmark column as characters, where I can add just “T” or “F” in a spreadsheet, and not a logical TRUE or FALSE column. With characters, I don’t have to worry whether R’s Boolean fields will translate properly if I decide to use this data outside of R. The Notes column lets me add text to explain why I might want to find something again.

Next is the manual part of the process: marking which likes you want to keep as bookmarks. Opening this in a spreadsheet is convenient because you can click and drag F or T down multiple cells at a time. If you have a lot of likes already, this may be tedious! You could decide to mark them all “F” for now and start bookmarking manually going forward, which may be less onerous.

Save the file manually again to likes_w_bookmarks.xlsx.

Step 2. Keep your spreadsheet in sync with your likes

After that initial setup, you’ll want to keep the spreadsheet in sync with the data as it gets updated. Here’s one way to implement that.

After updating the deduped_my_likes data, create a bookmark check lookup from the spreadsheet, and then join that with your deduped likes.

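# Lookup of the bookmark decisions and notes already saved in the spreadsheet
bookmark_check <- rio::import("likes_w_bookmarks.xlsx") |>
  select(URL, Bookmark, Notes)

# Join the lookup to the updated likes; new likes get NA for Bookmark and Notes
my_likes_w_bookmarks <- deduped_my_likes |>
  left_join(bookmark_check, by = "URL") |>
  relocate(Bookmark, Notes)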

Now you have a file with the new likes data joined with your existing bookmarks data, with entries at the top having no Bookmark or Notes entries yet. Save that to your spreadsheet file.

rio::export(my_likes_w_bookmarks, "likes_w_bookmarks.xlsx")
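To see what the sync step produces, here is a small sketch with made-up data. It assumes URL is the key shared by the likes data and the bookmark lookup; the needs_review name is a hypothetical helper for this example only.

```r
library(dplyr)

# Illustrative data: two likes already reviewed in the spreadsheet, plus one new like
bookmark_check <- data.frame(
  URL      = c("https://bsky.app/post/a", "https://bsky.app/post/b"),
  Bookmark = c("T", "F"),
  Notes    = c("Color palette package", "")
)
deduped_my_likes <- data.frame(
  URL  = c("https://bsky.app/post/c", "https://bsky.app/post/a", "https://bsky.app/post/b"),
  Post = c("New post C", "Post A", "Post B")
)

# left_join() keeps every like; rows with no match in the lookup get NA
my_likes_w_bookmarks <- deduped_my_likes |>
  left_join(bookmark_check, by = "URL") |>
  relocate(Bookmark, Notes)

# Hypothetical helper: likes that still need a "T" or "F" decision
needs_review <- filter(my_likes_w_bookmarks, is.na(Bookmark))
needs_review
```

A left join is used here so that no like is dropped just because it hasn’t been reviewed yet.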

An alternative to this somewhat manual and intensive process could be using dplyr::filter() on your deduped likes data frame to remove items you know you won’t want again, such as posts mentioning a favorite sports team or posts on certain dates when you know you focused on a topic you don’t need to revisit.
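A rough sketch of that filtering approach is below. The team name, the date range, and the sample posts are all invented for illustration, and it assumes CreatedAt starts with a YYYY-MM-DD date.

```r
library(dplyr)

# Illustrative data; the team name and dates are made up for this example
deduped_my_likes <- data.frame(
  Post      = c("Go Red Sox!", "Handy #rstats tip", "Conference live post"),
  CreatedAt = c("2024-11-02", "2024-11-20", "2024-12-05")
)

likes_to_keep <- deduped_my_likes |>
  filter(
    # Drop posts that mention a sports team (case-insensitive match)
    !grepl("red sox", Post, ignore.case = TRUE),
    # Drop posts from a date range you don't need to revisit
    !between(as.Date(substr(CreatedAt, 1, 10)), as.Date("2024-12-01"), as.Date("2024-12-07"))
  )

likes_to_keep
```

Because the rules live in code, they are applied again each time the likes data is refreshed, with no spreadsheet editing needed.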

Next steps

Want to search your own posts as well? You can pull them via the Bluesky API in a similar workflow using atrrr’s get_skeets_authored_by() function. Once you start down this road, you’ll see there’s a lot more you can do. And you’ll likely have company among R users.