While AI opens up endless new possibilities, it also introduces a whole array of new threats. Generative AI lets malicious actors create deepfakes and fake websites, send spam, and even impersonate your friends and family. This post covers how neural networks are being used for scams and phishing, and, of course, we’ll share tips on how to stay safe. For a more detailed look at AI-powered phishing schemes, check out the full report on Securelist.

Pig butchering, catfishing, and deepfakes

Scammers are using AI bots that pretend to be real people, especially in romance scams. They create fabricated personas and use them to chat with multiple victims at once, building strong emotional connections. This can go on for weeks or even months, starting with light flirting and gradually shifting to discussions about “lucrative investment opportunities”. The long-term personal connection helps dissolve any suspicions the victim might have, but the scam, of course, ends as soon as the victim invests their money in a fraudulent project. This type of fraud is known as “pig butchering”, which we covered in detail in a previous post. While these schemes were once run by huge scam farms in Southeast Asia employing thousands of people, they now increasingly rely on AI.

Neural networks have made catfishing (creating a fake identity or impersonating a real person) much easier. Modern generative neural networks can imitate a person’s appearance, voice, or writing style with a sufficient degree of accuracy. All a scammer needs to do is gather publicly available information about a person and feed that data to the AI. And anything and everything can be useful: photos, videos, public posts and comments, information about relatives, hobbies, age, and so on.

So, if a family member or friend messages you from a new account and, say, asks to borrow money, there’s a good chance it isn’t really your relative or friend. In a situation like that, the best thing to do is reach out to the real person through a different channel (for example, by calling them) and ask them directly whether everything’s okay. Asking a few personal questions that a scammer wouldn’t be able to find online, or even in your past messages, is another smart move.

But convincing text impersonation is only part of the problem: audio and video deepfakes are an even bigger threat. We recently shared how scammers use deepfakes of popular bloggers and crypto investors on social media. These fake celebrities invite followers to “personal consultations” or “exclusive investment chats”, or promise cash prizes and expensive giveaways.

Social media isn’t the only place where deepfakes are being used, though. They’re also being generated for real-time video and audio calls. Earlier this year, a Florida woman lost US$15,000 after believing she was talking to her daughter, who had supposedly been in a car accident. The scammers used a realistic deepfake of her daughter’s voice and even mimicked her crying.

Experts from Kaspersky’s GReAT found offers on the dark web for creating real-time video and audio deepfakes. The price of these services depends on how sophisticated and how long the content needs to be, starting at just US$30 for voice deepfakes and US$50 for videos. Just a couple of years ago, these services cost much more (up to US$20,000 per minute), and real-time generation wasn’t an option.

The listings offer different options: real-time face swapping in video conferences or messaging apps, face swapping for identity verification, or replacing the image coming from a phone or a virtual camera.

Scammers also offer tools for lip-syncing any text in a video, even in foreign languages, as well as voice cloning tools that can change tone and pitch to convey a desired emotion.

However, our experts suspect that many of these dark-web listings may be scams themselves, designed to trick other would-be scammers into paying for services that don’t actually exist.

How to stay safe

- Don’t trust online acquaintances you’ve never met in person. Even if you’ve been chatting for a while and feel like you’ve found a “kindred spirit”, be wary if they bring up crypto, investments, or any other scheme that requires you to send them money.

- Don’t fall for unexpected, tempting offers that seem to come from celebrities or big companies on social media. Always visit their official accounts to double-check the information. Stop if at any point in a “giveaway” you’re asked to pay a fee, tax, or shipping cost, or to enter your credit card details to receive a cash prize.

- If friends or relatives message you with unusual requests, contact them through a different channel, such as by phone. To be safe, ask them about something you discussed the last time you spoke in person. For close friends and family, it’s a good idea to agree in advance on a code word that only the two of you know. If you share your locations with each other, check it to confirm where the person is. And don’t fall for the “hurry up” manipulation: the scammer or AI may tell you the situation is urgent and there’s no time to answer “silly” questions.

- If you have doubts during a video call, ask the person to turn their head to the side or make a complicated hand movement. Deepfakes usually can’t handle such requests without breaking the illusion. Also, if the person isn’t blinking, or their lip movements or facial expressions look strange, that’s another red flag.

- Never read out or otherwise share bank card numbers, one-time codes, or any other confidential information.

An example of a deepfake falling apart when the head turns. Source

Automated calls

Automated calls are an efficient way to trick people without having to talk to them directly. Scammers are using AI to make fake automated calls from banks, mobile carriers, and government agencies. On the other end of the line is just a bot pretending to be a support agent. It feels real because many legitimate companies use automated voice assistants. However, a real company will never call you to say your account has been hacked or ask you for a verification code.

If you get a call like this, the key thing is to stay calm. Don’t fall for scare tactics like “your account has been hacked” or “your money has been stolen”. Just hang up, and call the real company using the official number on its website. Keep in mind that modern scams can involve several people who pass you from one to another. They may call or text from different numbers and pose as bank employees, government officials, or even the police.

Phishing-susceptible chatbots and AI agents

Many people now prefer chatbots like ChatGPT or Gemini to familiar search engines. What could the risks be, you might ask? Well, large language models are trained on user data, and popular chatbots have been known to suggest phishing sites to users. When they perform web searches, AI agents connect to search engines that can also return phishing links.

In a recent experiment, researchers were able to trick the AI agent in Perplexity’s Comet browser with a fake email. The email was supposedly from an investment manager at Wells Fargo, one of the world’s largest banks, but the researchers sent it from a newly created Proton Mail account. It included a link to a real phishing page that had been live for several days but had not yet been flagged as malicious by Google Safe Browsing. While going through the user’s inbox, the AI agent marked the message as a “to-do item from the bank”. Without any further checks, it followed the phishing link, opened the fake login page, and then prompted the user to enter their credentials; it even helped fill out the form! The AI essentially vouched for the phishing page. The user never saw the suspicious sender’s email address or the phishing link itself. Instead, they were taken straight to a password entry page served up by the “helpful” AI assistant.

In the same experiment, the researchers used the AI-powered web development platform Lovable to create a fake website that mimicked a Walmart store. They then visited the site in Comet, something an unsuspecting user could easily do if fooled by a phishing link or ad, and asked the AI agent to buy an Apple Watch. The agent analyzed the fake website, found a “bargain”, added the watch to the cart, entered the address and bank card details saved in the browser, and completed the “purchase” without asking for any confirmation. Had this been a real fraudulent website, the user would have lost a sizeable sum and handed their banking details to the scammers on a silver platter.

Unfortunately, today’s AI agents behave like naive newcomers to the web, easily falling for social engineering. We’ve previously discussed in detail the risks of integrating AI into browsers and how to minimize them. But as a reminder, to avoid becoming the next victim of an overly trusting assistant, you should critically evaluate the information it provides, limit the permissions you give to AI agents, and install a reliable security solution that blocks access to malicious sites.
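
To make “limit the permissions you give to AI agents” more concrete, here is a minimal Python sketch of one possible policy: the agent may never enter credentials or pay without explicit approval, and it may not silently act on instructions from senders you haven’t marked as trusted. This is an illustration under stated assumptions only. The action names (`enter_credentials`, `submit_payment`, and so on), the `AgentAction` type, and the `ask_user` callback are hypothetical and are not the API of Comet or any other real browser.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical action kinds; illustrative only, not any real browser's API.
SENSITIVE_ACTIONS = {"enter_credentials", "autofill_card", "submit_payment"}


@dataclass
class AgentAction:
    kind: str        # what the agent wants to do, e.g. "open_link"
    target_url: str  # the page the action touches
    source: str      # who asked for it, e.g. "user" or "email:someone@example.test"


def requires_confirmation(action: AgentAction, trusted_sources: set[str]) -> bool:
    """Return True if a human must approve the action before the agent proceeds."""
    if action.kind in SENSITIVE_ACTIONS:
        # Credentials and payments always need explicit approval.
        return True
    if action.source not in trusted_sources:
        # Instructions found in email or web content from unknown senders
        # are never acted on silently.
        return True
    return False


def run_action(
    action: AgentAction,
    trusted_sources: set[str],
    ask_user: Callable[[AgentAction], bool],
) -> str:
    """Decide whether the action may run.

    ask_user is a hypothetical callback that should show the person the
    sender and target URL, then return True only if they approve.
    """
    if requires_confirmation(action, trusted_sources) and not ask_user(action):
        return "blocked"
    return "allowed"
```

Under this rule, both steps from the Comet experiments would have paused for approval: following a link in an email from a newly created account (an untrusted source), and completing a payment with saved card details (a sensitive action).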

AI-generated phishing websites

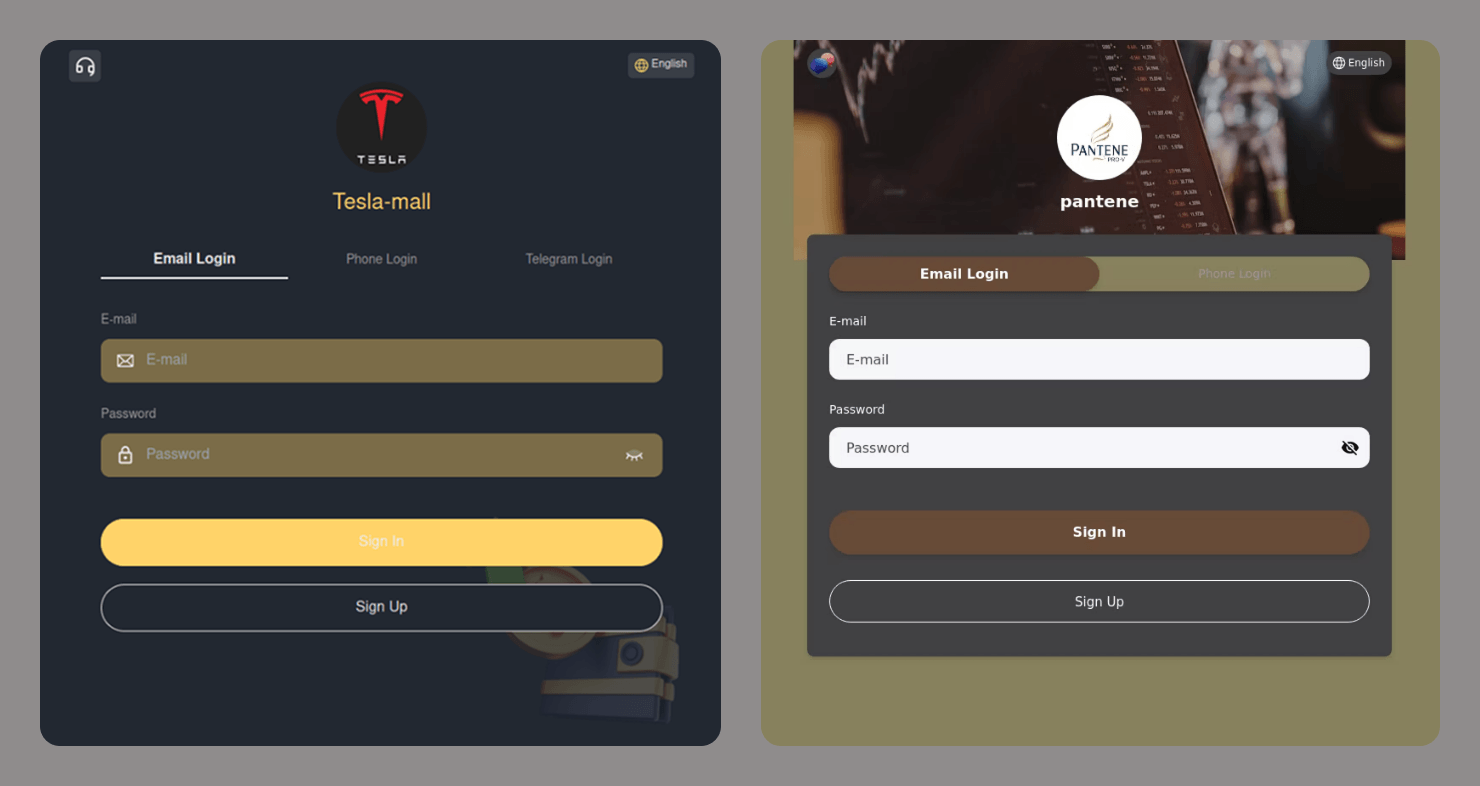

The days of sketchy, poorly designed phishing sites loaded with intrusive ads are long gone. Modern scammers do their best to create realistic fakes that use the HTTPS protocol, display user agreements and cookie consent banners, and have reasonably good designs. AI-powered tools have made building such websites much cheaper and faster, if not nearly instantaneous. You might find a link to one of these sites anywhere: in a text message, an email, on social media, or even in search results.

How to spot a phishing website

- Check the URL, title, and content for typos.

- Find out how long the website’s domain has been registered. You can check this here.

- Pay attention to the language. Is the site trying to scare or accuse you? Is it trying to lure you in, or rushing you to act? Any emotional manipulation is a big red flag.

- Enable the link-checking feature in any of our security solutions.

- If your browser warns you that the connection isn’t secure, leave the site. Legitimate sites use the HTTPS protocol, although many phishing sites now do too, so HTTPS alone doesn’t prove a site is genuine.

- Search for the website’s name online and compare the URL you have with the one in the search results. Be careful, as search engines may show sponsored phishing links at the top of the page. Make sure there’s no “Ad” or “Sponsored” label next to the link.
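
Some of the URL checks above can be partly automated. The Python sketch below is a hypothetical helper, not a feature of any Kaspersky product. It flags a URL that lacks HTTPS, whose host is neither the official domain nor one of its subdomains, or whose last two labels are within two character edits of the official domain (a typo-squat such as `wa1mart.com`). It assumes you already know the official domain and, optionally, the domain’s registration date from a WHOIS/RDAP lookup, which the code does not perform. The two-label heuristic is deliberately naive; a real tool would consult the Public Suffix List to handle domains such as `.co.uk`.

```python
from __future__ import annotations

from datetime import date
from urllib.parse import urlsplit


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # delete ca
                            curr[j - 1] + 1,           # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute
        prev = curr
    return prev[-1]


def host_matches(host: str, official: str) -> bool:
    """True only for the official domain itself or its genuine subdomains."""
    return host == official or host.endswith("." + official)


def check_url(url: str, official_domain: str,
              domain_created: date | None = None,
              today: date | None = None,
              min_age_days: int = 90) -> list[str]:
    """Return a list of warnings; an empty list means no check fired."""
    warnings = []
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if parts.scheme != "https":
        warnings.append("no HTTPS")
    if not host_matches(host, official_domain):
        warnings.append(f"host {host!r} is not {official_domain!r}")
        # Naive assumption: the last two labels are the registered domain.
        tail = ".".join(host.split(".")[-2:])
        if 0 < edit_distance(tail, official_domain) <= 2:
            warnings.append("lookalike of the official domain (possible typo-squat)")
    if domain_created is not None:
        # The registration date must come from an external WHOIS/RDAP lookup.
        age = ((today or date.today()) - domain_created).days
        if age < min_age_days:
            warnings.append(f"domain registered only {age} days ago")
    return warnings
```

For example, `check_url("http://wa1mart.com/deals", "walmart.com")` reports the missing HTTPS, the host mismatch, and the lookalike domain, while `https://walmart.com.secure-login.example/` is flagged only as a host mismatch: the official name appears in the URL, but as a subdomain of someone else’s domain. The 90-day threshold is an arbitrary illustrative default.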
