Deep learning (DL) revolutionised computer vision (CV) and artificial intelligence in general. It was a huge breakthrough (circa 2012) that allowed AI to blast into the headlines and into our lives like never before. ChatGPT, DALL-E 2, autonomous cars, etc.: deep learning is the engine driving these stories. DL is so good that it has reached a point where every solution to a problem involving AI is now most probably being solved using it. Just take a look at any academic conference/workshop and scan through the presented publications. All of them, no matter who, what, where or when, present their solutions with DL.

Now, DL is great, don’t get me wrong. I’m lapping up all the achievements we’ve been witnessing. What a time to be alive! Moreover, deep learning is responsible for placing CV on the map in the industry, as I’ve discussed in previous posts of mine. CV is now a profitable and useful enterprise, so I really have nothing to complain about. (CV used to be a predominantly theoretical field, usually found only in academia, due to the inherent difficulty of processing videos and images.)

Nonetheless, I do have one little qualm with what is happening around us. With the ubiquity of DL, I feel as if creativity in AI has been killed.

To explain what I mean, I’ll first discuss how DL changed the way we do things. I’ll stick to examples in computer vision to make things easier, but you can easily transpose my opinions/examples to other fields of AI.

Traditional Computer Vision

Before the emergence of DL, if you had a task such as object classification/detection in images (where you try to write an algorithm to detect what objects are in an image), you would sit down and work out what features define each and every particular object that you wanted to detect. What are the salient features that define a chair, a bike, a car, etc.? Bikes have two wheels, a handlebar and pedals. Great! Let’s put that into our code: “Machine, look for clusters of pixels that match this definition of a bike wheel, pedal, etc. If you find enough of these features, we have a bicycle in our image!”
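To make the “if you find enough of these features” idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `BIKE_DEFINITION` table, the `looks_like_bike` function and the `min_fraction` threshold are made up for illustration, and the part counts are assumed to come from some earlier, hand-tuned pixel-matching step that is not shown.

```python
# Hypothetical hand-written "bike definition": the parts we expect to find
# and how many of each. All names and numbers here are illustrative.
BIKE_DEFINITION = {"wheel": 2, "handlebar": 1, "pedal": 2}


def looks_like_bike(detected_parts, min_fraction=0.8):
    """Return True if enough of the expected parts were found.

    detected_parts maps a part name to how many candidates an earlier,
    hand-tuned pixel-matching step found in the image.
    """
    expected = sum(BIKE_DEFINITION.values())
    # Count each part only up to the number the definition asks for.
    found = sum(
        min(detected_parts.get(part, 0), count)
        for part, count in BIKE_DEFINITION.items()
    )
    return found / expected >= min_fraction


# A clean side-on photo: every part is visible.
print(looks_like_bike({"wheel": 2, "handlebar": 1, "pedal": 2}))
# A partly occluded bike: one wheel and one pedal are hidden.
print(looks_like_bike({"wheel": 1, "handlebar": 1, "pedal": 1}))
```

The second call fails the check even though a bike is there, which is exactly the kind of brittleness that hand-written definitions run into.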

So, I would take a photo of my bike leaning against my white wall and then feed it to my algorithm. At each iteration of my experiments I would work away by manually fine-tuning my “bike definition” in my code to get my algorithm to detect that particular bike in my image: “Machine, actually this is a better definition of a pedal. Try this one out now.”

Once I started to see things working, I’d take a few more pictures of my bike at different angles and repeat the process on these images until I got my algorithm to work reasonably well on these too.

Then it would be time to ship the algorithm to clients.

Bad idea! It turns out that a simple task like this becomes impossible to do because a bike in a real-world picture has an infinite number of variations. Bikes come in different shapes, sizes and colours, and on top of that you have to add the variations that occur with lighting and weather changes and occlusions from other objects. Not to mention the infinite number of angles at which you can position a bike. All these permutations are too much to handle for us mere humans: “Machine, actually I simply can’t give you all the possible definitions in terms of clusters of pixels of a bike wheel because there are too many parameters for me to deal with manually. Sorry.”

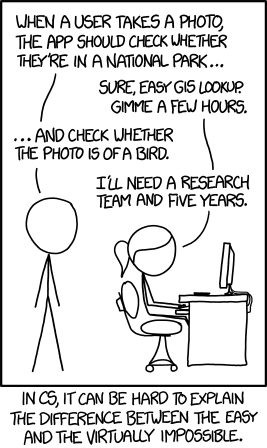

Incidentally, there’s a well-known xkcd cartoon that captures the problem well:

Creativity in Traditional Computer Vision

Now, I’ve simplified the above process greatly and abstracted over a lot of things. But the basic gist is there: the real world was hard for AI to work in, and to create workable solutions you were forced to be creative. Creativity on the part of engineers and researchers revolved around getting to understand the problem exceptionally well and then turning towards an innovative and visionary mind to find a perfect solution.

Algorithms abounded to assist us. For example, one would commonly employ things like edge detection, corner detection, and colour segmentation to simplify images and help with locating our objects. The image below shows you how an edge detector works to “break down” an image:

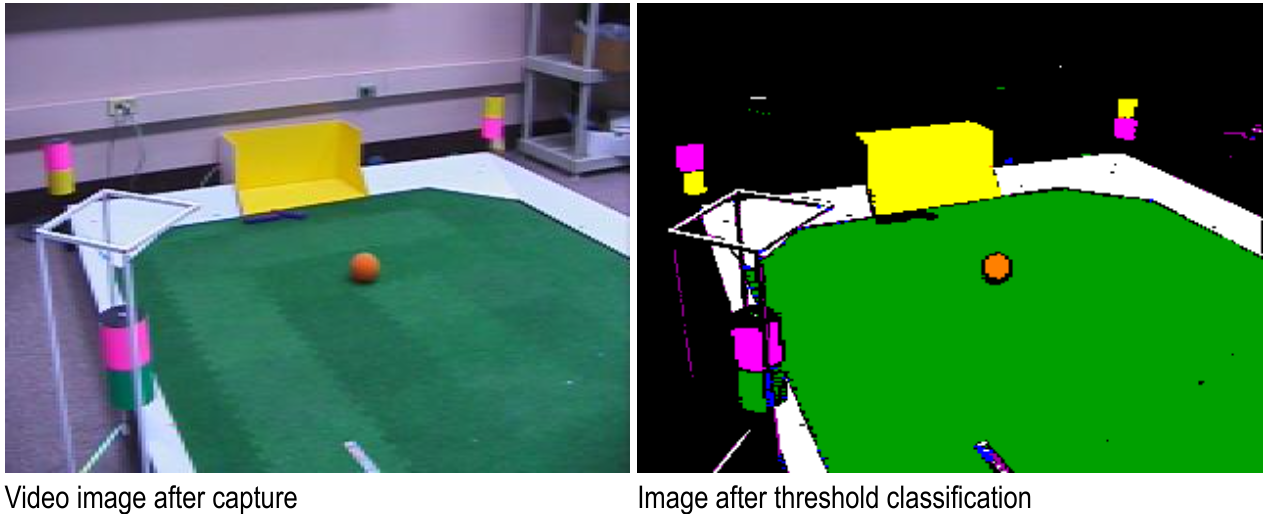
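As a rough illustration of the idea (not any particular published detector), the sketch below marks a pixel as an edge when its brightness differs sharply from its right-hand or lower neighbour. The function name `detect_edges` and the `threshold` value are my own assumptions; real pipelines would typically use something like a Sobel or Canny operator from a library instead.

```python
def detect_edges(image, threshold=50):
    """Mark pixels whose brightness differs sharply from a neighbour.

    image is a list of rows of grayscale values (0-255). This uses plain
    forward differences, a much cruder cousin of operators such as Sobel.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Difference to the right-hand and lower neighbours (0 at borders).
            dx = image[y][x + 1] - image[y][x] if x + 1 < width else 0
            dy = image[y + 1][x] - image[y][x] if y + 1 < height else 0
            if abs(dx) + abs(dy) >= threshold:
                edges[y][x] = 1
    return edges


# A dark 4x4 image with a bright 2x2 square in the lower-right corner.
image = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
for row in detect_edges(image):
    print(row)
```

Only the pixels along the boundary of the bright square are marked, so the rest of the image can be ignored by whatever comes next in the pipeline.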

Colour segmentation works by changing all shades of dominant colours in an image into one shade only, like so:

The second image is much easier to deal with. If you had to write an algorithm for a robot to find the ball, you would now ask the algorithm to look for patches of pixels of only ONE particular shade of orange. You would no longer need to worry about changes in lighting and shading that would affect the colour of the ball (like in the left image) because everything would be uniform. That is, all pixels that you would deal with would be one single colour. And suddenly your definitions of objects that you were trying to locate were not as dense. The number of parameters needed dropped significantly.
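Here is a minimal sketch of that idea in Python, under a few assumptions of mine: the `PALETTE` of dominant colours is hypothetical, pixels are plain `(R, G, B)` tuples, and “nearest” means squared distance in RGB space (in practice people often worked in a colour space such as HSV instead).

```python
# Hypothetical palette of "dominant" colours as (R, G, B) tuples.
PALETTE = {
    "orange": (255, 140, 0),
    "green": (0, 128, 0),
    "white": (255, 255, 255),
}


def nearest_colour(pixel):
    """Return the palette name closest to pixel by squared RGB distance."""
    return min(
        PALETTE,
        key=lambda name: sum((p - q) ** 2 for p, q in zip(pixel, PALETTE[name])),
    )


def segment(image):
    """Replace every pixel with the name of its nearest palette colour."""
    return [[nearest_colour(pixel) for pixel in row] for row in image]


# Two shades of orange (a lit and a shaded part of the ball) above grass.
image = [
    [(250, 150, 20), (200, 100, 10)],
    [(10, 120, 15), (20, 140, 30)],
]
segmented = segment(image)
# After segmentation, finding the ball is an exact match on ONE colour.
ball_pixels = [
    (x, y)
    for y, row in enumerate(segmented)
    for x, colour in enumerate(row)
    if colour == "orange"
]
print(segmented)
print(ball_pixels)
```

Both shades of orange collapse into the single label `"orange"`, so the search for the ball no longer has to account for lighting and shading.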

Machine learning would also be employed. Algorithms like SVM, k-means clustering, random decision forests and Naive Bayes were there at our disposal. You would have to think about which of these would best suit your use-case and how best to optimise them.

And then there were also feature detectors: algorithms that attempted to detect salient features for you, to help you in the process of creating your own definitions of objects. The SIFT and SURF algorithms deserve Oscars for what they did in this respect back in the day.

Probably my favourite algorithm of all time is the Viola-Jones Face Detection algorithm. It is ingenious in its simplicity, and in 2001 it allowed face detection (and more besides) to be performed in real time for the first time. It was a huge breakthrough in those days. You could use this algorithm to detect where faces were in an image and then focus your analysis on that particular area for facial recognition tasks. Problem simplified!

Anyway, all the algorithms were there to assist us in our tasks. When things worked, it was like watching a symphony playing in harmony. This algorithm coupled with this algorithm using this machine learning technique that was then fed through this particular task, etc. It was beautiful. I would go so far as to say that at times it was art.

But even with the assistance of all these algorithms, so much was still done manually, as I described above, and reality was still, at the end of the day, too much to handle. There were too many parameters to deal with. Machines and humans together struggled to get anything meaningful to work.

The Advent of Deep Learning

When DL arrived (circa 2012) it introduced the concept of end-to-end learning where (in a nutshell) the machine is told to learn what to look for with respect to each specific class of object. It works out the most descriptive and salient features for each object all by itself. In other words, neural networks are told to discover the underlying patterns in classes of images. What is the definition of a bike? A car? A washing machine? The machine works this all out for you. Wired magazine puts it this way:

If you want to teach a [deep] neural network to recognize a cat, for instance, you don’t tell it to look for whiskers, ears, fur, and eyes. You simply show it thousands and thousands of photos of cats, and eventually it works things out. If it keeps misclassifying foxes as cats, you don’t rewrite the code. You just keep coaching it.

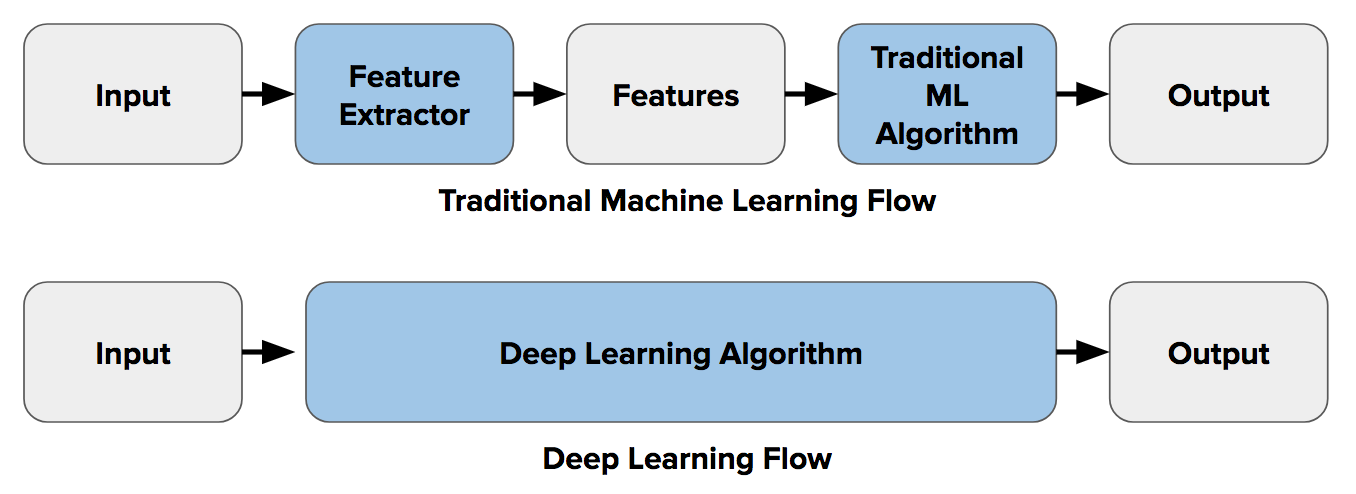

The image below portrays this difference between feature extraction (using traditional CV) and end-to-end learning:

Deep learning works by setting up a neural network that can contain millions or even billions of parameters (the weights that connect its neurons). These parameters are initially “blank”, let’s say. Then, thousands and thousands of images are sent through the network and slowly, over time, the parameters are aligned and adjusted accordingly.
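To give a feel for what “adjusted accordingly” means, here is a deliberately tiny sketch: a single linear unit with two parameters, trained by plain gradient descent. It is nowhere near a deep network, and the function name `train`, the toy data and the `learning_rate` and `epochs` values are all my own assumptions, but the loop has the same shape: start blank, show examples, nudge the parameters to reduce the error.

```python
def train(samples, learning_rate=0.05, epochs=500):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # the "blank" starting parameters
    n = len(samples)
    for _ in range(epochs):
        # Average gradient of the squared error over all samples.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        # Nudge each parameter a little in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1; the model is never told this rule.
samples = [(0, 1), (1, 3), (2, 5), (3, 7)]
w, b = train(samples)
print(round(w, 2), round(b, 2))
```

With these settings the two parameters should settle close to 2 and 1 without anyone writing that rule into the code. A deep network does the same kind of thing with millions of parameters and images instead of four number pairs.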

Previously, we had to adjust these parameters ourselves in one way or another (and not in a neural network), but we could only handle hundreds or thousands of them. We didn’t have the means to manage more.

So, deep learning has given us the possibility to deal with much, much more complex tasks. It has truly been a revolution for AI. The xkcd comic above is no longer relevant. That problem has been pretty much solved.

The Lack of Creativity in DL

Like I said, now when we have a problem to solve, we throw data at a neural network and then get the machine to work out how to solve the problem, and that’s pretty much it! The long and creative computer vision pipelines of algorithms and tasks are gone. We just use deep learning. There are really only two bottlenecks that we have to deal with: the need for data and time for training. If you have these (and money to pay for the electricity required to power your machines), you can do magic.

(In this article of mine I describe when traditional computer vision techniques still do a better job than deep learning. However, the art is dying out.)

Sure, there are still many things that you have control over when opting for a deep neural network solution, e.g. the number of layers, and of course hyper-parameters such as learning rate, batch size, and number of epochs. But once you get these more or less right, further tuning has diminishing returns.

You also have to choose the neural network that best suits your tasks: convolutional, generative, recurrent, and the like. We more or less know, however, which architecture works best for which task.

Let me put it to you this way: so much creativity has been eliminated from AI that there are now automated tools available to solve your problems using deep learning. AutoML by Google is my favourite of these. A person with no background in AI or computer vision can use these tools with ease to get very impressive results. They just need to throw enough data at the thing and the tool works the rest out for them automatically.

I dunno, but that feels kind of boring to me.

Maybe I’m wrong. Maybe that’s just me. I’m still proud to be a computer vision expert, but it seems that a lot of the fun has been sucked out of it.

However, the results that we get from deep learning are not boring at all! No way. Perhaps I should stop complaining, then.
