In this tutorial, you’ll learn how to use Python and the OpenAI API to perform data mining and analysis on your data.

Manually analyzing datasets to extract useful data, or even using simple programs to do the same, can often get complicated and time consuming. Luckily, with the OpenAI API and Python it’s possible to systematically analyze your datasets for interesting information without over-engineering your code and wasting time. This can be used as a universal solution for data analysis, eliminating the need to use different methods, libraries and APIs to analyze different types of data and data points inside a dataset.

Let’s walk through the steps of using the OpenAI API and Python to analyze your data, starting with how to set things up.

Setup

To mine and analyze data through Python using the OpenAI API, install the openai and pandas libraries:

    pip3 install openai pandas

After you’ve done that, create a new folder and create an empty Python file inside your new folder.

Analyzing Text Files

For this tutorial, I thought it would be interesting to make Python analyze Nvidia’s latest earnings call.

Download the latest Nvidia earnings call transcript that I got from The Motley Fool and move it into your project folder.

Then open your empty Python file and add this code.

The code reads the Nvidia earnings transcript that you’ve downloaded and passes it to the extract_info function as the transcript variable.

The extract_info function passes the prompt and transcript as the user input, along with temperature=0.3 and model="gpt-3.5-turbo-16k". It uses the “gpt-3.5-turbo-16k” model because that model can process large texts such as this transcript. The code gets the response using the openai.ChatCompletion.create endpoint (the interface provided by versions of the openai package before 1.0) and passes the prompt and transcript variables as user input:

    completions = openai.ChatCompletion.create(
        model="gpt-3.5-turbo-16k",
        messages=[
            {"role": "user", "content": prompt+"\n\n"+text}
        ],
        temperature=0.3,
    )

The full input will look like this:

    Extract the following information from the text:
    Nvidia's revenue
    What Nvidia did this quarter
    Remarks about AI

    Nvidia earnings transcript goes here

Now, if we pass the input to the openai.ChatCompletion.create endpoint, the full output will look like this:

    {
      "choices": [
        {
          "finish_reason": "stop",
          "index": 0,
          "message": {
            "content": "Actual response",
            "role": "assistant"
          }
        }
      ],
      "created": 1693336390,
      "id": "request-id",
      "model": "gpt-3.5-turbo-16k-0613",
      "object": "chat.completion",
      "usage": {
        "completion_tokens": 579,
        "prompt_tokens": 3615,
        "total_tokens": 4194
      }
    }

As you can see, it returns the text response as well as the token usage of the request, which can be useful if you’re tracking your expenses and optimizing your costs. But since we’re only interested in the response text, we get it by specifying the completions.choices[0].message.content response path.
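To make the response path concrete, here is a minimal sketch that pulls the reply text and the token counts out of a response shaped like the one above. The `response` dictionary is a hand-written stand-in for a real API reply, and `summarize_response` is a hypothetical helper name, not part of the openai library.

```python
# Hand-written stand-in for an API reply, mirroring the structure shown above.
response = {
    "choices": [
        {
            "finish_reason": "stop",
            "index": 0,
            "message": {"content": "Actual response", "role": "assistant"},
        }
    ],
    "model": "gpt-3.5-turbo-16k-0613",
    "usage": {"completion_tokens": 579, "prompt_tokens": 3615, "total_tokens": 4194},
}


def summarize_response(resp):
    """Return the reply text and token counts from a chat completion response."""
    choice = resp["choices"][0]
    usage = resp["usage"]
    return {
        "text": choice["message"]["content"],
        # "stop" means the model ended its answer on its own.
        "finished": choice["finish_reason"] == "stop",
        "prompt_tokens": usage["prompt_tokens"],
        "completion_tokens": usage["completion_tokens"],
        "total_tokens": usage["total_tokens"],
    }


summary = summarize_response(response)
print(summary["text"])
print(summary["total_tokens"])
```

Logging the token counts per request is one simple way to keep an eye on spending while you experiment.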

If you run your code, you should get a similar output to what’s quoted below:

From the text, we can extract the following information:

- Nvidia’s revenue: In the second quarter of fiscal 2024, Nvidia reported record Q2 revenue of $13.51 billion, which was up 88% sequentially and up 101% year on year.
- What Nvidia did this quarter: Nvidia experienced exceptional growth in various areas. They saw record revenue in their data center segment, which was up 141% sequentially and up 171% year on year. They also saw growth in their gaming segment, with revenue up 11% sequentially and 22% year on year. Additionally, their professional visualization segment saw revenue growth of 28% sequentially. They also announced partnerships and collaborations with companies like Snowflake, ServiceNow, Accenture, Hugging Face, VMware, and SoftBank.
- Remarks about AI: Nvidia highlighted the strong demand for their AI platforms and accelerated computing solutions. They mentioned the deployment of their HGX systems by major cloud service providers and consumer internet companies. They also discussed the applications of generative AI in various industries, such as marketing, media, and entertainment. Nvidia emphasized the potential of generative AI to create new market opportunities and boost productivity in different sectors.

As you can see, the code extracts the information that’s specified in the prompt (Nvidia’s revenue, what Nvidia did this quarter, and remarks about AI) and prints it.
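Putting the pieces of this section together, the script could look roughly like the sketch below. It is only a sketch: the `create` parameter and the `fake_create` stand-in are hypothetical additions that let the function run offline, and the real call assumes a version of the openai package before 1.0 with an `OPENAI_API_KEY` environment variable set.

```python
from types import SimpleNamespace

# Prompt wording taken from the example input shown earlier in this section.
PROMPT = (
    "Extract the following information from the text:\n"
    "Nvidia's revenue\n"
    "What Nvidia did this quarter\n"
    "Remarks about AI"
)


def extract_info(text, create=None):
    """Send the prompt plus `text` to the chat endpoint and return the reply text.

    `create` is a hypothetical injection point for offline testing; by default
    the function uses openai.ChatCompletion.create (openai package before 1.0).
    """
    if create is None:
        import openai  # imported lazily; reads OPENAI_API_KEY from the environment

        create = openai.ChatCompletion.create
    completions = create(
        model="gpt-3.5-turbo-16k",
        messages=[{"role": "user", "content": PROMPT + "\n\n" + text}],
        temperature=0.3,
    )
    return completions.choices[0].message.content


def fake_create(model, messages, temperature):
    """Offline stand-in that echoes the request instead of calling the API."""
    reply = f"{model}|{temperature}|{messages[0]['content']}"
    message = SimpleNamespace(content=reply, role="assistant")
    return SimpleNamespace(choices=[SimpleNamespace(message=message, index=0)])


dry_run = extract_info("Transcript goes here", create=fake_create)
print(dry_run)
```

With a real key in place, calling `extract_info` on the contents of the downloaded transcript (read with `open(...).read()`) and leaving out the `create` argument would send the request to the API.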

Analyzing CSV Files

Analyzing earnings-call transcripts and text files is cool, but to systematically analyze large volumes of data, you’ll need to work with CSV files.

As a working example, download this Medium articles CSV dataset and paste it into your project folder.

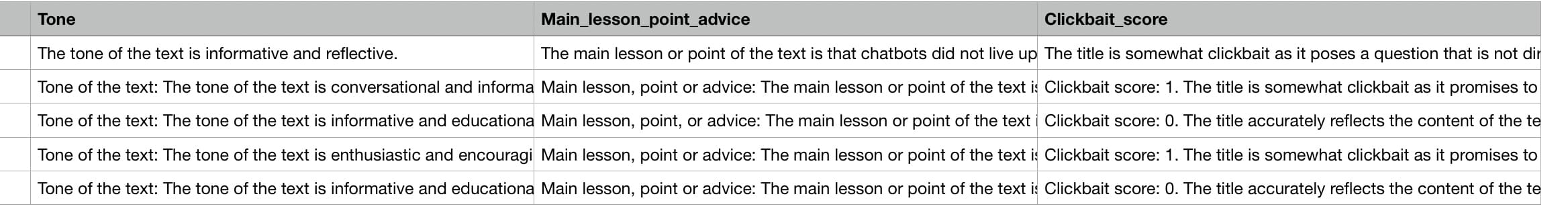

If you take a look into the CSV file, you’ll see that it has the “author”, “claps”, “reading_time”, “link”, “title” and “text” columns. For analyzing the Medium articles with OpenAI, you only need the “title” and “text” columns.

Create a new Python file in your project folder and paste this code.

This code is a bit different from the code we used to analyze a text file. It reads CSV rows one by one, extracts the specified pieces of information, and adds them into new columns.

For this tutorial, I’ve picked a CSV dataset of Medium articles, which I got from HSANKESARA on Kaggle. This CSV analysis code will find the overall tone and the main lesson/point of each article, using the “title” and “text” columns of the CSV file. Since I always come across clickbaity articles on Medium, I also thought it would be interesting to tell it to find how “clickbaity” each article is by giving each one a “clickbait score” from 0 to 3, where 0 is no clickbait and 3 is extreme clickbait.

Before I explain the code, note that analyzing the entire CSV file would take too long and cost too many API credits, so for this tutorial, I’ve made the code analyze only the first five articles using df = df[:5].

You may be confused about the following part of the code, so let me explain:

    for di in range(len(df)):
        title = titles[di]
        summary = articles[di]
        additional_params = extract_info('Title: '+str(title) + '\n\n' + 'Text: ' + str(summary))
        try:
            result = additional_params.split("\n\n")
        except:
            result = {}

This code iterates through all the articles (rows) in the CSV file and, with each iteration, gets the title and body of each article and passes them to the extract_info function, which we saw earlier. It then splits the response of the extract_info function on blank lines, turning it into a list that separates the different pieces of information:

    try:
        result = additional_params.split("\n\n")
    except:
        result = {}

Next, it adds each piece of information into a list, and if there’s an error (if there’s no value), it adds “No result” into the list:

    try:
        apa1.append(result[0])
    except Exception as e:
        apa1.append('No result')
    try:
        apa2.append(result[1])
    except Exception as e:
        apa2.append('No result')
    try:
        apa3.append(result[2])
    except Exception as e:
        apa3.append('No result')
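The split-and-fallback steps described above can also be folded into one helper. The sketch below is an alternative arrangement, not the tutorial’s code: `split_fields` and `fake_extract_info` are hypothetical names, the stand-in returns a canned reply instead of calling the API, and unlike the original the helper strips whitespace and ignores any fields beyond the third.

```python
def split_fields(response, expected=3, default="No result"):
    """Split a reply on blank lines and pad it to `expected` fields."""
    try:
        parts = response.split("\n\n")
    except AttributeError:  # response was not a string (for example None)
        parts = []
    parts = [p.strip() for p in parts[:expected]]
    parts += [default] * (expected - len(parts))
    return parts


def fake_extract_info(text):
    """Offline stand-in for extract_info; a real run would call the API."""
    return "Tone: upbeat\n\nMain lesson: write daily"  # third field missing on purpose


rows = [{"title": "Write more", "text": "Practice every day."}]
apa1, apa2, apa3 = [], [], []
for row in rows:
    reply = fake_extract_info(
        "Title: " + str(row["title"]) + "\n\n" + "Text: " + str(row["text"])
    )
    tone, lesson, score = split_fields(reply)
    apa1.append(tone)
    apa2.append(lesson)
    apa3.append(score)

print(apa1, apa2, apa3)
```

Padding the list up front keeps the three result lists the same length as the number of rows, which matters when they are later assigned as columns.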

Finally, after the for loop is finished, the lists that contain the extracted information are inserted into new columns in the CSV file:

    df = df.assign(Tone=apa1)
    df = df.assign(Main_lesson_or_point=apa2)
    df = df.assign(Clickbait_score=apa3)

As you can see, it adds the lists into new CSV columns that are named “Tone”, “Main_lesson_or_point” and “Clickbait_score”.

It then writes them to the CSV file with index=False:

    df.to_csv("data.csv", index=False)

The reason why you have to specify index=False is to avoid creating new index columns every time you write new columns to the CSV file.
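The effect of index=False is easy to see on a tiny example. The sketch below uses a made-up two-row DataFrame and in-memory buffers instead of the Medium dataset and a real file. It writes the same data with and without the index and compares the columns that come back when the CSV is read again.

```python
import io

import pandas as pd

# Made-up data standing in for the Medium dataset.
df = pd.DataFrame({"title": ["A", "B"], "text": ["first", "second"]})
df = df.assign(Tone=["calm", "urgent"])  # add a new column from a list

with_index = io.StringIO()
df.to_csv(with_index)  # default index=True also writes the row labels
without_index = io.StringIO()
df.to_csv(without_index, index=False)

# Rewind the buffers and read the CSV text back in.
with_index.seek(0)
without_index.seek(0)
cols_with_index = list(pd.read_csv(with_index).columns)
cols_without_index = list(pd.read_csv(without_index).columns)

print(cols_with_index)
print(cols_without_index)
```

When the index is written, the re-read file has an extra unnamed leading column, and each further save and reload cycle would add another one.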

Now, if you run your Python file, wait for it to finish and check the CSV file in a CSV file viewer, you’ll see the new columns, as pictured below.

If you run your code multiple times, you’ll notice that the generated answers differ slightly. This is because the code uses temperature=0.3 to add a bit of creativity into its answers, which is useful for subjective topics like clickbait.

Working with Multiple Files

If you want to automatically analyze multiple files, you need to first put them inside a folder and make sure the folder only contains the files you’re interested in, to prevent your Python code from reading irrelevant files. The glob module is part of Python’s standard library, so there’s nothing to install; just import it in your Python file using import glob.

In your Python file, use this code to get a list of all the files in your data folder:

data_files = glob.glob("data_folder/*")

Then put the code that does the analysis in a for loop:

    for i in range(len(data_files)):

Inside the for loop, read the contents of each file like this for text files:

    # glob.glob already returns paths that include "data_folder/"
    f = open(data_files[i], "r")
    txt_data = f.read()
    f.close()

Additionally like this for CSV recordsdata:

df = pd.read_csv(f"data_folder/{data_files[i]}")

In addition, make sure to save the output of each file analysis into a separate file using something like this:

    df.to_csv(f"output_folder/data{i}.csv", index=False)
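As a rough sketch of the file-reading loop, the example below gathers every text file in a folder into a dictionary. The `read_text_files` helper is a hypothetical name, and a temporary folder with two made-up files stands in for data_folder so the example runs anywhere. Note that glob.glob returns paths that already contain the folder name, so they can be passed straight to open.

```python
import glob
import os
import tempfile


def read_text_files(folder):
    """Return {path: contents} for every file directly inside `folder`."""
    contents = {}
    # glob.glob returns paths that already include the folder prefix.
    for path in sorted(glob.glob(os.path.join(folder, "*"))):
        if not os.path.isfile(path):
            continue  # skip sub-folders
        with open(path, "r", encoding="utf-8") as f:
            contents[path] = f.read()
    return contents


# Demo with a temporary folder standing in for data_folder.
with tempfile.TemporaryDirectory() as data_folder:
    for name, body in [("a.txt", "first file"), ("b.txt", "second file")]:
        with open(os.path.join(data_folder, name), "w", encoding="utf-8") as f:
            f.write(body)
    loaded = read_text_files(data_folder)
    names = [os.path.basename(p) for p in loaded]
    prefixed = all(p.startswith(data_folder) for p in loaded)

print(names)
```

Sorting the paths makes the processing order predictable, which helps when output files are numbered by loop index.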

Conclusion

Remember to experiment with your temperature parameter and adjust it for your use case. If you want the AI to give more creative answers, increase your temperature, and if you want it to give more factual answers, make sure to lower it.

The combination of OpenAI and Python data analysis has many applications apart from article and earnings call transcript analysis. Examples include news analysis, book analysis, customer review analysis, and much more! That said, when testing your Python code on big datasets, make sure to only test it on a small part of the full dataset to save API credits and time.