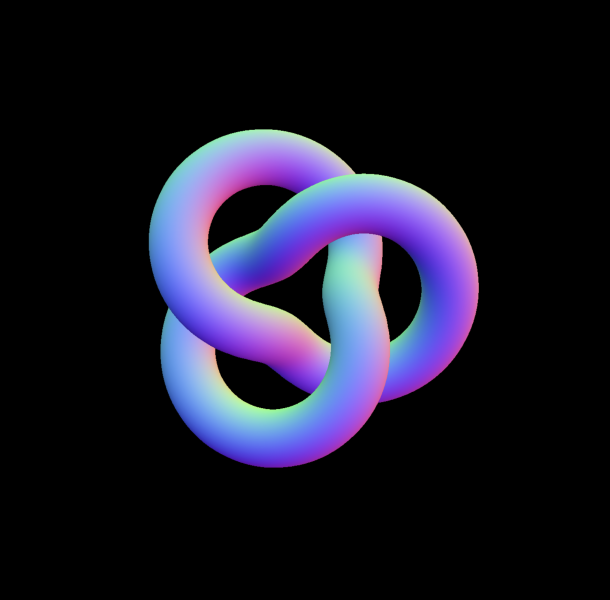

Blackbird was a fun, experimental site that I used as a way to get familiar with WebGL inside of Solid.js. It went through the story of how the SR-71 was built in super technical detail. The wireframe effect covered here helped visualize the technology beneath the surface of the SR-71 while keeping visible the polished metal exterior that matched the site’s aesthetic.

Here is what the effect looks like on the Blackbird site:

In this tutorial, we’ll rebuild that effect from scratch: rendering a model twice, once as a solid and once as a wireframe, then blending the two together in a shader for a smooth, animated transition. The end result is a flexible technique you can use for technical reveals, holograms, or any moment where you want to show both the structure and the surface of a 3D object.

There are three things at work here: material properties, render targets, and a black-to-white shader gradient. Let’s get into it!

But First, a Little About Solid.js

Solid.js isn’t a framework name you hear often. I’ve switched my personal work to it for the ridiculously minimal developer experience and because JSX remains the greatest thing since sliced bread. You absolutely don’t need to use the Solid.js part of this demo; you can strip it out and use vanilla JS all the same. But who knows, you may enjoy it 🙂

Intrigued? Check out Solid.js.

Why I Switched

TLDR: Full-stack JSX without all of the opinions of Next and Nuxt, plus it’s like 8kb gzipped, wild.

The technical version: Written in JSX, but doesn’t use a virtual DOM, so a “reactive” (think useState()) doesn’t re-render an entire component, just one DOM node. Also runs isomorphically, so "use client" is a thing of the past.

Setting Up Our Scene

We don’t need anything wild for the effect: a Mesh, Camera, Renderer, and Scene will do. I use a base Stage class (for theatrical-ish naming) to control when things get initialized.

A Global Object for Tracking Window Dimensions

window.innerWidth and window.innerHeight trigger document reflow when you use them (more about document reflow here). So I keep them in one object, only updating it when necessary and reading from the object, instead of using window and causing reflow. Notice these are all set to 0 and not actual values by default. window gets evaluated as undefined when using SSR, so we want to wait to set this until our app is mounted, the GL class is initialized, and window is defined, to avoid everybody’s favorite error: Cannot read properties of undefined (reading ‘window’).
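If you’d rather make that guard explicit than rely on call order, one option is a small pure function that accepts a window-like object. This is only a sketch and not what the demo uses: measureViewport and its maxPixelRatio parameter are hypothetical names introduced for illustration.

```javascript
// Hypothetical helper (not part of the demo): `win` is any window-like object,
// or undefined when the code runs on the server.
function measureViewport(win, maxPixelRatio = 2) {
  if (!win) {
    // Same zeroed defaults the article uses before the app mounts.
    return { width: 0, height: 0, devicePixelRatio: 1, aspectRatio: 0 };
  }

  const width = win.innerWidth;
  const height = win.innerHeight;

  return {
    width,
    height,
    // Clamp the pixel ratio so very dense screens don't multiply the pixel count.
    devicePixelRatio: Math.min(win.devicePixelRatio || 1, maxPixelRatio),
    // Avoid dividing by zero for a collapsed window.
    aspectRatio: height > 0 ? width / height : 0,
  };
}

const onServer = measureViewport(undefined);
const onClient = measureViewport({
  innerWidth: 1440,
  innerHeight: 900,
  devicePixelRatio: 3,
});
// onClient.devicePixelRatio is clamped to 2; onClient.aspectRatio is 1440 / 900.
```

On the client you would pass window; during SSR you would pass undefined and get the zeroed defaults back instead of an error.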

// src/gl/viewport.js
export const viewport = {
  width: 0,
  height: 0,
  devicePixelRatio: 1,
  aspectRatio: 0,
};

export const resizeViewport = () => {
  viewport.width = window.innerWidth;
  viewport.height = window.innerHeight;
  viewport.aspectRatio = viewport.width / viewport.height;
  viewport.devicePixelRatio = Math.min(window.devicePixelRatio, 2);
};

A Basic Three.js Scene, Renderer, and Camera

Before we can render anything, we need a small framework to handle our scene setup, rendering loop, and resizing logic. Instead of scattering this across multiple files, we’ll wrap it in a Stage class that initializes the camera, renderer, and scene in one place. This makes it easier to keep our WebGL lifecycle organized, especially once we start adding more complex objects and effects.

// src/gl/stage.js
import { WebGLRenderer, Scene, PerspectiveCamera } from 'three';
import { viewport, resizeViewport } from './viewport';

class Stage {
  init(element) {
    resizeViewport(); // Set the initial viewport dimensions here, which keeps window out of viewport.js's module scope for SSR-friendliness

    this.camera = new PerspectiveCamera(45, viewport.aspectRatio, 0.1, 1000);
    this.camera.position.set(0, 0, 2); // Back the camera up 2 units so it isn't on top of the meshes we make later; you won't see them otherwise.

    this.renderer = new WebGLRenderer();
    this.renderer.setSize(viewport.width, viewport.height);
    element.appendChild(this.renderer.domElement); // Attach the renderer to the DOM so our canvas shows up
    this.renderer.setPixelRatio(viewport.devicePixelRatio); // Renders higher pixel ratios for screens that require it.

    this.scene = new Scene();
  }

  render() {
    this.renderer.render(this.scene, this.camera);
    requestAnimationFrame(this.render.bind(this));

    // Every child of the scene that has a render method gets it called automatically
    this.scene.children.forEach((child) => {
      if (child.render && typeof child.render === 'function') {
        child.render();
      }
    });
  }

  resize() {
    resizeViewport(); // Refresh the cached window dimensions before reading them
    this.renderer.setSize(viewport.width, viewport.height);
    this.camera.aspect = viewport.aspectRatio;
    this.camera.updateProjectionMatrix();

    // Every child of the scene that has a resize method gets it called automatically
    this.scene.children.forEach((child) => {
      if (child.resize && typeof child.resize === 'function') {
        child.resize();
      }
    });
  }
}

export default new Stage();

And a Fancy Mesh to Go With It

With our stage ready, we can give it something interesting to render. A torus knot is perfect for this: it has plenty of curves and detail to show off both the wireframe and solid passes. We’ll start with a simple MeshNormalMaterial in wireframe mode so we can clearly see its structure before moving on to the blended shader version.

// src/gl/torus.js
import { Mesh, MeshNormalMaterial, TorusKnotGeometry } from 'three';

export default class Torus extends Mesh {
  constructor() {
    super();

    this.geometry = new TorusKnotGeometry(1, 0.285, 300, 26);
    // MeshNormalMaterial colors each face from its normal, so it takes no color option
    this.material = new MeshNormalMaterial({
      wireframe: true,
    });

    this.position.set(0, 0, -8); // Back up the mesh from the camera so it's visible
  }
}

A quick note on lights

For simplicity we’re using MeshNormalMaterial so we don’t have to mess with lights. The original effect on Blackbird had six lights, waaay too many. The GPU on my M1 Max was choked to 30fps trying to render the complex models and realtime six-point lighting. But reducing this to just 2 lights (which visually looked identical) ran at 120fps no problem. Three.js isn’t like Blender where you can plop in 14 lights and torture your beefy computer with the render for 12 hours while you sleep. The lights in WebGL have consequences 🫠

Now, the Solid JSX Components to House It All

// src/components/GlCanvas.tsx
import { onMount, onCleanup } from 'solid-js';
import Stage from '~/gl/stage';

export default function GlCanvas() {
  // let is used instead of refs, these aren't reactive
  let el;
  let gl;
  let observer;

  onMount(() => {
    if (!el) return;

    gl = Stage;
    gl.init(el);
    gl.render();

    // Use ResizeObserver instead of the window resize event.
    // Its callbacks are batched per frame AND it fires once when observation starts,
    // so there's no need to call resize() in onMount
    observer = new ResizeObserver(() => gl.resize());
    observer.observe(el);
  });

  onCleanup(() => {
    if (observer) {
      observer.disconnect();
    }
  });

  return (
    <div
      ref={el}
      style={{
        position: 'fixed',
        inset: 0,
        height: '100lvh',
        width: '100vw',
      }}
    />
  );
}

let is used to declare a ref; there is no formal useRef() function in Solid. Signals are the only reactive method. Read more on refs in Solid.

Then slap that component into app.tsx:

// src/app.tsx
import { Router } from '@solidjs/router';
import { FileRoutes } from '@solidjs/start/router';
import { Suspense } from 'solid-js';
import GlCanvas from './components/GlCanvas';

export default function App() {
  return (
    <Router
      root={(props) => (
        <Suspense>
          {props.children}
          <GlCanvas />
        </Suspense>
      )}
    >
      <FileRoutes />
    </Router>
  );
}

Each 3D piece I use is tied to a specific element on the page (usually for timeline and scrolling), so I create an individual component to control each class. This helps me stay organized when I have 5 or 6 WebGL moments on one page.

// src/components/WireframeDemo.tsx
import { createEffect, createSignal } from 'solid-js';
import Stage from '~/gl/stage';
import Torus from '~/gl/torus';

export default function WireframeDemo() {
  let el;
  const [element, setElement] = createSignal(null);
  const [actor, setActor] = createSignal(null);

  createEffect(() => {
    setElement(el);
    if (!element()) return;

    setActor(new Torus()); // Stage is initialized when the page initially mounts,
                           // so it's not available until the next tick.
                           // A signal forces this update to the next tick,
                           // after Stage is available.
    Stage.scene.add(actor());
  });

  return <div ref={el} />;
}

createEffect() instead of onMount(): this automatically tracks dependencies (element and actor in this case) and fires the function when they change, no more useEffect() with dependency arrays 🙃. Read more on createEffect in Solid.
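To make “automatically tracks dependencies” a little less magical, here is a toy version of the idea in plain JavaScript. It is emphatically not Solid’s implementation (Solid schedules effects and handles cleanup, ownership, and batching), and toySignal and toyEffect are made-up names. The sketch only shows the core trick: a signal remembers which effect was running when it was read.

```javascript
// Toy reactive primitives for illustration only, NOT Solid's implementation.
let currentEffect = null;

function toySignal(initial) {
  let value = initial;
  const subscribers = new Set();

  const read = () => {
    // Whoever reads the signal while an effect is running becomes a subscriber.
    if (currentEffect) subscribers.add(currentEffect);
    return value;
  };

  const write = (next) => {
    if (next === value) return; // unchanged values notify nobody
    value = next;
    // Copy first so re-subscribing during the run can't disturb the iteration.
    [...subscribers].forEach((run) => run());
  };

  return [read, write];
}

function toyEffect(fn) {
  const run = () => {
    const previous = currentEffect;
    currentEffect = run;
    try {
      fn();
    } finally {
      currentEffect = previous;
    }
  };
  run(); // the first run is what registers the dependencies
}

const [count, setCount] = toySignal(0);
const log = [];

toyEffect(() => log.push(`count is ${count()}`));
setCount(1);
setCount(1); // same value, so the effect does not run again
setCount(2);
// log now holds three entries: one for the initial run and one per real change.
```

No dependency array appears anywhere: calling count() inside the effect is the subscription.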

Then a minimal route to put the component on:

// src/routes/index.tsx
import WireframeDemo from '~/components/WireframeDemo';

export default function Home() {
  return (
    <main>
      <WireframeDemo />
    </main>
  );
}

Now you’ll see this:

Switching a Material to Wireframe

I loved wireframe styling for the Blackbird site! It fit the prototype feel of the story. Fully textured models felt too clean; wireframes are a bit “dirtier” and unpolished. You can wireframe just about any material in Three.js with this:

// src/gl/torus.js
this.material.wireframe = true;
this.material.needsUpdate = true;

But we want to do this dynamically on only part of our model, not on the entire thing.

Enter render targets.

The Fun Part: Render Targets

Render targets are a super deep topic, but they boil down to this: whatever you see on screen is a frame for your GPU to render. In WebGL you can export that frame and re-use it as a texture on another mesh. You’re creating a “target” for your rendered output: a render target.

Since we’re going to need two of these targets, we can make a single class and re-use it.

// src/gl/render-target.js
import { WebGLRenderTarget } from 'three';
import { viewport } from './viewport';

export default class RenderTarget extends WebGLRenderTarget {
  constructor() {
    // Size the target in device pixels so it matches the renderer's output
    super(
      viewport.width * viewport.devicePixelRatio,
      viewport.height * viewport.devicePixelRatio
    );
  }

  resize() {
    const w = viewport.width * viewport.devicePixelRatio;
    const h = viewport.height * viewport.devicePixelRatio;

    this.setSize(w, h);
  }
}

This is just an output for a texture, nothing more.
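It is still worth knowing how big that output is, since every render target is a full-screen texture of its own. The sketch below estimates the size with a hypothetical renderTargetSize helper (not part of the demo) and assumes an 8-bit RGBA color texture at 4 bytes per pixel, ignoring any depth buffer.

```javascript
// Hypothetical helper: converts a CSS-pixel viewport into device pixels.
function renderTargetSize(viewport) {
  return {
    width: Math.floor(viewport.width * viewport.devicePixelRatio),
    height: Math.floor(viewport.height * viewport.devicePixelRatio),
  };
}

// Assumption: 4 bytes per pixel (8-bit RGBA), color texture only.
function colorTextureBytes(size) {
  return size.width * size.height * 4;
}

const size = renderTargetSize({ width: 1280, height: 720, devicePixelRatio: 2 });
const bytes = colorTextureBytes(size);
// size is { width: 2560, height: 1440 } and bytes is 14745600 (roughly 14.7 MB).
```

With two targets that figure doubles, which is one reason the viewport helper caps devicePixelRatio at 2.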

Now we can make the class that will consume these outputs. It’s a lot of classes, I know, but splitting up individual pieces like this helps me keep track of where stuff happens. 800-line spaghetti mega-classes are the stuff of nightmares when debugging WebGL.

// src/gl/targeted-torus.js
import {
  Mesh,
  MeshNormalMaterial,
  PerspectiveCamera,
  PlaneGeometry,
} from 'three';
import Torus from './torus';
import { viewport } from './viewport';
import RenderTarget from './render-target';
import Stage from './stage';

export default class TargetedTorus extends Mesh {
  targetSolid = new RenderTarget();
  targetWireframe = new RenderTarget();

  scene = new Torus(); // The shape we created earlier
  camera = new PerspectiveCamera(45, viewport.aspectRatio, 0.1, 1000);

  constructor() {
    super();

    this.geometry = new PlaneGeometry(1, 1);
    this.material = new MeshNormalMaterial();
  }

  resize() {
    this.targetSolid.resize();
    this.targetWireframe.resize();

    this.camera.aspect = viewport.aspectRatio;
    this.camera.updateProjectionMatrix();
  }
}

Now, swap our WireframeDemo.tsx component to use the TargetedTorus class, instead of Torus:

// src/components/WireframeDemo.tsx
import { createEffect, createSignal } from 'solid-js';
import Stage from '~/gl/stage';
import TargetedTorus from '~/gl/targeted-torus';

export default function WireframeDemo() {
  let el;
  const [element, setElement] = createSignal(null);
  const [actor, setActor] = createSignal(null);

  createEffect(() => {
    setElement(el);
    if (!element()) return;

    setActor(new TargetedTorus()); // << change me
    Stage.scene.add(actor());
  });

  return <div ref={el} data-gl="wireframe" />;
}

“Now all I see is a blue square Nathan, it feels like we’re going backwards, show me the cool shape again”.

Shhhhh, it’s by design, I swear!

From MeshNormalMaterial to ShaderMaterial

We can now take our Torus rendered output and smack it onto the blue plane as a texture using ShaderMaterial. MeshNormalMaterial doesn’t let us use a texture, and we’ll need shaders soon anyway. Inside targeted-torus.js, remove the MeshNormalMaterial (importing ShaderMaterial from three in its place) and swap this in:

// src/gl/targeted-torus.js
this.material = new ShaderMaterial({
  vertexShader: `
    varying vec2 v_uv;

    void main() {
      gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
      v_uv = uv;
    }
  `,
  fragmentShader: `
    varying vec2 v_uv;

    void main() {
      gl_FragColor = vec4(0.67, 0.08, 0.86, 1.0);
    }
  `,
});

Now we have a much prettier purple plane with the help of two shaders:

- Vertex shaders manipulate the vertex positions of our material; we aren’t going to touch this one further

- Fragment shaders assign the colors and properties to each pixel of our material. This shader tells every pixel to be purple
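If “a function that runs once per pixel” is still abstract, here is a rough CPU-side analogy in plain JavaScript. It is only an analogy (real fragment shaders run in parallel on the GPU and are written in GLSL), and shade is a made-up helper name.

```javascript
// CPU-side analogy only: call `fragment` once per pixel and collect the colors.
function shade(width, height, fragment) {
  const pixels = [];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      // UVs run from 0 to 1 across the surface; sample at each pixel's center.
      const uv = { x: (x + 0.5) / width, y: (y + 0.5) / height };
      pixels.push(fragment(uv));
    }
  }
  return pixels;
}

// Same job as the purple shader: ignore the UV and return one RGBA color.
const purple = () => [0.67, 0.08, 0.86, 1.0];
const flat = shade(2, 2, purple);

// A fragment function that does use the UV: red increases from left to right.
const gradient = shade(4, 1, (uv) => [uv.x, 0, 0, 1]);
// gradient's red channel steps through 0.125, 0.375, 0.625, 0.875.
```

The render target textures will later plug into the same slot: instead of returning a constant, the fragment function looks up a color at the pixel’s UV.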

Using the Render Target Texture

To show our Torus instead of that purple color, we can feed the fragment shader an image texture via uniforms:

// src/gl/targeted-torus.js
this.material = new ShaderMaterial({
  vertexShader: `
    varying vec2 v_uv;

    void main() {
      gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
      v_uv = uv;
    }
  `,
  fragmentShader: `
    varying vec2 v_uv;

    // declare 2 uniforms
    uniform sampler2D u_texture_solid;
    uniform sampler2D u_texture_wireframe;

    void main() {
      // declare 2 images
      vec4 wireframe_texture = texture2D(u_texture_wireframe, v_uv);
      vec4 solid_texture = texture2D(u_texture_solid, v_uv);

      // set the color to that of the image
      gl_FragColor = solid_texture;
    }
  `,
  uniforms: {
    u_texture_solid: { value: this.targetSolid.texture },
    u_texture_wireframe: { value: this.targetWireframe.texture },
  },
});

And add a render method to our TargetedTorus class (this is called automatically by the Stage class):

// src/gl/targeted-torus.js
render() {
  this.material.uniforms.u_texture_solid.value = this.targetSolid.texture;

  // Point the renderer at the target before drawing, then hand it back to the screen
  Stage.renderer.setRenderTarget(this.targetSolid);
  Stage.renderer.render(this.scene, this.camera);
  Stage.renderer.setRenderTarget(null);
}

THE TORUS IS BACK. We’ve passed our image texture into the shader and it’s outputting our original render.

Blending Wireframe and Solid Materials with Shaders

Shaders were black magic to me before this project. It was my first time using them in production and I’m used to frontend where you think in boxes. Shaders are coordinates 0 to 1, which I find far harder to understand. But I’d used Photoshop and After Effects with layers plenty of times. These applications do a lot of the same work shaders can: GPU computing. This made it far easier. I started out by picturing or drawing what I wanted, thinking how I might do it in Photoshop, then asking myself how I could do it with shaders. Translating from Photoshop or AE into shaders is much less mentally taxing when you don’t have a deep foundation in shaders.

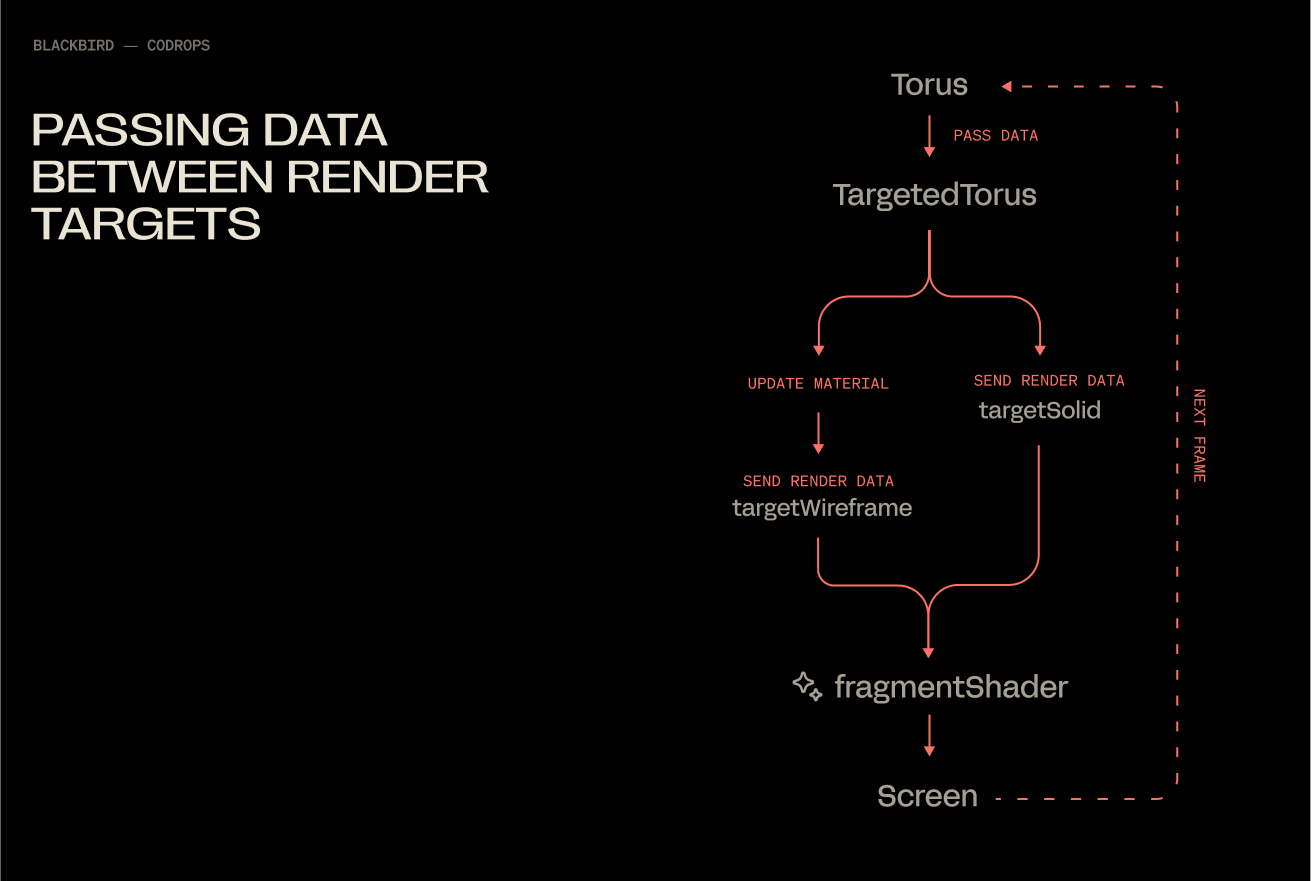

Populating Both Render Targets

At the moment, we’re only saving data to the targetSolid render target via normals. We will update our render loop so that our shader has both it and targetWireframe available at the same time.

// src/gl/targeted-torus.js
render() {
  // Render wireframe version to wireframe render target
  this.scene.material.wireframe = true;
  Stage.renderer.setRenderTarget(this.targetWireframe);
  Stage.renderer.render(this.scene, this.camera);
  this.material.uniforms.u_texture_wireframe.value = this.targetWireframe.texture;

  // Render solid version to solid render target
  this.scene.material.wireframe = false;
  Stage.renderer.setRenderTarget(this.targetSolid);
  Stage.renderer.render(this.scene, this.camera);
  this.material.uniforms.u_texture_solid.value = this.targetSolid.texture;

  // Reset render target
  Stage.renderer.setRenderTarget(null);
}

With this, you end up with a flow that under the hood looks like this:

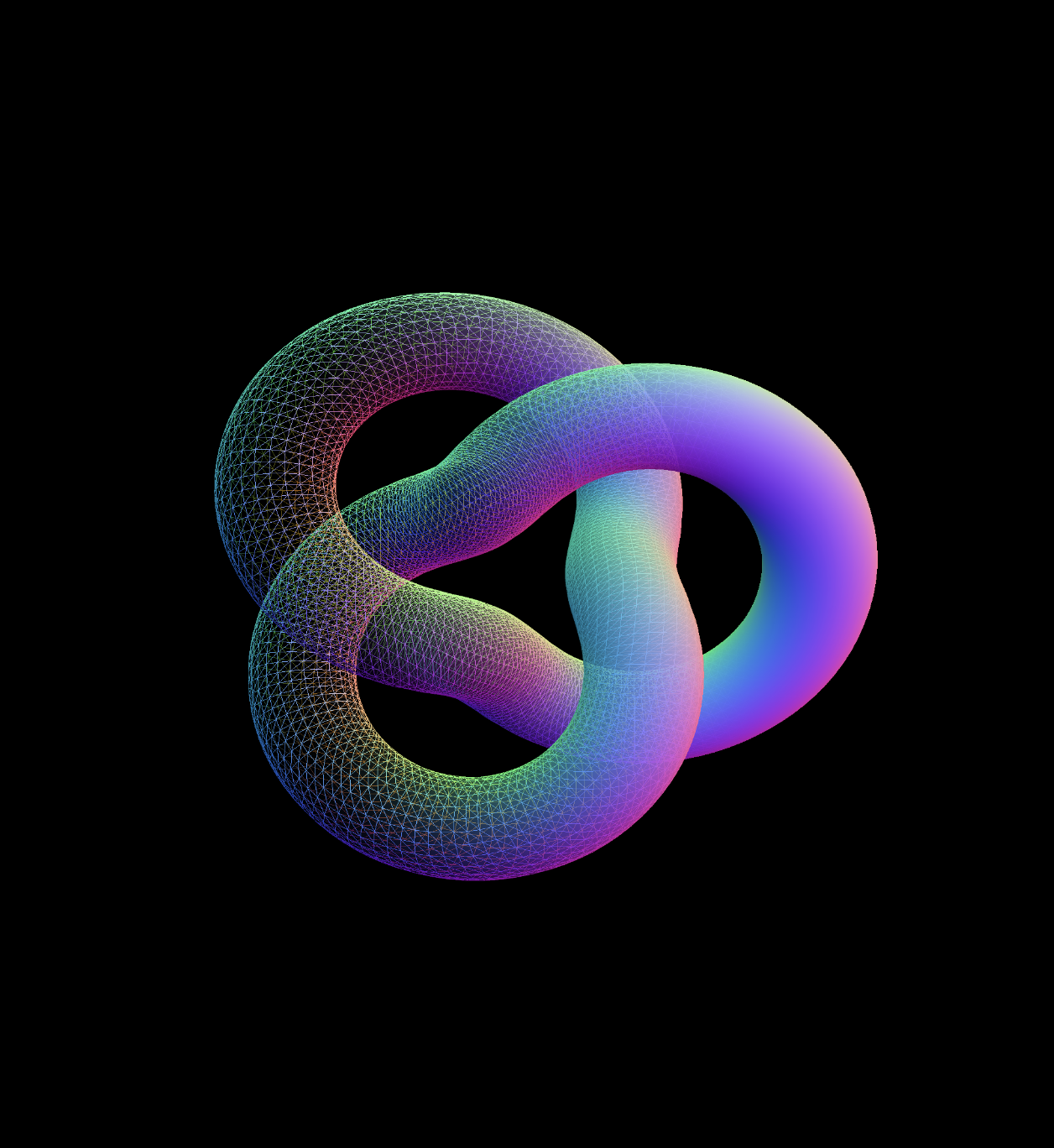
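The same flow can be traced without a GPU by swapping the renderer for a stand-in that only records where each draw lands. Everything here is hypothetical scaffolding (createRecordingRenderer and the plain objects standing in for the torus and the targets); only the order of the calls mirrors the render method above.

```javascript
// Stand-in renderer: it draws nothing and only records which target each draw hit.
function createRecordingRenderer() {
  let target = null; // null means "the screen", as in Three.js
  const draws = [];
  return {
    draws,
    setRenderTarget(next) {
      target = next;
    },
    render(object) {
      draws.push({
        target: target === null ? 'screen' : target.name,
        wireframe: object.material.wireframe,
      });
    },
  };
}

const renderer = createRecordingRenderer();
const torus = { material: { wireframe: false } };
const plane = { material: { wireframe: false } };
const targetWireframe = { name: 'wireframe' };
const targetSolid = { name: 'solid' };

function renderPasses() {
  // Pass 1: wireframe version into the wireframe target
  torus.material.wireframe = true;
  renderer.setRenderTarget(targetWireframe);
  renderer.render(torus);

  // Pass 2: solid version into the solid target
  torus.material.wireframe = false;
  renderer.setRenderTarget(targetSolid);
  renderer.render(torus);

  // Hand the renderer back to the screen
  renderer.setRenderTarget(null);
}

renderPasses();
renderer.render(plane); // the Stage's own draw, which should reach the screen
```

If the final setRenderTarget(null) were skipped, that last draw would be recorded against the solid target instead of the screen, which is the kind of bug that shows up as a blank canvas.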

Fading Between Two Textures

Our fragment shader will get a little update, 2 additions:

- float blend = smoothstep(0.15, 0.65, v_uv.x); smoothstep creates a smooth ramp between 2 values (an S-curve rather than a straight line). UVs only go from 0 to 1, so in this case we use .15 and .65 as the limits (they make the effect more obvious than 0 and 1). Then we use the x value of the UVs to define which value gets fed into smoothstep.

- vec4 mixed = mix(wireframe_texture, solid_texture, blend); mix does exactly what it says: it mixes 2 values together at a ratio determined by blend, with .5 being a perfectly even split.
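To poke at those two built-ins outside of a shader, here is a plain JavaScript port based on their GLSL definitions. The white and black colors are placeholder values standing in for the two textures, and mixColor is a small helper added for this sketch.

```javascript
// Plain JavaScript ports of two GLSL built-ins, for experimenting outside a shader.
const clamp = (x, lo, hi) => Math.min(Math.max(x, lo), hi);

// 0 below edge0, 1 above edge1, and a smooth S-curve in between.
function smoothstep(edge0, edge1, x) {
  const t = clamp((x - edge0) / (edge1 - edge0), 0, 1);
  return t * t * (3 - 2 * t);
}

// Linear interpolation: t = 0 gives a, t = 1 gives b.
const mix = (a, b, t) => a * (1 - t) + b * t;
const mixColor = (a, b, t) => a.map((channel, i) => mix(channel, b[i], t));

// Placeholder colors standing in for the two texture samples.
const wireframeColor = [1, 1, 1, 1];
const solidColor = [0, 0, 0, 1];

// Sample three horizontal positions across the plane.
const samples = [0.1, 0.4, 0.9].map((u) => {
  const blend = smoothstep(0.15, 0.65, u);
  return { u, blend, color: mixColor(wireframeColor, solidColor, blend) };
});
// Left of .15 is pure wireframe, right of .65 is pure solid,
// and u = 0.4 (the midpoint of the limits) lands at about an even split.
```

Moving the two limits closer together sharpens the transition; spreading them apart softens it.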

// src/gl/targeted-torus.js
fragmentShader: `
  varying vec2 v_uv;

  // declare 2 uniforms
  uniform sampler2D u_texture_solid;
  uniform sampler2D u_texture_wireframe;

  void main() {
    // declare 2 images
    vec4 wireframe_texture = texture2D(u_texture_wireframe, v_uv);
    vec4 solid_texture = texture2D(u_texture_solid, v_uv);

    float blend = smoothstep(0.15, 0.65, v_uv.x);
    vec4 mixed = mix(wireframe_texture, solid_texture, blend);

    gl_FragColor = mixed;
  }
`,

And boom, MIXED:

Let’s be honest with ourselves, this looks exquisitely boring being static, so we can spice this up with a little magic from GSAP.
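Two small pieces of arithmetic sit behind the tween that follows: converting degrees to radians, and the back-and-forth progress that a repeating yoyo tween produces. The sketch below works through both in plain JavaScript. degToRad and yoyoProgress are illustrative names, and yoyoProgress deliberately ignores easing, so it approximates the shape of the motion rather than reproducing what GSAP computes.

```javascript
// Three.js rotations are in radians; 180 degrees equals PI radians.
const degToRad = (degrees) => degrees * (Math.PI / 180);

const targetRotation = degToRad(540);
const turns = targetRotation / (2 * Math.PI); // about one and a half full turns

// Un-eased progress (0 to 1) of an endlessly repeating yoyo tween:
// even cycles play forward, odd cycles play backward.
function yoyoProgress(time, duration) {
  const cycle = Math.floor(time / duration);
  const local = (time % duration) / duration;
  return cycle % 2 === 0 ? local : 1 - local;
}

const progressAtOneSecond = yoyoProgress(1, 4); // a quarter of the way forward
const progressAtFiveSeconds = yoyoProgress(5, 4); // one second into the trip back
```

Three.js also ships MathUtils.degToRad if you would rather not write the conversion by hand.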

// src/gl/torus.js
import {
  Mesh,
  MeshNormalMaterial,
  TorusKnotGeometry,
} from 'three';
import gsap from 'gsap';

export default class Torus extends Mesh {
  constructor() {
    super();

    this.geometry = new TorusKnotGeometry(1, 0.285, 300, 26);
    this.material = new MeshNormalMaterial();

    this.position.set(0, 0, -8);

    // add me!
    gsap.to(this.rotation, {
      y: 540 * (Math.PI / 180), // needs to be in radians, not degrees
      ease: 'power3.inOut',
      duration: 4,
      repeat: -1,
      yoyo: true,
    });
  }
}

Thank You!

Congratulations, you’ve officially spent a measurable portion of your day blending two materials together. It was worth it though, wasn’t it? At the very least, I hope this saved you some of the mental gymnastics of orchestrating a pair of render targets.

Have questions? Hit me up on Twitter!