Machine learning algorithms have revolutionized data analysis, enabling businesses and researchers to make highly accurate predictions based on vast datasets. Among these, the Random Forest algorithm stands out as one of the most versatile and powerful tools for classification and regression tasks.

This article explores the key concepts behind the Random Forest algorithm, its working principles, advantages, limitations, and practical implementation using Python. Whether you’re a beginner or an experienced developer, this guide provides a comprehensive overview of Random Forest in action.

Key Takeaways

- The Random Forest algorithm combines multiple trees to create a robust and accurate prediction model.

- The Random Forest classifier combines multiple decision trees using ensemble learning principles, automatically determines feature importance, handles classification and regression tasks effectively, and copes well with missing values and outliers.

- Feature importance rankings from Random Forest provide valuable insights into your data.

- Parallel processing capabilities make it efficient for large sets of training data.

- Random Forest reduces overfitting through ensemble learning and random feature selection.

What Is the Random Forest Algorithm?

The Random Forest algorithm is an ensemble learning method that constructs multiple decision trees and combines their outputs to make predictions. Each tree is trained independently on a random subset of the training data using bootstrap sampling (sampling with replacement).

Additionally, at each split in the tree, only a random subset of features is considered. This random feature selection introduces diversity among trees, reducing overfitting and improving prediction accuracy.

The concept mirrors the wisdom-of-crowds principle. Just as large groups often make better decisions than individuals, a forest of diverse decision trees usually outperforms a single decision tree.

For example, in a customer churn prediction model, one decision tree may prioritize payment history, while another focuses on customer service interactions. Together, these trees capture different aspects of customer behavior, producing a more balanced and accurate prediction.

Similarly, in a house price prediction task, each tree evaluates random subsets of the data and features. Some trees may emphasize location and size, while others focus on age and condition. This diversity ensures the final prediction reflects multiple perspectives, leading to robust and reliable results.

Mathematical Foundations of Decision Trees in Random Forest

To understand how Random Forest makes decisions, we need to explore the mathematical metrics that guide splits in individual decision trees:

1. Entropy (H)

Measures the uncertainty or impurity in a dataset.

- p_i: Proportion of samples belonging to class i

- c: Number of classes.

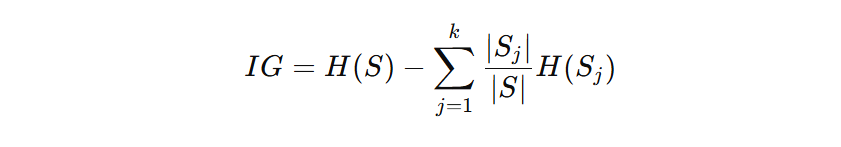

2. Information Gain (IG)

Measures the reduction in entropy achieved by splitting the dataset:

- S: Original dataset

- S_j: Subset after the split

- H(S): Entropy before the split

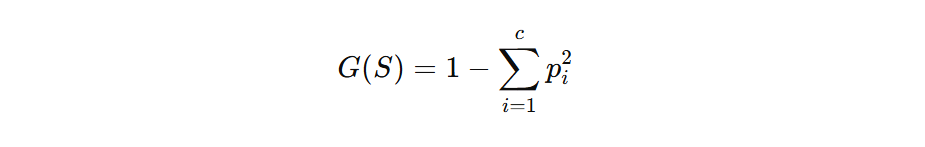

3. Gini Impurity (Used in Classification Trees)

This is an alternative to entropy. Gini impurity is computed as:

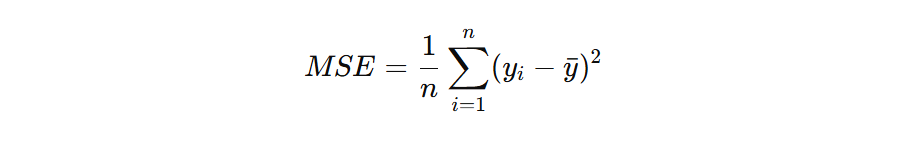
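The three classification metrics above can be checked numerically. The sketch below is a minimal illustration, assuming entropy uses a base-2 logarithm and Gini impurity is one minus the sum of squared class proportions; the function names and the toy label array are made up for this example.

```python
import numpy as np


def entropy(labels):
    """Shannon entropy (base 2) of a 1-D array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - np.sum(p ** 2))


def information_gain(parent, subsets):
    """Entropy of the parent minus the size-weighted entropy of its subsets."""
    n = len(parent)
    weighted = sum(len(s) / n * entropy(s) for s in subsets)
    return entropy(parent) - weighted


# Toy node with two evenly mixed classes, split perfectly into pure halves
parent = np.array([0, 0, 0, 0, 1, 1, 1, 1])
left, right = parent[:4], parent[4:]

print(entropy(parent))                          # 1.0
print(gini(parent))                             # 0.5
print(information_gain(parent, [left, right]))  # 1.0
```

A perfectly mixed two-class node has the maximum entropy of 1 bit, and a split that separates the classes completely recovers all of it as information gain.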

4. Mean Squared Error (MSE) for Regression

For Random Forest regression, splits minimize the mean squared error:

- y_i: Actual values

- ȳ: Mean of the target values in the node, which serves as the node’s predicted value
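The formula for this criterion is not reproduced in the text. A standard form consistent with the two definitions, assuming N (a symbol introduced here) is the number of samples in the node being evaluated, would be:

```latex
\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - \bar{y} \right)^2
```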

Why Use Random Forest?

The Random forest ML classifier presents important advantages, making it a strong machine studying algorithm amongst different supervised machine studying algorithms.

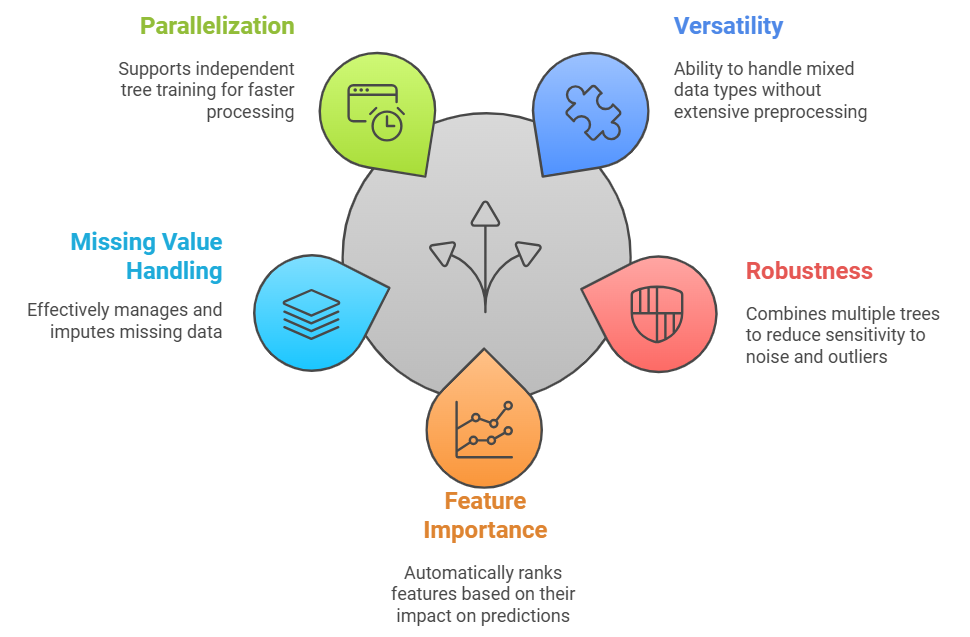

1. Versatility

- Random Forest mannequin excels at concurrently processing numerical and categorical coaching knowledge with out intensive preprocessing.

- The algorithm creates splits primarily based on threshold values for numerical knowledge, reminiscent of age, revenue, or temperature readings. When dealing with categorical knowledge like colour, gender, or product classes, binary splits are created for every class.

- This versatility turns into notably worthwhile in real-world classification duties the place knowledge units usually comprise combined knowledge varieties.

- For instance, in a buyer churn prediction mannequin, Random Forest can seamlessly course of numerical options like account stability and repair length alongside categorical options like subscription sort and buyer location.

2. Robustness

- The ensemble nature of Random Forest provides exceptional robustness by combining multiple decision trees.

- Each decision tree learns from a different subset of the data, making the overall model less sensitive to noisy data and outliers.

- Consider a housing price prediction scenario: one decision tree might be skewed by an unusually expensive house in the dataset. However, because hundreds of other decision trees are trained on different data subsets, this outlier’s impact is diluted in the final prediction.

- This collective decision-making process significantly reduces overfitting, a common problem where models learn noise in the training data rather than genuine patterns.

3. Feature Importance

- Random Forest automatically calculates and ranks the importance of each feature in the prediction process. This ranking helps data scientists understand which variables most strongly influence the outcome.

- One common measure, permutation importance, tracks how much prediction error increases when a feature’s values are randomly shuffled. Another, reported by scikit-learn’s feature_importances_ attribute, is the average decrease in impurity that a feature’s splits achieve across all trees.

- For instance, in a credit risk assessment model, a Random Forest might reveal that payment history and debt-to-income ratio are the most important factors, while customer age has less impact. This insight proves invaluable for feature selection and model interpretation.
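As a rough illustration of feature ranking, the sketch below computes two kinds of importance scores with scikit-learn on the Iris dataset. The dataset, split, and parameter values are assumptions chosen for this example, not part of the credit risk scenario above.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42
)

model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Impurity-based importances: one score per feature, summing to 1
impurity_scores = model.feature_importances_

# Permutation importances: mean drop in test accuracy when a feature is shuffled
perm = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=42)

for name, imp, p in zip(iris.feature_names, impurity_scores, perm.importances_mean):
    print(f"{name:<20} impurity={imp:.3f}  permutation={p:.3f}")
```

The two rankings usually agree on the strongest features but can differ in detail, so it is worth looking at both before dropping variables.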

4. Missing Value Handling

- Random Forest can cope with missing values, making it well-suited for real-world datasets with incomplete or imperfect data. Depending on the implementation, it handles missing values through two main mechanisms:

- Surrogate Splits (Substitute Splits): During tree construction, the algorithm identifies alternative decision paths (surrogate splits) based on correlated features. If a primary feature value is missing, the model uses a surrogate feature to make the split, ensuring predictions can still proceed.

- Proximity-Based Imputation: Random Forest uses proximity measures between data points to estimate missing values. It calculates similarities between observations and imputes missing entries using values from the nearest neighbors, preserving patterns in the data.

- Consider a scenario predicting whether someone will repay a loan. If salary information is missing, Random Forest analyzes related features, such as job history, past payments, and age, to make its prediction. By leveraging correlations among features, it compensates for gaps in the data rather than discarding incomplete records.
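Not every library exposes surrogate splits or proximity-based imputation. A simpler, widely available substitute is to impute gaps before training. The sketch below assumes scikit-learn, median imputation, and synthetic gaps punched into the Iris data; it illustrates that substitute technique, not the two mechanisms described above.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.pipeline import make_pipeline

X, y = load_iris(return_X_y=True)

# Knock out roughly 10% of the feature values at random (synthetic gaps)
rng = np.random.default_rng(0)
X_missing = X.copy()
mask = rng.random(X.shape) < 0.10
X_missing[mask] = np.nan

# Fill each gap with the column median, then fit the forest
pipeline = make_pipeline(
    SimpleImputer(strategy="median"),
    RandomForestClassifier(n_estimators=100, random_state=0),
)
pipeline.fit(X_missing, y)

predictions = pipeline.predict(X_missing)
print(predictions[:10])
```

Wrapping the imputer and the forest in one pipeline means the same fill values learned during training are reused at prediction time.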

5. Parallelization

- The Random Forest architecture naturally supports parallel computation because each decision tree trains independently.

- This improves scalability and reduces training time significantly, since tree construction can be distributed across multiple CPU cores or GPU clusters.

- Modern implementations such as scikit-learn’s RandomForestClassifier train trees in parallel across CPU cores (via the n_jobs parameter), while distributed computing frameworks like Dask or Spark can spread the work across a cluster.

- This parallelization becomes crucial when working with big data. For instance, when processing millions of customer transactions for fraud detection, parallel processing can reduce training time from hours to minutes.
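In scikit-learn, parallel training is controlled by the n_jobs parameter. The sketch below times a single-core fit against an all-cores fit on synthetic data; the dataset size and tree count are arbitrary assumptions, and the actual speed-up depends on the machine.

```python
import time

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data, used only to have something to train on
X, y = make_classification(n_samples=2000, n_features=20, random_state=0)

for n_jobs in (1, -1):  # 1 = single core, -1 = all available cores
    model = RandomForestClassifier(n_estimators=100, n_jobs=n_jobs, random_state=0)
    start = time.perf_counter()
    model.fit(X, y)
    elapsed = time.perf_counter() - start
    print(f"n_jobs={n_jobs}: trained {len(model.estimators_)} trees in {elapsed:.2f}s")
```

On small datasets the overhead of starting worker processes or threads can cancel out the gain, so the benefit tends to show up mainly with larger data or many trees.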

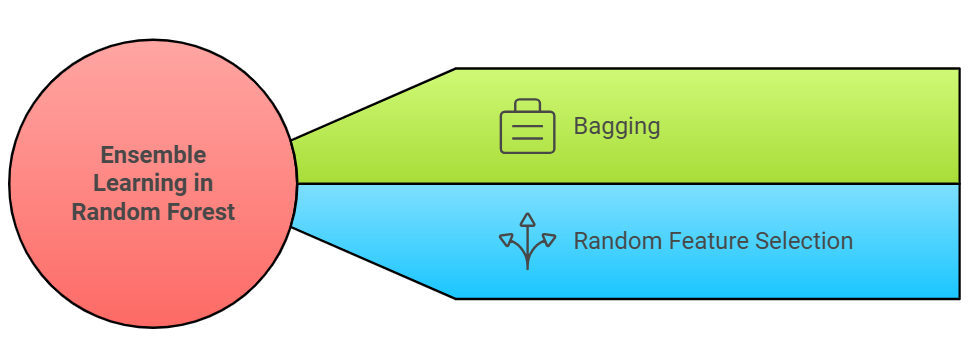

Ensemble Learning Technique

Ensemble learning in the Random Forest algorithm combines multiple decision trees to create more accurate predictions than a single tree could achieve alone. This approach works through two main techniques:

Bagging (Bootstrap Aggregating)

- Each decision tree is trained on a random sample of the data. It’s like asking different groups of people for their opinions: each group might notice different patterns, and combining their views often leads to better decisions.

- As a result, different trees learn slightly different patterns, reducing variance and improving generalization.

Random Feature Selection

- At each split point in a decision tree, only a random subset of features is considered, rather than evaluating all features.

- This randomness decorrelates the trees, preventing them from becoming overly similar and reducing the risk of overfitting.

This ensemble approach makes the Random Forest algorithm particularly effective for real-world classification tasks where data patterns are complex and no single perspective can capture all the important relationships.

Variants of Random Forest Algorithm

The Random Forest method has several variants and extensions designed to address specific challenges, such as imbalanced data, high-dimensional features, incremental learning, and anomaly detection. Below are the key variants and their applications:

1. Extremely Randomized Trees (Extra Trees)

- Uses random split thresholds instead of searching for the best split.

- Best for high-dimensional data where faster training matters more than squeezing out the last bit of accuracy.

2. Rotation Forest

- Applies Principal Component Analysis (PCA) to transform features before training trees.

- Best for multivariate datasets with high correlations among features.

3. Weighted Random Forest (WRF)

- Assigns weights to samples, prioritizing hard-to-classify or minority class examples.

- Best for imbalanced datasets like fraud detection or medical diagnosis.

4. Oblique Random Forest (ORF)

- Uses linear combinations of features instead of single features for splits, enabling decision boundaries that are not aligned with the feature axes.

- Best for tasks with complex patterns such as image recognition.

5. Balanced Random Forest (BRF)

- Handles imbalanced datasets by over-sampling minority classes or under-sampling majority classes.

- Best for binary classification with skewed class distributions (e.g., fraud detection).

6. Totally Random Trees Embedding (TRTE)

- Projects data into a high-dimensional sparse binary space for feature extraction.

- Best for unsupervised learning and preprocessing for clustering algorithms.

7. Isolation Forest (Anomaly Detection)

- Focuses on isolating outliers through random feature selection and random splits.

- Best for anomaly detection in fraud detection, network security, and intrusion detection systems.

8. Mondrian Forest (Incremental Learning)

- Supports incremental updates, allowing dynamic learning as new data becomes available.

- Best for streaming data and real-time predictions.

9. Random Survival Forest (RSF)

- Designed for survival analysis, predicting time-to-event outcomes with censored data.

- Best for medical research and patient survival predictions.
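Two of the variants listed above ship with scikit-learn and can be tried with very little code. The sketch below is a minimal example assuming the Iris dataset and default-like settings; the other variants live in separate libraries and are not shown.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import ExtraTreesClassifier, IsolationForest
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)

# Extremely Randomized Trees: same interface as RandomForestClassifier
extra_trees = ExtraTreesClassifier(n_estimators=100, random_state=0)
et_scores = cross_val_score(extra_trees, X, y, cv=5)
print(f"Extra Trees mean CV accuracy: {et_scores.mean():.3f}")

# Isolation Forest: unsupervised, labels each sample 1 (inlier) or -1 (outlier)
iso = IsolationForest(n_estimators=100, random_state=0)
labels = iso.fit_predict(X)
print(f"Samples flagged as outliers: {int(np.sum(labels == -1))}")
```

Because Extra Trees shares the Random Forest interface, swapping one for the other in an existing pipeline is usually a one-line change.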

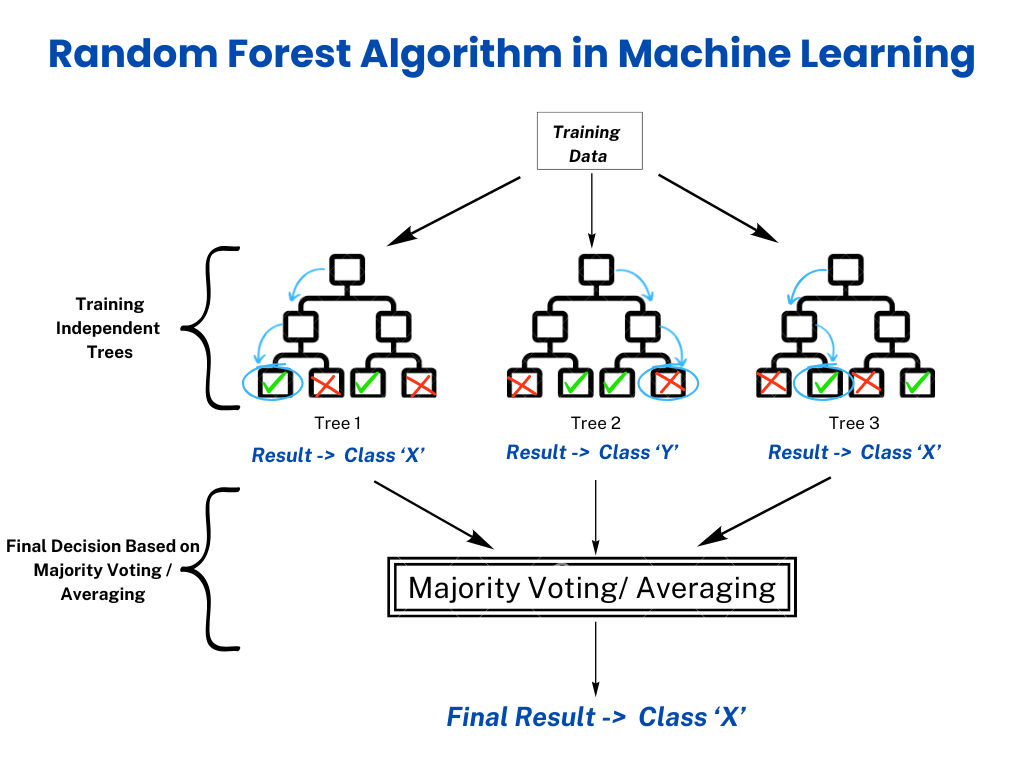

How Does Random Forest Algorithm Work?

The Random Forest algorithm creates a collection of decision trees, each trained on a random subset of the data. Here’s a step-by-step breakdown:

Step 1: Bootstrap Sampling

- The Random Forest algorithm uses bootstrapping, a technique for generating multiple datasets by random sampling (with replacement) from the original training dataset. Each bootstrap sample is slightly different, ensuring that individual trees see diverse subsets of the data.

- On average, about 63.2% of the distinct training samples appear in each tree’s bootstrap sample, while the remaining 36.8% are left out as out-of-bag (OOB) samples, which are later used to estimate model accuracy.
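The 63.2% figure follows from sampling with replacement: the chance that a given row is never drawn in n draws is (1 - 1/n)^n, which approaches 1/e ≈ 0.368 as n grows. The sketch below checks this empirically with NumPy; the sample size and seed are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)
n_samples = 10_000

# Draw one bootstrap sample: n row indices chosen with replacement
bootstrap_idx = rng.integers(0, n_samples, size=n_samples)

# Rows that appear at least once are "in-bag"; the rest are out-of-bag
in_bag = np.unique(bootstrap_idx)
oob = np.setdiff1d(np.arange(n_samples), in_bag)

in_bag_fraction = len(in_bag) / n_samples
oob_fraction = len(oob) / n_samples
print(f"in-bag: {in_bag_fraction:.3f}, out-of-bag: {oob_fraction:.3f}")
```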

Step 2: Feature Selection

- At each split, a decision tree considers only a random subset of features rather than all of them, which helps reduce overfitting and ensures diversity among trees.

- For Classification: The number of features considered at each split is typically set to m = sqrt(p).

- For Regression: The number of features considered at each split is typically m = p/3.

where:

- p = total number of features in the dataset.

- m = number of features randomly selected for evaluation at each split.
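As a small worked example of these two rules of thumb, the helper below computes m for a given p. The function name is hypothetical, and rounding down to an integer (with a floor of 1) is an assumption of this sketch.

```python
import math


def features_per_split(p, task):
    """Rule-of-thumb number of features m to try at each split."""
    if task == "classification":
        return max(1, int(math.sqrt(p)))  # m = sqrt(p), rounded down
    if task == "regression":
        return max(1, p // 3)             # m = p/3, rounded down
    raise ValueError("task must be 'classification' or 'regression'")


print(features_per_split(16, "classification"))  # 4
print(features_per_split(16, "regression"))      # 5
```

In scikit-learn this count is set through the max_features parameter, and a library's defaults may differ from these rules of thumb.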

Step 3: Tree Building

- Decision trees are built independently using the sampled data and the selected features. Each tree grows until it reaches a stopping criterion, such as a maximum depth or a minimum number of samples per leaf.

- Unlike single decision trees, which are often pruned, Random Forest trees are typically allowed to grow fully. The forest relies on ensemble averaging to control overfitting.

Step 4: Voting or Averaging

- For classification problems, each decision tree votes for a class, and the majority vote determines the final prediction.

- For regression problems, the predictions from all trees are averaged to produce the final output.
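Steps 1 through 4 can be sketched by hand with individual decision trees. The example below is a simplified illustration, assuming scikit-learn's DecisionTreeClassifier, the Iris dataset, and 25 trees; it is not how any particular library implements its forest (scikit-learn's RandomForestClassifier, for instance, averages predicted class probabilities rather than counting hard votes).

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

rng = np.random.default_rng(42)
n_trees = 25
trees = []
for i in range(n_trees):
    # Step 1: bootstrap sample (rows drawn with replacement)
    idx = rng.integers(0, len(X_train), size=len(X_train))
    # Steps 2-3: grow a full tree that tries sqrt(p) random features per split
    tree = DecisionTreeClassifier(max_features="sqrt", random_state=i)
    tree.fit(X_train[idx], y_train[idx])
    trees.append(tree)

# Step 4: every tree votes; the most frequent class wins for each test sample
votes = np.stack([t.predict(X_test) for t in trees])  # shape (n_trees, n_test)
y_pred = np.array([np.bincount(col).argmax() for col in votes.T])

accuracy = float(np.mean(y_pred == y_test))
print(f"Hand-rolled forest accuracy: {accuracy:.2f}")
```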

Step 5: Out-of-Bag (OOB) Error Estimation (Optional)

- The OOB samples, which were not used to train a given tree, serve as a validation set for that tree.

- The algorithm computes the OOB error to assess performance without requiring a separate validation dataset. It offers a nearly unbiased estimate of accuracy.
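In scikit-learn, the OOB estimate is available by passing oob_score=True. The sketch below assumes the Iris dataset and 200 trees, both chosen only for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)

# oob_score=True scores each sample using only the trees that did not train on it
model = RandomForestClassifier(n_estimators=200, oob_score=True, random_state=42)
model.fit(X, y)

oob_accuracy = model.oob_score_
print(f"OOB accuracy estimate: {oob_accuracy:.3f}")
print(f"OOB error estimate:    {1 - oob_accuracy:.3f}")
```

Using enough trees matters here: with very few trees, some samples may never be out-of-bag and the estimate becomes unreliable.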

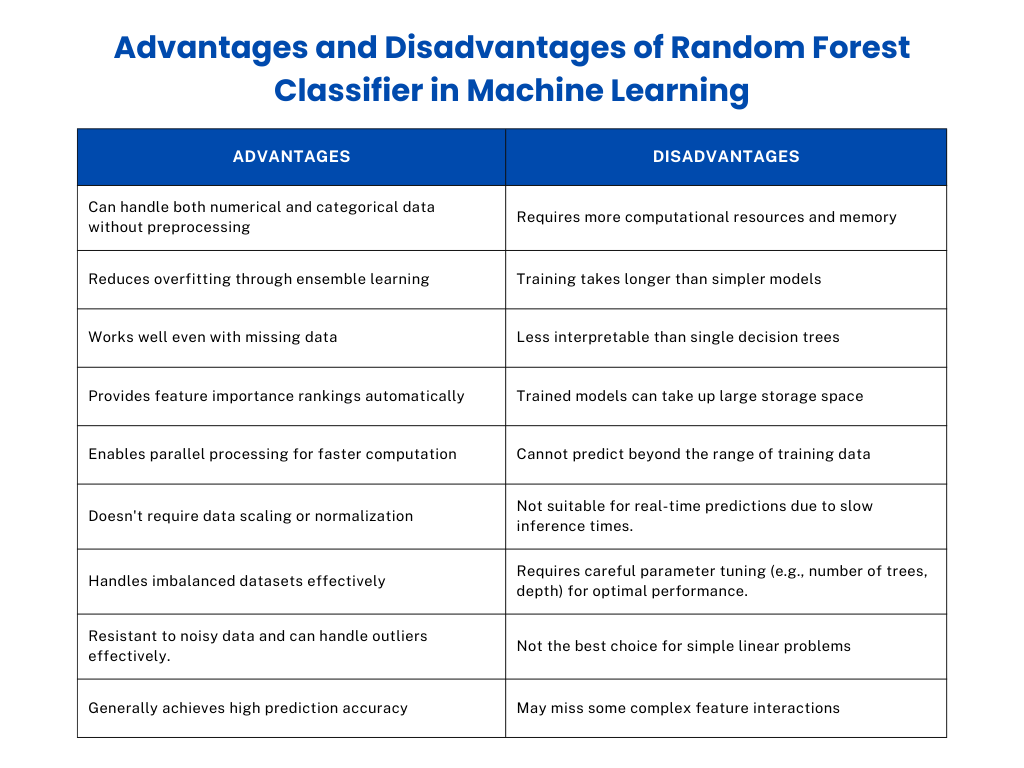

Advantages and Disadvantages of the Random Forest Classifier

The Random Forest classifier is regarded as one of the most powerful machine learning algorithms due to its ability to handle a variety of data types and tasks, including classification and regression. However, it also comes with some trade-offs that need to be considered when choosing the right algorithm for a given problem.

Advantages of Random Forest Classifier

- Random Forest can process both numerical and categorical data without requiring extensive preprocessing or transformations.

- Its ensemble learning approach reduces variance, making it less prone to overfitting than single decision trees.

- Random Forest can account for missing data and make predictions even when some feature values are unavailable.

- It provides a ranking of feature importance, offering insights into which variables contribute most to predictions.

- The ability to process data in parallel makes it scalable and efficient for large datasets.

Disadvantages of Random Forest Classifier

- Training multiple trees requires more memory and processing power than simpler models like logistic regression.

- Unlike single decision trees, the ensemble structure makes it harder to interpret and visualize predictions.

- Models with many trees may occupy significant storage space, especially in big data applications.

- Random Forest may have slow inference times. This can limit its use in scenarios requiring instant predictions.

- Careful adjustment of hyperparameters (e.g., number of trees, maximum depth) is necessary to optimize performance and avoid excessive complexity.

The table below outlines the key strengths and limitations of the Random Forest algorithm.

Random Forest Classifier in Classification and Regression

The Random Forest algorithm adapts effectively to classification and regression tasks by using slightly different approaches for each type of problem.

Classification

In classification, a Random Forest uses a voting system to predict categorical outcomes (such as yes/no decisions or multiple classes). Each decision tree in the forest makes its own prediction, and a majority vote determines the final answer.

For example, if 60 trees predict “yes” and 40 predict “no,” the final prediction will be “yes.”

This approach works particularly well for problems with:

- Binary classification (e.g., spam vs. non-spam emails).

- Multi-class classification (e.g., identifying species of flowers based on petal dimensions).

- Imbalanced datasets, where the class distribution is uneven and the ensemble nature of the forest can help reduce bias.

Regression

Random Forest uses a different approach for regression tasks, where the goal is to predict continuous values (like house prices or temperature). Instead of voting, each decision tree predicts a specific numerical value. The final prediction is calculated by averaging all these individual predictions. This method effectively handles complex relationships in data, especially when the connections between variables aren’t straightforward.

This approach is ideal for:

- Forecasting tasks (e.g., weather predictions or stock prices).

- Non-linear relationships, where complex interactions exist between variables.
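The averaging step in regression can be verified directly. The sketch below assumes scikit-learn's RandomForestRegressor and a synthetic sine-shaped dataset; it averages the individual trees by hand and compares the result with the forest's own output.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic non-linear data: y = sin(x) plus a little noise
rng = np.random.default_rng(0)
X = rng.uniform(0, 6, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=300)

model = RandomForestRegressor(n_estimators=50, random_state=0)
model.fit(X, y)

X_new = np.array([[1.0], [3.0], [5.0]])
forest_pred = model.predict(X_new)

# Averaging the individual trees by hand reproduces the forest's output
tree_preds = np.stack([tree.predict(X_new) for tree in model.estimators_])
manual_mean = tree_preds.mean(axis=0)

print(forest_pred)
print(manual_mean)
```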

Random Forest vs. Other Machine Learning Algorithms

The table highlights the key differences between Random Forest and other machine learning algorithms, focusing on complexity, accuracy, interpretability, and scalability.

| Aspect | Random Forest | Decision Tree | SVM (Support Vector Machine) | KNN (K-Nearest Neighbors) | Logistic Regression |
| --- | --- | --- | --- | --- | --- |
| Model Type | Ensemble method (multiple decision trees combined) | Single decision tree | Non-probabilistic, margin-based classifier | Instance-based, non-parametric | Probabilistic, linear classifier |
| Complexity | Moderately high (due to the ensemble of trees) | Low | High, especially with non-linear kernels | Low | Low |
| Accuracy | High accuracy, especially for large datasets | Can overfit and have lower accuracy on complex datasets | High for well-separated data; less effective for noisy datasets | Dependent on the choice of k and distance metric | Performs well for linear relationships |
| Handling Non-Linear Data | Excellent, captures complex patterns due to tree ensembles | Limited | Excellent with non-linear kernels | Moderate, depends on k and data distribution | Poor |
| Overfitting | Less prone to overfitting (due to averaging of trees) | Highly prone to overfitting | Susceptible to overfitting with non-linear kernels | Prone to overfitting with small k; underfitting with large k | Less prone to overfitting |

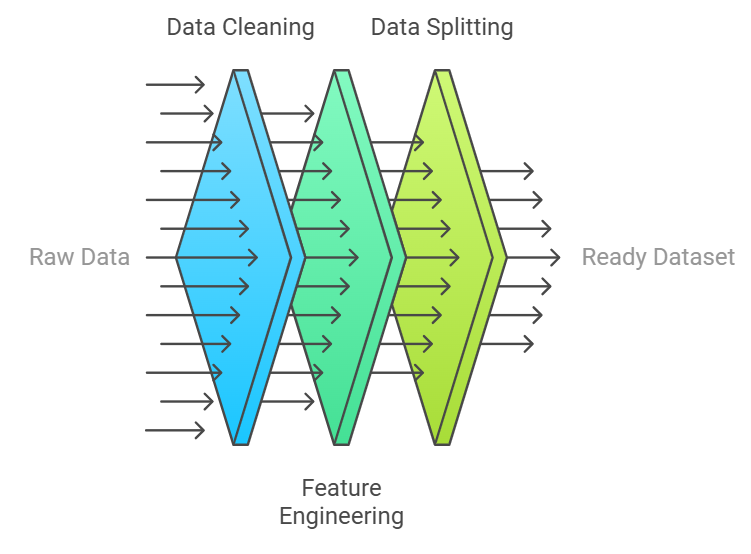

Key Steps of Data Preparation for Random Forest Modeling

Adequate data preparation is crucial for building a robust Random Forest model. Here’s a comprehensive checklist to ensure optimal data readiness:

1. Data Cleaning

- Use imputation methods like mean, median, or mode for missing values. Some Random Forest implementations can also handle missing values natively, for example through surrogate splits.

- Use boxplots or z-scores to detect outliers, and decide whether to remove or transform them based on domain knowledge.

- Ensure categorical values are standardized (e.g., ‘Male’ vs. ‘M’) to avoid errors during encoding.

2. Feature Engineering

- Combine features or derive new ones, such as age groups or time intervals from timestamps.

- Use label encoding for ordinal data and apply one-hot encoding for nominal categories.
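The two encodings can be applied together in one step. The sketch below assumes scikit-learn's ColumnTransformer and a hypothetical toy table in which "size" is ordinal, "color" is nominal, and "age" is numeric; the column names and values are invented for illustration.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# Hypothetical toy data: "size" is ordinal, "color" is nominal, "age" is numeric
df = pd.DataFrame({
    "size": ["small", "large", "medium", "small"],
    "color": ["red", "green", "blue", "green"],
    "age": [3, 10, 7, 1],
})

encoder = ColumnTransformer(
    transformers=[
        # Ordinal: keep the natural order small < medium < large
        ("size", OrdinalEncoder(categories=[["small", "medium", "large"]]), ["size"]),
        # Nominal: one indicator column per category
        ("color", OneHotEncoder(handle_unknown="ignore"), ["color"]),
    ],
    remainder="passthrough",  # leave numeric columns such as "age" untouched
    sparse_threshold=0,       # always return a dense array
)

X_encoded = encoder.fit_transform(df)
print(X_encoded.shape)  # one ordinal column + three indicator columns + age
print(X_encoded)
```

Stating the category order explicitly for the ordinal column avoids the alphabetical ordering an encoder would otherwise infer.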

3. Data Splitting

- Use an 80/20 or 70/30 split to balance the training and testing phases.

- In classification problems with imbalanced data, use stratified sampling to maintain class proportions in both training and testing sets.
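A stratified 80/20 split can be sketched with scikit-learn's train_test_split. The Iris dataset is used here only as a convenient example; it has 50 samples per class, so the effect of stratification is easy to read off the counts.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# 80/20 split; stratify=y keeps each class's share equal in both sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

train_counts = np.bincount(y_train)
test_counts = np.bincount(y_test)
print("train class counts:", train_counts)
print("test class counts: ", test_counts)
```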

How to Implement Random Forest Algorithm

Below is a simple Random Forest example using scikit-learn for classification. The dataset used is the built-in Iris dataset.

    import pandas as pd
    from sklearn.datasets import load_iris
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
    from sklearn.model_selection import train_test_split

    # Load the Iris dataset: features in X, species labels in y
    iris = load_iris()
    X = iris.data
    y = iris.target

    # Preview the data as a DataFrame
    iris_df = pd.DataFrame(data=iris.data, columns=iris.feature_names)
    iris_df['target'] = iris.target
    print(iris_df.head())

    # Hold out 30% of the samples for testing
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

    # Train a forest of 100 trees with a fixed seed for reproducibility
    rf_classifier = RandomForestClassifier(n_estimators=100, random_state=42)
    rf_classifier.fit(X_train, y_train)

    # Predict on the test set and evaluate
    y_pred = rf_classifier.predict(X_test)
    accuracy = accuracy_score(y_test, y_pred)
    print(f"Accuracy: {accuracy:.2f}")
    print("\nClassification Report:")
    print(classification_report(y_test, y_pred))
    print("\nConfusion Matrix:")
    print(confusion_matrix(y_test, y_pred))

Explanation of the Code

Now, let’s break the above Random Forest algorithm in machine studying instance into a number of components to know how the code works:

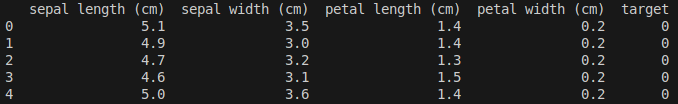

- Information Loading:

- The Iris dataset is a traditional dataset in machine studying for classification duties.

- X incorporates the options (sepal and petal measurements), and y incorporates the goal class (species of iris). Right here is the primary 5 knowledge rows within the Iris dataset.

- Information Splitting:

- The dataset is cut up into coaching and testing units utilizing train_test_split.

- Mannequin Initialization:

- A Random Forest classifier is initialized with 100 timber (n_estimators=100) and a hard and fast random seed (random_state=42) for reproducibility.

- Mannequin Coaching:

- The match technique trains the Random Forest on the coaching knowledge.

- Prediction:

- The predict technique generates predictions on the check set.

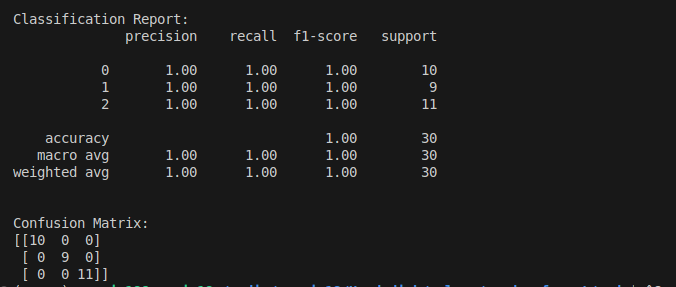

- Analysis:

- The accuracy_score operate computes the mannequin’s accuracy.

- classification_report gives detailed precision, recall, F1-score, and assist metrics for every class.

- confusion_matrix exhibits the classifier’s efficiency when it comes to true positives, false positives, true negatives, and false negatives.

Output Instance:

This instance demonstrates easy methods to successfully use the Random Forest classifier in Scikit-Be taught for a classification drawback. You’ll be able to modify parameters like n_estimators, max_depth, and max_features to fine-tune the mannequin for particular datasets and functions.

Potential Challenges and Solutions When Using the Random Forest Algorithm

Several challenges may arise when using the Random Forest algorithm, such as high dimensionality, imbalanced data, and memory constraints. These issues can be mitigated by employing feature selection, class weighting, and tree depth control to improve model performance and efficiency.

1. High Dimensionality

Random Forest can struggle with datasets containing a large number of features, causing increased computation time and reduced interpretability.

Solutions:

Use feature importance scores to select the most relevant features.

    # One importance score per feature of the fitted classifier
    importances = rf_classifier.feature_importances_

Apply algorithms like Principal Component Analysis (PCA) or t-SNE to reduce feature dimensions.

    from sklearn.decomposition import PCA

    # n_components must not exceed the number of features in X
    pca = PCA(n_components=10)
    X_reduced = pca.fit_transform(X)

2. Imbalanced Data

Random Forest may produce biased predictions when the dataset has imbalanced classes.

Solutions:

Apply class weights. You can assign higher weights to minority classes using the class_weight='balanced' parameter in scikit-learn.

    # Weight classes inversely to their frequency in the training data
    clf = RandomForestClassifier(class_weight='balanced')

Use algorithms like Balanced Random Forest to resample data before training.

    # Requires the imbalanced-learn package
    from imblearn.ensemble import BalancedRandomForestClassifier

    clf = BalancedRandomForestClassifier(n_estimators=100)

3. Memory Constraints

Training large forests with many decision trees can be memory-intensive, especially for big data applications.

Solutions:

- Reduce the number of decision trees.

- Set a maximum depth (max_depth) to avoid overly large trees and excessive memory usage.

- Use tools like Dask or H2O.ai to handle datasets too large to fit into memory.
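The effect of the first two options can be gauged by comparing serialized model sizes. The sketch below assumes scikit-learn, synthetic data, and pickled size as a rough proxy for memory use; the tree counts and depth limit are arbitrary.

```python
import pickle

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=2000, n_features=20, random_state=0)


def pickled_size_kb(model):
    """Size of the serialized model in kilobytes (a rough proxy for memory use)."""
    return len(pickle.dumps(model)) / 1024


large = RandomForestClassifier(n_estimators=200, random_state=0).fit(X, y)
small = RandomForestClassifier(n_estimators=50, max_depth=5, random_state=0).fit(X, y)

large_kb = pickled_size_kb(large)
small_kb = pickled_size_kb(small)
print(f"200 unrestricted trees: {large_kb:.0f} KB")
print(f"50 trees, max_depth=5:  {small_kb:.0f} KB")
```

A smaller forest may lose some accuracy, so it is worth checking a validation score alongside the size before settling on the reduced settings.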

Real-Life Examples of Random Forest

Here are three practical applications of Random Forest showing how it solves real-world problems:

Retail Analytics

Random Forest helps predict customer purchasing behavior by analyzing shopping history, browsing patterns, demographic data, and seasonal trends. Major retailers use these predictions to optimize inventory levels and create personalized marketing campaigns, achieving up to 20% improvement in sales forecasting accuracy.

Medical Diagnostics

Random Forest aids doctors in disease detection by processing patient data, including blood test results, vital signs, medical history, and genetic markers. A notable example is breast cancer detection, where Random Forest models analyze mammogram results alongside patient history to identify potential cases with over 95% accuracy.

Environmental Science

Random Forest predicts wildlife population changes by processing data about temperature patterns, rainfall, human activity, and historical species counts. Conservation teams use these predictions to identify endangered species and implement protective measures before population decline becomes critical.

Future Trends in Random Forest and Machine Learning

The evolution of Random Forest continues alongside broader developments in machine learning technology. Here’s a look at the key trends shaping its future:

1. Integration with Deep Learning

- Hybrid models combining Random Forest with neural networks.

- Enhanced feature extraction capabilities.

2. Automated Optimization

- Advanced automated hyperparameter tuning.

- Intelligent feature selection.

3. Distributed Computing

- Improved parallel processing capabilities.

- Better handling of big data.

Conclusion

Random Forest is a robust model that combines multiple decision trees to make reliable predictions. Its key strengths include handling various data types, managing missing values, and identifying important features automatically.

Through its ensemble approach, Random Forest delivers consistent accuracy across different applications while remaining straightforward to implement. As machine learning advances, Random Forest continues to prove its value through its balance of sophisticated analysis and practical utility, making it a trusted choice for modern data science challenges.

FAQs on Random Forest Algorithm

1. What Is the Optimal Number of Trees for a Random Forest?

Starting with 100-500 decision trees usually gives good results. The number can be increased when more computational resources are available and greater prediction stability is required.

2. How Does Random Forest Handle Missing Values?

Random Forest can manage missing values through several techniques, including surrogate splits and imputation methods, depending on the implementation. This helps the model maintain accuracy even when data is incomplete.

3. What Techniques Prevent Overfitting in Random Forest?

Random Forest limits overfitting through two main mechanisms: bootstrap sampling and random feature selection. These create diverse trees and reduce prediction variance, leading to better generalization.

4. What Distinguishes Random Forest from Gradient Boosting?

Both algorithms use ensemble methods, but their approaches differ significantly. Random Forest builds trees independently and in parallel, while Gradient Boosting constructs trees sequentially, with each new tree focusing on correcting errors made by the previous trees.

5. Does Random Forest Work Effectively with Small Datasets?

Random Forest can perform well with small datasets. However, parameter adjustments, particularly the number of trees and maximum depth settings, are crucial to maintaining model performance and preventing overfitting.

6. What Types of Problems Can Random Forest Solve?

Random Forest is highly versatile and can handle:

- Classification: Spam detection, disease diagnosis, fraud detection.

- Regression: House price prediction, sales forecasting, temperature prediction.

7. Can Random Forest Be Used for Feature Selection?

Yes, Random Forest provides feature importance scores to rank variables based on their contribution to predictions. This is particularly useful for dimensionality reduction and identifying key predictors in large datasets.

8. What Are the Key Hyperparameters in Random Forest, and How Do I Tune Them?

Random Forest models benefit from careful tuning of several key parameters that significantly influence model performance. These hyperparameters control how the forest grows and makes decisions:

- n_estimators: Number of trees (default = 100).

- max_depth: Maximum depth of each tree (default = None, meaning unlimited).

- min_samples_split: Minimum samples required to split a node.

- min_samples_leaf: Minimum samples required at a leaf node.

- max_features: Number of features considered for each split.
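One common way to tune these parameters is a cross-validated grid search. The sketch below assumes scikit-learn's GridSearchCV, the Iris dataset, and a deliberately tiny grid whose values are illustrative rather than recommended.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

X, y = load_iris(return_X_y=True)

# A deliberately small, illustrative grid; real searches usually cover more values
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [None, 3],
    "min_samples_leaf": [1, 3],
    "max_features": ["sqrt", None],
}

# Try every combination with 3-fold cross-validation
search = GridSearchCV(
    RandomForestClassifier(random_state=42),
    param_grid,
    cv=3,
    scoring="accuracy",
)
search.fit(X, y)

print("Best parameters:", search.best_params_)
print(f"Best CV accuracy: {search.best_score_:.3f}")
```

For larger grids, a randomized search over the same parameters is often a cheaper starting point than trying every combination.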

9. Can Random Forest Handle Imbalanced Datasets?

Yes, it can handle imbalance using:

- Class weights: Assign higher weights to minority classes.

- Balanced Random Forest variants: Use sampling techniques to equalize class representation.

- Oversampling and undersampling techniques: Methods like SMOTE and Tomek Links balance datasets before training.

10. Is Random Forest Suitable for Real-Time Predictions?

Random Forest isn’t ideal for real-time applications due to long inference times, especially with a large number of trees. For faster predictions, consider algorithms like Logistic Regression or Gradient Boosting with fewer trees.