Hello 👋 My name is Amagi, a contract frontend developer based in Vancouver.

Some time ago I released a library called VFX-JS, which lets you add fancy visual effects to your projects. With VFX-JS, you can achieve advanced visual effects like these without the hassle of dealing with WebGL:

In this article, I’ll explain why I created VFX-JS, how it works, and how you can take your visuals to the next level.

What’s VFX-JS?

VFX-JS is a JavaScript library that makes it easy to add WebGL-powered effects to DOM elements, like images and videos.

Creating these kinds of visual effects with WebGL usually involves a lot of tedious setup. While libraries like Three.js can help, you still need to configure cameras, geometry, materials, and textures, even for something as simple as displaying a single image.

VFX-JS changes that. It lets you use graphic elements, like images or videos, just as you would when designing a standard website with HTML and CSS. VFX-JS automatically generates 3D objects and applies visual effects, so you can focus on creating stunning visuals without worrying about the technical setup.

To use VFX-JS in your project, install it from npm:

    npm i @vfx-js/core

Then create a VFX object in your script:

    import { VFX } from '@vfx-js/core';

    const img = document.querySelector('#img');

    const vfx = new VFX();
    vfx.add(img, { shader: "glitch", overflow: 100 });

…and BOOM!! You’ll see this glitch effect 💥

Custom Shaders

VFX-JS includes several preset effects, but you can also create your own by writing a GLSL shader.

A shader is the backbone of WebGL visual effects. It’s essentially a small program that runs on the GPU for every pixel an object covers on the screen, determining that pixel’s final color. Shaders offer incredible flexibility: you can perform color correction, glitch effects, parallax animations, and even create entirely new 3D scenes.

Rather than using a preset effect, you can pass a custom fragment shader to the shader parameter:

    const shader = `
    precision highp float;
    uniform vec2 resolution;
    uniform vec2 offset;
    uniform sampler2D src;
    out vec4 outColor;

    void main (void) {
        outColor = texture(src, (gl_FragCoord.xy - offset) / resolution);
        outColor.rgb = 1. - outColor.rgb; // Invert colors!
    }
    `;

    vfx.add(el, { shader });

By writing custom shaders, you can easily create effects that respond to user interactions and more. We’ll explore this in greater detail later in the article.
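To make the idea of a small program that runs once per pixel more concrete, here’s a rough CPU-side emulation in plain JavaScript. It’s only a sketch: runFragmentShader and invert are hypothetical helpers made up for this illustration, not part of VFX-JS, and a real shader runs on the GPU in parallel rather than in a loop.

```javascript
// Hypothetical CPU emulation of a fragment shader (not part of VFX-JS).
// An "image" is an array of [r, g, b, a] pixels, each channel in 0..1.

// The per-pixel program: the JavaScript counterpart of
// `outColor.rgb = 1. - outColor.rgb;`
function invert([r, g, b, a]) {
  return [1 - r, 1 - g, 1 - b, a];
}

// Run the per-pixel program once for every pixel.
// (The GPU does this in parallel; a loop is enough for the sketch.)
function runFragmentShader(pixels, program) {
  return pixels.map((pixel) => program(pixel));
}

const image = [
  [1, 0, 0, 1], // red
  [0, 0, 0, 1], // black
];

const inverted = runFragmentShader(image, invert);
console.log(inverted); // red becomes cyan, black becomes white
```

The alpha channel is passed through untouched, just like in the GLSL version, which only rewrites outColor.rgb.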

Use VFX-JS in CodePen

VFX-JS is available on CDNs like esm.sh or jsDelivr. You can use it in CodePen with just a single line of code!

    import { VFX } from "https://esm.sh/@vfx-js/core";

This is very useful for quickly sketching out your ideas.

See the Pen VFX-JS scroll animation by Amagi (@fand) on CodePen.

Why WebGL?

VFX-JS works by automatically loading the specified element as a WebGL texture and applying shader effects to it. But what exactly is WebGL?

Imagine you want to animate a single image on your website. You could move the image using CSS with a @keyframes animation or a property like transition: left 1s;.

You could also use JavaScript to manually change the image’s properties.

However, you’ll notice that all these solutions only give us control over DOM properties. They don’t allow us to pixelate an image, apply color correction, add a VHS effect, or create similar advanced effects.

This is where WebGL comes into play.

WebGL is a set of 3D graphics APIs for the web (essentially, OpenGL for the web). It’s widely used in websites, games, physics simulations, and AI. Modern PCs and smartphones come equipped with a GPU (Graphics Processing Unit), designed specifically for graphics tasks. WebGL leverages the GPU, providing immense computational power that can be used to create stunning visual effects.

So yes, with WebGL, you can achieve effects like pixelation and much more!

In fact, if you’re aiming to create advanced visuals on the web, using WebGL is almost unavoidable. You can find many articles about WebGL on Codrops: https://tympanus.net/codrops/tag/webgl

How VFX-JS Works

VFX-JS handles all the tedious aspects of WebGL, but its underlying mechanics are simple. It overlays the entire window with a large WebGL canvas and positions 3D elements to match the original locations of your images and videos.

- Load <img>/<video> as a WebGL texture
- Add 3D planes to match the positions of the elements
- Synchronize with the original <img> or <video>
- Apply shader effects to enhance visuals
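The positioning step can be sketched in a few lines of JavaScript. This is my own illustration under assumptions, not VFX-JS’s actual source: rectToGlPlane is a hypothetical helper, and I’m assuming the offset and resolution uniforms used in the shaders in this article hold the element’s bottom-left corner and size in canvas pixels.

```javascript
// Hypothetical helper (not VFX-JS's actual code): convert a DOM rectangle,
// measured from the top-left of the viewport, into the bottom-left-origin
// pixel coordinates that gl_FragCoord uses.
function rectToGlPlane(rect, viewportHeight, pixelRatio = 1) {
  return {
    // Bottom-left corner of the element in canvas pixels
    offset: [
      rect.left * pixelRatio,
      (viewportHeight - (rect.top + rect.height)) * pixelRatio,
    ],
    // Size of the element in canvas pixels
    resolution: [rect.width * pixelRatio, rect.height * pixelRatio],
  };
}

// Example: a 200x100 image placed 50px from the left and 30px from the top
// of a viewport that is 600px tall.
const plane = rectToGlPlane({ left: 50, top: 30, width: 200, height: 100 }, 600);
console.log(plane); // offset: [50, 470], resolution: [200, 100]
```

In a browser, the rect would typically come from element.getBoundingClientRect(), and it would need to be recomputed on scroll and resize to keep the plane in sync with the original element.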

Here’s an illustration of how it sets up 3D elements:

Text Effects

VFX-JS has another unique feature: it can apply visual effects to text elements, like <span> and <h1>. Creating text effects in WebGL is usually quite difficult and tedious. Most WebGL libraries don’t support text rendering out of the box, and even when they do, you have to manually create 3D text geometries or use sprite textures, which come with limited styling options.

VFX-JS solves this with some black magic under the hood 🔮. It converts text elements into SVG images using an SVG feature you’ve probably never heard of (foreignObject), draws them onto a canvas, and then loads them as WebGL textures. Yeah, it’s kinda terrifying, but it works:

Although it’s still somewhat experimental and has several limitations (e.g., deeply nested elements), it’s extremely useful for sketching text effects on your website.
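If you’re curious what the foreignObject trick looks like, here’s a minimal sketch of the general technique. It is not VFX-JS’s actual implementation: htmlToSvgDataUrl is a hypothetical helper, and the real library has to handle many more details, such as copying computed styles.

```javascript
// Hypothetical sketch of the foreignObject trick (not VFX-JS's actual code).
// Wrap an HTML snippet in an SVG <foreignObject> and return it as a data URL
// that could be used as the `src` of an image and then drawn onto a canvas.
function htmlToSvgDataUrl(html, width, height) {
  const svg =
    `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">` +
    `<foreignObject width="100%" height="100%">` +
    // The embedded markup must be well-formed XHTML in the XHTML namespace
    `<div xmlns="http://www.w3.org/1999/xhtml">${html}</div>` +
    `</foreignObject></svg>`;
  return `data:image/svg+xml;charset=utf-8,${encodeURIComponent(svg)}`;
}

const url = htmlToSvgDataUrl('<h1 style="color: red">Hello</h1>', 300, 80);
console.log(url.slice(0, 33)); // the data URL prefix
```

In a browser, you would assign the URL to an Image, wait for it to load, draw it with drawImage() on a 2D canvas, and then upload that canvas as a texture. Styles have to be inlined as in the example, because an SVG loaded as an image can’t pull in the page’s external stylesheets.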

Case Study: Pixelation Effect

In this chapter, we’re going to learn how to write custom effects using VFX-JS.

We’ll be using a new language called GLSL… but trust me, it’s easier than it sounds!

Let’s start with a simple pixelation effect.

The first step is simple: we’ll take an input as a WebGL texture and display it on the screen.

You can try it out on CodePen:

See the Pen VFX-JS tutorial: Pixelation Effect by Amagi (@fand) on CodePen.

First, we set up a VFX object and pass in the input element:

    import { VFX } from '@vfx-js/core';

    // 1. Get the image element
    const img = document.querySelector('#img');

    // 2. Register the image to VFX-JS
    const vfx = new VFX();
    vfx.add(img, { shader: "rainbow" });

Then let’s create a custom shader!

    const shader = `
    uniform vec2 resolution;
    uniform vec2 offset;
    uniform sampler2D src;
    out vec4 outColor;

    void main() {
        vec2 uv = (gl_FragCoord.xy - offset) / resolution;
        vec4 color = texture(src, uv);
        outColor = color;
    }
    `;

    // Register the image with the custom shader instead of a preset
    const vfx = new VFX();
    vfx.add(img, { shader });

Congratulations! 🎉 This is your first GLSL shader. In this code, you’re doing the following:

- Calculate the UV coordinate from the pixel position (gl_FragCoord.xy).
- Extract the color from the input texture.
- Assign the color to the output (outColor).

    // Calculate the UV coordinate
    vec2 uv = (gl_FragCoord.xy - offset) / resolution;

    // Read the input texture
    vec4 color = texture(src, uv);

    // Assign the color to the output
    outColor = color;

The UV coordinate represents the XY position used to fetch the texture color. Typically, uv values are within the range of 0 to 1: the bottom-left corner corresponds to vec2(0.0, 0.0), and the top-right corner corresponds to vec2(1.0, 1.0).
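If the UV calculation feels abstract, it can help to run the same arithmetic on the CPU. The snippet below is a small sketch in plain JavaScript; fragCoordToUv is a hypothetical name used only here, and the element size and position are made-up example values.

```javascript
// CPU-side version of `(gl_FragCoord.xy - offset) / resolution`.
// `fragCoordToUv` is a hypothetical name used only for this sketch.
function fragCoordToUv(fragCoord, offset, resolution) {
  return [
    (fragCoord[0] - offset[0]) / resolution[0],
    (fragCoord[1] - offset[1]) / resolution[1],
  ];
}

// An element 200x100 pixels in size whose bottom-left corner sits at (50, 470)
const offset = [50, 470];
const resolution = [200, 100];

console.log(fragCoordToUv([50, 470], offset, resolution));  // [0, 0]     bottom-left
console.log(fragCoordToUv([250, 570], offset, resolution)); // [1, 1]     top-right
console.log(fragCoordToUv([150, 520], offset, resolution)); // [0.5, 0.5] center
```

Subtracting offset moves the origin to the element’s corner, and dividing by resolution scales pixel distances down to the 0 to 1 range.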

Now you can tweak the code to adjust colors, animate positions, add glitches, or anything else you can imagine! For instance, you can invert the RGB values with just one line of code:

      vec4 color = texture(src, uv);
    + color.rgb = 1. - color.rgb; // Invert!
      outColor = color;

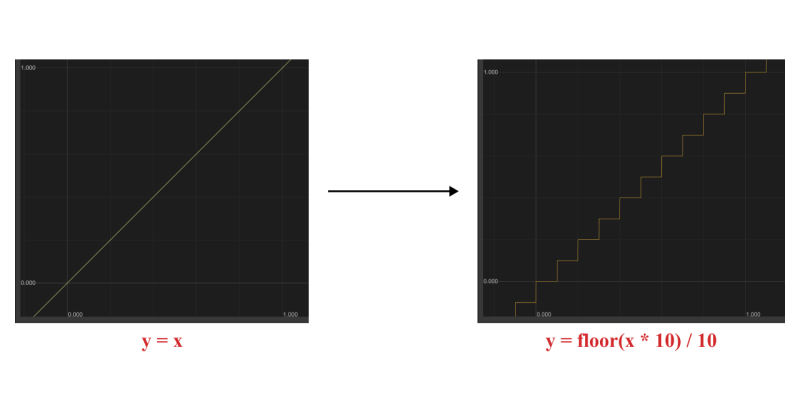

This time, let’s modify the uv to create a pixelation effect. Add the following line of code:

      vec2 uv = (gl_FragCoord.xy - offset) / resolution;
    + uv.x = floor(uv.x * 10.0) / 10.0;
      vec4 color = texture(src, uv);

floor() is a function that rounds a number down to the nearest integer. When used with uv values, it creates a stair-step pattern by turning smooth gradients into discrete steps.

Et voilà! We’ve created a pixelation effect with just a single line of code!
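To see the stair-step pattern in numbers, here’s the same rounding written in JavaScript. quantize is a hypothetical helper name for this sketch; the arithmetic mirrors the floor() line in the shader.

```javascript
// Same rounding as `floor(uv.x * 10.0) / 10.0`, written in JavaScript.
// `quantize` is a hypothetical helper name for this sketch.
function quantize(value, steps) {
  return Math.floor(value * steps) / steps;
}

// Sample a smooth 0..1 ramp and snap each value to one of 10 steps
const ramp = [0.0, 0.04, 0.12, 0.19, 0.37, 0.5, 0.99];
console.log(ramp.map((u) => quantize(u, 10)));
// [0, 0, 0.1, 0.1, 0.3, 0.5, 0.9]
```

Every input between 0.1 and 0.2 collapses to 0.1, so all pixels in that band read the same texture column. That’s what produces the blocky look.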

You can edit the shader and experiment with different variations.

Additionally, VFX-JS includes a built-in time variable that tracks the number of seconds since VFX-JS started. You can use this to animate your effects dynamically!

In this example, I adjusted the pixelation level, used uv.x rather than uv (to pixelate only the X coordinate), and animated it using the time variable. I highly recommend experimenting with the code yourself. It’s a great way to understand how shaders work in VFX-JS and WebGL!
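As a starting point for your own experiments, here’s one possible way to animate the pixelation level, written as a JavaScript sketch rather than GLSL. The formula for the number of columns is my own assumption for illustration (it isn’t taken from the demo), and pixelateX is a hypothetical helper; in a real shader the time value would come from the built-in time variable.

```javascript
// Hypothetical JavaScript sketch of a time-animated, X-only pixelation.
// `time` stands in for the built-in time variable (seconds since start).
function pixelateX(uv, time) {
  // Let the number of columns swing between 5 and 35 over time
  const steps = 20 + 15 * Math.sin(time);
  return [Math.floor(uv[0] * steps) / steps, uv[1]];
}

// At time 0 there are exactly 20 columns
console.log(pixelateX([0.37, 0.8], 0)); // [0.35, 0.8]
```

Only the X coordinate is snapped, so the image breaks into vertical strips whose width changes as time advances.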

Case Study 2: Mouse Shift Effect

One of the great things about VFX-JS is that you can dynamically connect effects to JavaScript!

Let’s create a mouse-driven effect like this and learn how to link shaders to JavaScript values.

See the Pen VFX-JS tutorial: Mouse Shift by Amagi (@fand) on CodePen.

Once again, let’s start with a simple fragment shader:

    uniform vec2 resolution;
    uniform vec2 offset;
    uniform sampler2D src;
    out vec4 outColor;

    void main() {
        vec2 uv = (gl_FragCoord.xy - offset) / resolution;
        outColor = texture(src, uv);
    }

We’re going to track mouse movement. Let’s add an event listener for pointermove events.

    let pos = [0.5, 0.5]; // Current mouse position
    let posDelay = [0.5, 0.5]; // Delayed mouse position

    // Update the current position on mouse move
    window.addEventListener('pointermove', (e) => {
        const x = e.clientX / window.innerWidth;
        const y = e.clientY / window.innerHeight;
        pos = [x, y];
    });

Here, pos and posDelay move within the range of [0, 1], so their default value is set to 0.5.

Next, let’s calculate the mouse velocity and pass it to the shader:

    // Linear interpolation
    const mix = (a, b, t) => a * (1 - t) + b * t;

    vfx.add(document.getElementById('img'), {
        shader,
        uniforms: {
            velocity: () => {
                // Move posDelay toward the mouse position
                posDelay = [
                    mix(posDelay[0], pos[0], 0.05),
                    mix(posDelay[1], pos[1], 0.05),
                ];

                // Return the diff as velocity
                return [
                    pos[0] - posDelay[0],
                    pos[1] - posDelay[1],
                ];
            }
        },
    });

I added a helper function called mix for better readability. This function performs linear interpolation between the arguments a and b using the factor t. For example, mix(0, 1, 0.5) gives 0.5, mix(0, 1, 0.1) gives 0.1, and mix(0, 100, 0.1) gives 10. Linear interpolation is a common technique in graphics programming, so it’s worth learning if you’re not already familiar with it.

In this code, we defined a function that calculates a uniform variable named velocity. This function runs every frame (≈ 60 Hz) and moves posDelay smoothly toward pos, which represents the current position of the mouse pointer. The function then returns the difference between pos and posDelay, effectively giving us the velocity of the mouse pointer.
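To get a feel for how this value behaves over time, you can simulate a few frames without any browser APIs. The snippet below is a stand-alone sketch: velocityStep is a hypothetical function with the same logic as the velocity callback, and the loop stands in for the per-frame calls.

```javascript
// Stand-alone simulation of the velocity callback (a sketch only).
const mix = (a, b, t) => a * (1 - t) + b * t;

let pos = [0.5, 0.5];
let posDelay = [0.5, 0.5];

// One animation frame: ease posDelay toward pos and return the difference
function velocityStep() {
  posDelay = [mix(posDelay[0], pos[0], 0.05), mix(posDelay[1], pos[1], 0.05)];
  return [pos[0] - posDelay[0], pos[1] - posDelay[1]];
}

// The pointer jumps to the right edge, then stays still
pos = [1.0, 0.5];

const velocities = [];
for (let frame = 0; frame < 60; frame++) {
  velocities.push(velocityStep()[0]);
}

console.log(velocities[0].toFixed(3));  // "0.475"
console.log(velocities[59].toFixed(3)); // "0.023"
```

Once the pointer stops, the remaining distance shrinks by 5% every frame, so the velocity decays smoothly toward zero instead of cutting off. A larger factor than 0.05 makes the effect snappier, and a smaller one makes it trail longer.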

Next, we’ll use the velocity in the shader code:

    uniform vec2 velocity;

    void main() {
        vec2 uv = (gl_FragCoord.xy - offset) / resolution;

        // Shift texture position
        uv -= velocity * vec2(1, -1); // flip Y

        outColor = texture(src, uv);
    }

Now, you’ve made the image respond to the mouse movement!
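The Y flip is easy to get backwards, so here’s the same shift in JavaScript with concrete numbers. shiftUv is a hypothetical helper for this sketch. The flip is needed because clientY grows downward on the page, while uv.y grows upward in the texture.

```javascript
// JavaScript version of `uv -= velocity * vec2(1, -1);` (sketch only)
function shiftUv(uv, velocity) {
  return [uv[0] - velocity[0], uv[1] + velocity[1]];
}

// Pointer moving right and down: clientX and clientY both increase
const velocity = [0.125, 0.25];
console.log(shiftUv([0.5, 0.5], velocity)); // [0.375, 0.75]
```

The pixel now reads the texture from further left and further up, so the picture itself appears to slide right and down, following the pointer.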

Finally, let’s make it a bit more dynamic with the RGB shift technique by changing the position shift value for each color channel:

    vec2 d = velocity * vec2(-1, 1);

    // Shift the position for each channel
    vec4 cr = texture(src, uv + d * 1.0);
    vec4 cg = texture(src, uv + d * 1.5);
    vec4 cb = texture(src, uv + d * 2.0);

    // Composite
    outColor = vec4(cr.r, cg.g, cb.b, cr.a + cg.a + cb.a);

And boom! 💥
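Here’s a CPU-side sketch of the same composite, which shows where the colored fringes come from. rgbShift and the fake sample function are hypothetical names for this illustration. I’m also assuming that reads outside the image return transparent black, which in practice depends on how the texture is set up.

```javascript
// CPU-side sketch of the RGB shift composite (hypothetical helper names).
// `sample(uv)` stands in for `texture(src, uv)` and returns [r, g, b, a].
function rgbShift(sample, uv, velocity) {
  const d = [-velocity[0], velocity[1]]; // velocity * vec2(-1, 1)
  const at = (scale) => sample([uv[0] + d[0] * scale, uv[1] + d[1] * scale]);

  const cr = at(1.0);
  const cg = at(1.5);
  const cb = at(2.0);

  // Red from the first sample, green from the second, blue from the third.
  // The summed alpha is clamped to 1 here, as happens when writing to a
  // normalized color buffer.
  return [cr[0], cg[1], cb[2], Math.min(1, cr[3] + cg[3] + cb[3])];
}

// A fake texture: opaque white inside the 0..1 square, transparent outside
const sample = ([u, v]) =>
  u >= 0 && u <= 1 && v >= 0 && v <= 1 ? [1, 1, 1, 1] : [0, 0, 0, 0];

// Near the left edge with the pointer moving right, only the red sample
// still lands inside the image, so a red fringe appears.
console.log(rgbShift(sample, [0.1, 0.5], [0.08, 0])); // [1, 0, 0, 1]
```

Because each channel is read from a slightly different position, the channels only disagree near edges and while the pointer is moving. With zero velocity all three samples coincide and the image looks unchanged.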

Want to Learn More About GLSL?

There are plenty of tutorials and learning materials available online. For video tutorials, I recommend checking out tutorials by the artist Kishimisu and the YouTube channel The Art of Code.

If you enjoy reading, The Book of Shaders is my go-to reference for beginners.

Future of VFX-JS

I developed VFX-JS to empower developers and designers to create WebGL-powered visual effects without getting bogged down in the technical setup. While I believe VFX-JS fulfills this purpose well, there are exciting challenges ahead that I’m eager to tackle.

One major missing feature in VFX-JS is a plugin system. Although it already offers powerful custom shader capabilities, wiring shaders and passing uniform variables can feel tedious. Wouldn’t it be great if we could package effects, like a Lens Distortion effect, as ES modules and share them online for others to use?

I plan to implement a plugin system that allows us to bundle effects with their internal state management while exposing only user-defined parameters. This means effects could be reused across websites with just a few parameter changes and even shared through GitHub or npm.

Another key focus for VFX-JS is stability. While it performs well in production, there are known performance issues, particularly with scrolling lag. We have several promising solutions in the works, and I’m confident we’ll resolve these challenges soon.

That wraps up this article. I hope you’ll explore the world of shaders and create some mind-blowing visuals with VFX-JS!

If you have any questions, feel free to send me a message 👋