Introduction

Over the past couple of years, there has been growing interest in testing the adversarial robustness of natural language processing (NLP) models. Research in this area covers new techniques for generating adversarial examples and for defending against them. Comparing these attacks directly is difficult because they are evaluated on different data and victim models.

Replicating earlier work as a baseline takes time and increases the risk of errors, especially when source code is missing. Replicating results exactly is also difficult because of small details left out of the publications. These issues make benchmark comparisons in this field challenging.

Frameworks like TextAttack have been developed to address these challenges. TextAttack is a Python framework for adversarial attacks, data augmentation, and adversarial training in NLP. It aims to address these challenges and to encourage progress in adversarial robustness. This post explores TextAttack and goes into the details of its components. We will also examine practical implementations through in-depth code examples.

Key Components of TextAttack

TextAttack unifies adversarial attack methods by decomposing NLP attacks into four components:

- Goal Function: The goal function defines the objective of the attack, which may be changing the model's prediction or fooling it into making a particular error.

- Set of Constraints: Constraints are rules the attack must adhere to, for example limiting the number of words changed or ensuring that all modifications are grammatically correct.

- Transformation: The transformation determines how the input text is modified to generate an adversarial example, for instance by substituting words with their synonyms or by inserting or deleting words.

- Search Method: This component determines how the attack explores the space of possible modifications to find the most effective adversarial example, for example using gradient-based methods or random search algorithms.
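To make the decomposition concrete, here is a minimal, framework-free sketch in which each of the four components is a plain Python function. The keyword classifier, stopword list, and substitution table are hypothetical stand-ins invented for illustration; this is not TextAttack's API, only the same division of labor.

```python
from typing import Iterator, List, Optional

# Hypothetical toy lexicons (not TextAttack objects).
POSITIVE = {"great", "fantastic", "good"}
NEGATIVE = {"bad", "awful", "boring"}
STOPWORDS = {"the", "was", "a", "is"}
SUBSTITUTES = {"fantastic": ["okay", "boring"], "movie": ["film"]}


def toy_model(words: List[str]) -> str:
    """Stand-in classifier: positive if positive words outnumber negative ones."""
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score > 0 else "negative"


def goal_reached(words: List[str], original_label: str) -> bool:
    """Goal function (untargeted): any label other than the original counts."""
    return toy_model(words) != original_label


def satisfies_constraints(words: List[str], index: int) -> bool:
    """Constraint: never modify stopwords."""
    return words[index] not in STOPWORDS


def transform(words: List[str], index: int) -> Iterator[List[str]]:
    """Transformation: swap the word at `index` for each listed substitute."""
    for sub in SUBSTITUTES.get(words[index], []):
        yield words[:index] + [sub] + words[index + 1:]


def search(words: List[str], original_label: str) -> Optional[List[str]]:
    """Search method: scan left to right, return the first candidate meeting the goal."""
    for i in range(len(words)):
        if not satisfies_constraints(words, i):
            continue
        for candidate in transform(words, i):
            if goal_reached(candidate, original_label):
                return candidate
    return None


sentence = "the movie was fantastic".split()
label = toy_model(sentence)
adversarial = search(sentence, label)
print(label, "->", adversarial)
```

Swapping any one function (for example, a different search order) changes the attack without touching the other three, which is the point of the modular design.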

Main Features of TextAttack

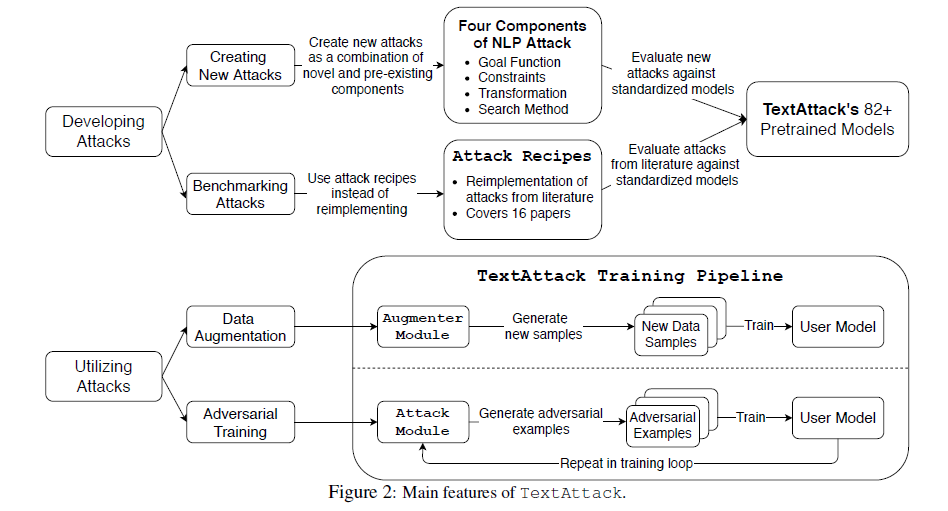

At the heart of TextAttack's modular design are the four main components mentioned above.

In the diagram, the Attack Module section shows TextAttack's ability to re-implement attacks from the literature. It notes that pre-built attack recipes from 16 papers are available for us to use.

The diagram shows two ways to use TextAttack to build attacks: creating new attacks and benchmarking existing ones. Users can create new adversarial strategies by combining new and existing components.

TextAttack offers over 82 pre-trained models, so researchers can test their new attacks against standard models and compare their results with previous work.

The figure also shows two ways of using TextAttack to improve models: data augmentation and adversarial training. With the Augmenter module, users can generate new samples that expand an existing training dataset. With TextAttack's training pipeline, we can create adversarial examples and feed them into the training process to improve our model.
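TextAttack's Augmenter module ships ready-made augmenters (for example `EmbeddingAugmenter` and `WordNetAugmenter` in `textattack.augmentation`). The sketch below only illustrates the underlying idea with a hypothetical, hard-coded synonym table: each augmented sentence changes one word and inherits the label of the sentence it came from.

```python
from typing import Dict, List

# Hypothetical synonym table; real augmenters use their own sources
# (for example word embeddings or WordNet).
SYNONYMS: Dict[str, List[str]] = {
    "movie": ["film"],
    "great": ["excellent", "wonderful"],
}


def augment(sentence: str, max_outputs: int = 3) -> List[str]:
    """Return up to `max_outputs` variants, each changing exactly one word."""
    words = sentence.split()
    variants: List[str] = []
    for i, word in enumerate(words):
        for synonym in SYNONYMS.get(word.lower(), []):
            variants.append(" ".join(words[:i] + [synonym] + words[i + 1:]))
            if len(variants) == max_outputs:
                return variants
    return variants


train = [("the movie was great", 1)]
# Each augmented sentence keeps the label of its source sentence.
augmented = [(variant, label) for text, label in train for variant in augment(text)]
print(augmented)
```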

TextAttack's Pre-trained Models and Datasets

TextAttack's pre-trained models include word-level LSTM and CNN models alongside transformer-based BERT variants. These models have been trained on various datasets provided by HuggingFace.

TextAttack's integration with the HuggingFace nlp library (now called datasets) allows the matching test and validation datasets to be loaded automatically for each pre-trained model. While much prior literature has focused on classification and entailment, TextAttack's range of pre-trained models opens a new avenue for research: it lets researchers study model robustness across all GLUE tasks.

Adversarial Training

With the `textattack train` command and its `--attack` option, which takes an attack recipe, TextAttack can create new training sets of adversarial examples. The process involves several steps:

- Initial training: The model is trained for several epochs on the clean training set.

- Adversarial example generation: An attack produces an adversarial version of each input.

- Dataset substitution: The original dataset is replaced with the perturbed version.

- Periodic regeneration: The adversarial dataset is periodically regenerated to target the model's current weaknesses.
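The four steps can be sketched as a training schedule. This is a minimal illustration under stated assumptions: `train_one_epoch` and `make_adversarial` are hypothetical callables supplied by the caller, and the parameter names are invented here; it is not TextAttack's trainer, which exposes its own options.

```python
from typing import Callable, List


def adversarial_training(
    clean_data: List[str],
    train_one_epoch: Callable[[List[str]], None],  # hypothetical: updates the model
    make_adversarial: Callable[[str], str],        # hypothetical: attacks one input
    clean_epochs: int = 2,
    total_epochs: int = 6,
    regenerate_every: int = 2,
) -> List[str]:
    """Run the schedule and return a log of what happened in each epoch."""
    log: List[str] = []
    train_data = clean_data
    for epoch in range(total_epochs):
        # Step 1: the first `clean_epochs` epochs use the clean training set only.
        past_warmup = epoch >= clean_epochs
        if past_warmup and (epoch - clean_epochs) % regenerate_every == 0:
            # Steps 2-4: attack every input against the current model and
            # substitute the perturbed set for the training data.
            train_data = [make_adversarial(x) for x in clean_data]
            log.append(f"epoch {epoch}: regenerated adversarial set")
        train_one_epoch(train_data)
        kind = "clean" if train_data is clean_data else "adversarial"
        log.append(f"epoch {epoch}: trained on {kind} data")
    return log


# Usage with stand-in callables that only record what they receive.
seen: List[List[str]] = []
log = adversarial_training(
    ["good film", "bad film"],
    train_one_epoch=lambda data: seen.append(list(data)),
    make_adversarial=lambda text: text + " !",
)
print("\n".join(log))
```

With these defaults the model sees clean data for two epochs, then a fresh adversarial set is generated every second epoch.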

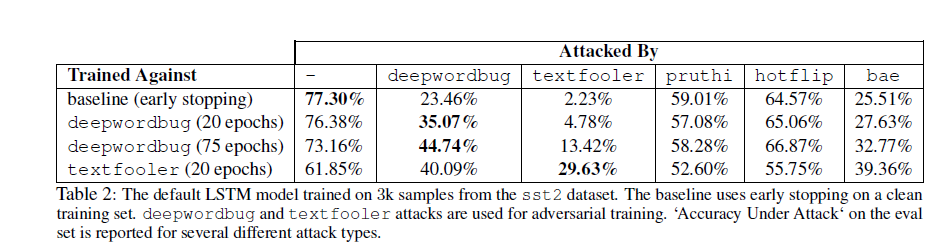

The table illustrates the accuracy of a standard LSTM classifier with and without adversarial training against various attack recipes in TextAttack.

It shows the LSTM model's accuracy under the deepwordbug, textfooler, pruthi, hotflip, and bae attacks, compared with a baseline model trained only on the clean training set. The adversarially trained variants use deepwordbug for 20 and 75 epochs, and textfooler for 20 epochs.

Benchmarking Existing Attacks with Attack Recipes

TextAttack's modular structure makes it possible to bring attacks from many past research papers into one framework. New attack strategies can often be built by adding just one or two new components, which makes developing new attacks more flexible and productive.

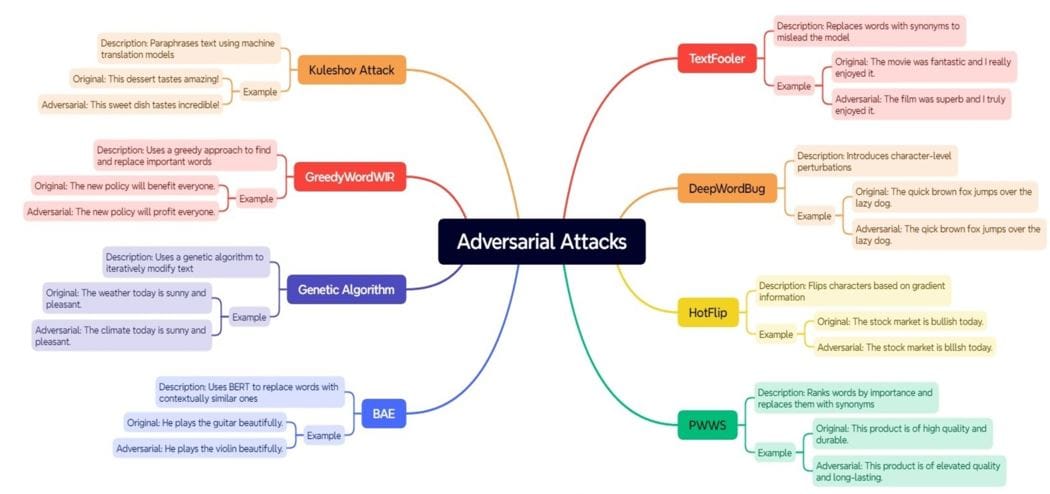

An attack recipe is a set of predefined steps and configurations used to produce adversarial examples for NLP models. Each attack recipe consists of the four main components (Goal Function, Constraints, Transformation, Search Method).

Attack recipes distill best practices and techniques from recent research. They speed up the production of adversarial examples and help both researchers and practitioners, letting us apply complex attack strategies without knowing all the underlying details. Some common types of attacks are illustrated in the diagram.

Practical Use Case: Sentiment Analysis

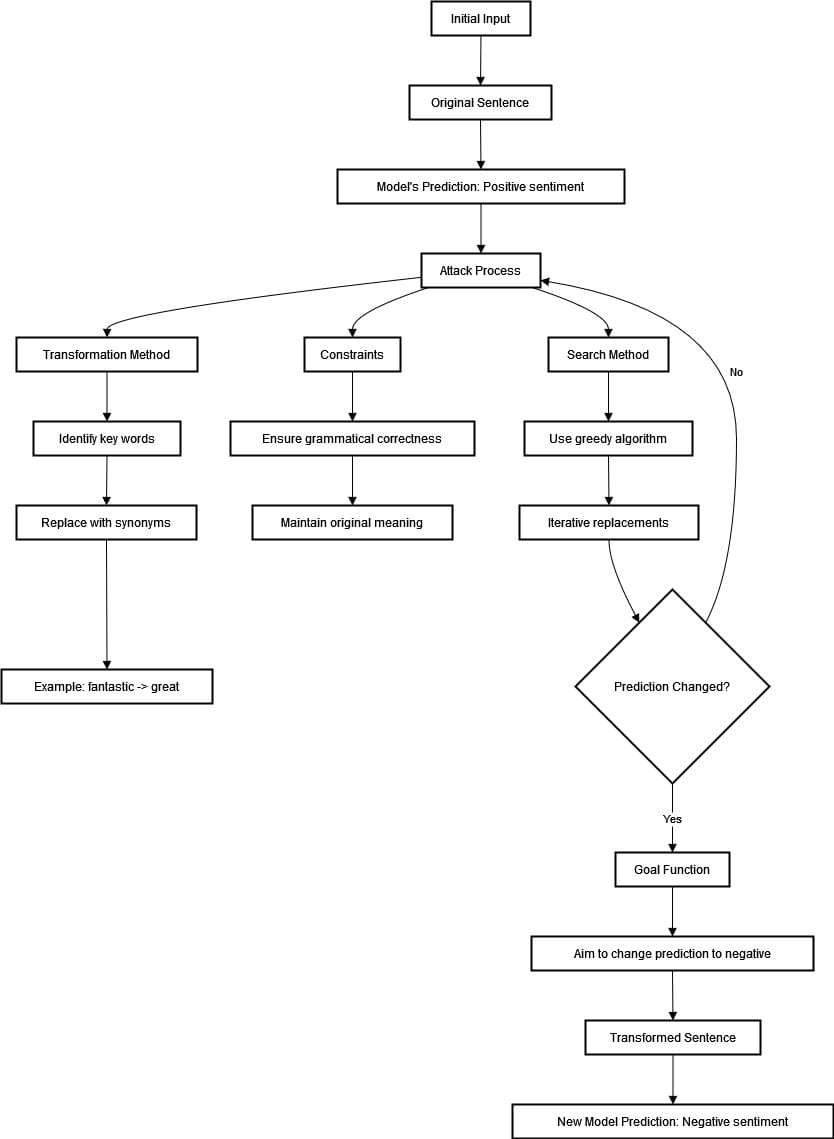

Let's consider a sentiment analysis model that classifies movie reviews as positive or negative. We want to test the strength and robustness of this model using the textfooler attack recipe. The input is "The movie was fantastic", and the model predicts positive sentiment. The diagram shows the process.

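To start the attack, we must find the words that matter most for the positive sentiment of the statement, such as "fantastic". These words are then replaced with synonyms. TextFooler draws its candidates from nearest neighbors in a counter-fitted word embedding space; other recipes, such as PWWS, take synonyms from the WordNet database instead.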

We want to preserve grammatical correctness and the original meaning as much as possible. The greedy algorithm works iteratively, checking the model's prediction after each replacement.

The process continues until the prediction changes or no further useful substitutions can be made. In this example, the attack succeeds by replacing "fantastic" with an equivalent such as "great," which flips the predicted sentiment from positive to negative.

The original statement "The movie was fantastic" has thus been changed to "The movie was great," and the model's prediction has changed from positive to negative. This is evidence of a vulnerability in the model.

TextFooler is a clever technique for exposing weaknesses in sentiment analysis models. By substituting key words with synonyms while keeping the context coherent, it can significantly change model predictions. This highlights the need for training and evaluation practices that reduce vulnerability to such adversarial attacks.
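The greedy process can be sketched in a few lines. The lexicon-based "model", its weights, and the synonym table are hypothetical; candidates come from a hard-coded dictionary rather than counter-fitted embeddings. Only the control flow mirrors the TextFooler approach: rank words by how much deleting each one lowers confidence, then swap the most important words first until the prediction flips. The weights are chosen so that, as in the example above, "great" flips the toy model to negative.

```python
from typing import Dict, List, Optional

# Hypothetical toy classifier weights and candidate table.
WEIGHTS: Dict[str, float] = {"fantastic": 2.0, "great": 1.0, "movie": 0.1}
SYNONYMS: Dict[str, List[str]] = {"fantastic": ["great", "fine"], "movie": ["film"]}
STOPWORDS = {"the", "was"}


def prob_positive(words: List[str]) -> float:
    score = sum(WEIGHTS.get(w, 0.0) for w in words)
    return score / (score + 1.5)  # toy "probability" in [0, 1)


def p_label(words: List[str], label: str) -> float:
    p = prob_positive(words)
    return p if label == "positive" else 1.0 - p


def predict(words: List[str]) -> str:
    return "positive" if prob_positive(words) > 0.5 else "negative"


def rank_by_importance(words: List[str], label: str) -> List[int]:
    """Order non-stopword indices by how much deleting them lowers P(label)."""
    base = p_label(words, label)
    drops = {i: base - p_label(words[:i] + words[i + 1:], label)
             for i, w in enumerate(words) if w not in STOPWORDS}
    return sorted(drops, key=drops.get, reverse=True)


def greedy_attack(sentence: str) -> Optional[str]:
    words = sentence.split()
    label = predict(words)
    for i in rank_by_importance(words, label):
        best_words, best_p = words, p_label(words, label)
        for candidate in SYNONYMS.get(words[i], []):
            trial = words[:i] + [candidate] + words[i + 1:]
            if predict(trial) != label:
                return " ".join(trial)          # prediction flipped: success
            if p_label(trial, label) < best_p:  # otherwise keep the most damaging swap
                best_words, best_p = trial, p_label(trial, label)
        words = best_words
    return None  # no substitution flipped the prediction


print(greedy_attack("the movie was fantastic"))
```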

Enhancing Adversarial NLP Attacks with TextAttack's AttackedText Object

In conventional NLP attack implementations, modifying tokenized text can lead to problems with capitalization and word segmentation. Tokenization breaks text into separate words or tokens, which can disrupt its original capitalization and the boundaries between words.

For example, tokenizing "The movie was fantastic!" might yield "the", "movie", "was", "fantastic!", which loses the capital letter at the start. This makes transformations harder. Such problems can weaken the consistency and validity of adversarial examples and impede accurate assessment of NLP models' robustness.

TextAttack's AttackedText object addresses these issues by letting transformations operate directly on the original text instead of a tokenized version. The object keeps the original input and preserves its attributes, such as capitalization and word boundaries.

The helper methods included with this object support controlled modifications and handle challenges such as capitalization and word-boundary adjustments. For example, a transformation that substitutes "Hello" with "Hi" will not produce an erroneous output like "hi World!"; instead, it correctly transforms "Hello World!" into "Hi World!".
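The difference between editing tokens and editing the original string can be shown in plain Python. `replace_word` and `match_case` below are hypothetical helpers written for this illustration; TextAttack's AttackedText offers its own methods (such as `replace_word_at_index`) with their own behavior.

```python
import re


def match_case(template: str, word: str) -> str:
    """Copy the capitalization pattern of `template` onto `word` (hypothetical helper)."""
    if len(template) > 1 and template.isupper():
        return word.upper()
    if template[:1].isupper():
        return word[:1].upper() + word[1:]
    return word


def replace_word(text: str, index: int, new_word: str) -> str:
    """Replace the index-th word directly in the original string.

    Words are runs of letters, digits, underscores or apostrophes; everything
    between them (spaces, punctuation) is left untouched.
    """
    match = list(re.finditer(r"[\w']+", text))[index]
    replacement = match_case(match.group(), new_word)
    return text[: match.start()] + replacement + text[match.end():]


# Naive approach: lowercase and split, edit the token, join again.
tokens = "Hello World!".lower().split()  # ['hello', 'world!']
tokens[0] = "hi"
naive = " ".join(tokens)                 # 'hi world!' (capitalization lost)

# Editing the original string keeps capitalization and punctuation.
careful = replace_word("Hello World!", 0, "hi")  # 'Hi World!'
print(naive, "|", careful)
```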

Using the AttackedText object brings several advantages. It generates adversarial examples that keep the original text structure and capitalization intact during transformations. Accurate word segmentation and capitalization make attacks on models more reliable, which leads to a more trustworthy evaluation of model robustness.

Moreover, developers can focus on designing effective changes without the tokenization hurdles that hinder the adoption of new attacks. In short, the AttackedText component substantially strengthens TextAttack's adversarial transformation capabilities.


Improving Efficiency in TextAttack with Caching

During an attack with TextAttack's search methods, the same input is often encountered multiple times. Storing (caching) previously computed results can improve efficiency in such cases.

TextAttack can quickly retrieve a previously computed model output, and the result of checking whether all constraints were met, without repeating the work. This technique is known as memoization. It makes search methods run faster and improves the overall efficiency of an attack.
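Memoization can be illustrated with Python's built-in `functools.lru_cache`. The "model" here is a toy function that counts how often it really runs; TextAttack manages its own caches internally, so this only shows the principle.

```python
from functools import lru_cache

model_calls = 0  # counts real (non-cached) model evaluations


@lru_cache(maxsize=1024)
def model_output(text: str) -> float:
    """Stand-in for an expensive model query (toy score)."""
    global model_calls
    model_calls += 1
    return len(text) / 100


# During a search, the same candidate text can come up several times.
candidates = [
    "The movie was fantastic.",
    "The movie was great.",
    "The movie was fantastic.",  # duplicate: served from the cache
    "The movie was great.",      # duplicate: served from the cache
]
scores = [model_output(c) for c in candidates]
print(model_calls, model_output.cache_info().hits)  # 2 real calls, 2 cache hits
```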

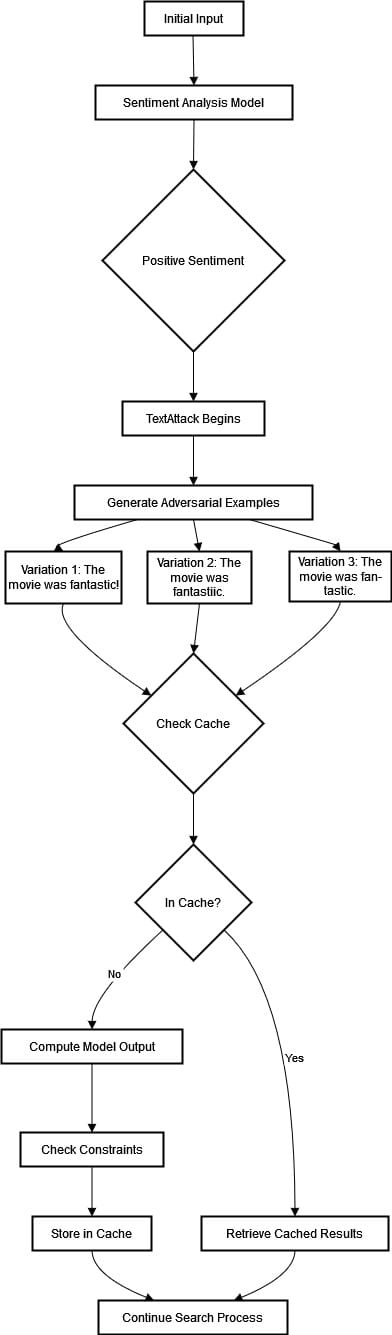

Let's consider an application of TextAttack in which we assess the robustness of a sentiment analysis model by testing it with adversarial examples. The diagram visualizes the process, which works as follows.

Adversarial example generation with caching:

- Initial input: The opening statement reads "The movie was fantastic." The sentiment analyzer correctly recognizes its tone as positive.

- Adversarial attack: TextAttack applies an attack technique in which small modifications are made to the original sentence to manipulate the model's prediction. Variants with altered wording, for instance "The movie was fantastic!" or "The movie was fantastic.", may be generated along the way.

- Caching: Duplicate variants are recognized as they appear, so they are not processed again. For instance, if "The movie was fantastic." is generated repeatedly, the system reuses the result computed at the first encounter instead of querying the model again. Constraint checks are cached in the same way, so constraints are not re-verified for a text that has already been checked.

- Efficiency gain: When the same phrase, "The movie was fantastic," comes up again later in the search, TextAttack does not need to recompute the model output or re-check the constraints, because it retrieves the cached results. This saves time and computing power and speeds up the search.

Custom Transformation

To get started with creating transformations in TextAttack, let's try a simple one: replacing any word with the word "banana." TextAttack has an abstract class called WordSwap that handles breaking sentences into words while avoiding swapping stopwords. By extending WordSwap and implementing just one function, `_get_replacement_words`, we can substitute every word with "banana".

Before running the following code, please run this command in your environment:

```bash
pip3 install textattack[tensorflow]
```

The following code defines a custom transformation class, BananaWordSwap, that inherits from WordSwap. It replaces any given word in the input with the word "banana".

```python
from textattack.transformations import WordSwap


class BananaWordSwap(WordSwap):
    """Transforms an input by replacing any word with 'banana'."""

    # We don't need a constructor, since our class doesn't require any parameters.

    def _get_replacement_words(self, word):
        """Returns 'banana', no matter what 'word' was originally.

        Returns a list with one item, since `_get_replacement_words` is intended
        to return a list of candidate replacement words.
        """
        return ["banana"]
```
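To see what the base class contributes, here is a simplified, pure-Python imitation of the pattern. `WordSwapSketch` and `BananaWordSwapSketch` are hypothetical classes, not TextAttack's: the base class walks over the non-stopword positions and calls `_get_replacement_words`, so a subclass only decides which words to propose.

```python
from typing import List

STOPWORDS = {"the", "a", "was", "is"}  # hypothetical, tiny stopword list


class WordSwapSketch:
    """Hypothetical imitation of the WordSwap idea (not TextAttack's class)."""

    def _get_replacement_words(self, word: str) -> List[str]:
        raise NotImplementedError

    def transform(self, text: str) -> List[str]:
        words = text.split()
        results: List[str] = []
        for i, word in enumerate(words):
            if word.lower() in STOPWORDS:
                continue  # leave stopwords alone
            for new_word in self._get_replacement_words(word):
                if new_word != word:
                    results.append(" ".join(words[:i] + [new_word] + words[i + 1:]))
        return results


class BananaWordSwapSketch(WordSwapSketch):
    def _get_replacement_words(self, word: str) -> List[str]:
        return ["banana"]


candidates = BananaWordSwapSketch().transform("The movie was great")
print(candidates)  # ['The banana was great', 'The movie was banana']
```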

Using the Transformation

The transformation has been chosen, but a few items are still missing to complete the attack. We also need to choose a search method and constraints. In addition, we need a goal function, a model, and a dataset before we can use this transformation. (The goal function indicates the task our model performs, in this case classification, and the type of attack, in this case an untargeted attack.)
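For intuition, an untargeted goal is satisfied as soon as the predicted class differs from the true class, while a targeted goal requires one specific class. The functions below are a hypothetical sketch over a list of class probabilities (four classes, as in AG News). Scoring an untargeted attack as 1 minus the probability of the true class is a common choice and is assumed here.

```python
from typing import List


def predicted_class(probs: List[float]) -> int:
    return max(range(len(probs)), key=lambda i: probs[i])


def untargeted_goal(probs: List[float], ground_truth: int) -> bool:
    """Succeeds once the model predicts anything other than the true class."""
    return predicted_class(probs) != ground_truth


def untargeted_score(probs: List[float], ground_truth: int) -> float:
    """Assumed attacker score: higher means the true class is less likely."""
    return 1.0 - probs[ground_truth]


def targeted_goal(probs: List[float], target: int) -> bool:
    """Succeeds only when the model predicts the chosen target class."""
    return predicted_class(probs) == target


# Hypothetical output of a 4-class news classifier whose true label is 0.
probs = [0.1, 0.6, 0.2, 0.1]
print(untargeted_goal(probs, 0), targeted_goal(probs, 2), untargeted_score(probs, 0))
```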

Creating the Goal Function, Model, and Dataset

Our goal is to attack a classification model, so we will use the UntargetedClassification class. For the model, let's choose BERT fine-tuned for news categorization on the AG News dataset. Many such models are conveniently available on HuggingFace's Model Hub, and TextAttack integrates with these models and their datasets.

```python
# Import the model
import transformers
from textattack.models.wrappers import HuggingFaceModelWrapper

model = transformers.AutoModelForSequenceClassification.from_pretrained(
    "textattack/bert-base-uncased-ag-news"
)
tokenizer = transformers.AutoTokenizer.from_pretrained(
    "textattack/bert-base-uncased-ag-news"
)
model_wrapper = HuggingFaceModelWrapper(model, tokenizer)

# Create the goal function using the model
from textattack.goal_functions import UntargetedClassification

goal_function = UntargetedClassification(model_wrapper)

# Import the dataset (the AG News test split)
from textattack.datasets import HuggingFaceDataset

dataset = HuggingFaceDataset("ag_news", None, "test")
```

Creating the Attack

Let's use a greedy search method. We'll keep the constraints minimal for now: we only prevent modifying the same word twice and modifying stopwords.

```python
from textattack.search_methods import GreedySearch
from textattack.constraints.pre_transformation import (
    RepeatModification,
    StopwordModification,
)
from textattack import Attack

# We'll use our BananaWordSwap class as the attack transformation.
transformation = BananaWordSwap()
# We'll prevent modifying already-modified indices and stopwords.
constraints = [RepeatModification(), StopwordModification()]
# We'll use the greedy search method.
search_method = GreedySearch()
# Now, let's build the attack from the four components:
attack = Attack(goal_function, constraints, transformation, search_method)
```

We can print our attack to see all of its parameters:

```python
print(attack)
```

Output:

```
Attack(
  (search_method): GreedySearch
  (goal_function): UntargetedClassification
  (transformation): BananaWordSwap
  (constraints):
    (0): RepeatModification
    (1): StopwordModification
  (is_black_box): True
)
```
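GreedySearch keeps only the single best candidate at each step; to our understanding, TextAttack implements it as a beam search with a beam width of 1. The sketch below is a hypothetical, standalone beam search over single-word swaps with a toy lexicon score, showing how a `beam_width` parameter generalizes greedy search.

```python
from typing import Callable, Dict, List, Optional

Words = List[str]


def beam_search(
    words: Words,
    score: Callable[[Words], float],  # higher = better for the attacker
    goal: Callable[[Words], bool],
    substitutes: Dict[str, List[str]],
    beam_width: int = 1,
    max_steps: int = 3,
) -> Optional[Words]:
    beam = [words]
    for _ in range(max_steps):
        # Expand every text in the beam by one single-word swap.
        candidates = [
            text[:i] + [sub] + text[i + 1:]
            for text in beam
            for i, w in enumerate(text)
            for sub in substitutes.get(w, [])
        ]
        if not candidates:
            return None
        candidates.sort(key=score, reverse=True)
        if goal(candidates[0]):
            return candidates[0]
        beam = candidates[:beam_width]  # beam_width=1 is plain greedy search
    return None


# Hypothetical toy lexicon "model": positive while the summed weight is above zero.
WEIGHTS = {"fantastic": 3.0, "fun": 1.0, "fine": 1.5, "okay": 0.5, "dull": -2.0}
SUBS = {"fantastic": ["fine", "okay"], "fun": ["dull"]}


def positivity(text: Words) -> float:
    return sum(WEIGHTS.get(w, 0.0) for w in text)


greedy = beam_search(["fantastic", "fun"], lambda t: -positivity(t),
                     lambda t: positivity(t) <= 0, SUBS, beam_width=1)
wider = beam_search(["fantastic", "fun"], lambda t: -positivity(t),
                    lambda t: positivity(t) <= 0, SUBS, beam_width=2)
print(greedy, wider)
```

A wider beam costs more model queries per step but is less likely to get stuck behind an early choice that looked best locally.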

Using the Attack

Let's use our attack on 10 samples.

```python
from tqdm import tqdm  # tqdm provides us a nice progress bar.
from textattack.loggers import CSVLogger  # tracks a dataframe for us.
from textattack.attack_results import SuccessfulAttackResult
from textattack import Attacker
from textattack import AttackArgs
from textattack.datasets import Dataset

attack_args = AttackArgs(num_examples=10)
attacker = Attacker(attack, dataset, attack_args)
attack_results = attacker.attack_dataset()

# The following legacy tutorial code shows how the Attack API works in detail.
# logger = CSVLogger(color_method='html')
# num_successes = 0
# i = 0
# while num_successes < 10:
#     example, ground_truth_output = dataset[i]
#     i += 1
#     result = attack.attack(example, ground_truth_output)
#     if isinstance(result, SuccessfulAttackResult):
#         logger.log_attack_result(result)
#         num_successes += 1
#         print(f'{num_successes} of 10 successes complete.')
```

Output:

```
+-------------------------------+--------+
| Attack Results                |        |
+-------------------------------+--------+
| Number of successful attacks: | 8      |
| Number of failed attacks:     | 2      |
| Number of skipped attacks:    | 0      |
| Original accuracy:            | 100.0% |
| Accuracy under attack:        | 20.0%  |
| Attack success rate:          | 80.0%  |
| Average perturbed word %:     | 18.71% |
| Average num. words per input: | 63.0   |
| Avg num queries:              | 934.0  |
+-------------------------------+--------+
```

In the code above, the TextAttack library is used to carry out an adversarial attack on a dataset. The necessary modules are imported, including tqdm for progress bars and CSVLogger for recording attack results. We used the AttackArgs class to run the attack on 10 examples.

We created the Attacker object with the attack, the corresponding dataset, and the attack arguments. Next, we called the attack_dataset method to carry out the attack and obtain the results. Alternatively, as the commented-out section of the script shows, we can iterate over the dataset manually, attack each example, and log the result whenever the attack is successful.

The adversarial attack on the text classification model achieved a success rate of 80%. Of the ten examples attacked, eight were successfully misclassified, which dropped the model's accuracy from a perfect 100% to just 20%.

No attacks were skipped, which means the model classified all ten examples correctly before the attack (an example is skipped when the model already gets it wrong). On average, 18.71% of the words in each input were perturbed, with inputs containing about 63 words. The model was queried about 934 times per input during the attack.
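The headline percentages follow from the three counts. The helper below encodes our reading of the definitions (skipped examples are those the model already misclassified, so they are not attacked), and it reproduces the figures in the table.

```python
def summarize(successful: int, failed: int, skipped: int) -> dict:
    """Derive the summary percentages from the raw attack counts."""
    total = successful + failed + skipped
    attacked = successful + failed  # skipped = already misclassified, not attacked
    return {
        "original_accuracy": 100.0 * attacked / total,
        "accuracy_under_attack": 100.0 * failed / total,
        "attack_success_rate": 100.0 * successful / attacked if attacked else 0.0,
    }


print(summarize(successful=8, failed=2, skipped=0))
```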

Visualizing Attack Results

In the code below, we use CSVLogger to log the AttackResult objects. This logger stores the results in a data frame, which makes them easy to access and display. Setting color_method to 'html' highlights the differences between the original and perturbed text with HTML coloring for visual clarity. We use pandas and IPython's display utilities for this.

```python
import pandas as pd
from IPython.display import display, HTML

pd.options.display.max_colwidth = (
    480  # increase column width so we can actually read the examples
)

logger = CSVLogger(color_method="html")
for result in attack_results:
    if isinstance(result, SuccessfulAttackResult):
        logger.log_attack_result(result)

results = pd.DataFrame.from_records(logger.row_list)
display(HTML(results[["original_text", "perturbed_text"]].to_html(escape=False)))
```

Note: Run the code to visualize the results.
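If you want a similar highlight outside TextAttack, word-level differences can be computed with Python's difflib. `highlight_changes` is a hypothetical helper for illustration; TextAttack produces its own coloring when color_method is set to 'html'.

```python
import difflib
import html


def highlight_changes(original: str, perturbed: str) -> str:
    """Wrap words of `perturbed` that differ from `original` in a red <span>."""
    a, b = original.split(), perturbed.split()
    out = []
    for tag, _i1, _i2, j1, j2 in difflib.SequenceMatcher(a=a, b=b).get_opcodes():
        for word in b[j1:j2]:
            escaped = html.escape(word)
            out.append(escaped if tag == "equal" else f'<span style="color:red">{escaped}</span>')
    return " ".join(out)


print(highlight_changes("The movie was great", "The banana was great"))
```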

Conclusion

This article provides an in-depth look at adversarial robustness in NLP models, with particular emphasis on the TextAttack Python framework. TextAttack is designed to streamline the generation of adversarial attacks and to support defenses against them.

The framework offers a modular architecture built on four fundamental components: Goal Function, Constraints, Transformation, and Search Method. This structure lets users build customized attacks efficiently. It also enables easy adaptation and standardized performance evaluation through benchmarking.

The framework also supports adversarial training and data augmentation, which can improve model robustness in real-world applications. The worked examples in this article demonstrate its practical utility across a range of tasks.