Introduction

YOLO (You Only Look Once) is a popular object detection algorithm that has revolutionized the field of computer vision. It is fast and efficient, making it an excellent choice for real-time object detection tasks. YOLO NAS (Neural Architecture Search) is a recent implementation of the YOLO algorithm that uses NAS to search for the optimal architecture for object detection.

In this article, we will provide a comprehensive overview of the architecture of YOLO NAS, highlighting its unique features, advantages, and potential use cases. We will cover its neural network design, its optimization techniques, and the specific improvements it offers over traditional YOLO models. We will also explain how YOLO NAS can be integrated into existing computer vision pipelines.

AutoNAC: Revolutionizing Neural Architecture Search in YOLO-NAS

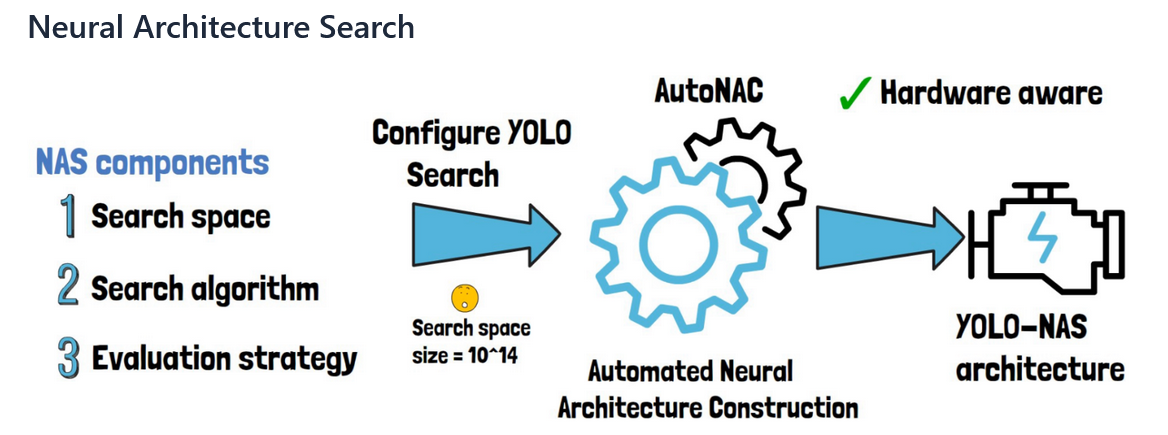

Deep learning has witnessed a revolutionary approach known as Neural Architecture Search (NAS), which automates the process of designing optimal neural network architectures. AutoNAC (Automated Neural Architecture Construction), one of the various NAS methodologies, is notable for its pioneering role in creating YOLO-NAS.

Key Components of NAS

- Search Space: Defines the set of all possible architectures that can be generated.

- Search Strategy: The algorithm used to explore the search space, such as reinforcement learning, evolutionary algorithms, or gradient-based methods.

- Performance Estimation: Techniques to evaluate the performance of candidate architectures efficiently.
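The three components above can be illustrated with a toy random-search loop. This is only a sketch under stated assumptions: the search space, the proxy scoring formula, and all names (`SEARCH_SPACE`, `estimate_performance`, `random_search`) are hypothetical and have nothing to do with how AutoNAC is actually implemented.

```python
import random

# Search space: every architecture is one choice per key (hypothetical toy space).
SEARCH_SPACE = {
    "depth": [2, 4, 6],
    "width": [32, 64, 128],
    "kernel": [3, 5],
}

def sample_architecture(rng):
    """Search strategy: plain random sampling from the search space."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def estimate_performance(arch):
    """Performance estimation: a made-up proxy score standing in for a short training run.

    Larger networks get a higher proxy accuracy but pay a proxy latency penalty.
    """
    capacity = arch["depth"] * arch["width"] * arch["kernel"]
    proxy_accuracy = capacity / (capacity + 500.0)
    proxy_latency = capacity / 4000.0
    return proxy_accuracy - 0.5 * proxy_latency

def random_search(num_trials=20, seed=0):
    """Sample architectures and keep the one with the best proxy score."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = estimate_performance(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score

best_arch, best_score = random_search()
print(best_arch, round(best_score, 3))
```

Real NAS systems replace random sampling with a smarter strategy and replace the formula with measured accuracy and latency, but the loop has the same shape.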

The operational mechanism of AutoNAC

AutoNAC is Deci AI's proprietary NAS technology, which was developed to design the YOLO-NAS architecture. It automates the search for an optimal neural network configuration.

AutoNAC works through a multi-step process designed to navigate the intricacies of neural architecture design:

- Defining the Search Space: The first step of AutoNAC involves establishing a comprehensive search space that spans a wide range of potential architectures. This space includes multiple configurations of convolutional layers, activation functions, and other architectural components.

- Search Strategy: AutoNAC uses sophisticated search methods to explore the designated search space. These methods may incorporate reinforcement learning, in which an agent learns to select architectural components that maximize efficiency and performance. They can also incorporate evolutionary algorithms, through which architectures are iteratively evolved based on their performance metrics.

- Performance Estimation: AutoNAC employs a combination of proxy tasks and early stopping to speed up the evaluation of candidate architectures. This enables quick assessment without requiring full training, which greatly accelerates the search.

- Optimization: AutoNAC optimizes the selected architectures against several criteria, such as accuracy, latency, and resource utilization. It uses multi-objective optimization to ensure that the resulting architecture is fit for deployment in practical settings.
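Multi-objective optimization, as described in the last step, usually means keeping the candidates that offer the best trade-offs rather than a single winner. The sketch below shows one common way to express that idea, a Pareto front over accuracy and latency. The candidate names and numbers are hypothetical, and this is not a description of AutoNAC's internal selection logic.

```python
from typing import NamedTuple

class Candidate(NamedTuple):
    name: str
    accuracy: float    # higher is better (e.g. mAP on a proxy task)
    latency_ms: float  # lower is better

def dominates(a, b):
    """True if `a` is at least as good as `b` on both objectives and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
    strictly_better = a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
    return no_worse and strictly_better

def pareto_front(candidates):
    """Keep only the candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]

# Hypothetical numbers, for illustration only.
candidates = [
    Candidate("arch_a", accuracy=0.47, latency_ms=3.0),
    Candidate("arch_b", accuracy=0.51, latency_ms=5.5),
    Candidate("arch_c", accuracy=0.46, latency_ms=4.0),  # dominated by arch_a
    Candidate("arch_d", accuracy=0.52, latency_ms=8.0),
]

front = pareto_front(candidates)
print([c.name for c in front])  # ['arch_a', 'arch_b', 'arch_d']
```

Each architecture left on the front is the most accurate option at its latency level, so the final choice depends on the latency budget of the target hardware.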

Quantization Aware Architecture in YOLO-NAS

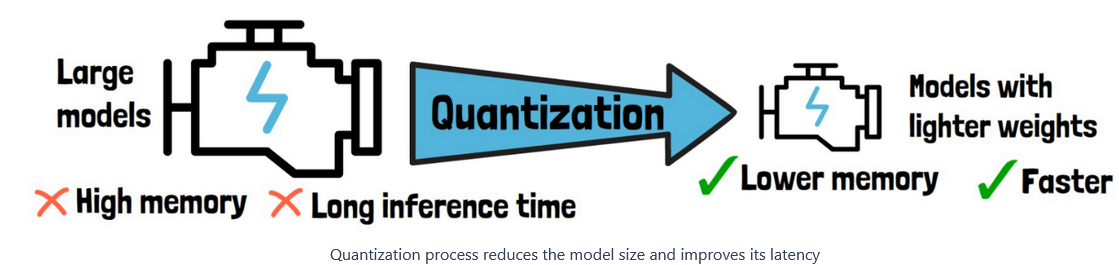

The Quantization Aware Architecture (QAA) marks a leap in deep learning, particularly for boosting the efficiency of neural networks. Quantization maps high-precision floating-point numbers to lower-precision numbers, such as 8-bit integers.
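To make the float-to-integer mapping concrete, here is a minimal sketch of asymmetric (affine) INT8 quantization in plain Python. It illustrates the general technique only; the function names and sample weights are assumptions, and YOLO-NAS relies on framework tooling rather than code like this.

```python
def quantization_params(values, num_bits=8):
    """Compute scale and zero-point for asymmetric (affine) quantization."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1  # -128..127 for INT8
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)         # range must include 0
    scale = (hi - lo) / (qmax - qmin) or 1.0                      # avoid a zero scale
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point, qmin, qmax

def quantize(values, scale, zero_point, qmin, qmax):
    """Map floats to integers, clamping to the representable range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]

def dequantize(q_values, scale, zero_point):
    """Map integers back to approximate floats."""
    return [(q - zero_point) * scale for q in q_values]

weights = [-1.2, -0.3, 0.0, 0.45, 2.1]  # hypothetical float weights
scale, zp, qmin, qmax = quantization_params(weights)
q = quantize(weights, scale, zp, qmin, qmax)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

The round trip is lossy: each restored value can differ from the original by up to about half of `scale`. That rounding error is the source of the accuracy drop that quantization-aware designs try to keep small.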

Sophisticated quantization techniques are central to the efficiency of YOLO-NAS. The key components are as follows:

- Quantization-Aware Modules: YOLO-NAS employs specialized quantization-aware modules known as QSP (Quantization-Aware Spatial Pyramid) and QCI (Quantization-Aware Convolutional Integrations). These components use re-parameterization techniques to enable 8-bit quantization while minimizing accuracy loss during post-training quantization, so the model preserves its performance and accuracy.

- Hybrid Quantization Method: Rather than quantizing the whole model uniformly, YOLO-NAS takes a more selective approach that targets specific parts of the model for quantization.

- Minimal precision drop: When converted to its INT8 quantized version, YOLO-NAS experiences only a minimal drop in precision. This distinguishes it from its counterparts and highlights the effectiveness of its quantization strategy.

- Quantization-friendly architecture: The basic building blocks of YOLO-NAS are designed to quantize well, so the model remains high-performing after quantization.

- Advanced training and quantization techniques: YOLO-NAS uses advanced training methods and post-training quantization techniques to improve its overall efficiency.

- Adaptive quantization: YOLO-NAS incorporates an adaptive quantization technique, which selectively skips quantization for specific layers to balance latency, throughput, and accuracy loss.
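The selective and adaptive ideas in the list above can be sketched as a simple greedy rule: quantize the layers that save the most latency per unit of accuracy lost, and skip the sensitive ones. Everything here is an assumption made for illustration: the layer names, the per-layer measurements, and the simplification that accuracy drops add up across layers. It is not the procedure Deci used.

```python
def choose_layers_to_quantize(layers, max_accuracy_drop):
    """Greedy selective quantization: keep sensitive layers in float, quantize the rest.

    `layers` maps a layer name to (accuracy_drop, latency_saving_ms), both hypothetical
    measurements obtained by quantizing that layer alone.
    """
    # Prefer layers that save the most latency per unit of accuracy lost.
    ranked = sorted(layers.items(),
                    key=lambda item: item[1][1] / (item[1][0] + 1e-9),
                    reverse=True)
    quantized, total_drop, total_saving = [], 0.0, 0.0
    for name, (drop, saving) in ranked:
        if total_drop + drop <= max_accuracy_drop:
            quantized.append(name)
            total_drop += drop
            total_saving += saving
    skipped = [name for name in layers if name not in quantized]
    return quantized, skipped, total_drop, total_saving

layers = {
    "backbone.stage1": (0.05, 1.2),
    "backbone.stage2": (0.10, 1.5),
    "neck.fuse":       (0.40, 0.6),  # sensitive layer: large drop, small saving
    "head.cls":        (0.30, 0.4),
}
quantized, skipped, drop, saving = choose_layers_to_quantize(layers, max_accuracy_drop=0.5)
print("quantize:", quantized)
print("keep in float:", skipped)
```

With this made-up data, the sensitive `neck.fuse` layer stays in floating point while the other three layers are quantized within the accuracy budget.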

The drop in mean Average Precision (mAP) is only 0.51, 0.65, and 0.45 points for the S, M, and L versions of YOLO-NAS, respectively. Other models usually lose 1-2 points when quantized.

These techniques give YOLO-NAS an architecture that retains strong object detection performance. Quantization allows fast inference with lower latency without losing too much accuracy, which makes YOLO-NAS a great starting point for customizing models that need real-time inference.

Overcoming Quantization Challenges in YOLO-NAS with QARepVGG

QARepVGG is an improved version of the popular RepVGG block used in object detection models. It significantly reduces the accuracy drop after quantization. The original RepVGG block replaces each convolutional layer with multiple parallel operations during training, which are merged back into a single convolution at inference time.

However, the accuracy of RepVGG decreases when it is directly quantized to INT8. QARepVGG modifies RepVGG to fix the quantization issues that stem from its multi-branch design. It reduces the number of parameters and improves the detection accuracy and speed of the model. Deci's researchers set up their neural architecture search algorithm to incorporate QARepVGG into YOLO-NAS, which boosted its quantization-friendliness and efficiency.
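The multi-branch design mentioned above rests on structural re-parameterization: parallel 3x3, 1x1, and identity branches can be folded into one equivalent 3x3 convolution. The sketch below demonstrates this on a single-channel image in plain Python. It is a simplification that ignores batch normalization and multiple channels, it shows the plain RepVGG idea rather than the QARepVGG changes, and all names and values are hypothetical.

```python
def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with zero padding; kernel is k x k with k odd."""
    h, w, k = len(image), len(image[0]), len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:
                        acc += image[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out

def fuse_branches(kernel_3x3, weight_1x1):
    """Merge the 3x3, 1x1, and identity branches into one equivalent 3x3 kernel."""
    fused = [row[:] for row in kernel_3x3]
    fused[1][1] += weight_1x1  # a 1x1 conv is a 3x3 kernel that is zero except at the centre
    fused[1][1] += 1.0         # the identity branch is a centre weight of 1
    return fused

image = [[1.0, 2.0, 0.0], [0.5, -1.0, 3.0], [2.0, 1.0, -0.5]]
k3 = [[0.1, 0.0, -0.2], [0.3, 0.5, 0.0], [-0.1, 0.2, 0.4]]
w1 = 0.7

# Training-time form: three parallel branches whose outputs are summed.
branch_sum = [[a + w1 * x + x for a, x in zip(row3, row_img)]
              for row3, row_img in zip(conv2d_same(image, k3), image)]

# Inference-time form: a single 3x3 convolution with the fused kernel.
fused_out = conv2d_same(image, fuse_branches(k3, w1))

max_diff = max(abs(a - b) for ra, rb in zip(branch_sum, fused_out) for a, b in zip(ra, rb))
print(max_diff)
```

The two forms agree up to floating-point rounding, which is why the extra branches add no inference cost once they are fused.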

YOLO NAS Architecture

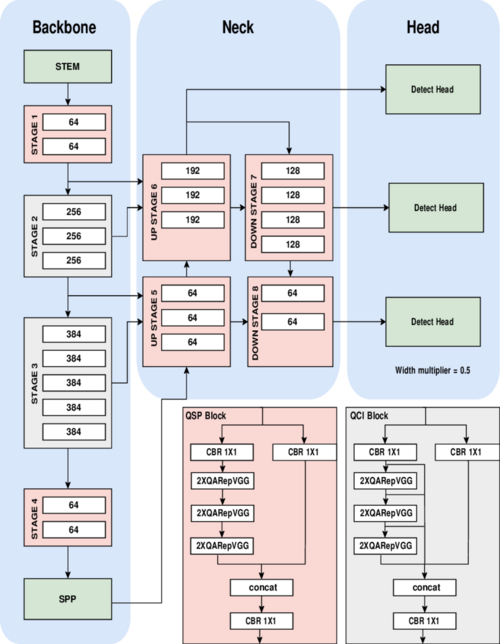

The model uses techniques like attention mechanisms, quantization aware blocks, and reparametrization at inference time. These techniques help YOLO-NAS identify objects of varying sizes and complexities better than other detection models. The YOLO-NAS architecture consists of three main components: the backbone, the neck, and the head.

These components were designed and optimized using the NAS method, which results in a unified and robust object detection system.

Backbone

This foundational element of YOLO-NAS is responsible for extracting features from input images. It uses a series of convolutional layers and customized blocks that are optimized to capture both low-level and high-level features effectively.

Neck

The YOLO-NAS neck functions as a bridge between the backbone and the detection head. It aggregates and enhances features, integrating information from various scales to improve the model's ability to detect objects that vary in size.

YOLO-NAS employs a feature pyramid network (FPN) architecture in its neck, incorporating cross-stage partial connections and adaptive feature fusion. This design optimizes information flow across the network's levels and improves the model's capacity to handle scale variations in object detection tasks.

Head

The detection head is where the final object predictions are made. YOLO-NAS uses a multi-scale detection head, similar to other recent YOLO variants. However, it incorporates several optimizations to improve both accuracy and efficiency:

- Adaptive anchor-free detection: YOLO-NAS takes an adaptive anchor-free approach rather than relying on a fixed set of predefined anchor boxes. This gives it more flexibility in predicting bounding boxes accurately.

- Multi-level feature fusion: The head integrates features from different levels of the neck, which improves its ability to detect objects at varying scales.

- Efficient channel attention: A lightweight channel attention mechanism focuses the head on the most relevant features for detection.
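To give a feel for what "anchor-free" means in the first point above, the sketch below decodes boxes from per-cell distances to the four box edges, a scheme used by several anchor-free detectors. It is a generic illustration under assumed conventions (distances expressed in units of the stride, measured from the cell centre) and is not the exact YOLO-NAS head.

```python
def decode_anchor_free(distances, grid_size, stride):
    """Turn per-cell (left, top, right, bottom) distances into (x1, y1, x2, y2) boxes.

    `distances[row][col]` holds distances in units of `stride`, measured from the
    centre of that grid cell. No predefined anchor boxes are involved.
    """
    boxes = []
    for row in range(grid_size):
        for col in range(grid_size):
            cx, cy = (col + 0.5) * stride, (row + 0.5) * stride
            left, top, right, bottom = (d * stride for d in distances[row][col])
            boxes.append((cx - left, cy - top, cx + right, cy + bottom))
    return boxes

# Hypothetical predictions for a 2x2 feature map with stride 32.
distances = [
    [(1.0, 1.0, 1.0, 1.0), (0.5, 0.5, 0.5, 0.5)],
    [(1.0, 2.0, 1.0, 2.0), (0.25, 0.25, 0.25, 0.25)],
]
boxes = decode_anchor_free(distances, grid_size=2, stride=32)
for box in boxes:
    print(box)
```

Because each cell predicts its box directly, there is no anchor set to tune per dataset, which is the flexibility the anchor-free approach refers to.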

Benefits of YOLO NAS

YOLO NAS has several advantages over traditional YOLO models, making it an excellent choice for object detection tasks:

- Efficiency: YOLO NAS is more efficient than traditional YOLO models. That means we can perform real-time object detection even on resource-constrained devices such as mobile phones and drones.

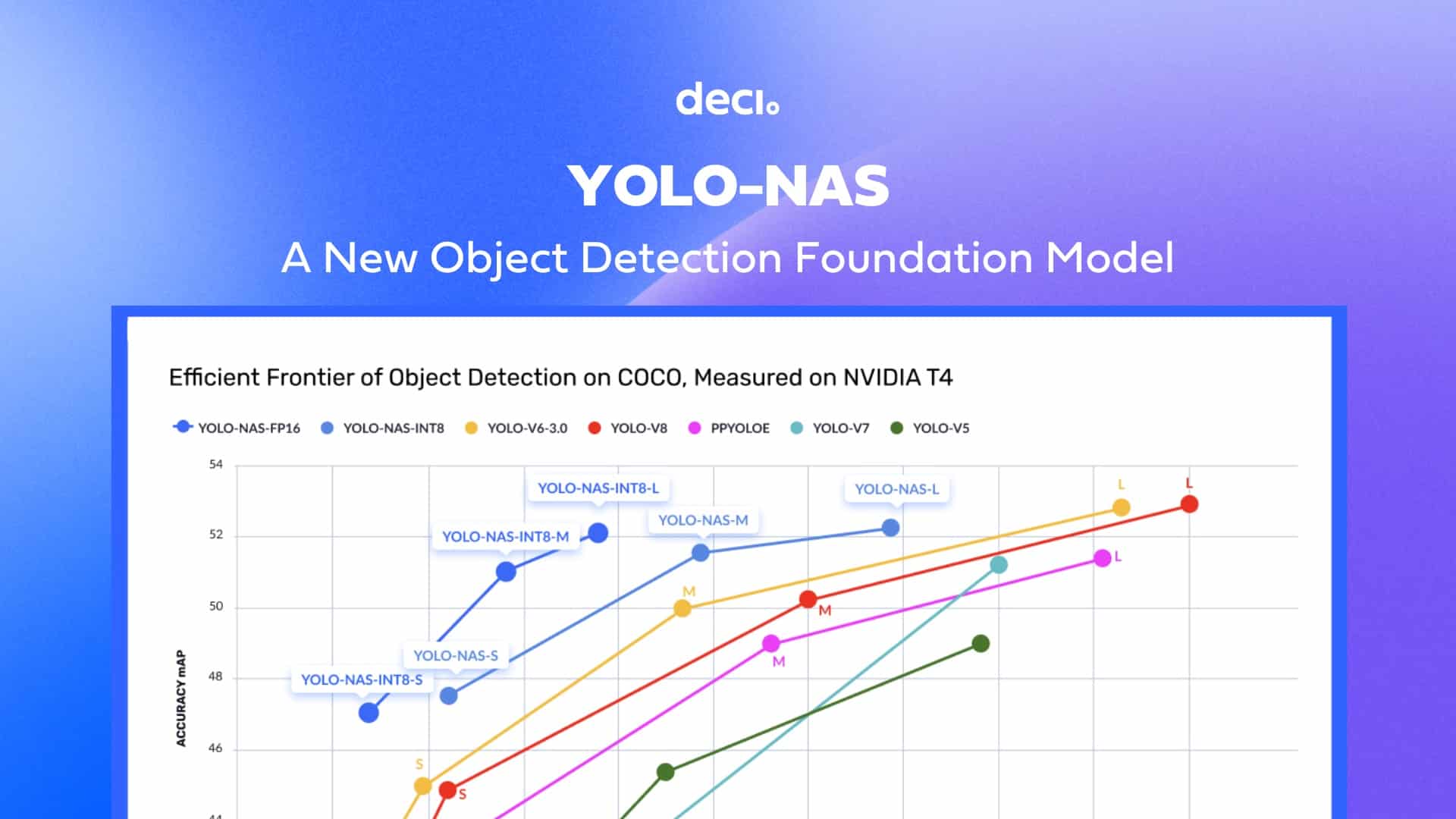

- Accuracy: YOLO NAS doesn't skimp on accuracy. It performs well on the standard benchmarks, so we can rely on it for object detection tasks.

- Optimal architecture: It uses NAS to find the best architecture, resulting in a more efficient and accurate model.

- Robustness: YOLO NAS is robust to occlusion and clutter, making it suitable for object detection in complex environments.

Potential Use Cases of YOLO NAS

Suppose a company wants to develop an AI system that detects objects in a video stream in real time. They can use YOLO-NAS as a foundational model to build their AI system.

They can fine-tune the pre-trained weights of YOLO-NAS on their specific dataset using transfer learning, a technique that allows the model to learn from a small amount of labeled data. They can also use the quantization-friendly basic block introduced in YOLO-NAS to optimize the model's performance and reduce its memory and computation requirements.

YOLO NAS can be used in various real-world applications, such as:

- Surveillance: Security cameras can use YOLO NAS to detect events in real time. The system can spot intruders or unusual behavior right away without a human having to watch the footage.

- Autonomous vehicles: YOLO NAS can help self-driving cars detect objects around them, including pedestrians about to cross the street or other cars changing lanes. Driverless technology needs to recognize obstacles and react quickly to avoid accidents.

- Medical imaging: It can be used for object detection in medical imaging, for example to identify tumors and abnormalities in X-rays or MRI scans.

- Retail: YOLO NAS can be used for object detection in retail, such as detecting products on shelves or tracking customer behavior.

Integration of YOLO NAS into Existing Computer Vision Pipelines

The YOLO-NAS model is available on SuperGradients under an open-source license, with pre-trained weights for non-commercial use. YOLO-NAS is quantization-friendly and supports TensorRT deployment, which makes it compatible with production use. This breakthrough in object detection can inspire new research and advance the field, enabling machines to perceive and interact with the world more intelligently and autonomously.

It can be plugged into existing computer vision pipelines built with PyTorch or TensorFlow. It can be trained to detect custom objects using the same training process as any other YOLO model.

Introduction to YOLONAS with SuperGradients

SuperGradients is a new PyTorch library for training models on everyday computer vision tasks like classification, detection, segmentation, and pose estimation. It has about 40 pre-trained models in its model zoo, which are listed in the SuperGradients repository.

The code below uses pip to install three Python packages: super-gradients, imutils, and roboflow.

pip install super-gradients==3.2.0
pip install imutils
pip install roboflow

The first line installs the super-gradients package at version 3.2.0. After that, we install the imutils package, which provides a set of convenience functions for common image processing operations. Then we install the roboflow package, which provides a Python API for the Roboflow platform, used to manage and label datasets for computer vision tasks.

Now, we will use one of their pre-trained models. YOLONAS comes in small (`yolo_nas_s`), medium (`yolo_nas_m`), and large (`yolo_nas_l`) versions. We'll go with the large one for now.

Next, we import the models module from the super_gradients.training package to get the YOLO-NAS-l model with pre-trained weights.

from super_gradients.training import models

# Load the large YOLO-NAS variant with weights pre-trained on COCO
yolo_nas_l = models.get("yolo_nas_l", pretrained_weights="coco")

The first line in the code above imports the models module from the super_gradients.training package.

The second line uses the get function from the models module to get the YOLO-NAS-l model with pre-trained weights.

The get function takes two parameters: the model name and the pre-trained weights to use. In this case, the model name is "yolo_nas_l", which specifies the large variant of the YOLO-NAS model, and the pre-trained weights are "coco", which specifies weights pre-trained on the COCO dataset.

We can run the cell below if we're curious about the architecture. The code below uses the summary function from the torchinfo package to display a summary of the YOLO-NAS-l model.

pip install torchinfo

from torchinfo import summary

# input_size is (batch, channels, height, width)
summary(model=yolo_nas_l,
        input_size=(16, 3, 640, 640),
        col_names=["input_size", "output_size", "num_params", "trainable"],
        col_width=20,
        row_settings=["var_names"])

In the above code, we import the summary function from the torchinfo package. The second statement uses the summary function to display a summary of the YOLO-NAS-l model. The model parameter specifies the YOLO-NAS-l model to summarize.

The input_size parameter specifies the input size of the model. The col_names parameter specifies the column names to display in the output. The col_width parameter specifies the width of each column. The row_settings parameter specifies the row settings to use in the output.

So, we've got our model all set up and ready to go. Now comes the fun part: using it to make predictions. The predict method is easy to use and accepts several kinds of input: a PIL image, a NumPy image, a file path to an image, a file path to a video, a path to a folder of images, or even a URL to an image.

There's also a conf argument for adjusting the confidence threshold for detections. For example, we could call model.predict("path/to/image", conf=0.35) to get only detections with 35% or higher confidence. Tweak this as needed to filter out less accurate predictions. Let's run inference on the image below.

The code below uses the YOLO-NAS-l model to make predictions on the image specified by the url variable.

url = "https://previews.123rf.com/images/freeograph/freeograph2011/freeograph201100150/158301822-group-of-friends-gathering-around-table-at-home.jpg"

yolo_nas_l.predict(url, conf=0.25).show()

This code shows how to use YOLO-NAS-l to make a prediction on an image and display the results. The predict function takes an image URL and a confidence threshold as input and returns the predicted objects in the image. The show method displays the image with boxes around the predicted objects.

Note: The reader can run the code to see the result.
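The effect of the conf threshold described earlier can be pictured with a few lines of plain Python. This is only an illustration of the idea with hypothetical detections and a hypothetical helper name; it is not how SuperGradients implements the filtering internally.

```python
def filter_by_confidence(detections, conf=0.25):
    """Keep only the detections whose score is at least `conf`."""
    return [d for d in detections if d["score"] >= conf]

# Hypothetical raw detections for one image.
detections = [
    {"label": "person", "score": 0.91},
    {"label": "cup", "score": 0.32},
    {"label": "chair", "score": 0.18},
]

kept_low = filter_by_confidence(detections, conf=0.25)
kept_high = filter_by_confidence(detections, conf=0.35)
print([d["label"] for d in kept_low])   # ['person', 'cup']
print([d["label"] for d in kept_high])  # ['person']
```

Raising the threshold trades recall for precision: fewer boxes are drawn, but the ones that remain are the ones the model is more certain about.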

Conclusion

YOLO NAS is a recent implementation of the YOLO algorithm that uses NAS to search for the optimal architecture for object detection. It is faster and more accurate than the original YOLO networks, which makes it well suited to real-time object detection.

YOLO NAS has achieved state-of-the-art performance on several benchmarks, and we can integrate it into computer vision systems using popular deep learning frameworks. To further improve its performance, researchers are exploring multi-scale training, attention mechanisms, hardware acceleration, and automated NAS methods.
