Introduction

In this ever-changing era of technology, artificial intelligence (AI) is driving innovation and transforming industries. Among the many advancements within AI, the development and deployment of AI agents are reshaping how businesses operate, enhancing user experiences, and automating complex tasks.

AI agents, which are software entities capable of performing specific tasks autonomously, have become common in many applications, ranging from customer service chatbots to advanced data analysis tools to finance agents.

In this article, we will create a basic AI agent to explore the significance, functionality, and technological frameworks behind building and deploying these agents. Specifically, we will look at LangGraph and Ollama, two powerful tools that simplify building local AI agents. By the end of this guide, you will understand how to use these technologies to create efficient and effective AI agents tailored to your specific needs.

Understanding AI Agents

AI agents are entities or systems that perceive their environment and take actions to achieve specific goals or objectives. These agents can range from simple algorithms to sophisticated systems capable of complex decision-making. Here are some key points about AI agents:

- Perception: AI agents use sensors or other input mechanisms to perceive their environment. This could involve gathering data from sources such as cameras, microphones, or other sensors.

- Reasoning: AI agents process and interpret the information they receive using algorithms and models. This step involves recognizing patterns, making predictions, or generating responses.

- Decision-making: Like humans, AI agents decide on actions or outputs based on their perception and reasoning. These decisions aim to achieve specific goals or objectives defined by their programming or learning process. In many applications, AI agents act as assistants rather than as replacements for humans.

- Actuation: AI agents execute actions based on their decisions. This could involve physical actions in the real world (like moving a robotic arm) or virtual actions in a digital environment (like making recommendations in an app).
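The perceive, reason, decide, and act cycle above can be sketched as a simple control loop. This is a toy illustration only. The `ThermostatAgent` class, its target temperature, and the simulated room are hypothetical and not part of any library.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent (hypothetical) that keeps a simulated room near a target temperature."""
    target: float = 21.0
    tolerance: float = 0.5
    log: list = field(default_factory=list)

    def perceive(self, environment: dict) -> float:
        # Perception: read a "sensor" value from the environment
        return environment["temperature"]

    def reason(self, reading: float) -> float:
        # Reasoning: interpret the reading as an error relative to the goal
        return reading - self.target

    def decide(self, error: float) -> str:
        # Decision-making: choose an action that moves toward the goal
        if error < -self.tolerance:
            return "heat"
        if error > self.tolerance:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Actuation: change the environment according to the decision
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta
        self.log.append(action)

    def step(self, environment: dict) -> str:
        # One full pass through the perceive -> reason -> decide -> act cycle
        action = self.decide(self.reason(self.perceive(environment)))
        self.act(action, environment)
        return action


room = {"temperature": 18.0}
agent = ThermostatAgent()
for _ in range(5):
    agent.step(room)
print(agent.log, room["temperature"])
```

An LLM-based agent follows the same shape. The "reasoning" and "decision" steps are handled by the language model, and the "actions" are tool calls such as a web search.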

Healthcare offers a good example of AI agents in action. These systems analyze patient data from sources such as medical records, test results, and real-time monitoring devices. The agents then use this data to make informed decisions, such as predicting the likelihood of a patient developing a particular condition or recommending personalized treatment plans based on the patient's medical history and current health status. For instance, AI agents in healthcare might help doctors diagnose diseases earlier by analyzing subtle patterns in medical imaging data, or suggest adjustments to medication dosages based on real-time physiological data.

Difference Between an AI Agent and a RAG Application

RAG (Retrieval-Augmented Generation) applications and AI agents refer to different concepts within artificial intelligence.

RAG improves the output of LLMs by incorporating information retrieval techniques. A retrieval system searches a large corpus for documents or passages relevant to the input query. The generative model (e.g., a transformer-based language model) then uses this retrieved information to generate more accurate and contextually relevant responses. Because the answer is grounded in the retrieved content, its accuracy improves. This technique also reduces the need to fine-tune or retrain an LLM on new data.
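Here is a minimal sketch of the retrieval and prompt-augmentation steps, which makes the retrieve-then-generate flow concrete. It makes a few simplifying assumptions:

- It uses a tiny in-memory corpus.
- It scores documents by simple word overlap. Real RAG systems typically use embeddings and a vector store (such as Chroma, installed later in this tutorial).
- The call that sends the final prompt to an LLM is omitted.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase the text and split it into alphanumeric words
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Rank documents by how many query words they share (a stand-in for
    # embedding similarity) and keep the top k with a non-zero score
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_augmented_prompt(query: str, corpus: list[str], k: int = 2) -> str:
    # Insert the retrieved passages into the prompt as grounding context
    context = "\n".join(f"- {doc}" for doc in retrieve(query, corpus, k))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}"


corpus = [
    "Ollama runs large language models on your local machine.",
    "LangGraph builds stateful, multi-actor applications with LLMs.",
    "Tavily is a search API optimized for LLMs.",
]
print(build_augmented_prompt("How do I run models locally with Ollama?", corpus, k=1))
```

The generative model never needs to be retrained on the corpus. Updating the documents is enough to change what the model can ground its answers in.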

AI agents, on the other hand, are autonomous software entities designed to perform a specific task or a series of tasks. They operate based on predefined rules, machine learning models, or both. They often interact with users or other systems to gather inputs, provide responses, or execute actions. Some AI agents improve over time because they can learn and adapt from new data and experiences. AI agents can also handle multiple tasks concurrently, which provides scalability for businesses.

| Aspect | RAG | AI Agent |
|---|---|---|
| Definition | A technique that improves the performance of generative models by incorporating information retrieval | An autonomous system, such as an AI personal assistant, that can perform tasks and make decisions |
| Components | Retrieval system + generative model | Rule-based systems, machine learning models, or a combination of AI techniques |
| Benefits | Improved accuracy and relevance; leverages external knowledge | Improved versatility and adaptability |
| Use cases | Question answering, customer support, content generation | Virtual assistants, autonomous vehicles, recommendation systems |
| Key strength | Can leverage large external datasets to enhance generated responses without training the generative model on all that data | Can interact with users and adapt to changing requirements or environments |
| Example | A chatbot that retrieves relevant FAQs or knowledge base articles to answer user queries more effectively | A recommendation engine that suggests products or content based on user preferences and behavior |

In summary, RAG applications are specifically designed to enhance the capabilities of generative models by incorporating retrieval mechanisms. AI agents are broader entities intended to perform a wide range of tasks autonomously.

Brief Overview of LangGraph

LangGraph is a library for building stateful, multi-actor applications with large language models (LLMs). It helps create complex workflows involving one or more agents, and it offers significant advantages such as cycles, controllability, and persistence.

Key Benefits:

- Cycles and Branching: Unlike frameworks that only support simple directed acyclic graphs (DAGs), LangGraph supports loops and conditionals, which are essential for building sophisticated agent behaviors.

- Fine-grained control: As a low-level framework, LangGraph gives you detailed control over your application's flow and state, making it well suited for building reliable agents.

- Persistence: Built-in persistence lets you save the state after each step and pause and resume execution. It also supports advanced features like error recovery and human-in-the-loop workflows.

Features:

- Cycles and Branching: Implement loops and conditionals in your apps.

- Persistence: Automatically save state after each step, supporting error recovery.

- Human-in-the-Loop: Interrupt execution for human approval or edits.

- Streaming Support: Stream outputs as each node produces them.

- Integration with LangChain: Integrates seamlessly with LangChain and LangSmith, but can also be used independently.

LangGraph is inspired by technologies like Pregel and Apache Beam, and its interface is similar to NetworkX. Developed by LangChain Inc., it offers a robust tool for building reliable, advanced AI-driven applications.

Quick Introduction to Ollama

Ollama is an open-source project that makes running LLMs on your local machine simple. It hides much of the complexity of LLM technology, so users can harness the power of AI without extensive technical expertise.

It is easy to install, supports a wide range of models, and offers a comprehensive set of features designed to enhance the user experience.

Key Features:

- Local Deployment: Run sophisticated LLMs directly on your local machine, which keeps your data private and reduces dependency on external servers.

- User-Friendly Interface: Designed to be intuitive and easy to use, making it accessible to users with varying levels of technical knowledge.

- Customizability: Customize models to your specific needs (for example, by setting a system prompt or generation parameters), whether for research, development, or personal projects.

- Open Source: As an open-source project, Ollama encourages community contributions and continuous improvement, fostering innovation and collaboration.

- Simple Installation: Ollama offers a quick, hassle-free installation process for Windows, macOS, and Linux users. We have written an article on downloading and using Ollama; please check out the blog (link provided in the resource section).

- Ollama Community: Ollama has a vibrant, project-driven open-source community that actively contributes to its development, tools, and integrations.

A step-by-step guide to creating an AI agent using LangGraph and Ollama


In this demo, we will create a simple agent using the Mistral model. The agent can search the web using the Tavily Search API and generate responses.

We will start by installing LangGraph, a library for building stateful, multi-actor applications with LLMs that is well suited to agent and multi-agent workflows. Inspired by Pregel, Apache Beam, and NetworkX, LangGraph is developed by LangChain Inc. and can be used independently of LangChain.

We will use Mistral as our LLM, served locally through Ollama, together with Tavily's Search API. Tavily's API is optimized for LLMs and provides a factual, efficient, and persistent search experience.

In our previous article, which is linked here, we learned how to use Qwen2 with Ollama. Please follow that article to install Ollama and learn how to run LLMs with it.

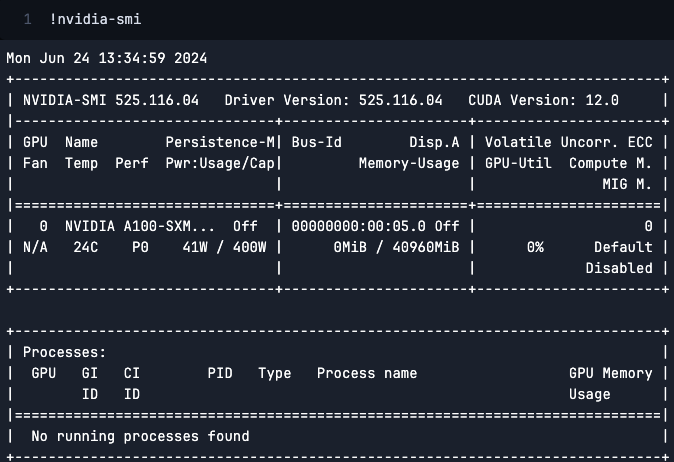

Before we begin the installation, let us check our GPU. For this tutorial, we will use an NVIDIA A100 GPU provided by Paperspace.

The NVIDIA A100 GPU is a powerhouse designed for AI, data analytics, and high-performance computing. Built on the NVIDIA Ampere architecture, it offers unprecedented acceleration and scalability. Key features include:

- Multi-Instance GPU (MIG) technology for optimal resource utilization.
- Tensor Cores for AI and machine learning workloads.
- Support for large-scale deployments.

The A100 excels at both training and inference, making it well suited for applications ranging from scientific research to enterprise AI solutions.

You can open a terminal and run the command below to check your GPU configuration.

```bash
nvidia-smi
```

Now, we will start with our installations.

```bash
pip install -U langgraph
pip install -U langchain-nomic langchain_community tiktoken langchainhub chromadb langchain langgraph tavily-python
pip install langchain-openai
```

After completing the installations, we will move on to the next crucial step: providing the Tavily API key.

Sign up for Tavily and generate an API key.

```bash
export TAVILY_API_KEY="apikeygoeshere"
```
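`TavilySearchResults` reads its key from the `TAVILY_API_KEY` environment variable, so it can help to fail early with a clear message if the variable is missing. Below is a small sketch; the `require_env` helper is hypothetical and not part of LangChain or Tavily.

```python
import os


def require_env(name: str) -> str:
    # Hypothetical helper: return the variable's value, or raise a
    # descriptive error if it is unset or contains only whitespace
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it before running the agent.")
    return value
```

For example, calling `require_env("TAVILY_API_KEY")` at the top of your script stops it immediately with a readable error, instead of failing later inside the search tool.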

Now, run the command below to pull the model. You can also try this with Llama or any other version of Mistral.

```bash
ollama pull mistral
```
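Before wiring up the agent, you may want to confirm that the Ollama server is running and the model has been pulled. Ollama's REST API lists local models at `GET /api/tags`. The sketch below assumes the default address `http://localhost:11434`, and the helper names are hypothetical. The parsing is kept separate from the network call so it can be checked without a running server.

```python
import json
from urllib.request import urlopen

OLLAMA_URL = "http://localhost:11434"  # assumed default Ollama address


def model_names(tags_payload: dict) -> list[str]:
    # /api/tags returns {"models": [{"name": "mistral:latest", ...}, ...]}
    return [entry["name"] for entry in tags_payload.get("models", [])]


def has_model(tags_payload: dict, model: str) -> bool:
    # Accept either an exact tag ("mistral:latest") or a bare name ("mistral")
    return any(name == model or name.split(":")[0] == model
               for name in model_names(tags_payload))


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    # Query the local server; raises URLError if Ollama is not running
    with urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)
```

With the server running, `has_model(fetch_tags(), "mistral")` should return `True` once the pull has finished.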

Import all the libraries required to build the agent.

```python
from langchain import hub
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain.prompts import PromptTemplate
from langgraph.prebuilt import create_react_agent
from langchain_openai import ChatOpenAI
from langchain_core.output_parsers import JsonOutputParser
from langchain_community.chat_models import ChatOllama
# Note: PromptTemplate, JsonOutputParser, and ChatOllama are not used in this minimal example
```

We will start by defining the tools we want to use. For this simple example, we will use a built-in search tool via Tavily. There is no need to call `llm.bind_tools(tools)` here. The `llm` object has not been defined yet at this point, and `create_react_agent` binds the tools to the model itself when we build the agent.

```python
# Tavily web search tool that returns up to three results per query
tools = [TavilySearchResults(max_results=3)]
```

The code snippet below pulls a prompt template from the LangChain Hub and prints it in a readable format. This template can then be used or modified as needed for the application.

```python
# Download the ReAct agent prompt from the LangChain Hub (requires langchainhub)
prompt = hub.pull("wfh/react-agent-executor")
prompt.pretty_print()
```

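Next, we will configure Mistral via Ollama. Ollama exposes an OpenAI-compatible API at `http://localhost:11434/v1`, so we can use `ChatOpenAI` and point it at the local server. The client requires an API key, but Ollama ignores it, so any placeholder string works.

```python
# Point the OpenAI-compatible client at the local Ollama server
llm = ChatOpenAI(
    model="mistral",
    api_key="ollama",  # required by the client, ignored by Ollama
    base_url="http://localhost:11434/v1",
)
```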
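With this `base_url`, `ChatOpenAI` talks to Ollama's OpenAI-compatible chat completions endpoint. The standard-library sketch below builds an equivalent request by hand, which can be handy for checking the server outside LangChain. It assumes Ollama's default address, and `build_chat_request` and `ask` are hypothetical helpers. Actually sending the request requires a running Ollama server with the model pulled.

```python
import json
from urllib.request import Request, urlopen

BASE_URL = "http://localhost:11434/v1"  # same base_url as the ChatOpenAI config


def build_chat_request(prompt: str, model: str = "mistral") -> Request:
    # OpenAI-style chat payload; Ollama ignores the bearer token's value
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": "Bearer ollama"},
        method="POST",
    )


def ask(prompt: str) -> str:
    # Send the request and pull the assistant's reply out of the first choice
    with urlopen(build_chat_request(prompt), timeout=120) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

If `ask("Say hello")` returns text, the endpoint that the agent relies on is working.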

Lastly, we’ll create an agent executor utilizing our language mannequin (llm), a set of instruments (instruments), and a immediate template (immediate). The agent is configured to react to inputs, make the most of the instruments, and generate responses based mostly on the required immediate, enabling it to carry out duties in a managed and environment friendly method.

agent_executor = create_react_agent(llm, instruments, messages_modifier=immediate)

================================ System Message ================================

You're a useful assistant.

============================= Messages Placeholder =============================

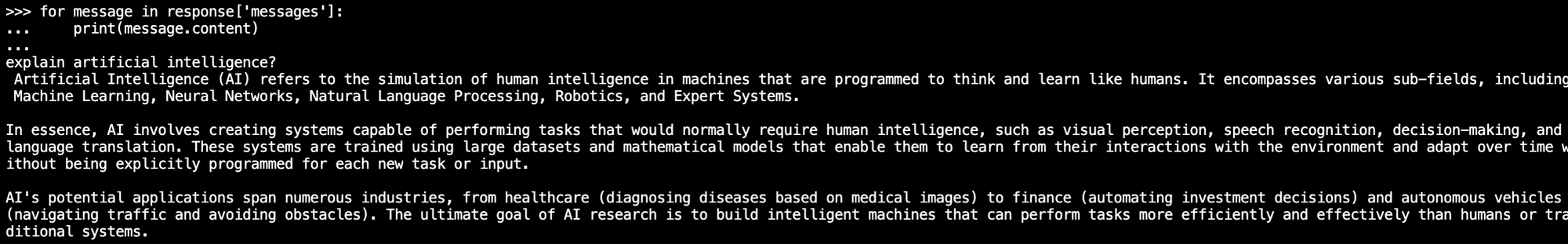

{{messages}}The given code snippet invokes the agent executor to course of the enter message. This step goals to ship a question to the agent executor and obtain a response. The agent will use its configured language mannequin (Mistral on this case), instruments, and prompts to course of the message and generate an acceptable reply

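```python
response = agent_executor.invoke({"messages": [("user", "explain artificial intelligence")]})

# Print every message in the conversation, including any tool calls and results
for message in response["messages"]:
    print(message.content)
```

Running this prints the messages exchanged during the run, ending with the agent's answer.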

Conclusion

LangGraph and Ollama represent a significant step forward in building and deploying local AI agents. LangGraph orchestrates the various AI components and has a modular architecture. Using it, developers can create versatile, scalable AI solutions that are efficient and highly adaptable to changing needs.

As this article describes, AI agents offer a flexible approach to automating tasks and enhancing productivity. They can be customized to handle a wide range of functions, from simple task automation to complex decision-making, which makes them valuable tools for modern businesses.

Ollama complements LangGraph by serving open models such as Mistral locally, so the agent can run without relying on a hosted LLM API.

In summary, combining LangGraph and Ollama provides a robust framework for building AI agents that are both effective and efficient. We hope this guide helps anyone looking to harness these technologies to drive innovation and achieve their objectives in the ever-evolving landscape of artificial intelligence.

We hope you enjoyed reading this article!