Introduction

Two popular choices have recently emerged for building an AI application based on large language models (LLMs): LlamaIndex and LangChain. Deciding which one to use can be tricky, so this article aims to explain the differences between them in simple terms.

LangChain is a versatile framework designed for building a wide range of applications, not just those confined to large language models. It offers tools for loading, processing, and indexing data and for interacting with LLMs. LangChain's flexibility lets users customize their applications to the specific needs of their datasets. This makes it an excellent choice for general-purpose applications that require extensive integration with other software and systems.

On the other hand, LlamaIndex is designed specifically for building search and retrieval applications. It provides a simple interface for querying LLMs and retrieving relevant documents. While it may not be as general-purpose as LangChain, LlamaIndex is highly efficient, making it well suited to applications that must process large amounts of data quickly and effectively.

Overview of LlamaIndex and LangChain

LangChain is an open-source framework designed to simplify the development of applications powered by large language models (LLMs). It gives developers a comprehensive set of tools and APIs in Python and JavaScript, making it easier to create diverse LLM-driven applications such as chatbots, virtual agents, and document analysis tools. LangChain's architecture integrates multiple LLMs and external data sources, enabling developers to build complex, interactive applications quickly.

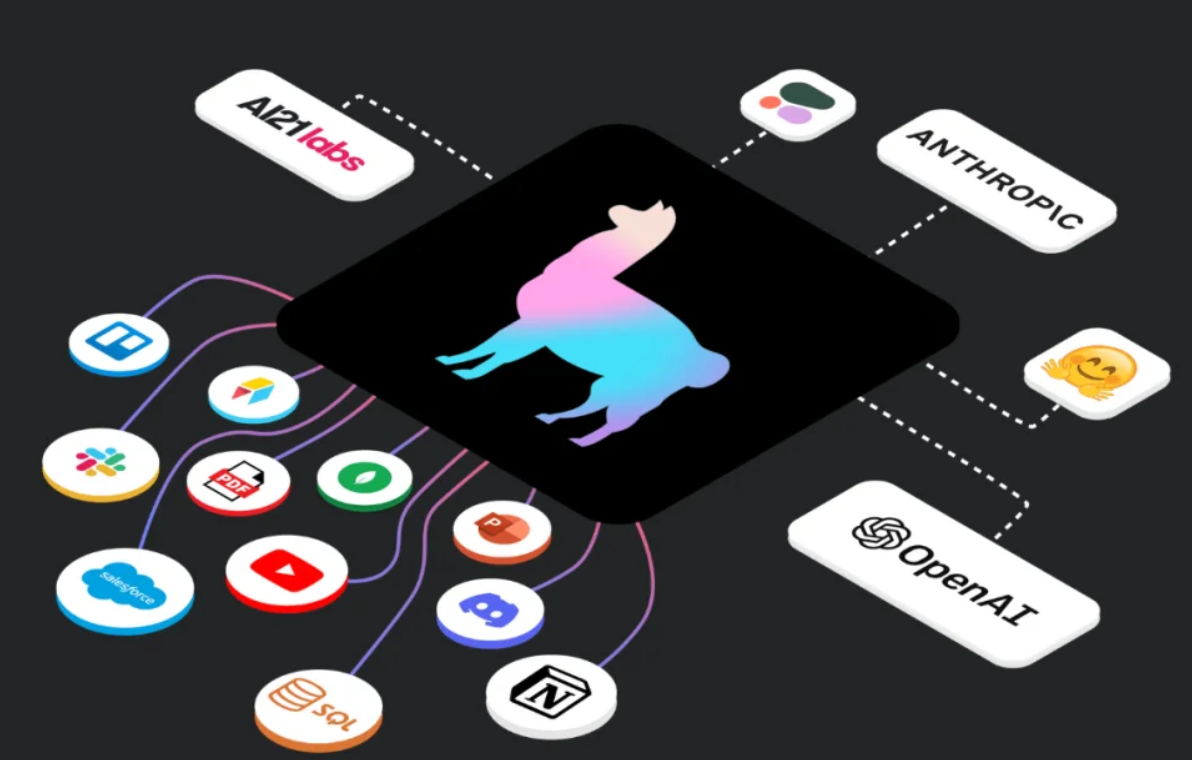

LlamaIndex is a framework designed for efficient data retrieval and management. It excels at building search and retrieval applications, using algorithms that rank documents by their semantic similarity to a query. Through LlamaHub, LlamaIndex offers a range of data connectors that ingest data directly from various sources without extensive conversion. It is particularly well suited to knowledge management systems and enterprise solutions that need accurate, fast information retrieval.
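Ranking by semantic similarity usually means comparing embedding vectors, most often with cosine similarity. The sketch below shows only that ranking step, without any framework. It assumes the embeddings already exist: the three-dimensional vectors are made-up toy values, and `rank_by_similarity` is a hypothetical helper, not part of LlamaIndex's API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(
    query_vec: list[float], doc_vecs: dict[str, list[float]], top_k: int = 2
) -> list[tuple[str, float]]:
    """Return the top_k (doc_id, score) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy embeddings (hypothetical values; a real system gets these from an embedding model).
docs = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.2, 0.8, 0.1],
    "careers_page": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]  # imagine this encodes "How do I get my money back?"

print([doc_id for doc_id, _ in rank_by_similarity(query, docs)])  # ['refund_policy', 'shipping_times']
```

Real embeddings have hundreds or thousands of dimensions, and vector stores typically use approximate nearest-neighbor search rather than scoring every document, but the ranking idea is the same.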

What’s LlamaIndex?


LlamaIndex, formerly known as GPT Index, is a framework that makes working with LLMs easier. It can be understood as a simple tool that connects your custom data, whether in APIs, databases, or PDFs, to powerful language models such as GPT-4. By making your data accessible and usable, it lets you build powerful custom LLM applications and workflows with little effort.

With LlamaIndex, users can easily build powerful applications such as document Q&A, data-augmented chatbots, knowledge agents, and more. The key tools LlamaIndex provides for enhancing LLM applications with data are listed below:

- Data Ingestion: Connects to existing data sources such as APIs, PDFs, and documents.

- Data Indexing: Stores and indexes the data for different use cases.

- Query Interface: Provides a query interface that accepts a prompt and returns a knowledge-augmented response.

- Data Sources: Lets users connect to unstructured, structured, or semi-structured data sources.
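The ingest, index, and query steps above can be pictured with a small, dependency-free sketch. Everything in it is hypothetical: `Document`, `ingest`, `build_index`, and `query` are illustrative names, the "sources" are in-memory strings standing in for APIs or PDFs, and the index is a plain keyword (inverted) index rather than the embedding-based indices LlamaIndex builds. In the library itself, these steps go through its own readers, index classes, and query engines.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> list[str]:
    """Keep runs of lowercase letters and digits (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def ingest(sources: dict[str, str]) -> list[Document]:
    """Data ingestion: wrap raw text from each (hypothetical) source in a Document."""
    return [Document(doc_id=name, text=text) for name, text in sources.items()]


def build_index(docs: list[Document]) -> dict[str, set[str]]:
    """Data indexing: map each token to the ids of the documents containing it."""
    index: dict[str, set[str]] = {}
    for doc in docs:
        for token in tokenize(doc.text):
            index.setdefault(token, set()).add(doc.doc_id)
    return index


def query(index: dict[str, set[str]], docs: list[Document], question: str) -> list[Document]:
    """Query interface: return matching documents, most shared query tokens first."""
    hits: dict[str, int] = {}
    for token in tokenize(question):
        for doc_id in index.get(token, set()):
            hits[doc_id] = hits.get(doc_id, 0) + 1
    by_id = {doc.doc_id: doc for doc in docs}
    ranked = sorted(hits, key=lambda d: (-hits[d], d))  # ties broken by id
    return [by_id[d] for d in ranked]


sources = {
    "api_export.json": "Order 1042 shipped on Monday via ground freight",
    "handbook.pdf": "Employees accrue vacation days every month",
    "faq.md": "Ground freight orders usually arrive within five days",
}
docs = ingest(sources)
index = build_index(docs)
for doc in query(index, docs, "When will ground freight orders arrive?"):
    print(doc.doc_id)  # faq.md, then api_export.json
```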

Install LlamaIndex

To install LlamaIndex, we can either clone the repository to work from source or install the package with pip.

!git clone https://github.com/jerryjliu/llama_index.git

or

!pip install llama-index

What is RAG?

Before diving into the key comparison between LlamaIndex and LangChain, let us understand RAG.

RAG, or Retrieval-Augmented Generation, enhances the capabilities of large language models (LLMs) by combining two fundamental processes: retrieval and generation. Typically, a language model generates responses based solely on its training data, which sometimes leads to outdated or unsupported answers (a.k.a. hallucination). RAG improves on this by retrieving relevant information from external sources (such as the internet or specific databases) based on the user's query. The retrieved information grounds the model's response in up-to-date, reliable data, leading to more accurate and better-informed answers. By integrating retrieval with generation, RAG addresses challenges such as outdated information and missing sources, making LLM responses more trustworthy and relevant.
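The retrieve-then-generate loop described above fits in a few lines. This is a minimal sketch under stated assumptions: retrieval is a crude word-overlap score standing in for embedding search, and `fake_llm` is a hypothetical stub in place of a real model call, so the point is the shape of the pipeline (retrieve, build a grounded prompt, generate), not answer quality.

```python
import re

# Toy knowledge base (illustrative text, not real data).
KNOWLEDGE_BASE = [
    "The 2024 pricing update set the Pro plan at $30 per user per month.",
    "Support is available by email from 9am to 5pm UTC on weekdays.",
    "The Pro plan includes single sign-on and audit logs.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, corpus: list[str], k: int = 2) -> list[str]:
    """Retrieval step: pick the k passages sharing the most words with the question."""
    q = words(question)
    return sorted(corpus, key=lambda passage: len(q & words(passage)), reverse=True)[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Augmentation step: ground the model by placing retrieved text in the prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call (e.g. a request to a model API)."""
    return f"[model response to a {len(prompt)}-character prompt]"


question = "How much does the Pro plan cost per month?"
passages = retrieve(question, KNOWLEDGE_BASE)  # pricing passage first, then the Pro features one
prompt = build_prompt(question, passages)
print(prompt)
print(fake_llm(prompt))
```

Swapping `fake_llm` for a real model client and `retrieve` for a vector search leaves the overall structure unchanged, which is essentially what frameworks like LlamaIndex and LangChain package up.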

RAG reduces the need to retrain a large language model from scratch whenever new information arises. Instead, we can update the data source with the latest information, so the next time a user asks a question, we can give them the most current answer. Second, RAG makes the language model draw on reliable sources of information before generating a response. This reduces the risk of the model inventing answers or revealing inappropriate information based solely on its training. It also allows the model to acknowledge when it cannot confidently answer a question, rather than providing potentially misleading information. However, if the retrieval system does not supply high-quality information, some answerable queries may go unanswered.
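Two of the points above, refreshing knowledge by updating the data source instead of retraining, and declining to answer when nothing relevant is retrieved, can be sketched with a tiny in-memory store. The `KnowledgeStore` class, its Jaccard word-overlap score, and the 0.2 threshold are all illustrative assumptions; production systems typically use embedding similarity and tune the cutoff on real queries.

```python
import re
from typing import Optional


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeStore:
    """Hypothetical in-memory store: new facts are added as data, with no model retraining."""

    def __init__(self, threshold: float = 0.2) -> None:
        self.passages: list[str] = []
        self.threshold = threshold  # minimum score for a passage to count as relevant

    def add(self, passage: str) -> None:
        self.passages.append(passage)

    def best_match(self, question: str) -> tuple[Optional[str], float]:
        """Return the highest-scoring passage (Jaccard word overlap) and its score."""
        q = words(question)
        best, best_score = None, 0.0
        for passage in self.passages:
            p = words(passage)
            score = len(q & p) / len(q | p) if q | p else 0.0
            if score > best_score:
                best, best_score = passage, score
        return best, best_score

    def answer(self, question: str) -> str:
        passage, score = self.best_match(question)
        if passage is None or score < self.threshold:
            # Abstain instead of letting the model guess from its training data alone.
            return "I don't have reliable information to answer that."
        return f"Based on: {passage}"


store = KnowledgeStore()
store.add("The office is closed on public holidays.")
print(store.answer("What is the latest product release version?"))  # abstains

# New information arrives: update the data, not the model.
store.add("The latest product release version is 4.2, shipped in March.")
print(store.answer("What is the latest product release version?"))
```

The threshold is the trade-off the paragraph describes: set it too high and answerable questions go unanswered; set it too low and weakly related passages get used as evidence.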

Efficiency on GPU Servers

Computational efficiency is essential when running AI workloads on GPU servers. LlamaIndex performs well here, making efficient use of GPU resources to handle large datasets and complex queries. LangChain also shows strong performance, leveraging GPU capabilities to run chained models with low latency. While both frameworks perform well, their suitability may vary with specific workload requirements. In practice, GPU utilization depends mostly on the LLM and embedding backends each framework calls, since the frameworks themselves mainly orchestrate those calls.

Setting up LlamaIndex and LangChain on GPU servers involves different processes. LlamaIndex requires compatibility checks and may involve a steeper initial setup, but once configured, it integrates smoothly with existing AI workflows. LangChain offers a more user-friendly setup, with extensive support for APIs and libraries, simplifying integration into diverse AI environments. For both frameworks, comprehensive documentation and active community support ease the learning curve.

LlamaIndex vs LangChain

| LlamaIndex | LangChain |
|---|---|
| LlamaIndex (GPT Index) is a simple framework that provides a central interface for connecting your LLMs to external data. | LangChain is a tool that helps developers easily build applications that use large language models (LLMs), powerful AI tools that can understand and generate human-like text. |
| LlamaIndex offers a suite of data connectors on LlamaHub, simplifying data access by supporting direct ingestion from native sources without conversion. | LangChain uses document loaders as data connectors to fetch information from various sources and convert it into a format it can process. |
| LlamaIndex focuses on building search and retrieval applications, with a simple interface for indexing and accessing relevant documents and an emphasis on efficient data management for LLMs. | LangChain integrates retrieval algorithms with LLMs to dynamically fetch and process context-relevant information, making it ideal for interactive applications such as chatbots. |
| LlamaIndex is optimized for retrieval, using algorithms that rank documents by semantic similarity for effective querying. | Chains are a key feature of LangChain: they link multiple language model API calls in a logical sequence, combining various components into cohesive applications. |
| LlamaIndex is ideal for internal search systems, building RAG applications, and extracting precise information for enterprises. | LangChain is a versatile framework that comes with the tools, functionality, and features needed to develop and deploy diverse applications powered by large language models (LLMs). |
| LlamaIndex focuses on optimizing document retrieval through algorithms that rank documents by semantic similarity, making it well suited to efficient search systems and knowledge management solutions. | LangChain is designed as an orchestration framework for building applications with large language models (LLMs), integrating components such as retrieval algorithms and data sources to create interactive, dynamic experiences. |
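Chains are easiest to see as function composition: each link's output becomes the next link's input. LangChain's own expression language composes components with the `|` operator (for example, a prompt, then a model, then an output parser). The sketch below imitates that shape in plain Python; `Step` is a hypothetical class rather than LangChain's API, and the "LLM" is a stub that returns a canned reply, so no API call is made.

```python
from typing import Any, Callable


class Step:
    """Hypothetical chain link: wraps a function and composes with `|`."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # The output of this step becomes the input of the next one.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Link 1: fill a prompt template from structured input.
prompt = Step(lambda inputs: f"Summarize in one word: {inputs['text']}")

# Link 2: stand-in for an LLM API call (hypothetical; returns a canned, messy reply).
fake_llm = Step(lambda prompt_text: "  Positive.  " if "great" in prompt_text else "  Negative.  ")

# Link 3: parse and clean the raw model output.
parser = Step(lambda raw: raw.strip().rstrip(".").lower())

chain = prompt | fake_llm | parser
print(chain.invoke({"text": "The product is great and arrived early."}))  # positive
```

Because every link exposes the same `invoke` shape, any link can be swapped (a different prompt, a real model client, a stricter parser) without touching the others, which is the main appeal of chaining.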

Benefits of LlamaIndex and LangChain

LangChain:

- Ease of Use: LangChain is recommended if you're starting a new project and need to get it working quickly. It offers a more intuitive starting point and a larger developer community, making it easier to find examples and solutions.

- Retriever Model: It uses a retriever paradigm for querying data, which is straightforward and suitable for basic retrieval tasks.

- Community and Support: LangChain has a well-established community with ample resources such as tutorials, examples, and community support, which can benefit beginners.
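The retriever paradigm boils down to a narrow interface: give it a query string, get back relevant documents. In LangChain, a retriever is likewise a component that accepts a string query and returns a list of `Document` objects. The sketch below uses plain strings and a hypothetical `Retriever` protocol rather than LangChain's own classes, to show why the paradigm is simple: application code depends only on `retrieve()`, so backends are interchangeable.

```python
from typing import Protocol


class Retriever(Protocol):
    """Hypothetical interface: anything that maps a query to relevant documents."""

    def retrieve(self, query: str) -> list[str]: ...


class KeywordRetriever:
    """Returns documents that share at least one word with the query (case-insensitive)."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str) -> list[str]:
        terms = set(query.lower().split())
        return [d for d in self.docs if terms & set(d.lower().split())]


class FixedRetriever:
    """Always returns the same documents, e.g. for tests or pinned context."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str) -> list[str]:
        return list(self.docs)


def answer_with_context(retriever: Retriever, query: str) -> str:
    # The caller relies only on retrieve(), so any backend satisfying the protocol works.
    context = retriever.retrieve(query)
    return f"{len(context)} document(s) retrieved for: {query}"


docs = ["reset your password from settings", "billing happens monthly"]
print(answer_with_context(KeywordRetriever(docs), "password reset"))
print(answer_with_context(FixedRetriever(["policy doc", "faq doc"]), "password reset"))
```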

LlamaIndex:

- Advanced Querying: LlamaIndex is more suitable if your project requires sophisticated querying capabilities, such as subqueries or synthesizing responses from external datasets.

- Memory Structure Flexibility: It allows more complex memory structures, such as compositions of different indices (like list indices, vector indices, and graph indices), which can help in building chatbots or LLM applications with specific memory needs.

- Composability: While currently harder to learn than LangChain, LlamaIndex offers composability on the memory-structure side, letting you customize and structure data indices flexibly.

- Future Potential: With ongoing improvements and community growth (recent funding and development updates), LlamaIndex is evolving toward easier usability while retaining powerful features for advanced users.
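Subqueries, response synthesis, and composed indices fit together roughly as follows: split a compound question into sub-questions, route each one to the sub-index that can answer it, then merge the partial answers. LlamaIndex provides its own components for this; the sketch below is a hypothetical, framework-free illustration in which `SubIndex` and `ComposedIndex` are invented names, each sub-index is a tiny keyword-to-fact table standing in for a list, vector, or graph index, and "synthesis" is a simple join where a real system would ask an LLM.

```python
from typing import Optional


class SubIndex:
    """Hypothetical sub-index over one topic, backed by a tiny keyword -> fact table."""

    def __init__(self, name: str, facts: dict[str, str]) -> None:
        self.name = name
        self.facts = facts

    def query(self, question: str) -> Optional[str]:
        q = question.lower()
        key = next((k for k in self.facts if k in q), None)
        return self.facts[key] if key else None


class ComposedIndex:
    """Splits a compound question, routes sub-questions to sub-indices, merges the results."""

    def __init__(self, subindices: list[SubIndex]) -> None:
        self.subindices = subindices

    @staticmethod
    def decompose(question: str) -> list[str]:
        # Naive decomposition: split compound questions on " and ".
        return [part.strip(" ?") for part in question.split(" and ")]

    def answer_sub_question(self, sub_q: str) -> str:
        # Routing: the first sub-index with a matching fact answers.
        for idx in self.subindices:
            result = idx.query(sub_q)
            if result is not None:
                return f"{result} (from {idx.name})"
        return f"no answer for '{sub_q}'"

    def query(self, question: str) -> str:
        partial = [self.answer_sub_question(q) for q in self.decompose(question)]
        # Synthesis: a real system would have an LLM merge these; here we just join them.
        return "; ".join(partial)


# Toy facts for illustration only.
finance = SubIndex("finance", {"q1 revenue": "Q1 revenue was 1.2M"})
people = SubIndex("people", {"headcount": "Headcount is 48"})
combined = ComposedIndex([finance, people])
print(combined.query("What was the Q1 revenue and what is the current headcount?"))
```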

Conclusion

In summary, if you need to develop a general-purpose LLM-based application that requires flexibility, extensibility, and integration with other software, LangChain is the better choice. However, if the focus is on building an efficient, straightforward search and retrieval application, LlamaIndex is the stronger option.

Additionally, we highly recommend our detailed blog post on LangChain, which will help you gain a deeper understanding of the framework and provide hands-on experience.

We hope you loved the article!