Introduction

Prompt engineering has become pivotal in leveraging large language models (LLMs) for diverse applications. Basic prompt engineering covers the fundamental techniques. Advancing to more sophisticated methods, however, lets us build highly effective, context-aware, and robust LLM applications. This article delves into several advanced prompt engineering techniques using LangChain. I’ve added code examples and practical insights for developers.

In advanced prompt engineering, we craft complex prompts and use LangChain’s capabilities to build intelligent, context-aware applications. This includes dynamic prompting, context-aware prompts, meta-prompting, and using memory to maintain state across interactions. These techniques can significantly enhance the performance and reliability of LLM-powered applications.

Learning Objectives

- Learn to create multi-step prompts that guide the model through complex reasoning and workflows.

- Explore advanced prompt engineering techniques that adjust prompts based on real-time context and user interactions for adaptive applications.

- Develop prompts that evolve with the conversation or task to maintain relevance and coherence.

- Generate and refine prompts autonomously using the model’s internal state and feedback mechanisms.

- Implement memory mechanisms to maintain context and information across interactions for coherent applications.

- Use advanced prompt engineering in real-world applications like education, support, creative writing, and research.

This article was published as a part of the Data Science Blogathon.

Setting Up LangChain

Make sure you set up LangChain correctly. A solid setup and familiarity with the framework are crucial for advanced applications. I assume you already know how to set up LangChain in Python.

Installation

First, install LangChain and its OpenAI integration using pip:

    pip install langchain langchain-openai

Basic setup

    from langchain_openai import OpenAI

    # Initialize the OpenAI model (replace the placeholder with your own API key)
    model = OpenAI(api_key="your_openai_api_key")

Advanced Prompt Structuring

Advanced prompt structuring goes beyond simple instructions or contextual prompts. It involves creating multi-step prompts that guide the model through logical steps. This technique is essential for tasks that require detailed explanations, step-by-step reasoning, or complex workflows. By breaking the task into smaller, manageable components, advanced prompt structuring can help enhance the model’s ability to generate coherent, accurate, and contextually relevant responses.

Applications of Advanced Prompt Structuring

- Educational Tools: Advanced prompt engineering can create detailed educational content, such as step-by-step tutorials, comprehensive explanations of complex topics, and interactive learning modules.

- Technical Support: It can help provide detailed technical support, troubleshooting steps, and diagnostic procedures for various systems and applications.

- Creative Writing: In creative domains, advanced prompt engineering can help generate intricate story plots, character developments, and thematic explorations by guiding the model through a series of narrative-building steps.

- Research Assistance: For research purposes, structured prompts can assist in literature reviews, data analysis, and the synthesis of information from multiple sources, ensuring a thorough and systematic approach.

Key Components of Advanced Prompt Structuring

Here are the key components of advanced prompt structuring:

- Step-by-Step Instructions: By providing the model with a clear sequence of steps to follow, we can significantly improve the quality of its output. This is particularly useful for problem-solving, procedural explanations, and detailed descriptions. Each step should build logically on the previous one, guiding the model through a structured thought process.

- Intermediate Goals: To help ensure the model stays on track, we can set intermediate goals or checkpoints within the prompt. These goals act as mini-prompts within the main prompt, allowing the model to focus on one aspect of the task at a time. This approach can be particularly effective in tasks that involve multiple stages or require the integration of various pieces of information.

- Contextual Hints and Clues: Incorporating contextual hints and clues within the prompt can help the model understand the broader context of the task. Examples include providing background information, defining key terms, or outlining the expected format of the response. Contextual clues help align the model’s output with the user’s expectations and the specific requirements of the task.

- Role Specification: Defining a specific role for the model can enhance its performance. For instance, asking the model to act as an expert in a particular field (e.g., a mathematician, a historian, a medical doctor) can help tailor its responses to the expected level of expertise and style. Role specification can improve the model’s ability to adopt different personas and adapt its language accordingly.

- Iterative Refinement: Advanced prompt structuring often involves an iterative process in which the initial prompt is refined based on the model’s responses. This feedback loop allows developers to fine-tune the prompt, making adjustments to improve clarity, coherence, and accuracy. Iterative refinement is crucial for optimizing complex prompts and achieving the desired output.
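To make these components concrete, here is a minimal sketch of a helper that assembles a role, contextual hints, numbered steps, and an output format into one prompt. The function `build_structured_prompt` and its parameters are hypothetical names chosen for illustration; they are not part of LangChain, and the resulting string can be passed to any model.

```python
from typing import Sequence


def build_structured_prompt(
    role: str,
    task: str,
    steps: Sequence[str],
    hints: Sequence[str] = (),
    output_format: str = "",
) -> str:
    """Assemble a multi-step prompt from a role, hints, ordered steps, and a format."""
    lines = [f"You are {role}.", ""]
    if hints:
        # Contextual hints: background facts or definitions the model should use
        lines.append("Context:")
        lines.extend(f"- {hint}" for hint in hints)
        lines.append("")
    lines.append(f"Task: {task}")
    lines.append("")
    lines.append("Work through the following steps in order:")
    # Numbered steps double as intermediate checkpoints for the model
    lines.extend(f"Step {i}: {step}" for i, step in enumerate(steps, start=1))
    if output_format:
        lines.extend(["", f"Output format: {output_format}"])
    return "\n".join(lines)


prompt = build_structured_prompt(
    role="an expert mathematician",
    task="A car travels at 60 km/h for 2 hours. How far does it travel?",
    steps=[
        "Identify the formula to use.",
        "Substitute the values into the formula.",
        "Perform the multiplication and state the result with units.",
    ],
    hints=["Distance = Speed * Time"],
    output_format="End with a line starting with 'Answer:'.",
)
print(prompt)
```

Keeping the pieces as separate arguments makes iterative refinement easier: you can change one step or hint and compare the model’s responses without rewriting the whole prompt.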

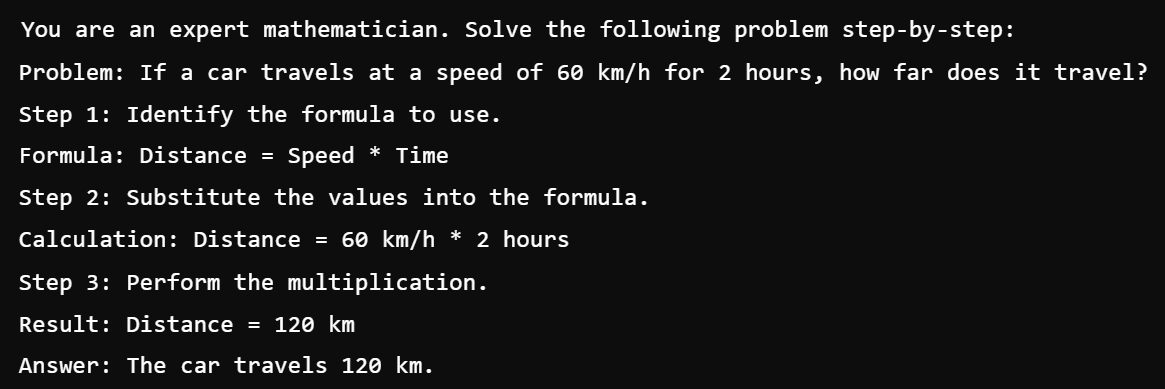

Example: Multi-Step Reasoning

    prompt = """
    You are an expert mathematician. Solve the following problem step-by-step:

    Problem: If a car travels at a speed of 60 km/h for 2 hours, how far does it travel?

    Step 1: Identify the formula to use.
    Formula: Distance = Speed * Time

    Step 2: Substitute the values into the formula.
    Calculation: Distance = 60 km/h * 2 hours

    Step 3: Perform the multiplication.
    Result: Distance = 120 km

    Answer: The car travels 120 km.
    """

    # invoke() sends a single prompt string and returns the model's text
    response = model.invoke(prompt)
    print(response)

Dynamic Prompting

In dynamic prompting, we adjust the prompt based on the context or previous interactions, enabling more adaptive and responsive interactions with the language model. Unlike static prompts, which remain fixed throughout the interaction, dynamic prompts can evolve with the conversation or the specific requirements of the task at hand. This flexibility lets developers create more engaging, contextually relevant, and personalized experiences for users interacting with language models.

Applications of Dynamic Prompting

- Conversational Agents: Dynamic prompting is essential for building conversational agents that can engage in natural, contextually relevant dialogues with users, providing personalized assistance and information retrieval.

- Interactive Learning Environments: In education, dynamic prompting can enhance interactive learning environments by adapting the learning content to the learner’s progress and preferences and by providing tailored feedback and support.

- Information Retrieval Systems: Dynamic prompting can improve the effectiveness of information retrieval systems by adjusting and updating search queries based on the user’s context and preferences, leading to more accurate and relevant search results.

- Personalized Recommendations: Dynamic prompting can power personalized recommendation systems by generating prompts based on user preferences and browsing history. This approach suggests relevant content and products to users based on their interests and past interactions.

Techniques for Dynamic Prompting

- Contextual Query Expansion: This involves expanding the initial prompt with additional context gathered from the ongoing conversation or the user’s input. The expanded prompt gives the model a richer understanding of the current context, enabling more informed and relevant responses.

- User Intent Recognition: By analyzing the user’s intent and extracting the key information from their queries, developers can dynamically generate prompts that address the specific needs and requirements expressed by the user. This helps ensure the model’s responses are tailored to the user’s intentions, leading to more satisfying interactions.

- Adaptive Prompt Generation: Dynamic prompting can also generate prompts on the fly based on the model’s internal state and the current conversation history. These dynamically generated prompts can guide the model toward producing coherent responses that align with the ongoing dialogue and the user’s expectations.

- Prompt Refinement through Feedback: By adding feedback mechanisms to the prompting process, developers can refine the prompt based on the model’s responses and the user’s feedback. This iterative feedback loop enables continuous improvement and adaptation, leading to more accurate and effective interactions over time.
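As a rough illustration of user intent recognition combined with contextual query expansion, the sketch below picks an instruction template from simple keyword matches and prepends the most recent turns. The keyword lists, templates, and function names are all assumptions made for this example; a real application might use a classifier or the LLM itself to detect intent.

```python
from typing import List

# Hypothetical keyword lists; a production system would need something more robust
INTENT_KEYWORDS = {
    "install": ("install", "setup", "set up", "pip"),
    "troubleshoot": ("error", "fail", "exception", "crash"),
}

TEMPLATES = {
    "install": "You are a setup guide. Give numbered installation steps.",
    "troubleshoot": "You are a support engineer. Diagnose the problem, then propose a fix.",
    "general": "You are a knowledgeable assistant. Answer concisely.",
}


def detect_intent(query: str) -> str:
    """Return the first intent whose keywords appear in the query, else 'general'."""
    text = query.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "general"


def build_dynamic_prompt(query: str, history: List[str]) -> str:
    """Choose a template by intent and expand it with the last three turns."""
    parts = [TEMPLATES[detect_intent(query)]]
    if history:
        parts.append("Earlier in this conversation:\n" + "\n".join(history[-3:]))
    parts.append(f"Question: {query}")
    return "\n\n".join(parts)


print(build_dynamic_prompt(
    "Why does it crash on import?",
    ["User: I installed LangChain yesterday.", "AI: Great, let me know how it goes."],
))
```

The same query produces a different prompt depending on its wording and on what was said earlier, which is the core idea behind dynamic prompting.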

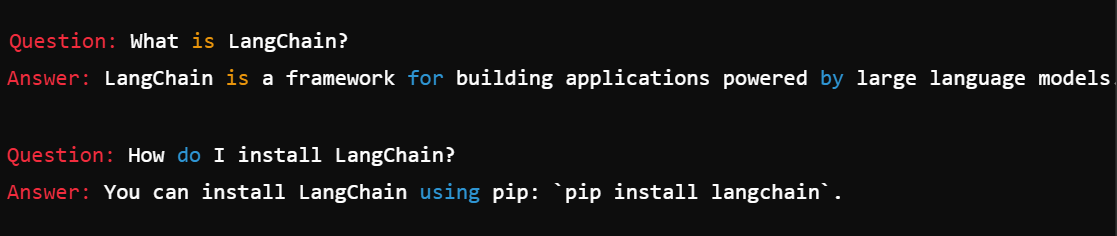

Example: Dynamic FAQ Generator

    faqs = {
        "What is LangChain?": "LangChain is a framework for building applications powered by large language models.",
        "How do I install LangChain?": "You can install LangChain using pip: `pip install langchain`."
    }

    def generate_prompt(question):
        # Build the prompt from the question at call time
        return f"""
    You are a knowledgeable assistant. Answer the following question:
    Question: {question}
    """

    for question in faqs:
        prompt = generate_prompt(question)
        response = model.invoke(prompt)
        print(f"Question: {question}\nAnswer: {response}\n")

Context-Aware Prompts

Context-aware prompts represent a sophisticated approach to engaging with language models, in which the prompt adjusts dynamically based on the context of the conversation or the task at hand. Unlike static prompts, which remain fixed throughout the interaction, context-aware prompts evolve and adapt in real time, enabling more nuanced and relevant interactions with the model. This technique leverages the contextual information within the interaction to guide the model’s responses and helps generate output that is coherent, accurate, and aligned with the user’s expectations.

Applications of Context-Aware Prompts

- Conversational Assistants: Context-aware prompts are essential for building conversational assistants that engage in natural, contextually relevant dialogues with users, providing personalized assistance and information retrieval.

- Task-Oriented Dialog Systems: In task-oriented dialog systems, context-aware prompts enable the model to understand and respond to user queries in the context of the specific task or domain and guide the conversation toward achieving the desired goal.

- Interactive Storytelling: Context-aware prompts can enhance interactive storytelling experiences by adapting the narrative based on the user’s choices and actions, ensuring a personalized and immersive storytelling experience.

- Customer Support Systems: Context-aware prompts can improve the effectiveness of customer support systems by tailoring the responses to the user’s query and historical interactions, providing relevant and helpful assistance.

Techniques for Context-Aware Prompts

- Contextual Information Integration: Context-aware prompts take contextual information from the ongoing conversation, including previous messages, user intent, and relevant external data sources. This contextual information enriches the prompt, giving the model a deeper understanding of the conversation’s context and enabling more informed responses.

- Contextual Prompt Expansion: Context-aware prompts dynamically expand and adapt based on the evolving conversation, adding new information and adjusting the prompt’s structure as needed. This flexibility allows the prompt to remain relevant and responsive throughout the interaction and guides the model toward producing coherent and contextually appropriate responses.

- Contextual Prompt Refinement: As the conversation progresses, context-aware prompts may undergo iterative refinement based on feedback from the model’s responses and the user’s input. This iterative process allows developers to continuously adjust and optimize the prompt so that it accurately captures the evolving context of the conversation.

- Multi-Turn Context Retention: Context-aware prompts maintain a memory of previous interactions and add this historical context to the prompt. This enables the model to generate responses that are coherent with the ongoing dialogue, so the conversation stays more informed and consistent than one built from isolated messages.
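Multi-turn context retention can be sketched without any framework: keep the turns in a list and include only the most recent ones in each prompt so the context stays within the model’s input limit. The `build_context_prompt` helper and the `max_turns` parameter below are illustrative assumptions, not LangChain APIs.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, text)


def build_context_prompt(turns: List[Turn], max_turns: int = 4) -> str:
    """Build a prompt from the most recent turns of a conversation."""
    if max_turns <= 0:
        raise ValueError("max_turns must be positive")
    # Sliding window: older turns are dropped, recent ones are kept verbatim
    recent = turns[-max_turns:]
    context = "\n".join(f"{speaker}: {text}" for speaker, text in recent)
    return (
        "Continue the conversation based on the following context:\n\n"
        f"{context}\n"
        "AI:"
    )


conversation = [
    ("User", "Hi, who won the 2020 US presidential election?"),
    ("AI", "Joe Biden won the 2020 US presidential election."),
    ("User", "What were his major campaign promises?"),
]
print(build_context_prompt(conversation, max_turns=2))
```

A fixed window is the simplest policy. Summarizing the dropped turns instead of discarding them is a common alternative when early context still matters.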

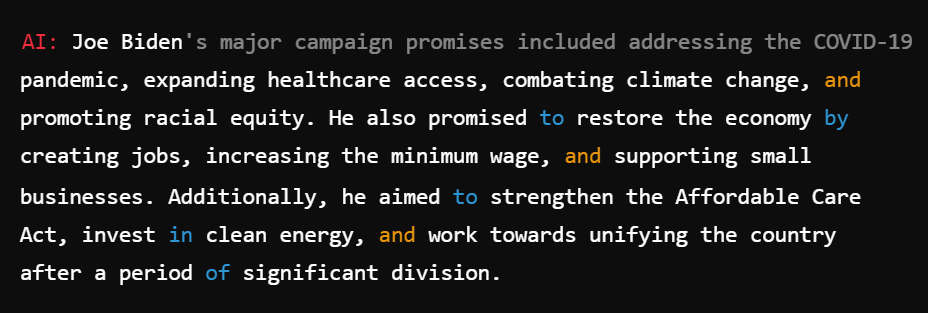

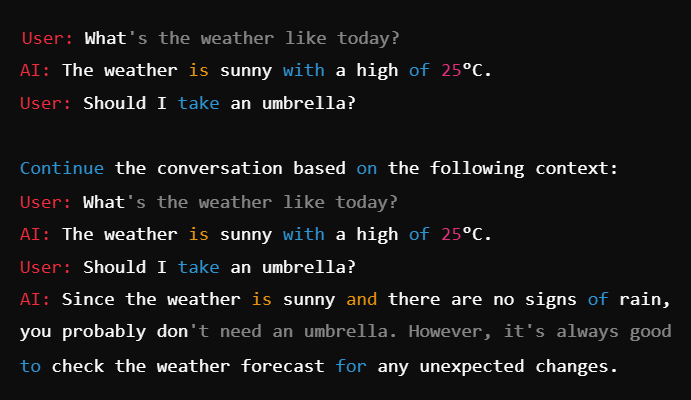

Example: Contextual Conversation

    conversation = [
        "User: Hi, who won the 2020 US presidential election?",
        "AI: Joe Biden won the 2020 US presidential election.",
        "User: What were his major campaign promises?"
    ]

    # Join the turns with newlines so each message sits on its own line
    context = "\n".join(conversation)

    prompt = f"""
    Continue the conversation based on the following context:
    {context}
    AI:
    """

    response = model.invoke(prompt)
    print(response)

Meta-Prompting

Meta-prompting is used to enhance the sophistication and adaptability of language models. Unlike conventional prompts, which provide explicit instructions or queries to the model, meta-prompts operate at a higher level of abstraction, guiding the model in generating or refining prompts autonomously. This meta-level guidance lets the model adjust its prompting strategy dynamically based on the task requirements, user interactions, and internal state, which fosters a more agile and responsive conversation.

Applications of Meta-Prompting

- Adaptive Prompt Engineering: Meta-prompting allows the model to adjust its prompting strategy dynamically based on the task requirements and the user’s input, leading to more adaptive and contextually relevant interactions.

- Creative Prompt Generation: Meta-prompting lets the model explore the space of possible prompts, enabling it to generate diverse and innovative prompts that open up new directions of thought and expression.

- Task-Specific Prompt Generation: Meta-prompting enables the generation of prompts tailored to specific tasks or domains, ensuring that the model’s responses align with the user’s intentions and the task’s requirements.

- Autonomous Prompt Refinement: Meta-prompting allows the model to refine prompts autonomously based on feedback and experience. This helps the model continuously improve its prompting strategy.


Techniques for Meta-Prompting

- Prompt Generation by Example: Meta-prompting can involve generating prompts based on examples provided by the user from the task context. By analyzing these examples, the model identifies relevant patterns and structures that inform the generation of new prompts tailored to the task’s specific requirements.

- Prompt Refinement through Feedback: Meta-prompting allows the model to refine prompts iteratively based on feedback from its own responses and the user’s input. This feedback loop allows the model to learn from its mistakes and adjust its prompting strategy to improve the quality of its output over time.

- Prompt Generation from Task Descriptions: Meta-prompting can use natural language understanding techniques to extract key information from task descriptions or user queries and use this information to generate prompts tailored to the task at hand. This ensures that the generated prompts are aligned with the user’s intentions and the specific requirements of the task.

- Prompt Generation Based on Model State: Meta-prompting generates prompts by taking into account the internal state of the model, including its knowledge base, memory, and inference capabilities. By leveraging its existing knowledge and reasoning abilities, the model can generate prompts that are contextually relevant and aligned with its current state of understanding.
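Prompt generation by example can be sketched as follows: a few input/output pairs are folded into a meta-prompt, and the model is asked to write a reusable prompt that would reproduce that behavior. The helper names and the `fake_llm` stand-in are assumptions for illustration; in practice you would pass a function that calls your real model.

```python
from typing import Callable, List, Tuple


def build_meta_prompt(task: str, examples: List[Tuple[str, str]]) -> str:
    """Fold a task description and example pairs into a prompt-writing request."""
    shots = "\n".join(
        f"Input: {inp}\nDesired output: {out}" for inp, out in examples
    )
    return (
        "You are an expert in prompt engineering.\n"
        f"Task: {task}\n"
        f"Examples of the desired behavior:\n{shots}\n"
        "Write a reusable prompt that would make a model produce outputs like these.\n"
        "Prompt:"
    )


def generate_prompt(
    llm: Callable[[str], str], task: str, examples: List[Tuple[str, str]]
) -> str:
    """Ask the model for a prompt and strip surrounding whitespace."""
    return llm(build_meta_prompt(task, examples)).strip()


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in so the sketch runs offline; replace with a real model call
    return "  Summarize the article below in three bullet points.\n"


examples = [("<long news article>", "- point one\n- point two\n- point three")]
print(generate_prompt(fake_llm, "Summarize the key points of a news article.", examples))
```

The generated prompt is ordinary text, so it can be reviewed, edited, and fed back through the same function with new examples as part of a refinement loop.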

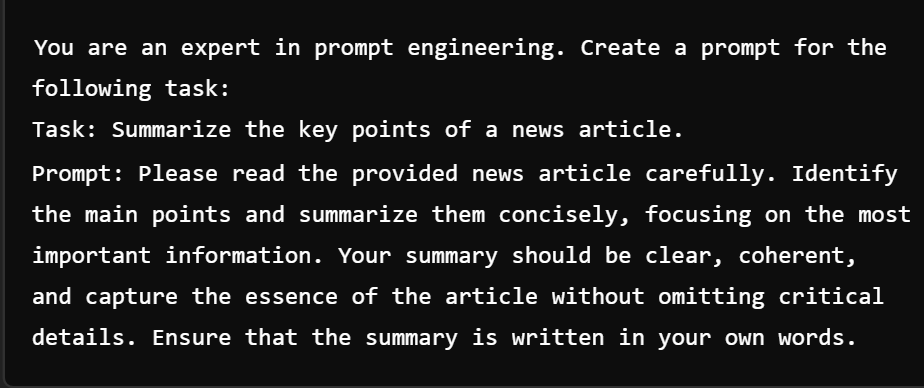

Example: Generating Prompts for a Task

    task_description = "Summarize the key points of a news article."

    # The meta-prompt asks the model to write a prompt rather than solve the task
    meta_prompt = f"""
    You are an expert in prompt engineering. Create a prompt for the following task:
    Task: {task_description}
    Prompt:
    """

    response = model.invoke(meta_prompt)
    print(response)

Leveraging Memory and State

Leveraging memory and state lets a language model application retain context and information across interactions. This enables more human-like behaviors, such as maintaining conversational context, tracking dialogue history, and adapting responses based on previous interactions. By adding memory and state mechanisms to the prompting process, developers can create more coherent, context-aware, and responsive interactions with language models.

Applications of Leveraging Memory and State

- Contextual Conversational Agents: Memory and state mechanisms enable language models to act as context-aware conversational agents, maintaining context across interactions and producing responses that are coherent with the ongoing dialogue.

- Personalized Recommendations: With memory, language models can provide personalized recommendations tailored to the user’s preferences and past interactions, improving the relevance and effectiveness of recommendation systems.

- Adaptive Learning Environments: Memory can enhance interactive learning environments by tracking learners’ progress and adapting the learning content based on their needs and learning trajectory.

- Dynamic Task Execution: Language models can execute complex tasks over multiple interactions while coordinating their actions and responses based on the task’s evolving context.

Techniques for Leveraging Memory and State

- Conversation History Tracking: Language models can maintain a memory of previous messages exchanged during a conversation, which allows them to retain context and track the dialogue history. By referencing this conversation history, models can generate more coherent and contextually relevant responses that build upon earlier interactions.

- Contextual Memory Integration: Memory mechanisms can be integrated into the prompting process to give the model access to relevant contextual information. This helps developers guide the model’s responses based on its past experiences and interactions.

- Stateful Prompt Generation: State management techniques allow language models to maintain an internal state that evolves throughout the interaction. Developers can tailor the prompting strategy to the model’s internal context so that the generated prompts align with its current knowledge and understanding.

- Dynamic State Update: Language models can update their internal state dynamically based on new information received during the interaction. Here, the model continuously updates its state in response to user inputs and model outputs, adapting its behavior in real time and improving its ability to generate contextually relevant responses.
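The sketch below shows one way to combine conversation history tracking with dynamic state updates in plain Python. The `ConversationState` class, its `facts` dictionary, and its method names are hypothetical and exist only for this illustration; LangChain’s own memory classes provide comparable behavior with a different interface.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ConversationState:
    """Holds the dialogue history plus key facts learned about the user."""

    history: List[str] = field(default_factory=list)
    facts: Dict[str, str] = field(default_factory=dict)

    def add_turn(self, speaker: str, text: str) -> None:
        self.history.append(f"{speaker}: {text}")

    def remember(self, key: str, value: str) -> None:
        # Dynamic state update: a newer value replaces the older one
        self.facts[key] = value

    def to_prompt(self, user_message: str) -> str:
        facts = "\n".join(f"- {k}: {v}" for k, v in self.facts.items()) or "- (none)"
        history = "\n".join(self.history) or "(no previous messages)"
        return (
            f"Known facts about the user:\n{facts}\n\n"
            f"Conversation so far:\n{history}\n\n"
            f"User: {user_message}\nAI:"
        )


state = ConversationState()
state.add_turn("User", "What's the weather like today?")
state.add_turn("AI", "The weather is sunny with a high of 25°C.")
state.remember("city", "Paris")
state.remember("city", "Berlin")  # the user corrected their location
print(state.to_prompt("Should I take an umbrella?"))
```

Separating long-lived facts from the raw history means the facts survive even if older turns are later trimmed from the prompt.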

Example: Maintaining State in Conversations

    # Assumes a LangChain release that still ships langchain.memory
    from langchain.memory import ConversationBufferMemory

    memory = ConversationBufferMemory()

    # Record the earlier turns in the memory's chat history
    memory.chat_memory.add_user_message("What's the weather like today?")
    memory.chat_memory.add_ai_message("The weather is sunny with a high of 25°C.")
    memory.chat_memory.add_user_message("Should I take an umbrella?")

    # The buffered conversation is returned under the default "history" key
    history = memory.load_memory_variables({})["history"]

    prompt = f"""
    Continue the conversation based on the following context:
    {history}
    AI:
    """

    response = model.invoke(prompt)
    print(response)

Practical Examples

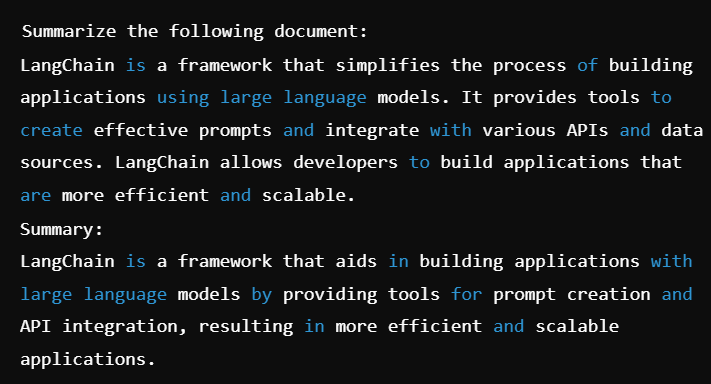

Example 1: Advanced Text Summarization

Using dynamic and context-aware prompting to summarize complex documents.

    document = """
    LangChain is a framework that simplifies the process of building applications using large language models. It provides tools to create effective prompts and integrate with various APIs and data sources. LangChain allows developers to build applications that are more efficient and scalable.
    """

    # Insert the document into the prompt at run time
    prompt = f"""
    Summarize the following document:
    {document}
    Summary:
    """

    response = model.invoke(prompt)
    print(response)
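For documents too long to fit in one prompt, a common pattern is to summarize chunks separately and then combine the partial summaries. The sketch below assumes a word-based chunk size and takes the model as a plain callable. The names `split_into_chunks`, `summarize_long`, and `fake_llm` are hypothetical, and the stand-in model only makes the example runnable offline.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, llm: Callable[[str], str], max_words: int = 200) -> str:
    """Summarize each chunk, then ask the model to merge the partial summaries."""
    chunks = split_into_chunks(text, max_words)
    if not chunks:
        return ""
    partials = []
    for index, chunk in enumerate(chunks, start=1):
        # Each prompt tells the model which part of the document it is seeing
        prompt = (
            f"Summarize part {index} of {len(chunks)} of a document in one sentence.\n\n"
            f"{chunk}\n\nSummary:"
        )
        partials.append(llm(prompt).strip())
    if len(partials) == 1:
        return partials[0]
    combined = "\n".join(f"- {p}" for p in partials)
    return llm(
        f"Combine these partial summaries into one short summary:\n{combined}\n\nSummary:"
    ).strip()


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in; replace with a function that calls your model
    return f"[summary of {len(prompt.split())} words]"


print(summarize_long("LangChain simplifies building LLM applications. " * 50, fake_llm, max_words=100))
```

Splitting on words is only an approximation of the model’s token limit, so a real application would likely size chunks with a tokenizer.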

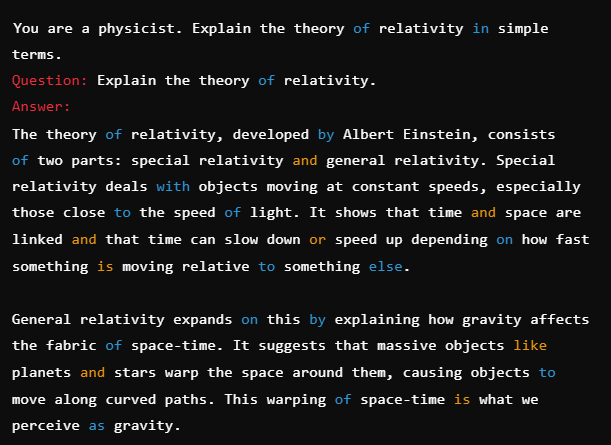

Example 2: Complex Question Answering

Combining multi-step reasoning and context-aware prompts for detailed Q&A.

    question = "Explain the theory of relativity."

    # The role line sets the persona; the question is filled in at run time
    prompt = f"""
    You are a physicist. Explain the theory of relativity in simple terms.
    Question: {question}
    Answer:
    """

    response = model.invoke(prompt)
    print(response)
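One way to bring multi-step reasoning into this example is to split it into two calls: first ask for the key ideas, then feed that outline back as context for the final answer. The `answer_with_outline` helper, its `audience` parameter, and the `fake_llm` stand-in below are assumptions made for illustration only.

```python
from typing import Callable, Tuple


def answer_with_outline(
    question: str, llm: Callable[[str], str], audience: str = "a general reader"
) -> Tuple[str, str]:
    """Return the final prompt and the answer produced from a two-step chain."""
    # Step 1: ask only for the key ideas
    outline = llm(
        "List the key ideas needed to answer the question below, one per line.\n\n"
        f"Question: {question}\nKey ideas:"
    ).strip()
    # Step 2: use the outline as context for the detailed answer
    final_prompt = (
        f"You are a physicist writing for {audience}.\n"
        f"Question: {question}\n"
        f"Cover these ideas in order:\n{outline}\n"
        "Answer:"
    )
    return final_prompt, llm(final_prompt).strip()


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in that returns canned text so the sketch runs offline
    if prompt.rstrip().endswith("Key ideas:"):
        return "1. Space and time are linked\n2. Gravity curves spacetime"
    return "(model answer would appear here)"


final_prompt, answer = answer_with_outline("Explain the theory of relativity.", fake_llm)
print(final_prompt)
print(answer)
```

The intermediate outline is visible to the developer, which makes it easier to see where a weak answer went wrong and to refine either step independently.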

Conclusion

Advanced prompt engineering with LangChain helps developers build robust, context-aware applications that leverage the full potential of large language models. Continuous experimentation and refinement of prompts are essential for achieving optimal results.


Key Takeaways

- Advanced Prompt Structuring: Guides the model through multi-step reasoning with contextual cues.

- Dynamic Prompting: Adjusts prompts based on real-time context and user interactions.

- Context-Aware Prompts: Evolve with the conversation to maintain relevance and coherence.

- Meta-Prompting: Generates and refines prompts autonomously, leveraging the model’s capabilities.

- Leveraging Memory and State: Maintains context and information across interactions for coherent responses.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Frequently Asked Questions

A. LangChain can integrate with APIs and data sources to dynamically adjust prompts based on real-time user input or external data. You can create highly adaptive and context-aware interactions by programmatically constructing prompts that incorporate this information.

A. LangChain provides memory management capabilities that allow you to store and retrieve context across multiple interactions, which is essential for creating conversational agents that remember user preferences and past interactions.

A. Handling ambiguous or unclear queries requires designing prompts that guide the model in seeking clarification or providing context-aware responses. Best practices include:

a. Explicitly Asking for Clarification: Prompt the model to ask follow-up questions.

b. Providing Multiple Interpretations: Design prompts that allow the model to present different interpretations.
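Both practices can be expressed in a single prompt template. The following is a minimal sketch; the function name `build_clarifying_prompt` and the wording of the instructions are assumptions that you would tune for your own application.

```python
def build_clarifying_prompt(query: str, max_interpretations: int = 2) -> str:
    """Wrap a user query with instructions for handling ambiguity."""
    return (
        "You are a careful assistant.\n"
        "If the question below is ambiguous, do not guess. Instead:\n"
        f"1. List up to {max_interpretations} plausible interpretations.\n"
        "2. Ask one follow-up question that would resolve the ambiguity.\n"
        "If the question is clear, answer it directly.\n\n"
        f"Question: {query}\n"
        "Response:"
    )


print(build_clarifying_prompt("How do I reset it?"))
```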

A. Meta-prompting leverages the model’s own capabilities to generate or refine prompts, enhancing the overall application performance. This can be particularly useful for creating adaptive systems that optimize behavior based on feedback and performance metrics.

A. Integrating LangChain with existing machine learning models and workflows involves using its flexible API to combine outputs from various models and data sources, creating a cohesive system that leverages the strengths of multiple components.