Introduction

Clustering is an unsupervised machine studying algorithm that teams collectively related information factors primarily based on standards like shared attributes. Every cluster has information factors which are much like the opposite information factors within the cluster whereas as an entire, the cluster is dissimilar to different information factors. By making use of clustering algorithms, we will uncover hidden buildings, patterns, and correlations within the information. Fuzzy C Means (FCM) is one among the many number of clustering algorithms. What makes it stand out as a strong clustering approach is that it might deal with complicated, overlapping clusters. Allow us to perceive this method higher by means of this text.

Studying Targets

- Perceive what Fuzzy C Means is.

- Know the way the Fuzzy C Means algorithm works.

- Be capable of differentiate between Fuzzy C Means and Okay Means.

- Study to implement Fuzzy C Means utilizing Python.

This text was revealed as part of the Knowledge Science Blogathon.

What’s Fuzzy C Means?

Fuzzy C Means is a mushy clustering approach through which each information level is assigned a cluster together with the likelihood of it being within the cluster.

However wait! What’s mushy clustering?

Earlier than entering into Fuzzy C Means, allow us to perceive what mushy clustering means and the way it’s totally different from laborious clustering.

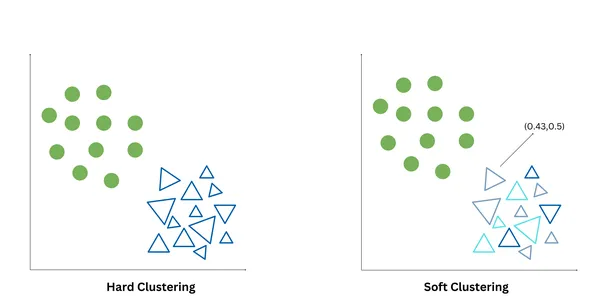

Laborious clustering and mushy clustering are two other ways to partition information factors into clusters. Laborious clustering, often known as crisp clustering, assigns every information level precisely to at least one cluster, primarily based on some standards like for instance – the proximity of the info level to the cluster centroid. It produces non-overlapping clusters. Okay-Means is an instance of laborious clustering.

Smooth clustering, often known as fuzzy clustering or probabilistic clustering, assigns every information level a level of membership/likelihood values that point out the chance of an information level belonging to every cluster. Smooth clustering permits the illustration of information factors which will belong to a number of clusters. Fuzzy C Means and Gaussian Combined Fashions are examples of Smooth clustering.

Working of Fuzzy C Means

Now that we’re clear with the distinction in laborious and mushy clustering, allow us to perceive the working of the Fuzzy C Means algorithm.

Methods to Run the FCM Algorithm

- Initialization: Randomly select and initialize cluster centroids from the info set and specify a fuzziness parameter (m) to manage the diploma of fuzziness within the clustering.

- Membership Replace: Calculate the diploma of membership for every information level to every cluster primarily based on its distance to the cluster centroids utilizing a distance metric (ex: Euclidean distance).

- Centroid Replace: Replace the centroid worth and recalculate the cluster centroids primarily based on the up to date membership values.

- Convergence Examine: Repeat steps 2 and three till a specified variety of iterations is reached or the membership values and centroids converge to steady values.

The Maths Behind Fuzzy C Means

In a conventional k-means algorithm, we mathematically clear up it by way of the next steps:

- Randomly initialize the cluster facilities, primarily based on the k-value.

- Calculate the space to every centroid utilizing a distance metric. Ex: Euclidean distance, Manhattan distance.

- Assign the clusters to every information level after which kind k-clusters.

- For every cluster, compute the imply of the info factors belonging to that cluster after which replace the centroid of every cluster.

- Replace till the centroids don’t change or a pre-defined variety of iterations are over.

However in Fuzzy C-Means, the algorithm differs.

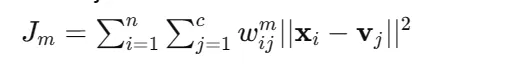

1. Our goal is to reduce the target operate which is as follows:

Right here:

n = variety of information level

c = variety of clusters

x = ‘i’ information level

v = centroid of ‘j’ cluster

w = membership worth of information level of i to cluster j

m = fuzziness parameter (m>1)

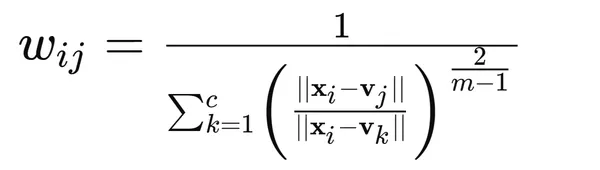

2. Replace the membership values utilizing the formulation:

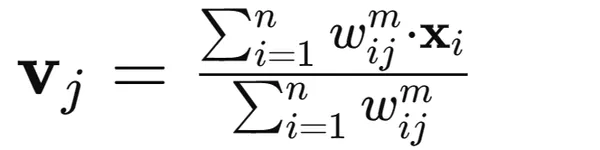

3. Replace cluster centroid values utilizing a weighted common of the info factors:

4. Hold updating the membership values and the cluster facilities till the membership values and cluster facilities cease altering considerably or when a predefined variety of iterations is reached.

5. Assign every information level to the cluster or a number of clusters for which it has the very best membership worth.

How is Fuzzy C Means Completely different from Okay-Means?

There are numerous variations in each these clustering algorithms. Just a few of them are:

| Fuzzy C Means | Okay-Means |

| Every information level is assigned a level of membership to every cluster, indicating the likelihood or chance of the purpose belonging to every cluster. | Every information level is solely assigned to at least one and just one cluster, primarily based on the closest centroid, sometimes decided utilizing Euclidean distance. |

| It doesn’t impose any constraints on the form or variance of clusters. It will possibly deal with clusters of various styles and sizes, making it extra versatile. | It assumes that clusters are spherical and have equal variance. Thus it could not carry out properly with clusters of non-spherical shapes or various sizes. |

| It’s much less delicate to noise and outliers because it permits for mushy, probabilistic cluster assignments. | It’s delicate to noise and outliers within the information |

Implementation of FCM Utilizing Python

Allow us to now implement Fuzzy C Means utilizing Python.

I’ve downloaded the dataset from the next supply: mall_customers.csv · GitHub

!pip set up scikit-fuzzy

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import skfuzzy as fuzz

from sklearn.preprocessing import StandardScaler

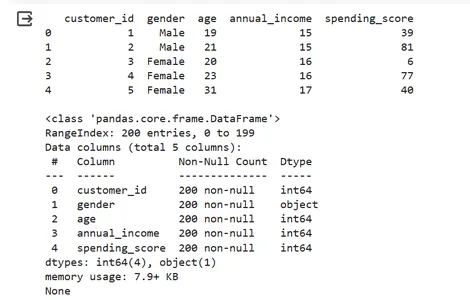

###Load and discover the dataset

information = pd.read_csv("/content material/mall_customers.csv")

# Show the primary few rows of the dataset and examine for lacking values

print(information.head(),"n")

print(information.data())

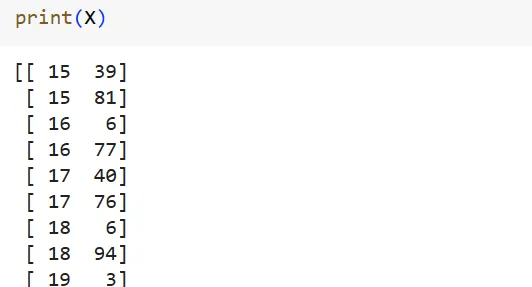

# Preprocess the info

X = information[['Annual Income (k$)', 'Spending Score (1-100)']].values

print(X)

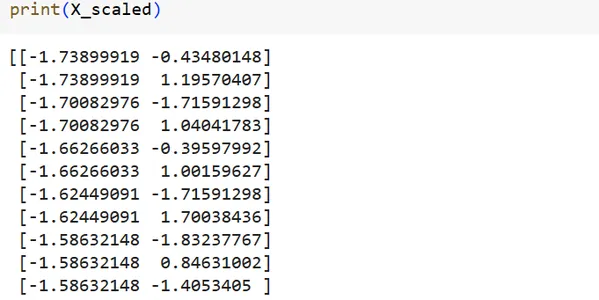

# Scale the options

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

print(X_scaled)

#Apply Fuzzy C Means clustering

n_clusters = 5 # Variety of clusters

m = 2 # Fuzziness parameter

cntr, u, u0,d,jm,p, fpc = fuzz.cluster.cmeans(

X_scaled.T, n_clusters, m, error=0.005, maxiter=1000, init=None

)

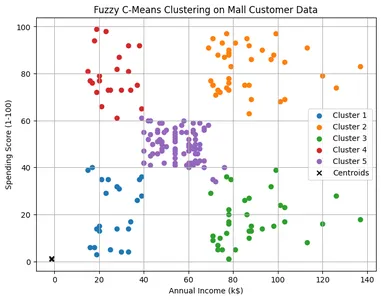

# Visualize the clusters

cluster_membership = np.argmax(u, axis=0)

plt.determine(figsize=(8, 6))

for i in vary(n_clusters):

plt.scatter(X[cluster_membership == i, 0], X[cluster_membership == i, 1], label=f'Cluster {i+1}')

plt.scatter(cntr[0], cntr[1], marker="x", shade="black", label="Centroids")

plt.title('Fuzzy C-Means Clustering on Mall Buyer Knowledge')

plt.xlabel('Annual Earnings (okay$)')

plt.ylabel('Spending Rating (1-100)')

plt.legend()

plt.grid(True)

plt.present()Right here:

- information: The enter information matrix, the place every row represents an information level and every column represents a characteristic.

- clusters: The variety of clusters to be shaped.

- m: The fuzziness exponent, which controls the diploma of fuzziness within the clustering.

- error: The termination criterion specifies the minimal change within the partition matrix (u) between consecutive iterations. If the change falls beneath this threshold, the algorithm terminates.

- maxiter: The utmost variety of iterations allowed for the algorithm to converge. If the algorithm doesn’t converge inside this restrict, it terminates prematurely.

- init: The preliminary cluster facilities. If None, random initialization is used.

The operate returns the next:

- u: The ultimate fuzzy partition matrix, the place every component u[i, j] represents the diploma of membership.

- u0: The preliminary fuzzy partition matrix.

- d: The ultimate distance matrix, the place every component d[i, j] represents the space between the i-th information level and the j-th cluster centroid.

- jm: The target operate worth at every iteration of the algorithm.

- p: The ultimate variety of iterations carried out by the algorithm.

- fpc: The fuzzy partition coefficient (FPC), which measures the standard of the clustering resolution.

Output:

Purposes of FCM

Listed here are the 5 commonest functions of the FCM algorithm:

- Picture Segmentation: Segmenting photographs into significant areas primarily based on pixel intensities.

- Sample Recognition: Recognizing patterns and buildings in datasets with complicated relationships.

- Medical Imaging: Analyzing medical photographs to establish areas of curiosity or anomalies.

- Buyer Segmentation: Segmenting clients primarily based on their buying habits.

- Bioinformatics: Clustering gene expression information to establish co-expressed genes with related capabilities.

Benefits and Disadvantages of FCM

Now, let’s focus on the benefits and downsides of utilizing Fuzzy C Means.

Benefits

- Robustness to Noise: FCM is much less delicate to outliers and noise in comparison with conventional clustering algorithms.

- Smooth Assignments: Offers mushy, probabilistic assignments.

- Flexibility: Can accommodate overlapping clusters and ranging levels of cluster membership.

Limitations

- Sensitivity to Initializations: Efficiency is delicate to the preliminary placement of cluster centroids.

- Computational Complexity: The iterative nature of FCM can enhance computational expense, particularly for big datasets.

- Number of Parameters: Selecting acceptable values for parameters such because the fuzziness parameter (m) can influence the standard of the clustering outcomes.

Conclusion

Fuzzy C Means is a clustering algorithm that may be very various and fairly highly effective in uncovering hidden meanings (within the type of patterns) in information, providing flexibility in dealing with complicated datasets. It may be thought-about a greater algorithm in comparison with the k-means algorithm. By understanding its rules, functions, benefits, and limitations, information scientists and practitioners can leverage this clustering algorithm successfully to extract precious insights from their information, making well-informed selections.

Key Takeaways

- Fuzzy C Means is a mushy clustering approach permitting for probabilistic cluster assignments, contrasting with the unique assignments of laborious clustering algorithms like Okay-Means.

- It iteratively updates cluster membership and centroids, minimizing an goal operate to realize convergence and uncover complicated, overlapping clusters.

- Not like Okay-Means, FCM is much less delicate to noise and outliers on account of its probabilistic method, making it appropriate for datasets with assorted buildings.

- Python implementation of FCM utilizing libraries like scikit-fuzzy permits practitioners to use this method effectively to real-world datasets, facilitating information evaluation and decision-making.

Continuously Requested Questions

A. Fuzzy C Means is a clustering algorithm that goals to enhance the present Okay-Means algorithm by permitting mushy assignments of clusters to information factors, primarily based on the diploma of membership/likelihood values in order that information factors can belong to a number of clusters.

A. Jim Bezdek developed the final case in 1973 for any m>1. He developed this in his PhD thesis at Cornell College. Joe Dunn first reported the FCM algorithm in 1974 for a particular case (m=2).

A. Fuzziness parameter controls the diploma of fuzziness or uncertainty in cluster assignments. For instance: if m=1, then the info level will belong solely to at least one cluster. Whereas, if m=2, then the info level has a level of membership equal to 0.8 for one cluster, and a membership worth equal to 0.2 for one more cluster. This means that there’s a excessive probability of the info level belonging to cluster 1 whereas additionally an opportunity of it belonging to cluster 2.

A. They’re up to date iteratively by performing a weighted common of the info factors the place the weights are the membership values of every information level.

A. Sure! A number of the fuzzy clustering algorithms other than FCM are the Gustafson-Kessel algorithm and the Gath-Geva algorithm.

The media proven on this article on Knowledge Visualization Instruments are usually not owned by Analytics Vidhya and is used on the Writer’s discretion.