There have been a lot of moments in my career in AI when I've been blown away by the progress mankind has made in the field. I recall the first time I saw object detection/recognition being performed at a near-human level of accuracy by Convolutional Neural Networks (CNNs). I'm pretty sure it was this picture from Google's MobileNet (mid 2017) that affected me so much that I needed to catch my breath and immediately afterwards exclaim "No way!" (insert expletive in that phrase, too):

When I first started out in Computer Vision way back in 2004, I was adamant that object recognition at this level of expertise and speed would be simply impossible for a machine to achieve because of the inherent level of complexity involved. I was truly convinced of this. There were just too many parameters for a machine to handle! And yet, there I was being proven wrong. It was an incredible moment of awe, one which I frequently recount to my students when I lecture on AI.

Since then, I've learnt not to underestimate the power of science. But I still get caught out from time to time. Well, maybe not caught out (because I really did learn my lesson) but more like blown away.

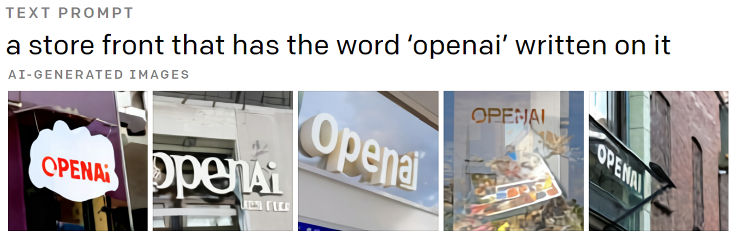

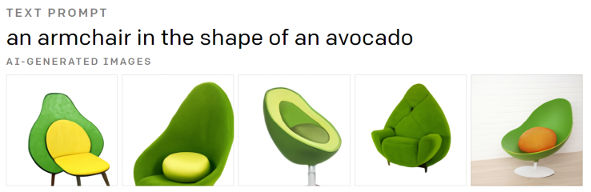

The second memorable moment in my career when I pushed my swivel chair away from my desk and once more exclaimed "No way!" (insert expletive there again) was when I saw text-to-image translation (you provide a text prompt and a machine creates images based on it) being performed by DALL-E in January of 2021. For example:

I wrote about DALL-E's initial capabilities at the end of this post on GPT-3. Since then, OpenAI has released DALL-E 2, which is even more awe-inspiring. But that initial moment in January of last year will forever be ingrained in my mind, because a machine creating images from scratch based on text input is something truly remarkable.

This year, we've seen text-to-image translation become mainstream. It's been on the news, John Oliver made a video about it, various open-source implementations have been released to the general public (e.g. DeepAI: try it out yourself!), and it has achieved some milestones. For example, Cosmopolitan magazine used a DALL-E 2 generated image as the cover of one of its special issues:

That does look groovy, you have to admit.

My third "No way!" moment (with expletive, of course) happened only a few weeks ago. It happened when I realised that text-to-video translation (you provide a text prompt and a machine creates a video based on it) is also on its way to potentially becoming mainstream. Four weeks ago (Oct 2022) Google announced ImagenVideo and shortly afterwards also published another solution called Phenaki. A month before this, Meta announced its text-to-video application called Make-A-Video (Sep 2022), which in turn was preceded by CogVideo from Tsinghua University (May 2022).

All of these solutions are in their infancy. Apart from Phenaki, videos generated from an initial text input/instruction are only a few seconds long. None of the generated videos have audio. The results aren't perfect, with distortions (aka artefacts) clearly visible. And the videos that we have seen have undoubtedly been cherry-picked (CogVideo, however, has been released as open source to the public, so one can try it out oneself). But hey, the videos aren't bad either! You have to start somewhere, right?

Let's take a look at some examples generated by these four models. Remember, this is a machine creating videos purely from text input, nothing else.

CogVideo from Tsinghua University

Text prompt: "A happy dog" (video source)

Here is a whole series of videos created by the model, presented on the official GitHub site (you may need to press "play" to see the videos in motion):

As I mentioned earlier, CogVideo is available as open-source software, so you can download the model yourself and run it on your own machine if you have an A100 GPU. You can also play around with an online demo here. The only downside of this model is that it accepts only simplified Chinese as text input, so you'll need to get Google Translate up and running, too, if you're not familiar with the language.

Make-A-Video from Meta

Some example videos generated from text input:

The other amazing features of Make-A-Video are that you can provide a still image and get the application to give it motion, or you can provide 2 still images and the application will "fill in" the motion between them, or you can provide a video and request different variations of this video to be produced.

Example: the left image is the input image, the right image shows the motion generated for it:

It's hard not to be impressed by this. However, as I mentioned earlier, these results are clearly cherry-picked. We don't have access to any API or code to produce our own creations.

ImagenVideo from Google

Google's first solution attempts to build on the quality of Meta's and Tsinghua University's releases. Firstly, the resolution of the videos has been upscaled to 1280×768 at 24 fps (frames per second). Meta's videos are created at 256×256 resolution by default. Meta mentions, however, that the maximum resolution can be set to 768×768 at 16 fps. CogVideo has similar limitations on its generated videos.
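To put those resolution and frame-rate figures side by side, here is a small back-of-the-envelope sketch that multiplies width × height × fps to get the number of pixels each model has to produce per second of video. It uses 1280×768 at 24 fps for ImagenVideo (the figure reported in Google's Imagen Video paper) and Meta's stated maximum of 768×768 at 16 fps for Make-A-Video. The helper name `pixels_per_second` is purely illustrative, and raw pixel count is only a rough proxy for output size, not for visual quality.

```python
# Back-of-the-envelope comparison of raw pixel throughput per second of video.
# Figures: ImagenVideo 1280x768 @ 24 fps (Imagen Video paper),
#          Make-A-Video 768x768 @ 16 fps (Meta's stated maximum).
# pixels_per_second is a hypothetical helper used only for illustration.

def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels a model must generate for one second of video."""
    return width * height * fps

imagen_video = pixels_per_second(1280, 768, 24)
make_a_video_max = pixels_per_second(768, 768, 16)

print(f"ImagenVideo:      {imagen_video:,} pixels/s")
print(f"Make-A-Video max: {make_a_video_max:,} pixels/s")
print(f"Ratio: {imagen_video / make_a_video_max:.1f}x")
```

Running it shows ImagenVideo producing 23,592,960 pixels per second against 9,437,184 for Make-A-Video at its maximum setting, i.e. 2.5 times as many pixels for every second of footage.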

Here are some examples of ImagenVideo output released by Google:

Google claims that the generated videos surpass those of other state-of-the-art models. Supposedly, ImagenVideo has a better understanding of the 3D world and can also process much more complex text inputs. If you look at the examples presented by Google on the project's page, their claim doesn't appear to be unfounded.

Phenaki by Google

This is a solution that really blew my mind.

While ImagenVideo focused on quality, Phenaki, which was developed by a different team of Google researchers, focused on coherency and length. With Phenaki, a user can present a long list of prompts (rather than just one), which the system then turns into a film of arbitrary length. Similar kinds of glitches and jitteriness are visible in these generated clips, but the fact that videos over two minutes long can be created is just astounding (albeit at lower resolution). Truly.

Here are some examples:

Phenaki can also generate videos from single images, and these images can additionally be accompanied by text prompts. The following example uses the input image as its first frame and then builds on it by following the text prompt:

For more amazing examples like this (including a few 2+ minute videos), I'd encourage you to visit the project's page.

Moreover, word on the street is that the teams behind ImagenVideo and Phenaki are combining their strengths to produce something even better. Watch this space!

Conclusion

A few months ago I wrote two posts on this blog discussing why I think AI is starting to slow down (part 2 here) and arguing that there is evidence we're slowly beginning to hit the ceiling of AI's possibilities (unless new breakthroughs occur). I still stand by those posts because of the sheer amount of time and money required to train any of the large neural networks performing these feats. This is the main reason I was so astonished to see text-to-video models released so soon after we had only just gotten used to their text-to-image counterparts. I thought we were a long way off from this. But science found a way, didn't it?

So, what's next in store for us? What will cause another "No way!" moment for me? Text-to-music generation and text-to-video with audio would be nice, wouldn't they? I'll try to research these, see how far we are from them, and present my findings in a future post.
