Artificial intelligence, like any transformative technology, is a work in progress, continually growing in its capabilities and its societal impact. Trustworthy AI initiatives recognize the real-world effects that AI can have on people and society, and aim to channel that power responsibly for positive change.

What Is Trustworthy AI?

Trustworthy AI is an approach to AI development that prioritizes safety and transparency for those who interact with it. Developers of trustworthy AI understand that no model is perfect, and take steps to help customers and the general public understand how the technology was built, its intended use cases and its limitations.

In addition to complying with privacy and consumer protection laws, trustworthy AI models are tested for safety, security and mitigation of unwanted bias. They’re also transparent, providing information such as accuracy benchmarks or a description of the training dataset to various audiences, including regulatory authorities, developers and consumers.

Principles of Trustworthy AI

Trustworthy AI principles are foundational to NVIDIA’s end-to-end AI development. They have a simple goal: to enable trust and transparency in AI and support the work of partners, customers and developers.

Privacy: Complying With Regulations, Safeguarding Data

AI is often described as data hungry. Generally, the more data an algorithm is trained on, the more accurate its predictions.

But data has to come from somewhere. To develop trustworthy AI, it’s key to consider not just what data is legally available to use, but what data is socially responsible to use.

Developers of AI models that rely on data such as a person’s image, voice, artistic work or health records should evaluate whether individuals have provided appropriate consent for their personal information to be used in this way.

For institutions like hospitals and banks, building AI models means balancing the responsibility of keeping patient or customer data private with the need to train a robust algorithm. NVIDIA has created technology that enables federated learning, where researchers develop AI models trained on data from multiple institutions without confidential information leaving a company’s private servers.
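To make the federated idea concrete, here is a minimal sketch of federated averaging (FedAvg), one common federated learning algorithm. It is not NVIDIA FLARE’s API; the tiny linear model, the learning rate and the synthetic "hospital" datasets are all assumptions for illustration. Each client trains on its own records and sends back only model parameters, which the server averages, weighted by sample count.

```python
import random


def local_update(w, b, data, lr=0.05, epochs=5):
    """Train y = w*x + b on one client's private (x, y) pairs with gradient descent.

    Only the updated parameters are returned; the raw records never leave here.
    """
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(clients, rounds=100):
    """FedAvg: each round, clients train locally and the server averages their
    parameters, weighting each client by its number of samples."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [local_update(w, b, data) for data in clients]
        w = sum(len(data) / total * uw for data, (uw, _) in zip(clients, updates))
        b = sum(len(data) / total * ub for data, (_, ub) in zip(clients, updates))
    return w, b


if __name__ == "__main__":
    random.seed(0)

    def make_client(n):
        # Hypothetical stand-in for one institution's private data: y = 3x + 1 plus noise.
        xs = [random.uniform(-1, 1) for _ in range(n)]
        return [(x, 3 * x + 1 + random.gauss(0, 0.1)) for x in xs]

    hospitals = [make_client(n) for n in (40, 60, 100)]
    w, b = federated_averaging(hospitals)
    print(f"learned w={w:.2f}, b={b:.2f}")  # should land close to w=3, b=1
```

Production systems add secure aggregation, client sampling and much larger models, but the privacy property illustrated here is the same: the server only ever sees parameters.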

NVIDIA DGX systems and NVIDIA FLARE software have enabled several federated learning projects in healthcare and financial services, facilitating secure collaboration by multiple data providers on more accurate, generalizable AI models for medical image analysis and fraud detection.

Safety and Security: Avoiding Unintended Harm, Malicious Threats

Once deployed, AI systems have real-world impact, so it’s essential they perform as intended to preserve user safety.

The freedom to use publicly available AI algorithms creates immense possibilities for positive applications, but also means the technology can be used for unintended purposes.

To help mitigate risks, NVIDIA NeMo Guardrails keeps AI language models on track by allowing enterprise developers to set boundaries for their applications. Topical guardrails ensure that chatbots stick to specific subjects. Safety guardrails set limits on the language and data sources the apps use in their responses. Security guardrails seek to prevent malicious use of a large language model that’s connected to third-party applications or application programming interfaces.
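As a rough illustration of what a topical guardrail does, the sketch below checks a user message against an allowed scope before it ever reaches the model. It does not use or imitate the NeMo Guardrails API, which is configured through its own files; the billing scope, keyword list and `llm` callable are hypothetical, and keyword matching is a deliberate simplification (more robust rails use a classifier or the LLM itself to judge relevance).

```python
import re
from typing import Callable

# Hypothetical scope for a billing-support chatbot.
ALLOWED_KEYWORDS = {"bill", "billing", "invoice", "refund", "payment", "subscription"}
REFUSAL = "Sorry, I can only help with billing questions."


def is_on_topic(message: str) -> bool:
    """Naive topical check: does the message mention any allowed keyword?"""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return bool(words & ALLOWED_KEYWORDS)


def guarded_reply(message: str, llm: Callable[[str], str]) -> str:
    """Input rail: off-topic requests are refused before they reach the model."""
    if not is_on_topic(message):
        return REFUSAL
    return llm(message)


if __name__ == "__main__":
    echo_llm = lambda prompt: f"(model answer to: {prompt})"  # stand-in for a real LLM call
    print(guarded_reply("Can I get a refund for my last invoice?", echo_llm))
    print(guarded_reply("Write me a poem about the ocean.", echo_llm))
```

The design point is placement: the rail wraps the model call, so the application, not the model, decides which requests get answered.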

NVIDIA Research is working with the DARPA-run SemaFor program to help digital forensics experts identify AI-generated images. Last year, researchers published a novel method for addressing social bias using ChatGPT. They’re also creating methods for avatar fingerprinting: a way to detect if someone is using an AI-animated likeness of another individual without their consent.

To protect data and AI applications from security threats, NVIDIA H100 and H200 Tensor Core GPUs are built with confidential computing, which ensures sensitive data is protected while in use, whether deployed on premises, in the cloud or at the edge. NVIDIA Confidential Computing uses hardware-based security methods to ensure unauthorized entities can’t view or modify data or applications while they’re running, traditionally a time when data is left vulnerable.

Transparency: Making AI Explainable

To create a trustworthy AI model, the algorithm can’t be a black box: its creators, users and stakeholders must be able to understand how the AI works in order to trust its results.

Transparency in AI is a set of best practices, tools and design principles that helps users and other stakeholders understand how an AI model was trained and how it works. Explainable AI, or XAI, is a subset of transparency covering tools that inform stakeholders how an AI model makes certain predictions and decisions.

Transparency and XAI are crucial to establishing trust in AI systems, but there’s no universal solution to fit every kind of AI model and stakeholder. Finding the right solution involves a systematic approach to identify who the AI affects, analyze the associated risks and implement effective mechanisms to provide information about the AI system.

Retrieval-augmented generation, or RAG, is a technique that advances AI transparency by connecting generative AI services to authoritative external databases, enabling models to cite their sources and provide more accurate answers. NVIDIA is helping developers get started with a RAG workflow that uses the NVIDIA NeMo framework for developing and customizing generative AI models.
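The transparency benefit of RAG comes from two steps: retrieving relevant passages, then handing them to the model as numbered, attributable sources. The sketch below shows only those two steps and is not the NeMo RAG workflow; the document IDs and texts are hypothetical, and simple word-overlap scoring stands in for the embedding-based vector search a real pipeline would use.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    source: str  # identifier shown to the user as a citation
    text: str


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Doc], k: int = 2) -> list[Doc]:
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda d: len(q & tokenize(d.text)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d.text)]


def build_prompt(query: str, docs: list[Doc]) -> str:
    """Ground the model in numbered sources so its answer can cite them."""
    context = "\n".join(
        f"[{i}] {d.text} (source: {d.source})" for i, d in enumerate(docs, start=1)
    )
    return (
        "Answer using only the numbered sources below, citing them like [1].\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    corpus = [  # hypothetical knowledge base
        Doc("kb/returns", "Items can be returned within 30 days of purchase."),
        Doc("kb/shipping", "Standard shipping takes 5 business days."),
        Doc("kb/warranty", "The warranty covers manufacturing defects for one year."),
    ]
    question = "How many days do I have to return items?"
    print(build_prompt(question, retrieve(question, corpus)))
```

Because each passage carries its source ID into the prompt, a user can check the model’s answer against the cited document.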

NVIDIA is also part of the National Institute of Standards and Technology’s U.S. Artificial Intelligence Safety Institute Consortium, or AISIC, to help create tools and standards for responsible AI development and deployment. As a consortium member, NVIDIA will promote trustworthy AI by leveraging best practices for implementing AI model transparency.

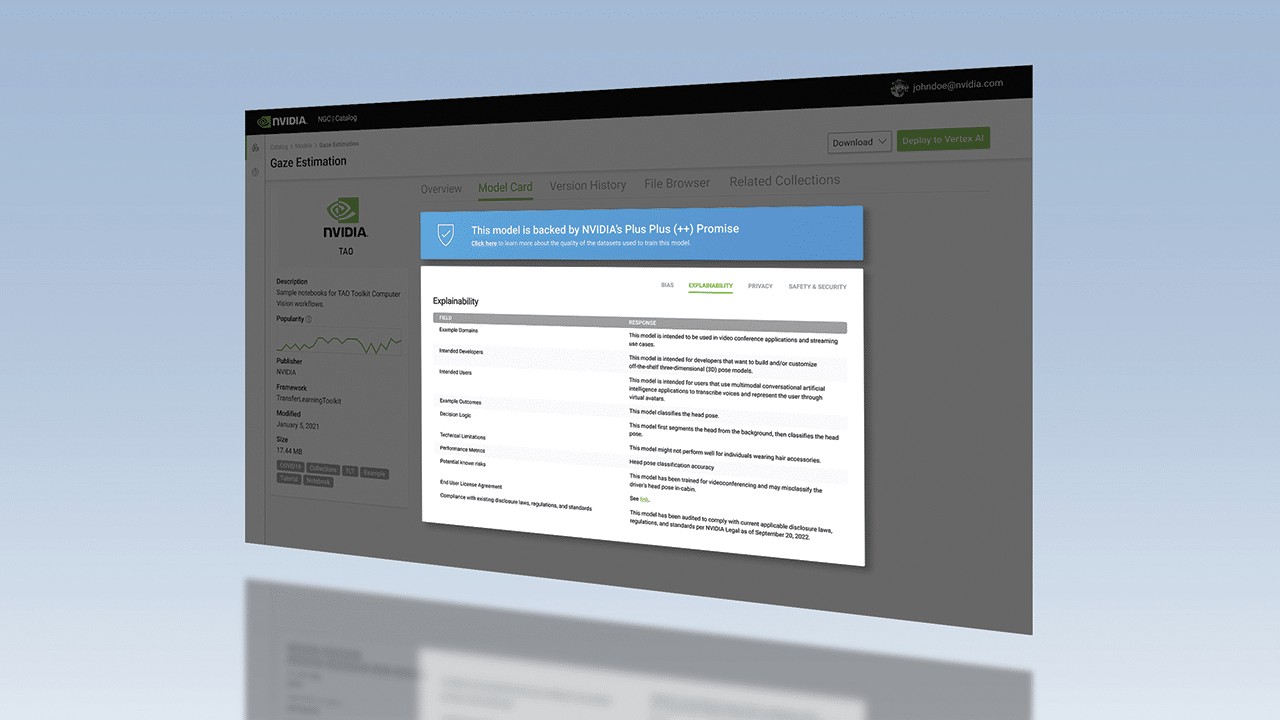

And on NVIDIA’s hub for accelerated software, NGC, model cards offer detailed information about how each AI model works and was built. NVIDIA’s Model Card ++ format describes the datasets, training methods and performance measures used, licensing information, as well as specific ethical considerations.
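A model card is essentially structured metadata rendered for humans. The sketch below captures the categories listed above in a small Python dataclass and renders them as Markdown. The field names and the rendering are assumptions for illustration only; this is not the actual Model Card ++ schema.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical fields mirroring the categories named in the text;
    not the real Model Card ++ format."""
    model_name: str
    datasets: list[str]
    training_method: str
    metrics: dict[str, float]
    license: str
    ethical_considerations: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        lines = [f"# {self.model_name}", "", "## Datasets"]
        lines += [f"- {d}" for d in self.datasets]
        lines += ["", "## Training method", self.training_method]
        lines += ["", "## Performance"]
        lines += [f"- {name}: {value}" for name, value in self.metrics.items()]
        lines += ["", "## License", self.license]
        lines += ["", "## Ethical considerations"]
        # Make a missing section visible instead of silently omitting it.
        notes = self.ethical_considerations or ["None documented"]
        lines += [f"- {note}" for note in notes]
        return "\n".join(lines)


if __name__ == "__main__":
    card = ModelCard(
        model_name="example-detector",  # hypothetical model
        datasets=["example-street-scenes"],
        training_method="Fine-tuned from a pretrained backbone",
        metrics={"mAP@0.5": 0.81},
        license="Example License 1.0",
        ethical_considerations=["Underrepresents night-time scenes"],
    )
    print(card.to_markdown())
```

Keeping the card as structured data rather than free text makes it easy to check that every required section is filled in before a model is published.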

Nondiscrimination: Minimizing Bias

AI models are trained by humans, often using data that is limited by size, scope and diversity. To ensure that all people and communities have the opportunity to benefit from this technology, it’s important to reduce unwanted bias in AI systems.

Beyond following government guidelines and antidiscrimination laws, trustworthy AI developers mitigate potential unwanted bias by looking for clues and patterns that suggest an algorithm is discriminatory or involves the inappropriate use of certain characteristics. Racial and gender bias in data are well known, but other considerations include cultural bias and bias introduced during data labeling. To reduce unwanted bias, developers might incorporate different variables into their models.
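One simple pattern developers look for is a gap in outcomes between groups. The sketch below computes demographic parity, one of several common fairness metrics (others, such as equalized odds, can disagree with it), from a model’s predictions. The loan-approval data and group labels are hypothetical, and the article does not say which metrics NVIDIA uses.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions the model gives each group."""
    positives, totals = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def parity_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        "parity_gap": highest - lowest,  # demographic parity difference
        "impact_ratio": lowest / highest if highest else 1.0,  # lowest rate / highest rate
    }


if __name__ == "__main__":
    # Hypothetical loan-approval predictions (1 = approved) for two groups.
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    report = parity_report(preds, groups)
    print(report)
    # "Four-fifths" rule of thumb from U.S. employment-selection guidelines.
    if report["impact_ratio"] < 0.8:
        print("Possible disparate impact: investigate features and labels.")
```

A flagged gap is a prompt for investigation, not proof of discrimination; the next step is usually to trace which features or labeling choices drive the difference.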

Synthetic datasets offer one solution to reduce unwanted bias in training data used to develop AI for autonomous vehicles and robotics. If data used to train self-driving cars underrepresents uncommon scenes such as extreme weather conditions or traffic accidents, synthetic data can help augment the diversity of these datasets to better represent the real world, helping improve AI accuracy.
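Before generating synthetic scenes, a team needs to know how many of each rare scene to request. The sketch below plans that from a label count, topping up every scene category to a minimum number of examples. The label distribution and the `min_count` threshold are hypothetical, other strategies (for example, targeting a fixed share of the dataset) are equally valid, and the generation step itself, such as rendering scenes with a tool like Omniverse Replicator, is out of scope here.

```python
from collections import Counter


def synthetic_quota(labels, min_count):
    """Synthetic samples to generate per scene category so that every category
    has at least `min_count` examples. Well-represented categories get 0."""
    counts = Counter(labels)
    return {scene: max(0, min_count - n) for scene, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical label distribution for a driving dataset.
    labels = ["clear"] * 900 + ["rain"] * 80 + ["snow"] * 15 + ["accident"] * 5
    plan = synthetic_quota(labels, min_count=100)
    print(plan)  # {'clear': 0, 'rain': 20, 'snow': 85, 'accident': 95}

    # Class shares after adding the planned synthetic samples.
    after = {scene: n + plan[scene] for scene, n in Counter(labels).items()}
    total = sum(after.values())
    for scene, n in after.items():
        print(f"{scene}: {n} samples ({n / total:.1%})")
```

Only the rare categories grow, so the augmented dataset shifts toward the uncommon conditions the text describes without discarding any real data.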

NVIDIA Omniverse Replicator, a framework built on the NVIDIA Omniverse platform for creating and operating 3D pipelines and virtual worlds, helps developers set up custom pipelines for synthetic data generation. And by integrating the NVIDIA TAO Toolkit for transfer learning with Innotescus, a web platform for curating unbiased datasets for computer vision, developers can better understand dataset patterns and biases to help address statistical imbalances.

Learn more about trustworthy AI on NVIDIA.com and the NVIDIA Blog. For more on tackling unwanted bias in AI, watch this talk from NVIDIA GTC and attend the trustworthy AI track at the upcoming conference, taking place March 18-21 in San Jose, Calif., and online.